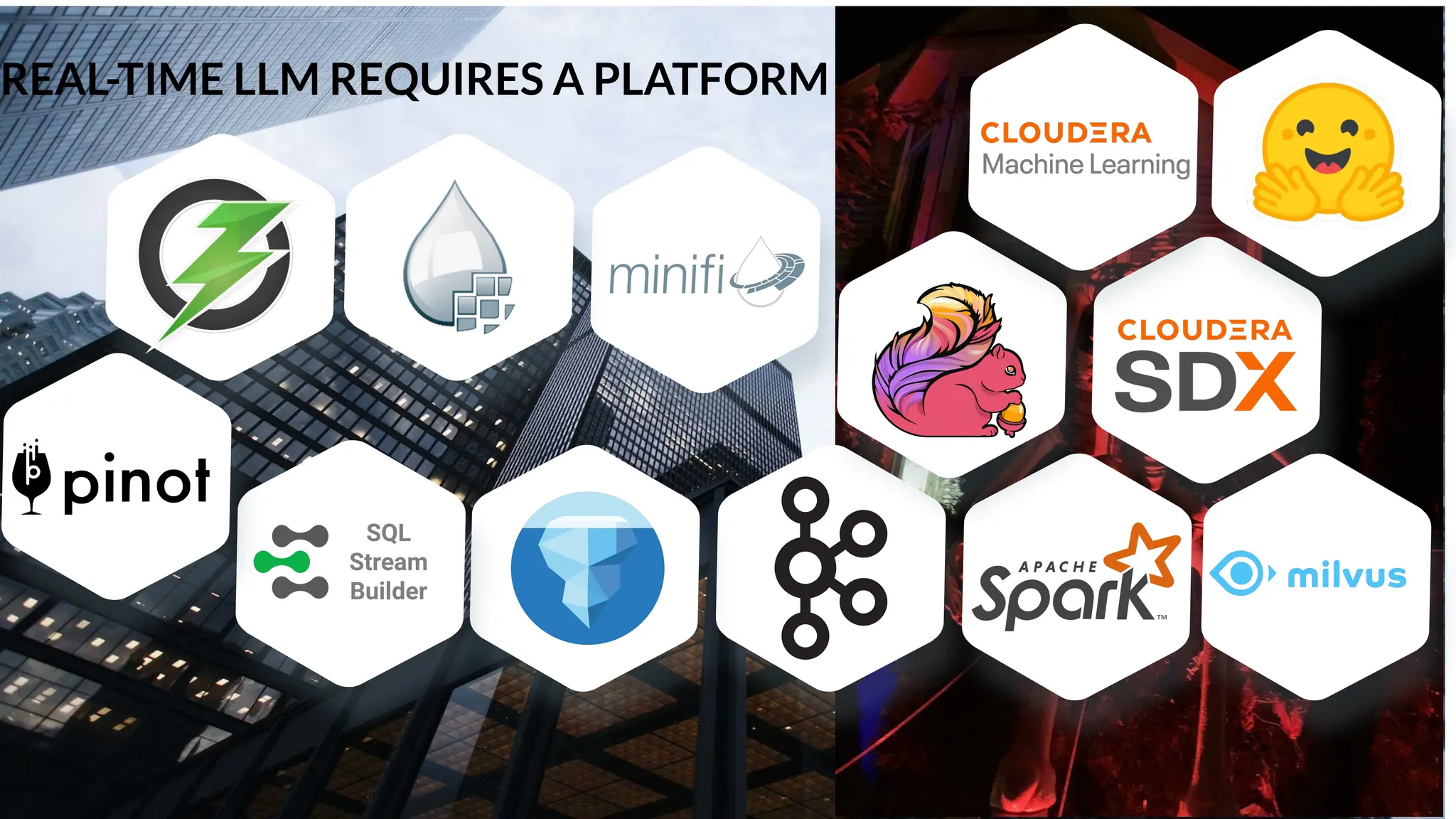

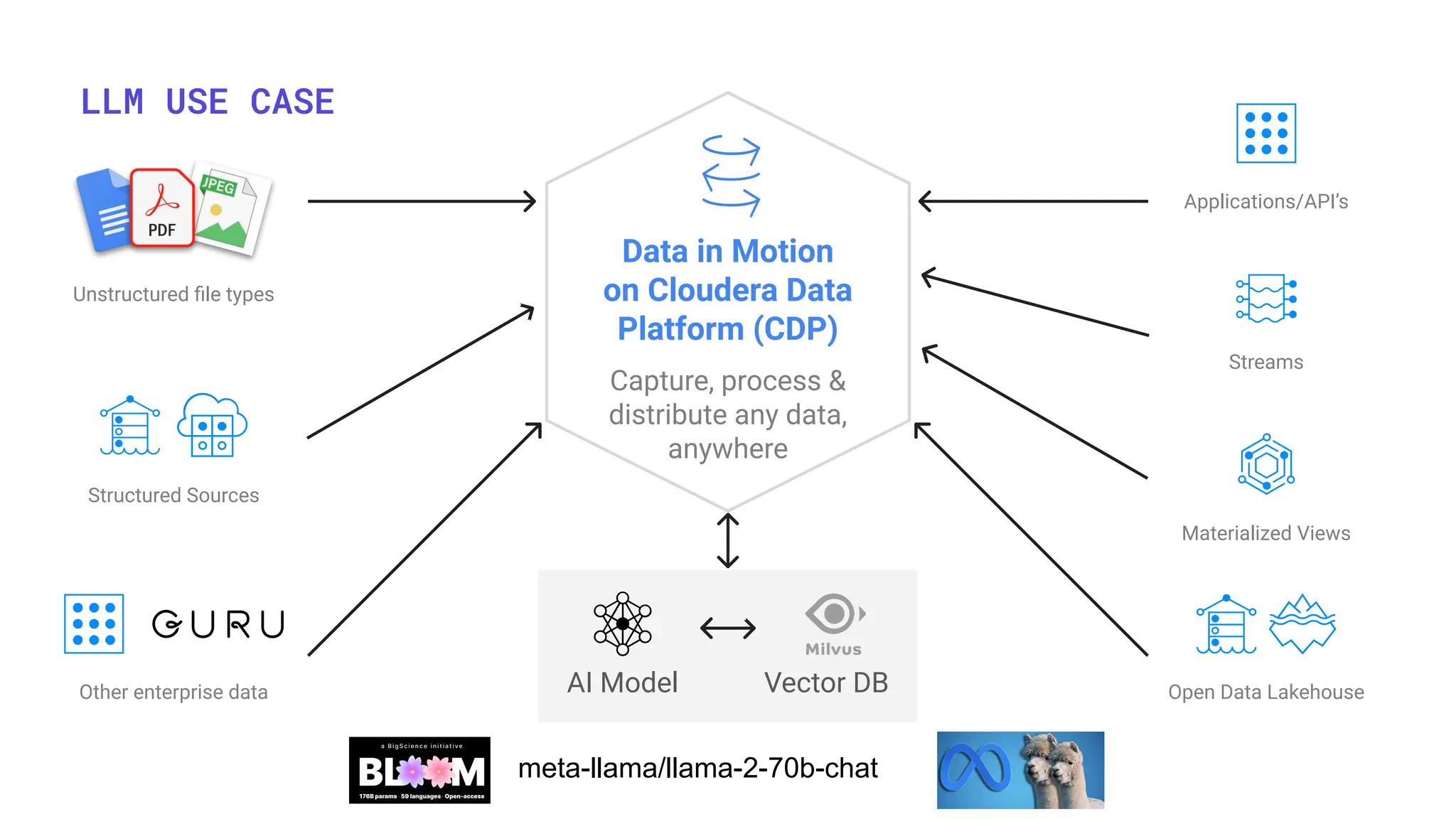

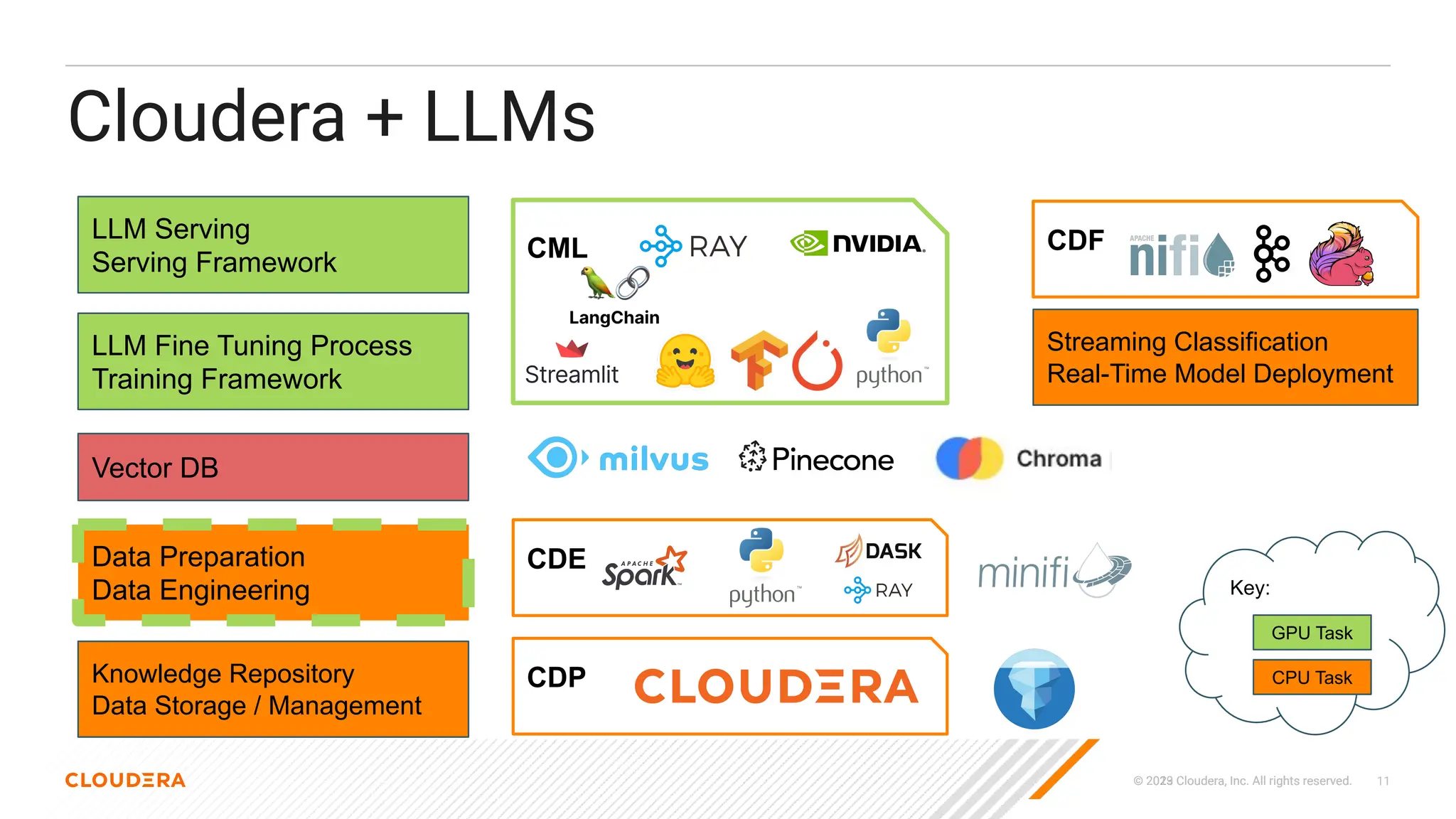

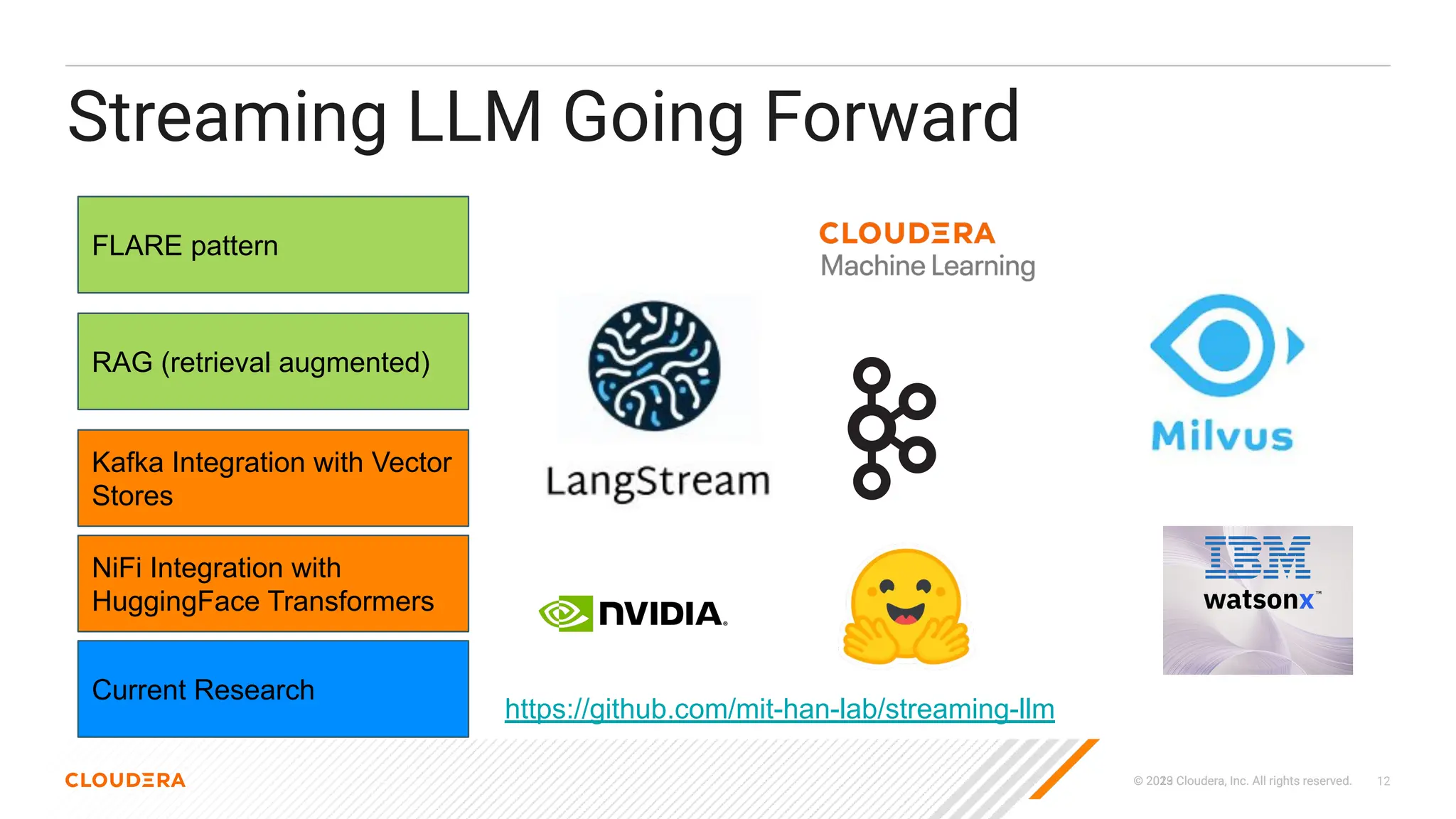

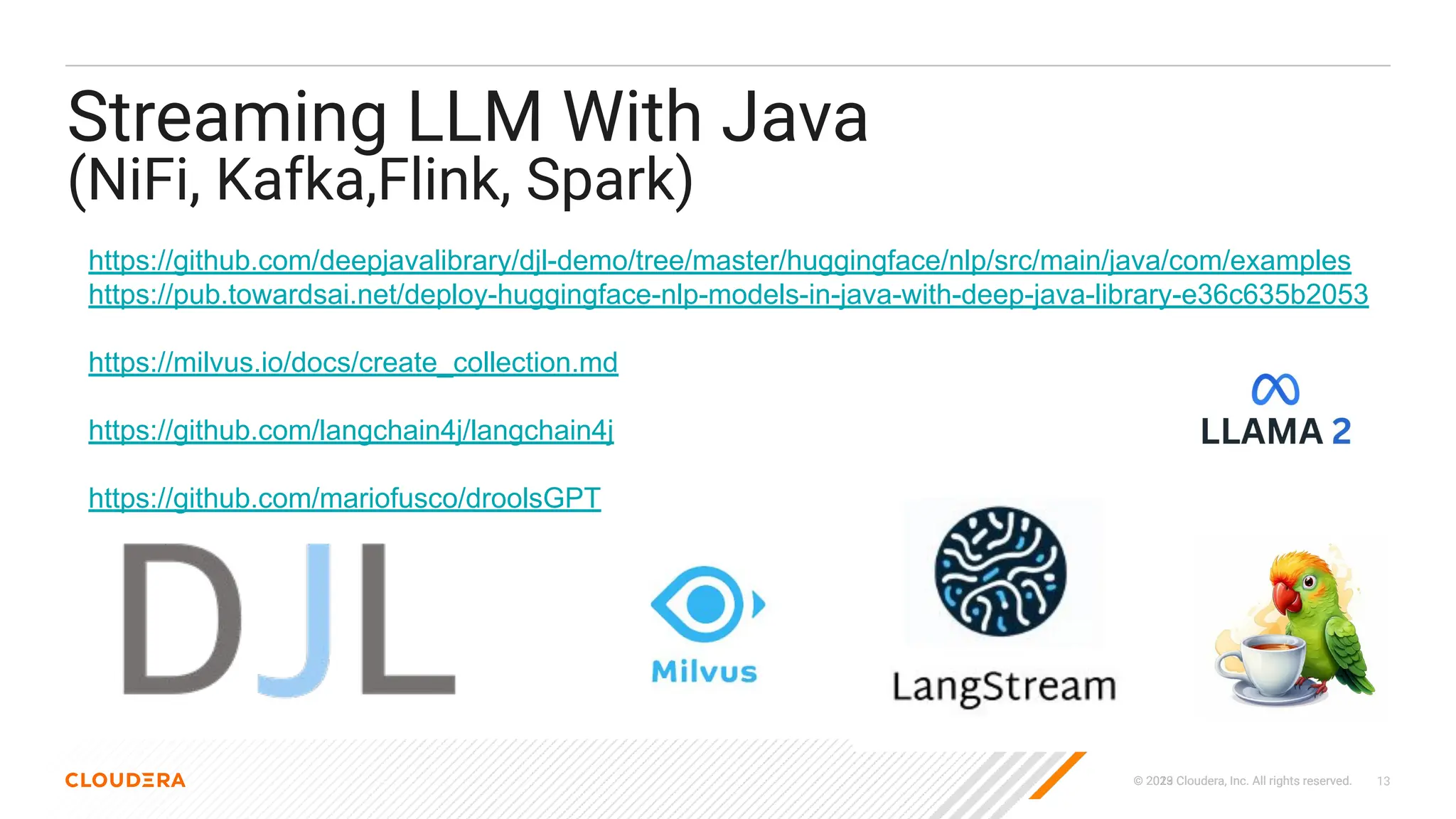

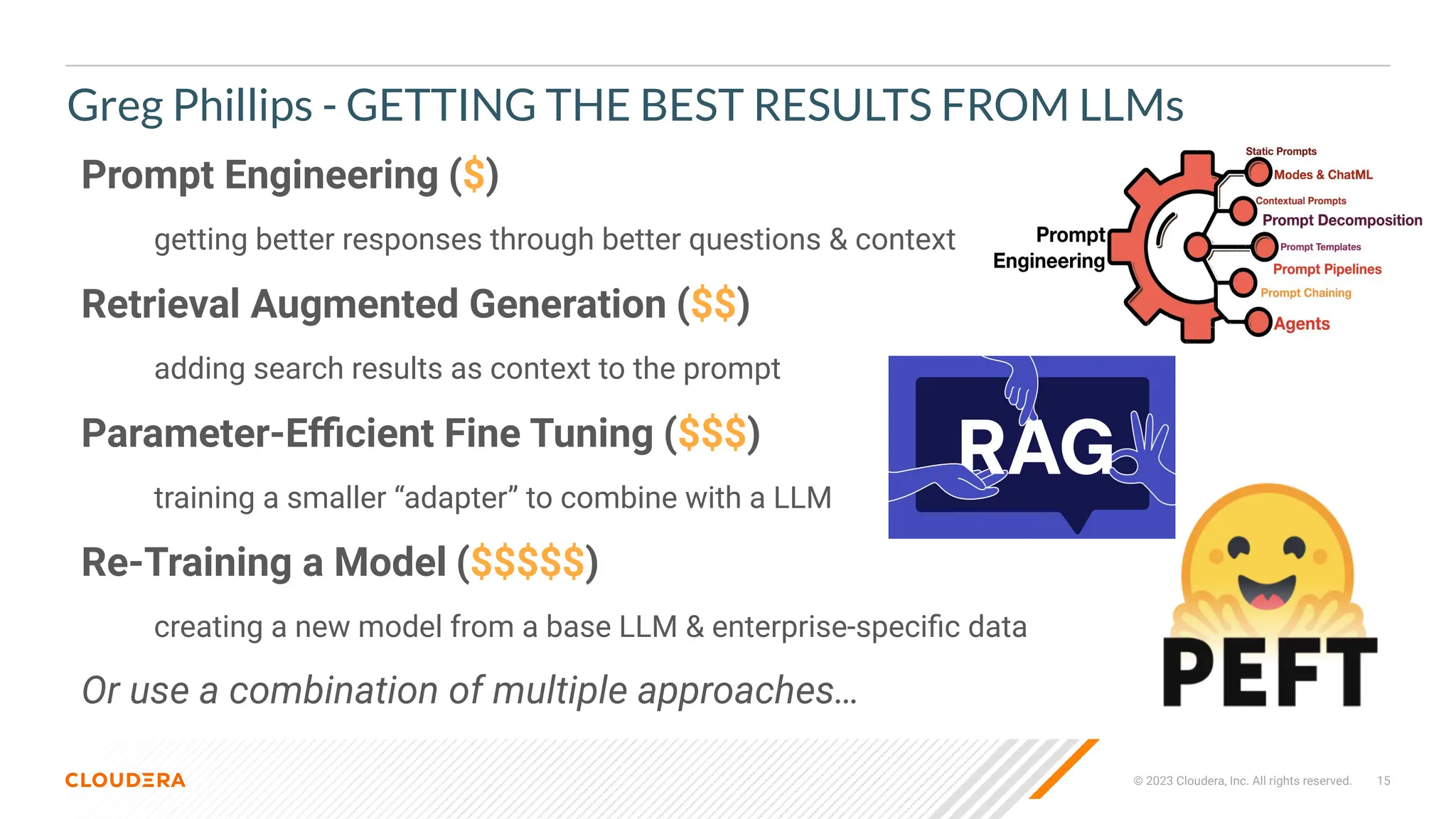

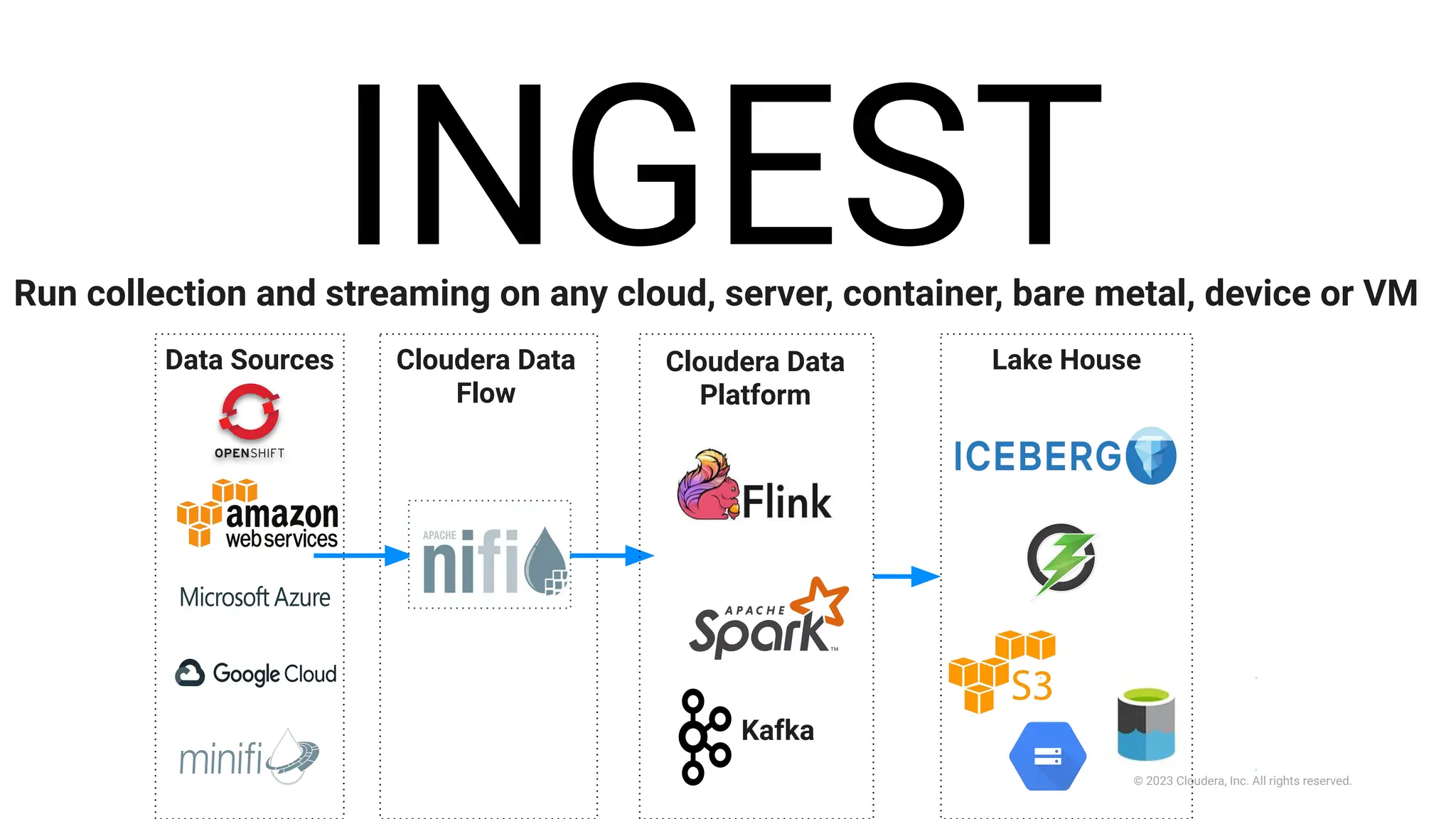

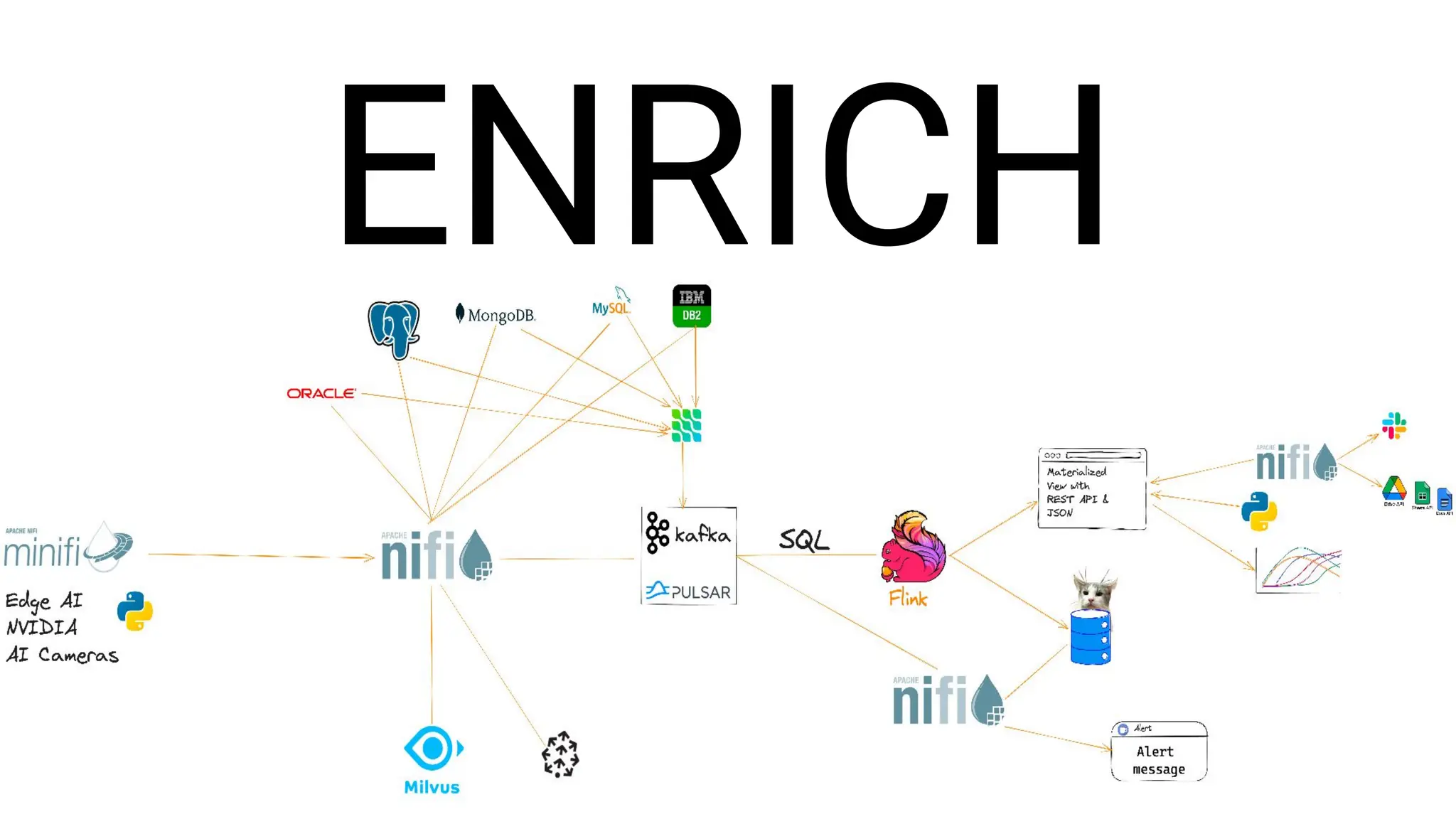

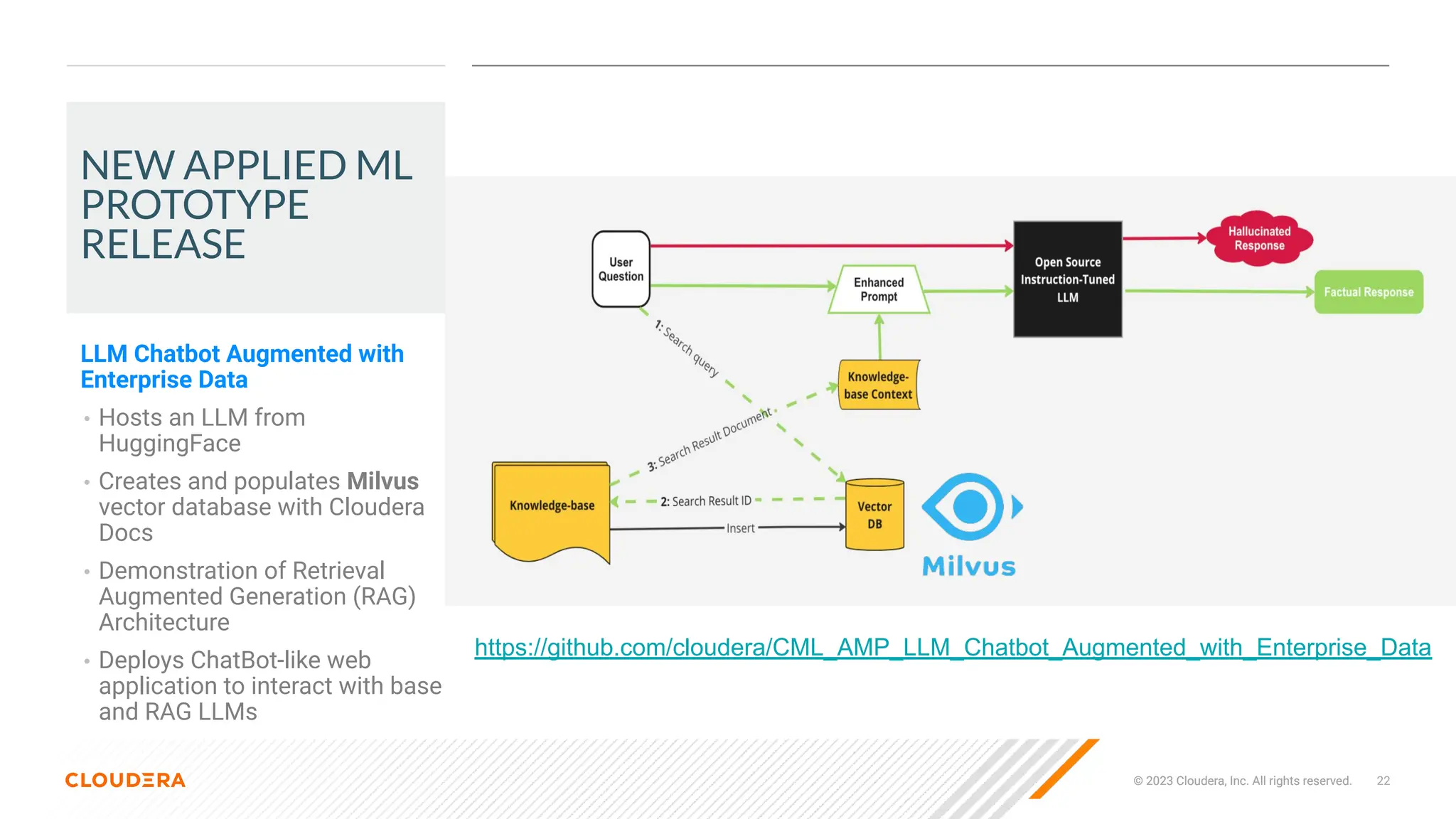

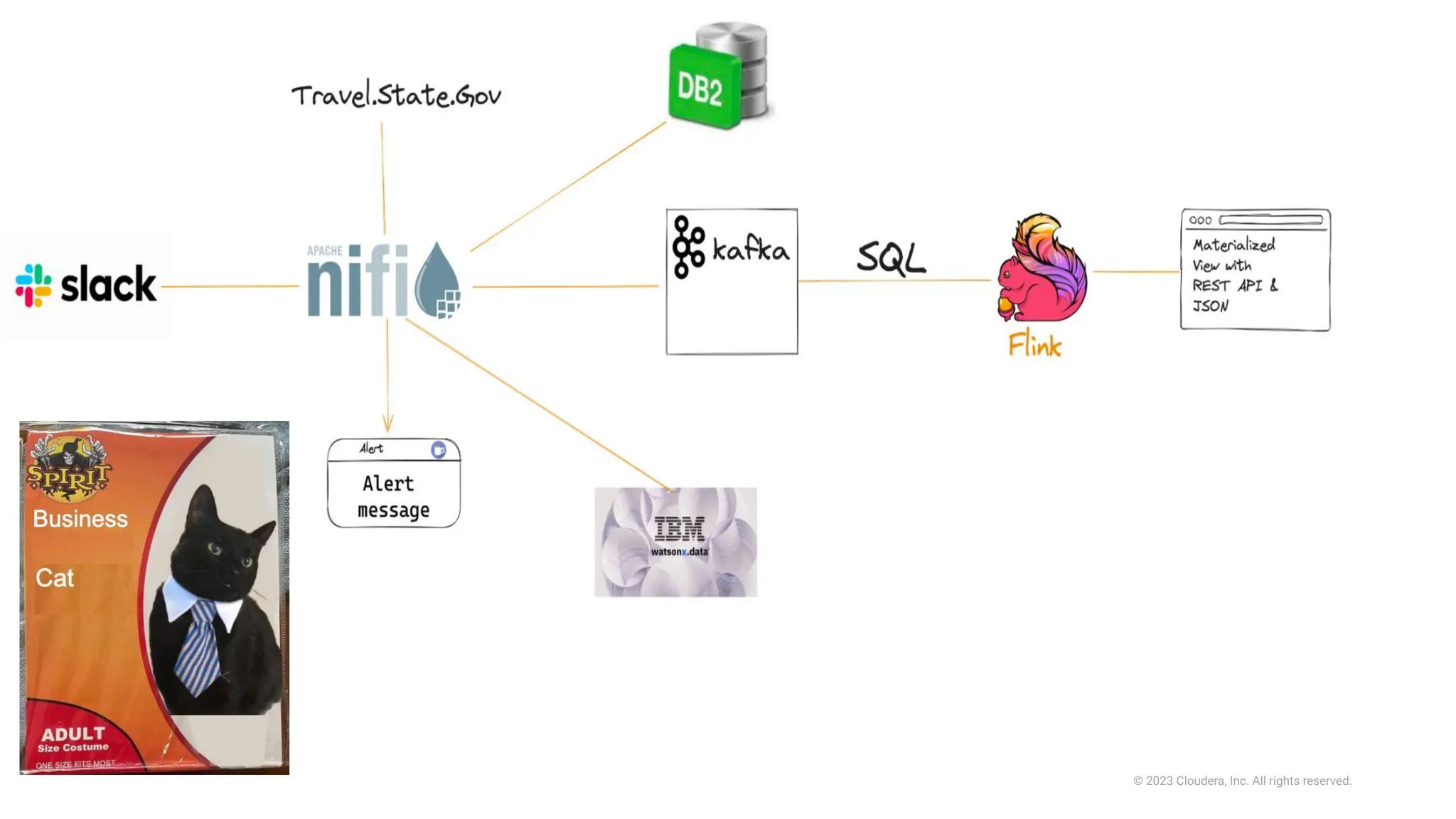

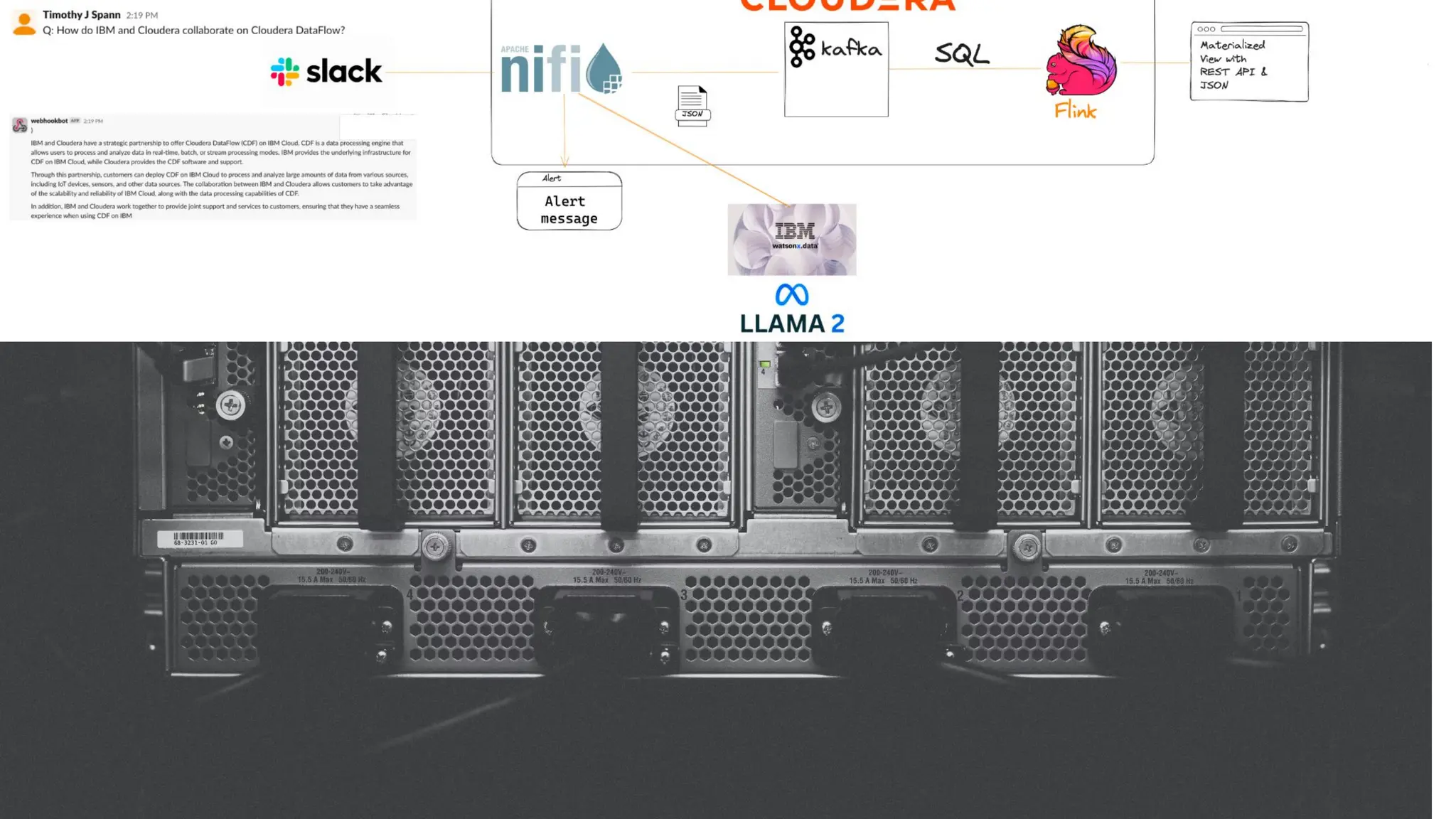

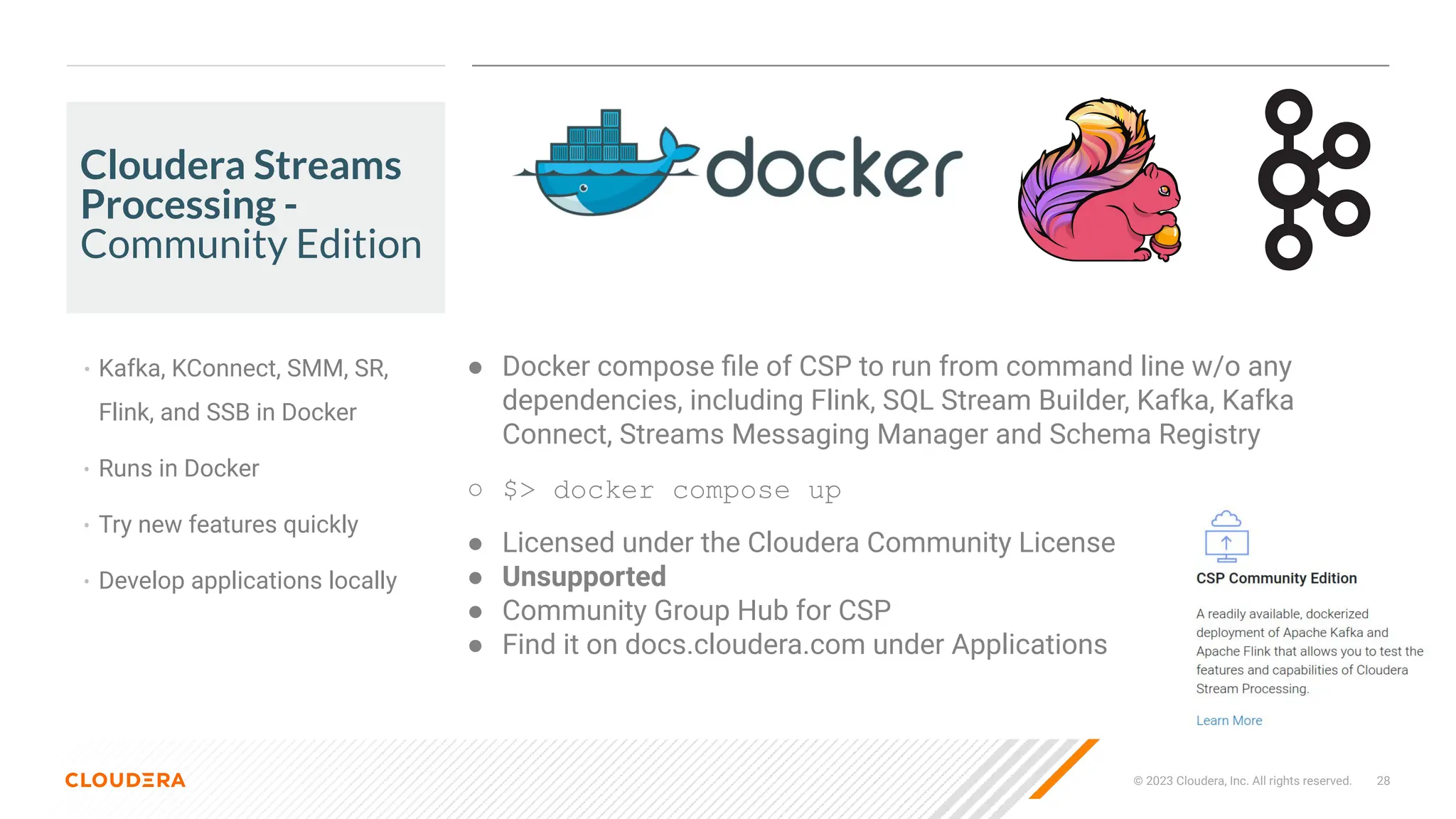

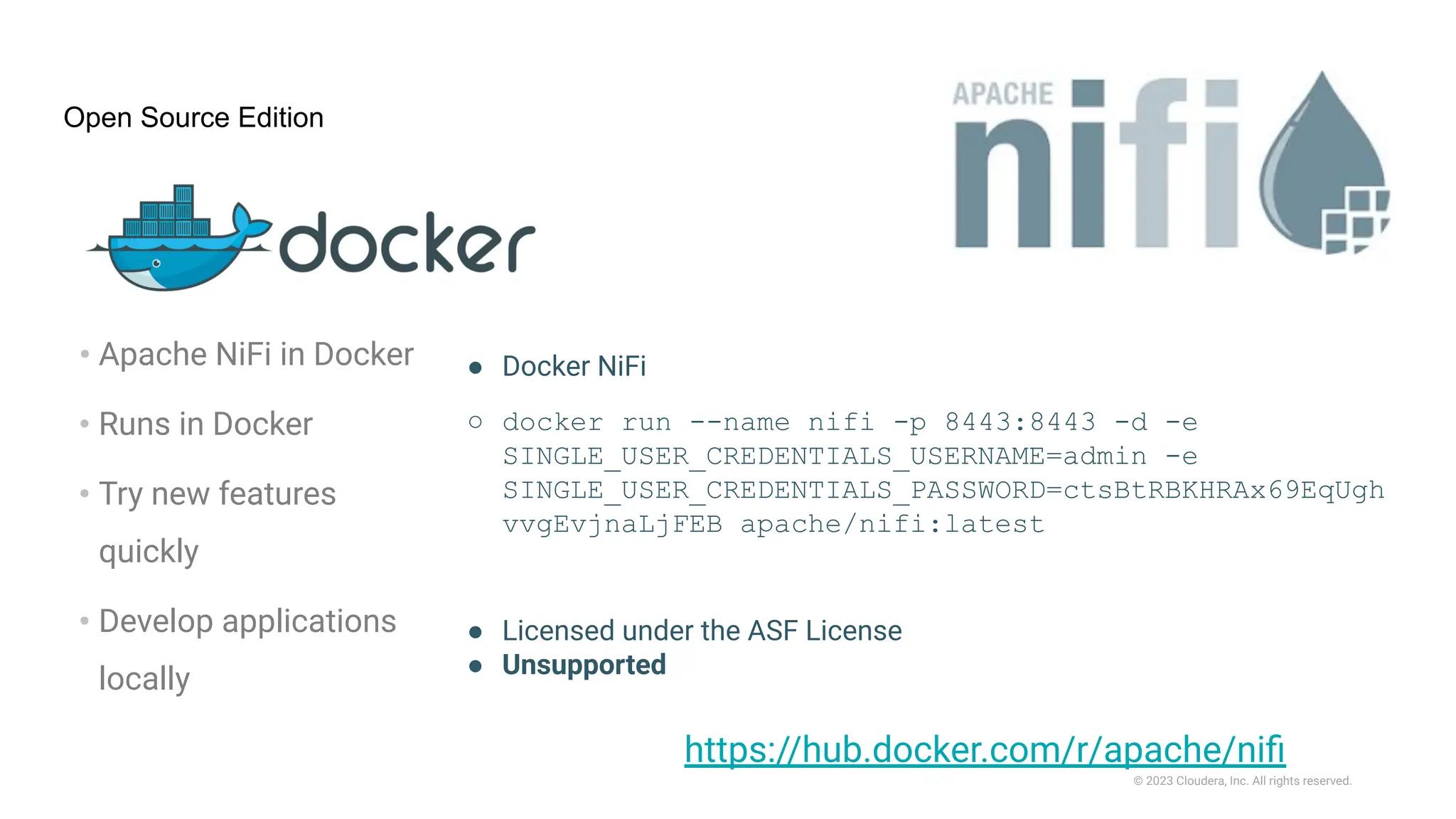

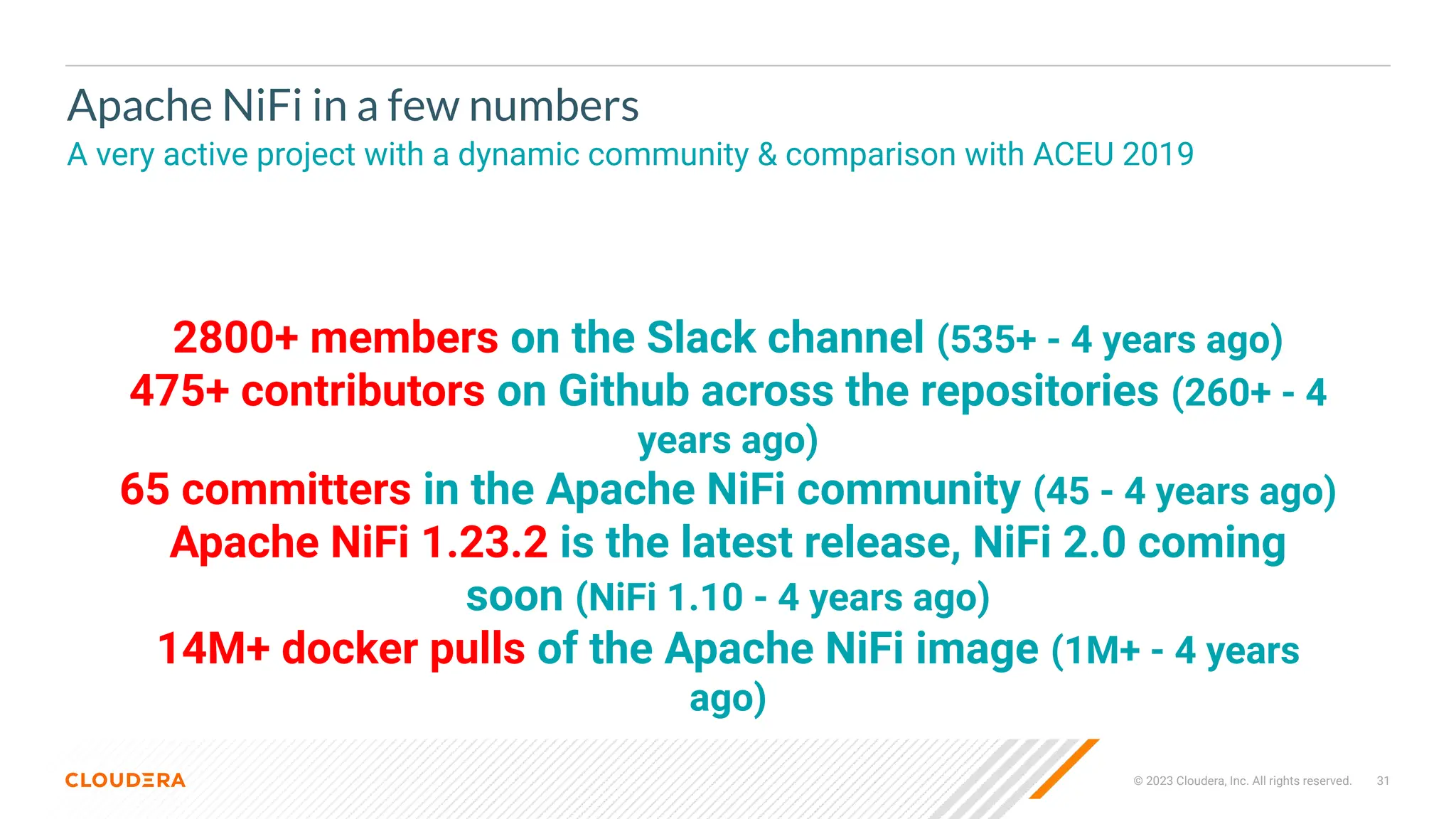

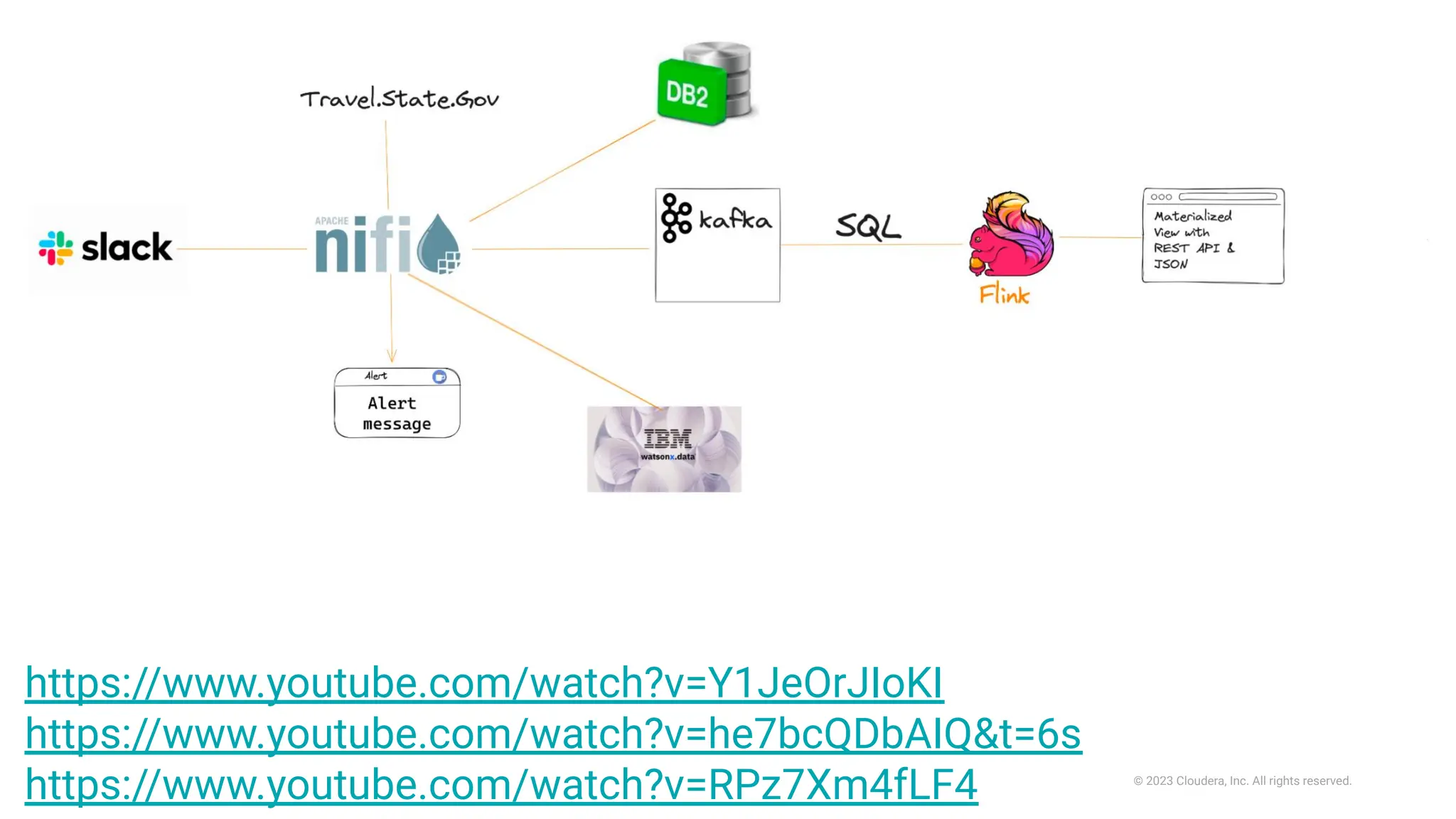

The document discusses incorporating generative AI into real-time streaming data pipelines built on Apache NiFi, Kafka, and Flink. It outlines strategies for deploying large language models (LLMs) in an enterprise context, focusing on data management and the retrieval-augmented generation (RAG) approach. It also links to resources for further exploration of LLM applications in streaming environments.