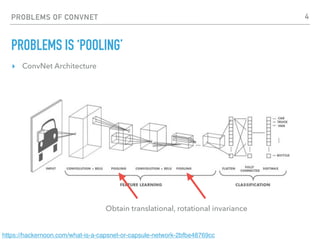

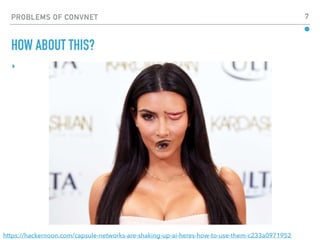

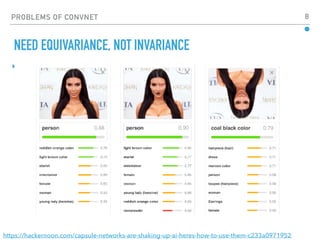

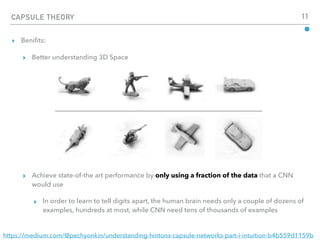

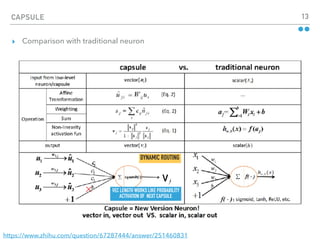

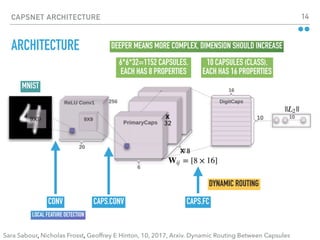

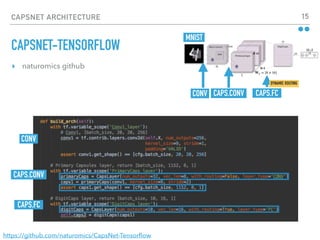

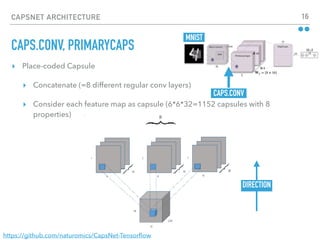

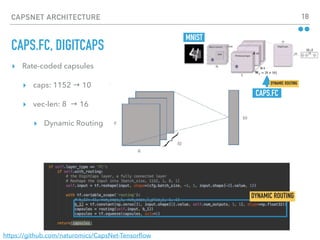

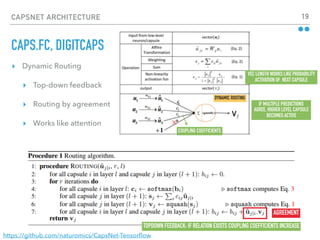

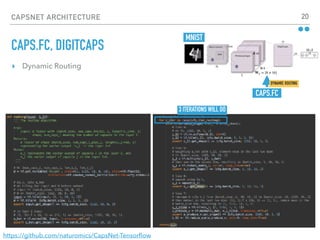

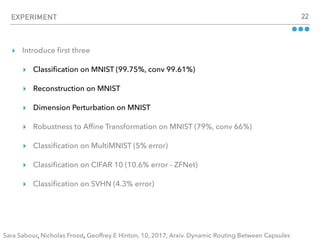

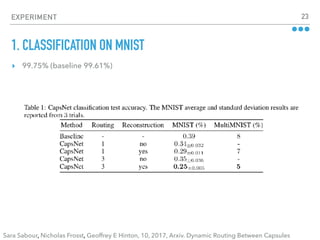

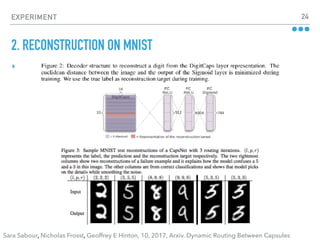

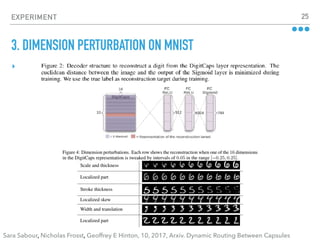

The document discusses dynamic routing between capsules and the neural network architecture built on it, the Capsule Network (CapsNet). CapsNet aims to address a limitation of traditional convolutional networks by explicitly representing the geometric relationships between features. The document highlights two claimed benefits: good performance with fewer training examples, and better handling of objects in 3D space (for example, under changes in viewpoint). Experiments on several datasets are reported to show classification accuracy and robustness exceeding those of conventional models.
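The summary names dynamic routing but does not spell out the procedure. As a rough illustration only, the sketch below follows the commonly described routing-by-agreement loop: each lower-level capsule sends a prediction vector to every higher-level capsule, and the coupling between them is strengthened when the prediction agrees with the higher capsule's output. The names `squash`, `softmax`, `route`, and `u_hat`, the three iterations, and the toy inputs are assumptions chosen for this example, not details taken from the document.

```python
import math

def squash(s, eps=1e-9):
    """Scale vector s so its length lies in [0, 1) while keeping its direction."""
    sq_norm = sum(x * x for x in s)
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm + eps)
    return [scale * x for x in s]

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route(u_hat, num_iters=3):
    """Routing-by-agreement sketch.

    u_hat[i][j] is the prediction vector that lower capsule i makes
    for higher capsule j (assumed to be already computed).
    Returns the higher capsule outputs v and the coupling coefficients c
    used in the final iteration.
    """
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits start at zero
    for _ in range(num_iters):
        # Each lower capsule distributes its output across higher capsules.
        c = [softmax(row) for row in b]
        v = []
        for j in range(n_out):
            # Weighted sum of predictions, then squash to get the capsule output.
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in))
                   for d in range(dim)]
            v.append(squash(s_j))
        # Agreement (dot product) between prediction and output raises the logit.
        for i in range(n_in):
            for j in range(n_out):
                b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v, c

# Toy input (hypothetical): both lower capsules agree about higher capsule 0
# and make opposite predictions about higher capsule 1.
u_hat = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, -1.0]],
]
v, c = route(u_hat)
lengths = [math.sqrt(sum(x * x for x in vec)) for vec in v]
```

In this toy case the couplings shift toward higher capsule 0, where the predictions agree, and the conflicting predictions for capsule 1 cancel out, so its output vector has near-zero length. The length of an output vector is what gets read as the presence of the entity that capsule represents.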