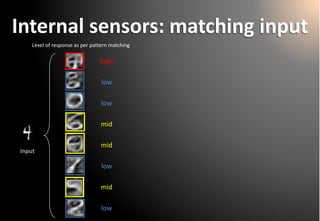

The document provides an overview of how artificial neural networks (ANNs) function, focusing on their ability to learn and recognize patterns, particularly in handwritten digit identification. It uses analogies from human reading and real-world examples like mail sorting to illustrate the underlying concepts and processes involved in ANNs. The content is structured around a presentation given at a Python meetup in Barcelona, emphasizing visual understanding without the use of complex mathematics or coding.

![Inside the ANN (Artificial Neural Network): a visual and intuitive journey* to understand how it stores knowledge and how it makes decisions

*No code, no math included

[Following the BCN Python Group’s request after my presentation on Machine Learning on September 25th, 2014]

Presented by Xavier Arrufat

BCN Python Meetup – November 2014

Barcelona, November 20th, 2014](https://image.slidesharecdn.com/20141120pythonbcninsideannsrev07-141206103704-conversion-gate02/85/Inside-the-ANN-A-visual-and-intuitive-journey-to-understand-how-artificial-neural-networks-store-knowledge-and-how-they-make-decisions-no-code-no-math-included-1-320.jpg)

![Questions from the last Meetup (Python BCN, September 25th, 2014)

1. How does an ANN work? Examples?

2. You must be an engineer… a mathematician would never say ANNs are easy [to understand]!](https://image.slidesharecdn.com/20141120pythonbcninsideannsrev07-141206103704-conversion-gate02/85/Inside-the-ANN-A-visual-and-intuitive-journey-to-understand-how-artificial-neural-networks-store-knowledge-and-how-they-make-decisions-no-code-no-math-included-2-320.jpg)

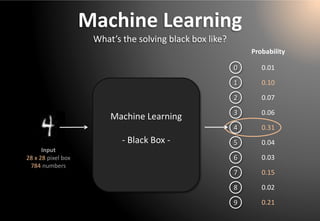

![MNIST dataset

http://yann.lecun.com/exdb/mnist/

Training set of 60,000 examples (roughly 6,000 per digit)

Test set of 10,000 examples (roughly 1,000 per digit)

Each character is a 28x28 pixel box => 784 numbers per character, each in the range [0, 255] (0: white, background; 255: black, foreground)

(N.B.: when using ANNs, normalize values to the range [0,1] or [-1,1] before continuing)](https://image.slidesharecdn.com/20141120pythonbcninsideannsrev07-141206103704-conversion-gate02/85/Inside-the-ANN-A-visual-and-intuitive-journey-to-understand-how-artificial-neural-networks-store-knowledge-and-how-they-make-decisions-no-code-no-math-included-15-320.jpg)
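The normalization mentioned in the MNIST slide can be sketched in a few lines of plain Python. This is only an illustration, not code from the talk: the function name `normalize_pixels` and its `low`/`high` parameters are hypothetical, and the input is assumed to be a flat list of 784 integers in the range 0 to 255.

```python
def normalize_pixels(pixels, low=0.0, high=1.0):
    """Linearly rescale raw pixel values from [0, 255] to [low, high].

    `pixels` is assumed to be a flat list of 784 integers (28 x 28),
    where 0 is background and 255 is foreground.
    """
    span = high - low
    return [low + span * (p / 255.0) for p in pixels]


# A made-up "image": mostly background with a few foreground pixels.
raw = [0] * 780 + [255, 128, 64, 0]

unit_range = normalize_pixels(raw)                 # values in [0, 1]
signed_range = normalize_pixels(raw, -1.0, 1.0)    # values in [-1, 1]
```

Either target range works for the steps that follow; the point is only that all inputs end up on a small, comparable scale before they are multiplied by a filter.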

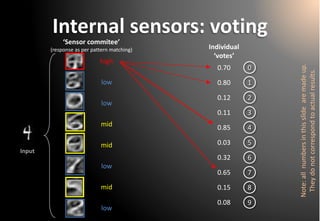

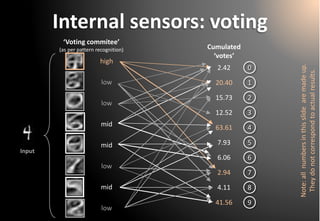

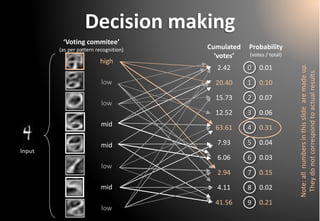

![All steps in one page

0) Get a convenient set of filters and voting rules (a.k.a. ‘ANN training’)

1) Multiply input * filter, pixel per pixel

2) Add up the resulting values (Σ). You get a single real number R

3) (Optional: non-linear activation function) Squash R within the range (0,1) [or (-1,1)]

4) Cast weighted votes on your filter’s favorite classes

5) Normalize votes: compute class probabilities

6) Make a decision based on the probabilities

Figure labels: Input, Filter; example values 0.0, 0.9, 0.8, 7.35

Note: all numbers in this slide are made up. They do not correspond to actual results.](https://image.slidesharecdn.com/20141120pythonbcninsideannsrev07-141206103704-conversion-gate02/85/Inside-the-ANN-A-visual-and-intuitive-journey-to-understand-how-artificial-neural-networks-store-knowledge-and-how-they-make-decisions-no-code-no-math-included-37-320.jpg)
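Although the talk itself contains no code, steps 1 to 6 of the slide can be sketched in plain Python. This is one possible reading of the slide, not the presenter's implementation. The function names are hypothetical, the sigmoid is assumed as the squashing function for step 3, and softmax is assumed as the way to "normalize votes" in step 5. The filters and voting weights (step 0, training) are simply made up here, as are all other numbers.

```python
import math


def sigmoid(r):
    """Squash a real number into the range (0, 1) (assumed activation)."""
    return 1.0 / (1.0 + math.exp(-r))


def classify(pixels, filters, votes):
    """Run steps 1-6 for one input.

    pixels  : list of normalized pixel values
    filters : list of filters, each a list of weights, one per pixel
    votes   : votes[f][c] is the weight of filter f's vote for class c
    """
    activations = []
    for filt in filters:
        # Steps 1 and 2: multiply pixel per pixel, then add up -> R
        r = sum(p * w for p, w in zip(pixels, filt))
        # Step 3: squash R into (0, 1)
        activations.append(sigmoid(r))

    # Step 4: each filter casts weighted votes on the classes
    n_classes = len(votes[0])
    scores = [
        sum(a * votes[f][c] for f, a in enumerate(activations))
        for c in range(n_classes)
    ]

    # Step 5: normalize votes into probabilities (softmax, an assumption)
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Step 6: decide on the most probable class
    decision = max(range(n_classes), key=probs.__getitem__)
    return probs, decision


# Toy example: 4 "pixels", 2 filters, 2 classes. All numbers are made up.
pixels = [0.0, 0.9, 0.9, 0.0]
filters = [[-1.0, 1.0, 1.0, -1.0],   # likes bright middle pixels
           [1.0, -1.0, -1.0, 1.0]]   # likes bright outer pixels
votes = [[2.0, -2.0],                # filter 0 votes for class 0
         [-2.0, 2.0]]                # filter 1 votes for class 1

probs, decision = classify(pixels, filters, votes)
print(decision)  # 0: the input matches filter 0, which favors class 0
```

In this toy case the input lines up with the first filter, so its squashed response is high, its votes dominate, and class 0 wins. For MNIST, `pixels` would hold 784 values and `votes` would have ten columns, one per digit.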