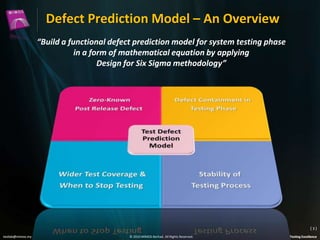

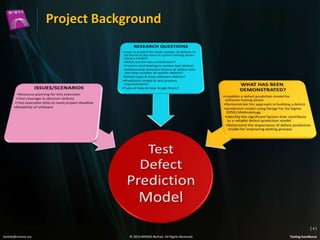

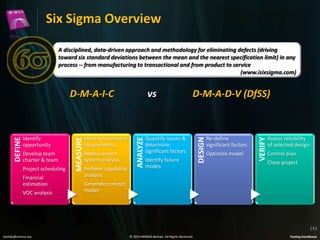

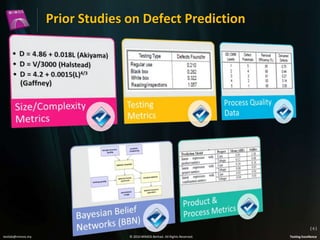

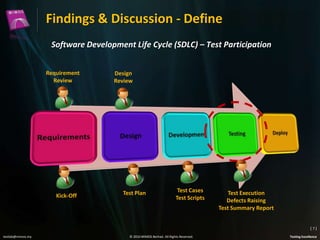

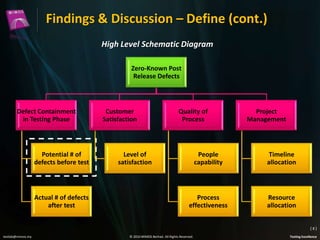

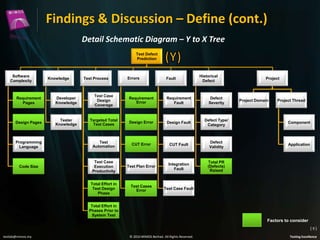

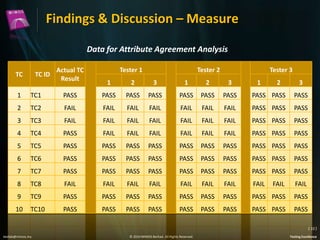

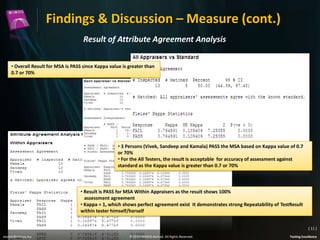

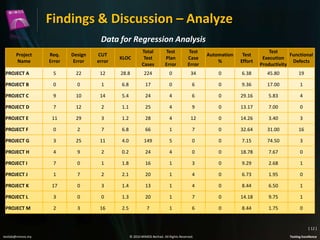

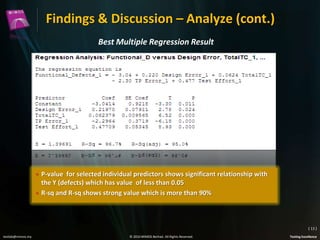

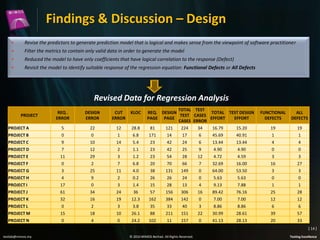

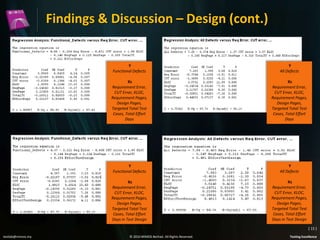

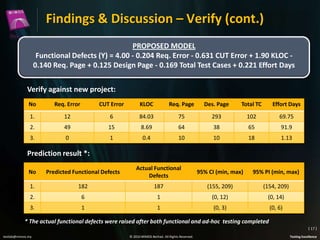

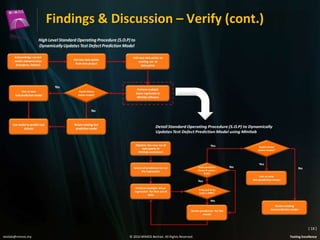

This document describes the development of a defect prediction model for system testing, built from product metrics using Six Sigma methodology. It covers the project background, prior studies, and the findings from each phase of the work: define, measure, analyze, design, and verify. It concludes that the model can effectively predict defects in the system testing phase and help improve the testing process, and it gives recommendations for strengthening the model's predictive capability.