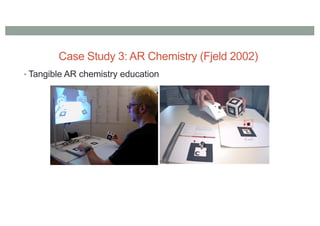

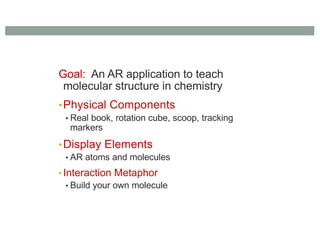

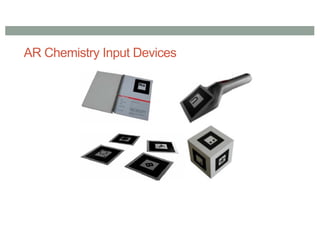

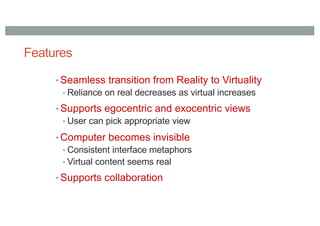

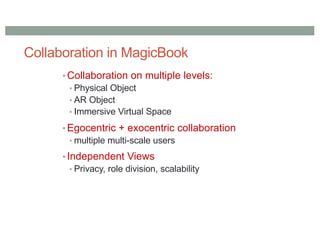

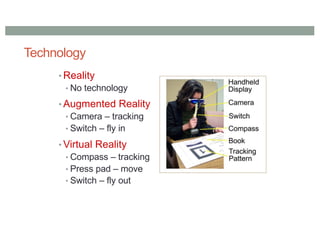

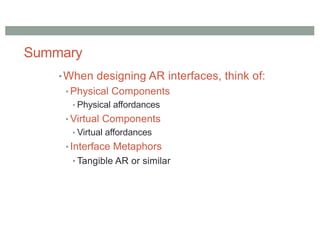

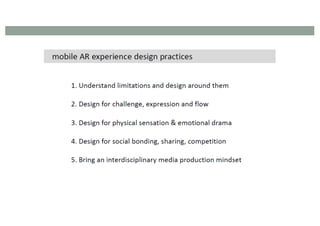

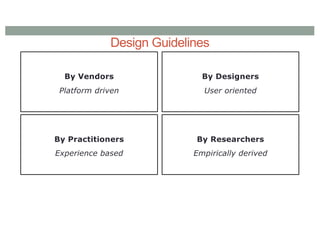

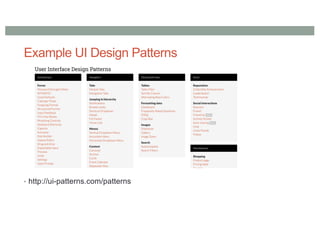

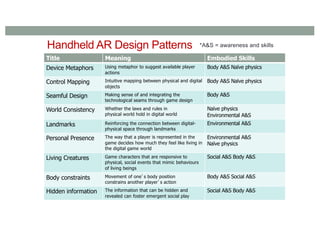

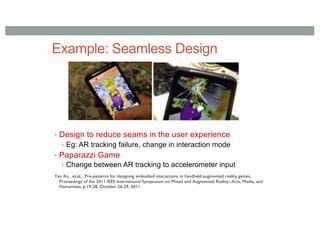

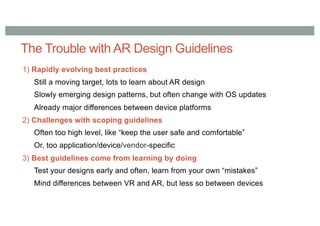

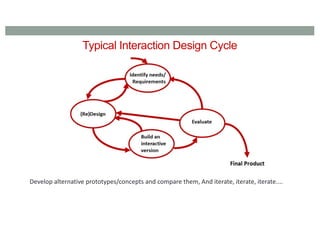

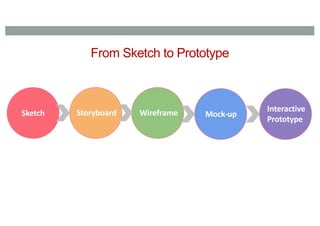

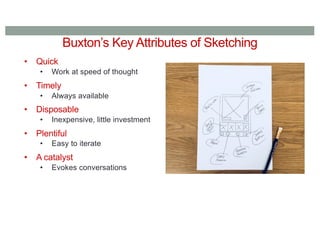

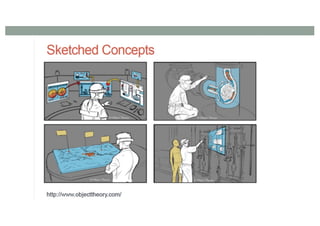

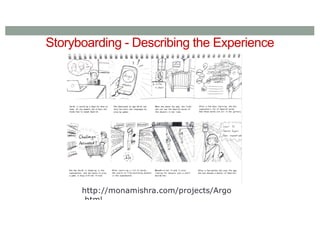

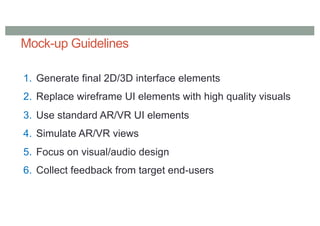

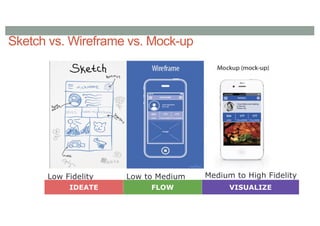

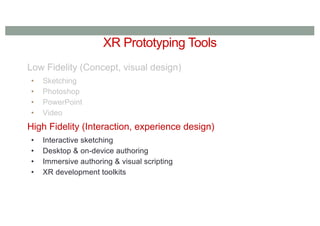

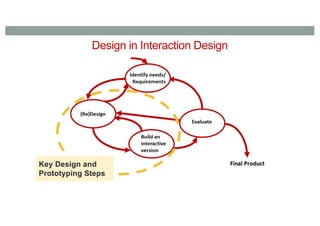

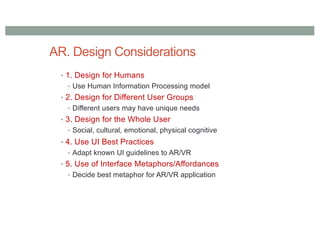

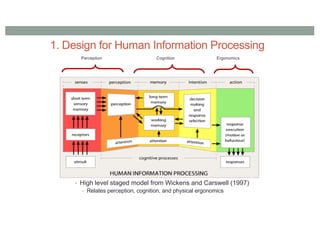

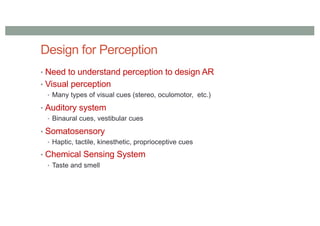

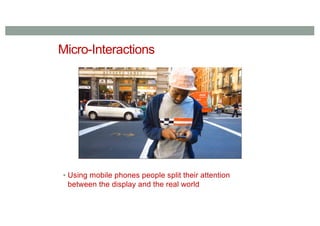

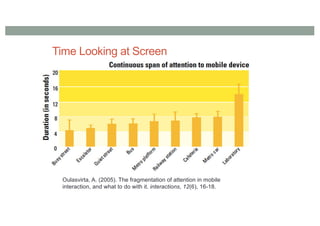

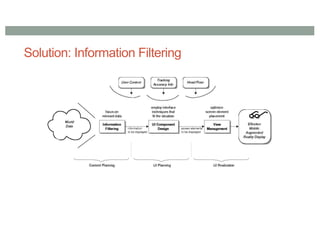

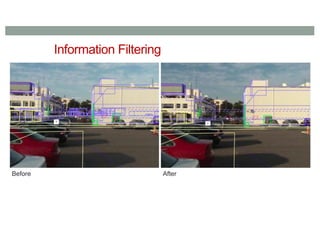

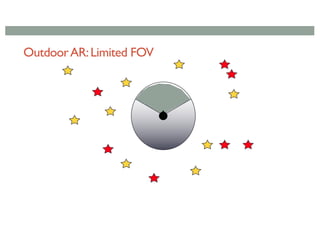

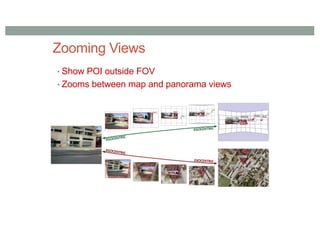

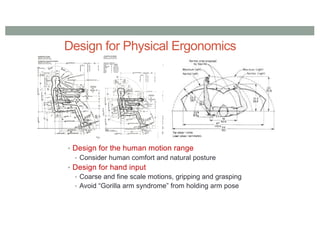

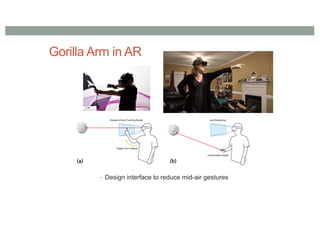

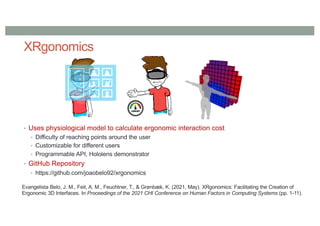

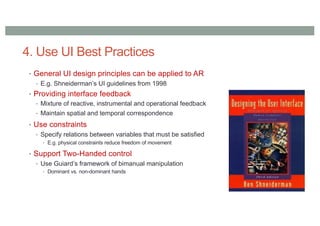

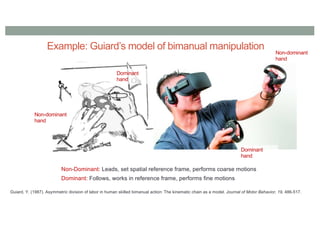

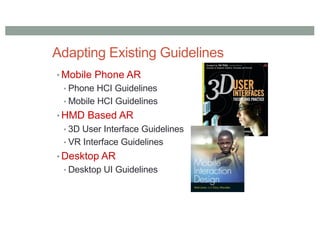

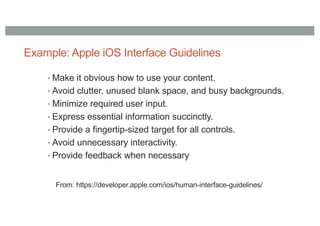

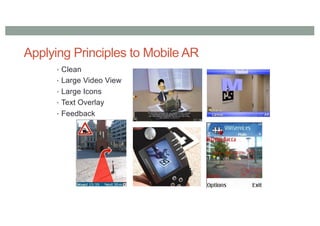

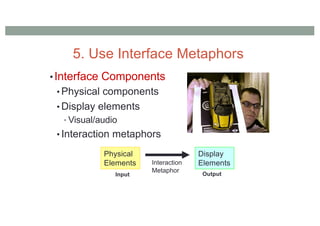

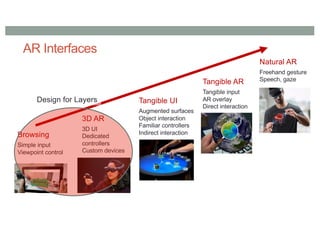

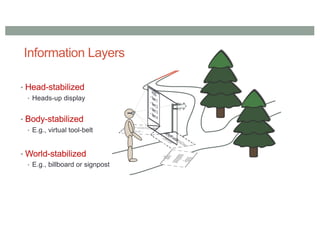

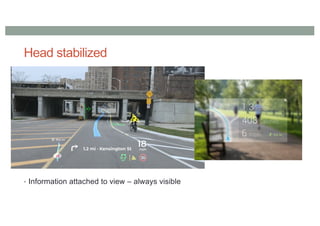

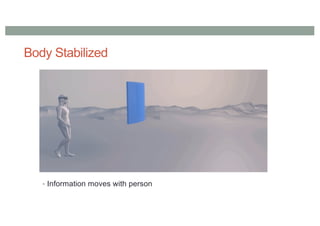

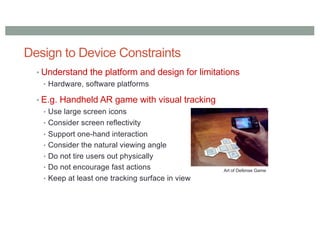

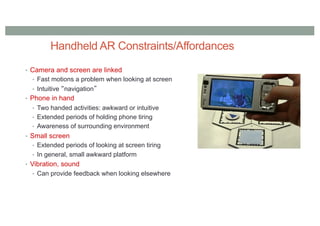

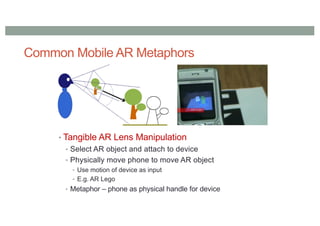

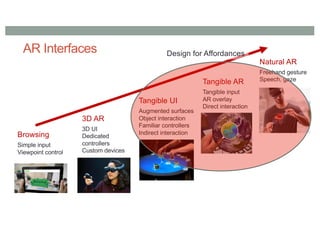

The document outlines the design and prototyping processes for augmented reality (AR) systems, emphasizing the interaction design cycle and a range of prototyping techniques. It discusses the importance of iterative design, tools for creating low- and high-fidelity prototypes, and human-centered design principles tailored to different user groups and contexts. Key considerations include cognitive load, user ergonomics, and the effective use of metaphors and feedback in AR interfaces.

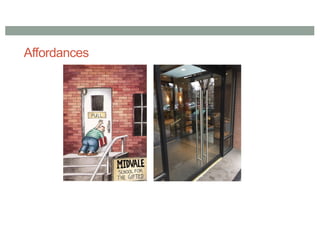

![Affordances: “… the perceived and actual properties of the thing, primarily those fundamental properties that determine just how the thing could possibly be used. [...] Affordances provide strong clues to the operations of things.” (Norman, The Psychology of Everyday Things 1988, p.9)](https://image.slidesharecdn.com/comp4010-lecture6-2022-221222213905-1be05878/85/2022-COMP4010-Lecture-6-Designing-AR-Systems-108-320.jpg)