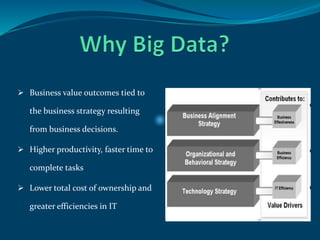

The document presents an overview of the Big Data concept discussed at the .NET Tech Summit 2015, highlighting the challenges and requirements for handling large volumes of diverse data. It covers the importance of NoSQL databases and tools like Hadoop, which enable scalability and efficiency in data management, particularly for unstructured data. The discussion emphasizes the business value achieved through enhanced productivity and cost efficiency in IT operations.

![Built for cloud](https://image.slidesharecdn.com/8-150608081558-lva1-app6891/85/Big-Data-12-320.jpg)

Built for cloud:

- Scale-out architecture
- Agility afforded by cloud computing
- Sharding automatically distributes data evenly across multi-node clusters
- Automatically manages redundant servers (replica sets)
- Horizontal scalability

Document oriented, for example:

`{ author: "delwar", date: new Date(), Topics: "Big Data Concept", tag: ["tools", "database"] }`
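The slide says sharding spreads data evenly across a multi-node cluster. The minimal sketch below shows why hashing a shard key produces an even spread. The shard count, the `author` shard key, and the helper names `shard_for` and `distribute` are assumptions made for illustration. Real systems such as MongoDB's hashed sharding assign ranges of hash values to chunks and rebalance those chunks; they do not take a simple modulus.

```python
import hashlib

# Hypothetical shard count, chosen only for illustration.
NUM_SHARDS = 3


def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a shard-key value to a shard index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Read the first 8 bytes as an unsigned integer, then take the remainder.
    return int.from_bytes(digest[:8], "big") % num_shards


def distribute(docs, shard_key: str, num_shards: int = NUM_SHARDS):
    """Group documents into per-shard lists by hashing their shard key."""
    shards = [[] for _ in range(num_shards)]
    for doc in docs:
        shards[shard_for(str(doc[shard_key]), num_shards)].append(doc)
    return shards


if __name__ == "__main__":
    # Hypothetical documents shaped like the slide's example.
    docs = [{"author": f"user{i}", "Topics": "Big Data Concept"} for i in range(9000)]
    shards = distribute(docs, shard_key="author")
    print([len(s) for s in shards])  # roughly 3000 documents per shard
```

The same key always lands on the same shard, so a lookup by shard key touches only one node. A plain modulus has a drawback: changing `NUM_SHARDS` remaps most keys. Chunk-based schemes avoid that by moving only some ranges when nodes are added.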
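Replica sets keep redundant copies of the data so that a cluster can survive the loss of a server. The toy model below shows the basic idea. One primary accepts writes, the secondaries hold copies, and a healthy secondary takes over when the primary goes down. The class names, the node names, and the "promote the first healthy secondary" rule are hypothetical simplifications. MongoDB replica sets, for example, replicate asynchronously through an operation log and elect a new primary by majority vote.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    healthy: bool = True
    data: dict = field(default_factory=dict)


class ReplicaSet:
    """Toy model: the primary takes writes and copies them to secondaries.

    Simplification: replication here is synchronous, and failover promotes
    the first healthy secondary instead of holding an election.
    """

    def __init__(self, names):
        self.nodes = [Node(n) for n in names]
        self.primary = self.nodes[0]

    def failover(self):
        for node in self.nodes:
            if node is not self.primary and node.healthy:
                self.primary = node
                return
        raise RuntimeError("no healthy node available to become primary")

    def write(self, key, value):
        if not self.primary.healthy:
            self.failover()
        self.primary.data[key] = value
        # Copy the write to every healthy secondary.
        for node in self.nodes:
            if node is not self.primary and node.healthy:
                node.data[key] = value

    def read(self, key):
        if not self.primary.healthy:
            self.failover()
        return self.primary.data.get(key)


if __name__ == "__main__":
    rs = ReplicaSet(["node-a", "node-b", "node-c"])
    rs.write("topic", "Big Data Concept")
    rs.nodes[0].healthy = False  # simulate the primary going down
    print(rs.read("topic"), rs.primary.name)  # → Big Data Concept node-b
```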
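The document-oriented model stores each record as a self-describing document, like the slide's example. The minimal sketch below uses plain Python dicts rather than a database driver; `collection` and `find` are hypothetical names. It demonstrates two properties. First, documents in one collection can carry different fields. Second, a query for a single value matches an array field that contains that value, which is how MongoDB-style queries treat arrays such as `tag`.

```python
from datetime import datetime

# A plain-list stand-in for a document collection; not a database driver.
collection = [
    {
        "author": "delwar",
        "date": datetime.now(),
        "Topics": "Big Data Concept",
        "tag": ["tools", "database"],
    },
    # Documents in the same collection need not share a schema.
    {"author": "guest", "Topics": "NoSQL", "tag": ["database"], "likes": 3},
]


def find(coll, **criteria):
    """Return the documents that match every field=value criterion.

    A scalar criterion matches an array field when the array contains it.
    """
    def matches(doc, name, wanted):
        if name not in doc:
            return False
        value = doc[name]
        if isinstance(value, list):
            return wanted in value
        return value == wanted

    return [d for d in coll if all(matches(d, n, w) for n, w in criteria.items())]


print([d["author"] for d in find(collection, tag="database")])  # → ['delwar', 'guest']
print([d["author"] for d in find(collection, tag="tools")])     # → ['delwar']
```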