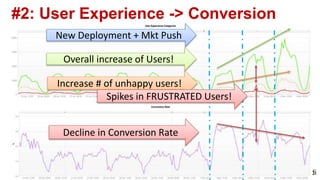

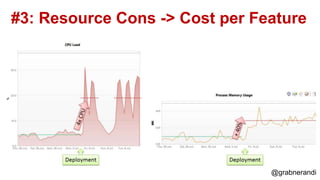

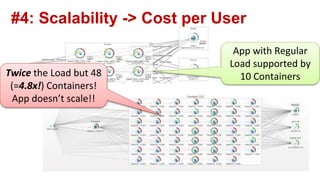

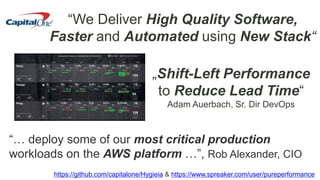

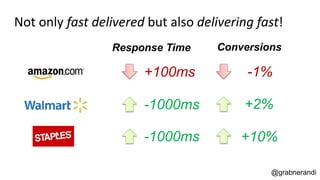

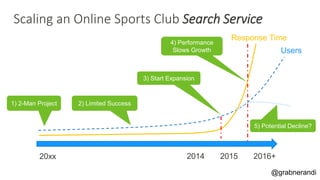

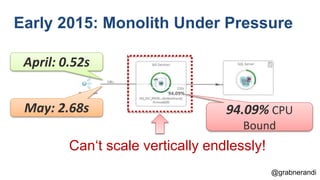

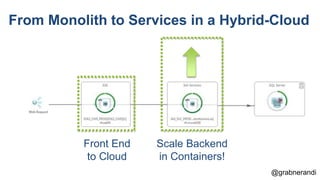

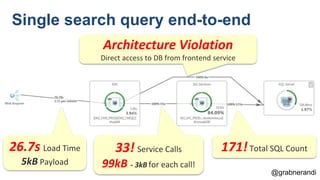

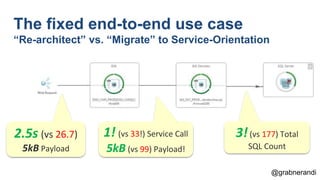

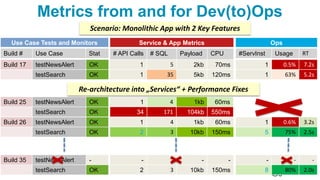

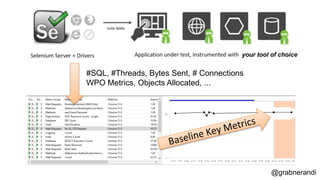

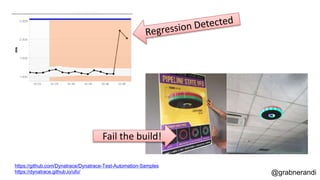

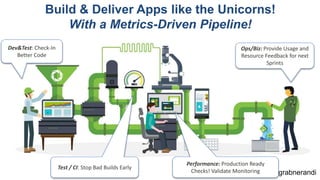

The document discusses metrics-driven DevOps and shows how availability, user experience, resource consumption, scalability, and performance affect overall success. It argues for moving from monolithic applications to a service-oriented architecture to improve performance and enable faster deployments. Its key insights are that success must be measured, and that processes should not be automated without first assessing whether the automation is effective.
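The idea of measuring a change instead of trusting it blindly can be sketched in a few lines. The Python below compares two samples of request records, one taken before a deployment and one after. It checks availability (the fraction of successful requests) and 95th-percentile latency. It is an illustrative sketch, not a method taken from the original deck. The names `Request`, `availability`, `percentile`, and `deployment_regressed` are hypothetical, as are the default thresholds (a 0.1 percentage-point availability drop and a 10% p95 increase).

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical record of one request: outcome and response time."""
    ok: bool
    latency_ms: float


def availability(requests):
    """Fraction of requests that succeeded, from 0.0 to 1.0."""
    if not requests:
        raise ValueError("no requests to measure")
    return sum(r.ok for r in requests) / len(requests)


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no values to measure")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[max(rank - 1, 0)]


def deployment_regressed(before, after, max_avail_drop=0.001, max_p95_ratio=1.10):
    """True if availability fell, or p95 latency rose, beyond the (assumed) thresholds."""
    p95_before = percentile([r.latency_ms for r in before], 95)
    p95_after = percentile([r.latency_ms for r in after], 95)
    return (availability(after) < availability(before) - max_avail_drop
            or p95_after > p95_before * max_p95_ratio)


# Usage with made-up samples: 20 requests before, 20 after (one failure, slower responses).
before = [Request(True, float(ms)) for ms in range(100, 120)]
after = [Request(i != 0, float(ms)) for i, ms in enumerate(range(150, 170))]
print(availability(before), availability(after), deployment_regressed(before, after))
```

In a real pipeline the samples would come from monitoring data, and a check like this would gate or roll back an automated deployment. That keeps the automation tied to the metrics it is supposed to improve.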