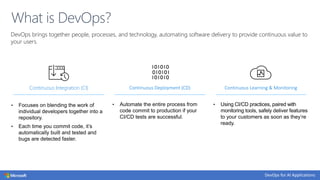

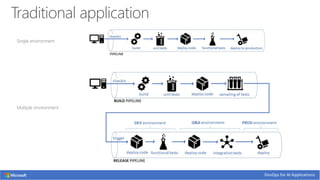

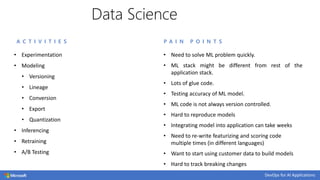

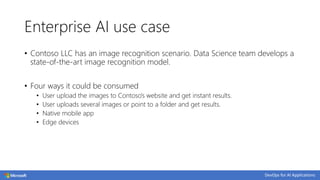

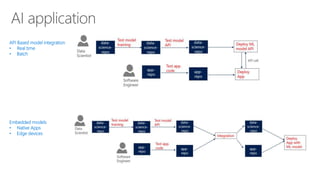

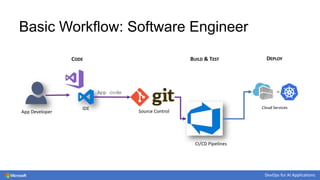

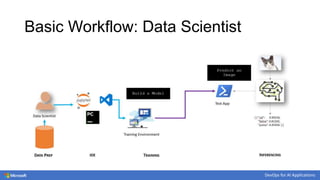

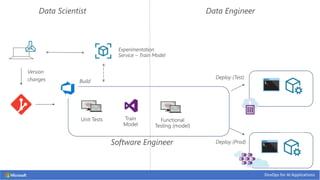

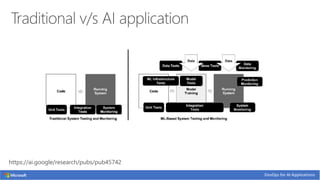

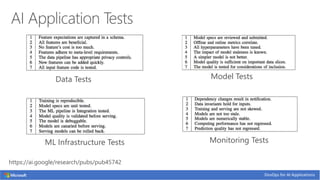

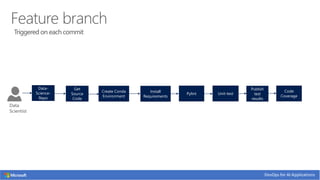

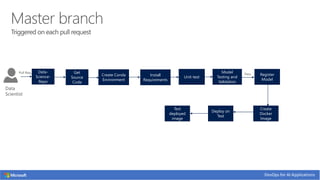

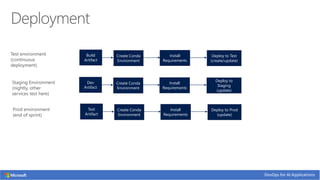

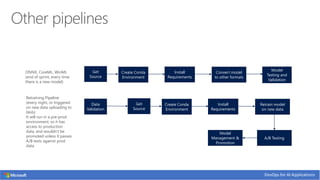

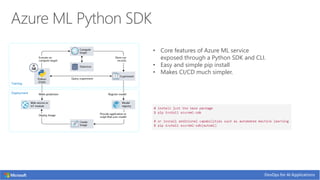

The document discusses integrating DevOps practices into AI application development, with continuous integration and continuous deployment as key components. It highlights challenges that machine learning processes face in enterprise environments, such as model versioning and reproducibility, and proposes a structured DevOps approach to streamline AI workflows. It suggests using Azure ML and DevOps tools to improve the agility of data science teams and the quality of their work in managing AI application lifecycles.