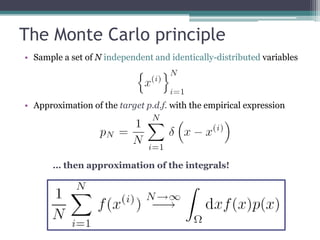

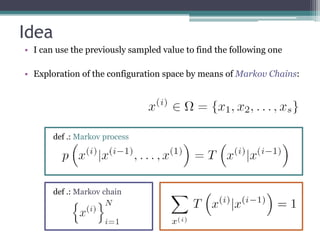

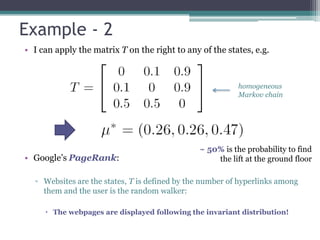

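1) Markov Chain Monte Carlo (MCMC) methods construct a Markov chain whose stationary distribution is the target distribution, so the states the chain visits serve as (correlated) samples from it. They are useful when direct sampling or exact computation of expectations is intractable, for example in high-dimensional problems.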

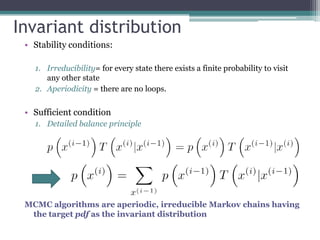

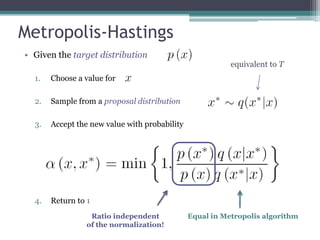

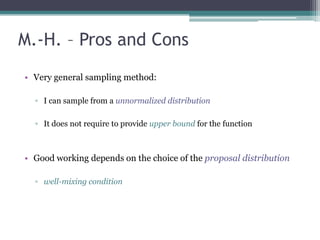

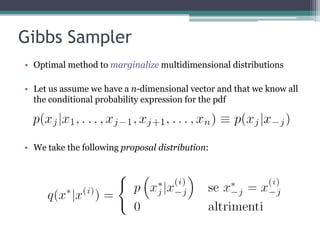

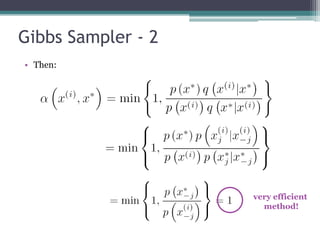

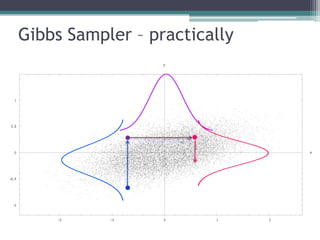

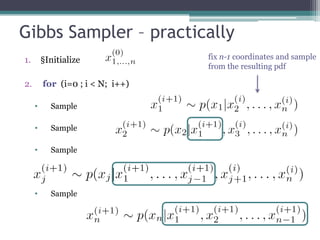

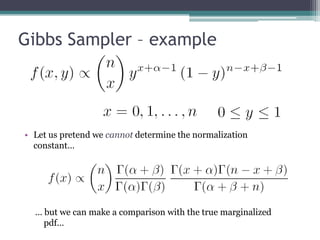

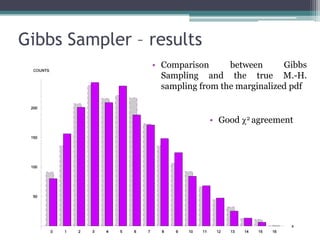

2) Common MCMC algorithms include Metropolis-Hastings, which draws candidates from a proposal distribution and accepts or rejects each one so that the chain targets the desired distribution, and Gibbs sampling, which samples a multidimensional distribution by updating each variable in turn from its conditional distribution given the others.
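
The two algorithms named in point 2 can be sketched in a few lines of Python. This is a minimal illustration rather than the slides' own code: the function names, the Gaussian random-walk proposal, the step size, and the two example targets (a standard normal and a bivariate normal with correlation `rho`) are all assumptions chosen to keep the example short.

```python
import math
import random


def metropolis_hastings(log_target, x0, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis-Hastings for a 1-D target (illustrative sketch).

    log_target returns the log of the (unnormalized) target density.
    """
    rng = random.Random(seed)
    x, log_p = x0, log_target(x0)
    samples = []
    for _ in range(n_samples):
        # Assumed proposal: symmetric Gaussian step around the current state.
        proposal = x + rng.gauss(0.0, step)
        log_p_new = log_target(proposal)
        # The proposal is symmetric, so the Hastings ratio reduces to the
        # ratio of target densities. 1 - random() lies in (0, 1], so the
        # logarithm is always defined.
        if math.log(1.0 - rng.random()) < log_p_new - log_p:
            x, log_p = proposal, log_p_new
        samples.append(x)  # a rejected proposal repeats the current state
    return samples


def gibbs_bivariate_normal(rho, n_samples, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    x, y = 0.0, 0.0
    cond_sd = math.sqrt(1.0 - rho * rho)
    samples = []
    for _ in range(n_samples):
        # Each full conditional is normal: x | y ~ N(rho * y, 1 - rho^2).
        x = rng.gauss(rho * y, cond_sd)
        y = rng.gauss(rho * x, cond_sd)
        samples.append((x, y))
    return samples


# Example targets (assumptions for illustration only).
mh_samples = metropolis_hastings(lambda x: -0.5 * x * x, x0=0.0, n_samples=20000)
gibbs_samples = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
```

In this sketch the Metropolis-Hastings samples should have a mean near 0 and a variance near 1, and the Gibbs samples should show a correlation near `rho`. In practice the early part of a chain is often discarded as burn-in, and the step size is tuned to balance acceptance rate against how far each move travels.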

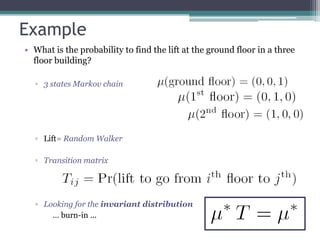

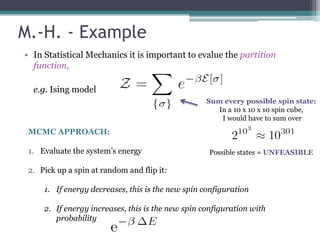

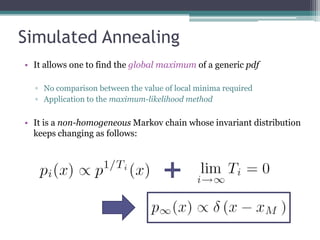

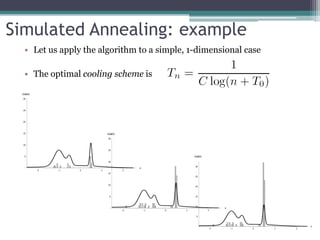

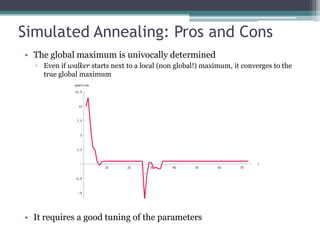

3) MCMC-based methods such as simulated annealing can search for the global maximum of a probability distribution, and MCMC more broadly has applications in statistical mechanics, optimization, and Bayesian inference.
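
As a rough illustration of point 3, simulated annealing can be written as a Metropolis-style random walk whose temperature is lowered over time. The sketch below makes several assumptions that do not come from the slides: a geometric cooling schedule, a Gaussian proposal, the parameter defaults, and a hypothetical bimodal `score` function with a local maximum near -2 and a higher one near 2.

```python
import math
import random


def simulated_annealing(score, x0, n_steps, t_start=2.0, t_end=0.01,
                        step=0.5, seed=0):
    """Maximize score(x) over the real line (illustrative sketch)."""
    rng = random.Random(seed)
    x, fx = x0, score(x0)
    best_x, best_fx = x, fx
    for i in range(n_steps):
        # Assumed schedule: geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (i / max(1, n_steps - 1))
        candidate = x + rng.gauss(0.0, step)
        f_candidate = score(candidate)
        # Always accept improvements; accept a worse move with probability
        # exp(delta / t), which shrinks as the temperature falls.
        if f_candidate >= fx or rng.random() < math.exp((f_candidate - fx) / t):
            x, fx = candidate, f_candidate
            if fx > best_fx:
                best_x, best_fx = x, fx
    return best_x, best_fx


def score(x):
    # Hypothetical bimodal objective: local peak near -2, higher peak near 2.
    return math.exp(-(x + 2.0) ** 2) + 2.0 * math.exp(-(x - 2.0) ** 2)


best_x, best_fx = simulated_annealing(score, x0=-2.0, n_steps=5000)
```

At high temperature the walk accepts many downhill moves, which lets it leave a local peak; as the temperature drops it behaves more like greedy hill climbing. Reaching the global maximum is not guaranteed for a finite run, so results depend on the schedule, the step size, and the random seed.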