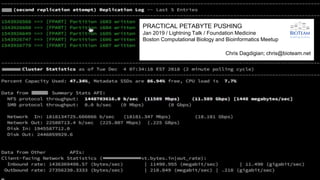

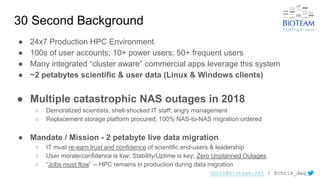

The document outlines a case study of a 2 petabyte data migration in a high-performance computing (HPC) environment, detailing the challenges faced during numerous NAS outages and subsequent data migration efforts. Key strategies include avoiding simultaneous data management and movement, understanding vendor-specific data protection overhead, and utilizing tools like 'fpart' and 'fpsync' for effective replication. It emphasizes the importance of proactive communication regarding management expectations and the necessity for thorough testing and documentation throughout the migration process.

![chris@bioteam.net / @chris_dag

Lightning Talk ProTip: CONCLUSIONS FIRST

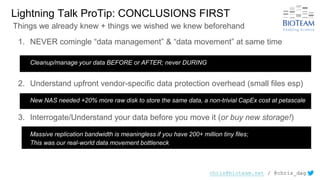

4. Be proactive in setting (and re-setting) management expectations

Data transfer time estimates based off of aggregate network bandwidth were

insanely wrong. Real world throughput range was: [ 2mb/sec -- 13GB/sec ]

5. Tasks that take days/weeks require visibility & transparency

Users & management will want a dashboard or progress view

6. Work against full filesystems or network shares ONLY (See tip #1 …)

Attempts to get clever with curated “exclude-these-files-and-folders” lists add

complexity and introduce vectors for human/operator error

Things we already knew + things we wished we knew beforehand](https://image.slidesharecdn.com/practicalpetabytepushing-190123192804/85/Practical-Petabyte-Pushing-4-320.jpg)

![chris@bioteam.net / @chris_dag

Materials & Methods - Process

The Process (one filesystem or share at a time):

● [A] Perform initial full replication in background on live “in-use” file system

● [B] Perform additional ‘re-sync’ replications to stay current

● [C] Perform ‘delete pass’ sync to catch data that was deleted from source filesystem while

replication(s) were occuring

● Repeat tasks [B] and [C] until time window for full sync + delete-pass is small enough to fit

within an acceptable maintenance/outage window

● Schedule outage window; make source filesystem Read-Only at a global level; perform final

replication sync; migrate client mounts; have backout plan handy

● Test, test, test, test, test, test (admins & end-users should both be involved testing)

● Have a plan to document & support the previously unknown storage users that will come out of the

woodwork once you mark the source filesystem read/only (!)

Things we already knew + things we wished we knew beforehand](https://image.slidesharecdn.com/practicalpetabytepushing-190123192804/85/Practical-Petabyte-Pushing-6-320.jpg)