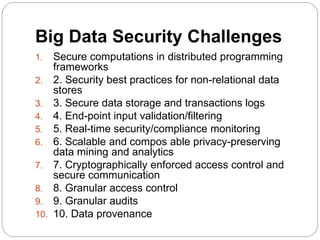

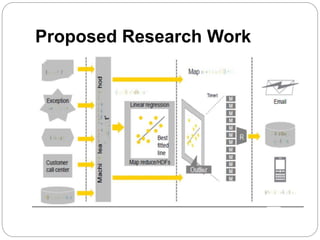

The document discusses the importance of security in big data analytics. It argues for a comprehensive security framework that can address emerging threats and the challenges associated with machine learning. It outlines the characteristics of big data, the infrastructure required to organize and analyze it, and the specific security challenges that arise in distributed computing environments. The conclusion highlights the growing role of machine learning in improving both data analytics and security measures.