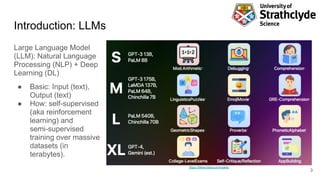

This document discusses the use of large language models (LLMs) such as GPT-3 and BERT in research. It gives a brief history of LLMs and describes how they rely on the transformer architecture, massive training datasets, and GPUs. Popular LLMs include GPT-3, BERT, LLaMA, and T5. The document suggests using models such as Sentence-BERT, T5, and GPT-3, together with applications such as Elicit, for research tasks including summarization and question answering. Finally, it lists further resources on LLMs and references recent papers on models such as GPT-4, LaMDA, PaLM, and Chinchilla.