This document provides an overview of fine-tuning the Llama 2 large language model (LLM) developed by Meta, emphasizing its advancements and competitive positioning in the AI landscape. Llama 2 is notable for its open-source nature, extensive training on 2 trillion tokens, and improved adaptability for specialized tasks through an innovative fine-tuning process. The article also discusses its foundation in responsible AI development, highlighting transparency and community engagement as central to its operational philosophy.

![20/27

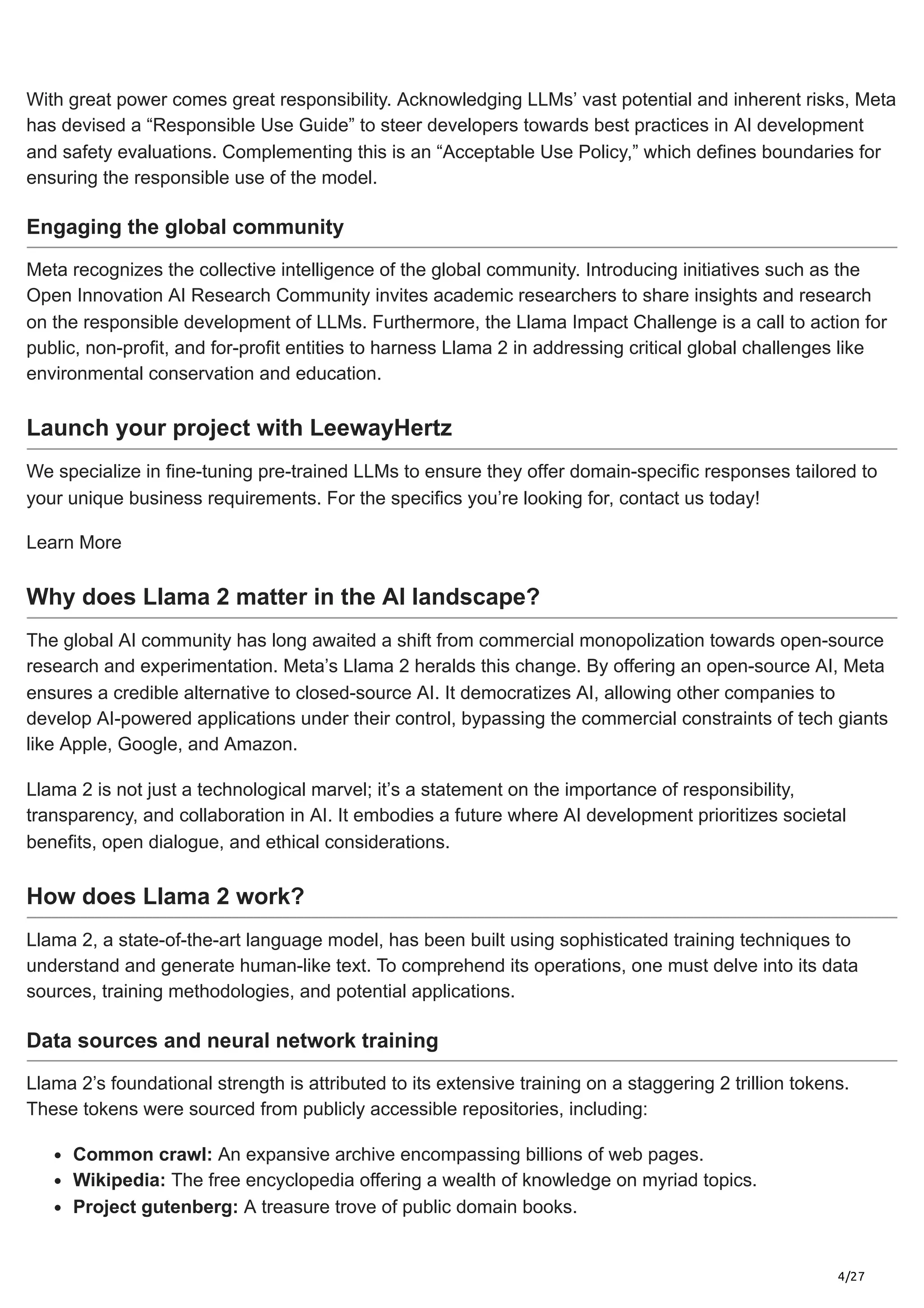

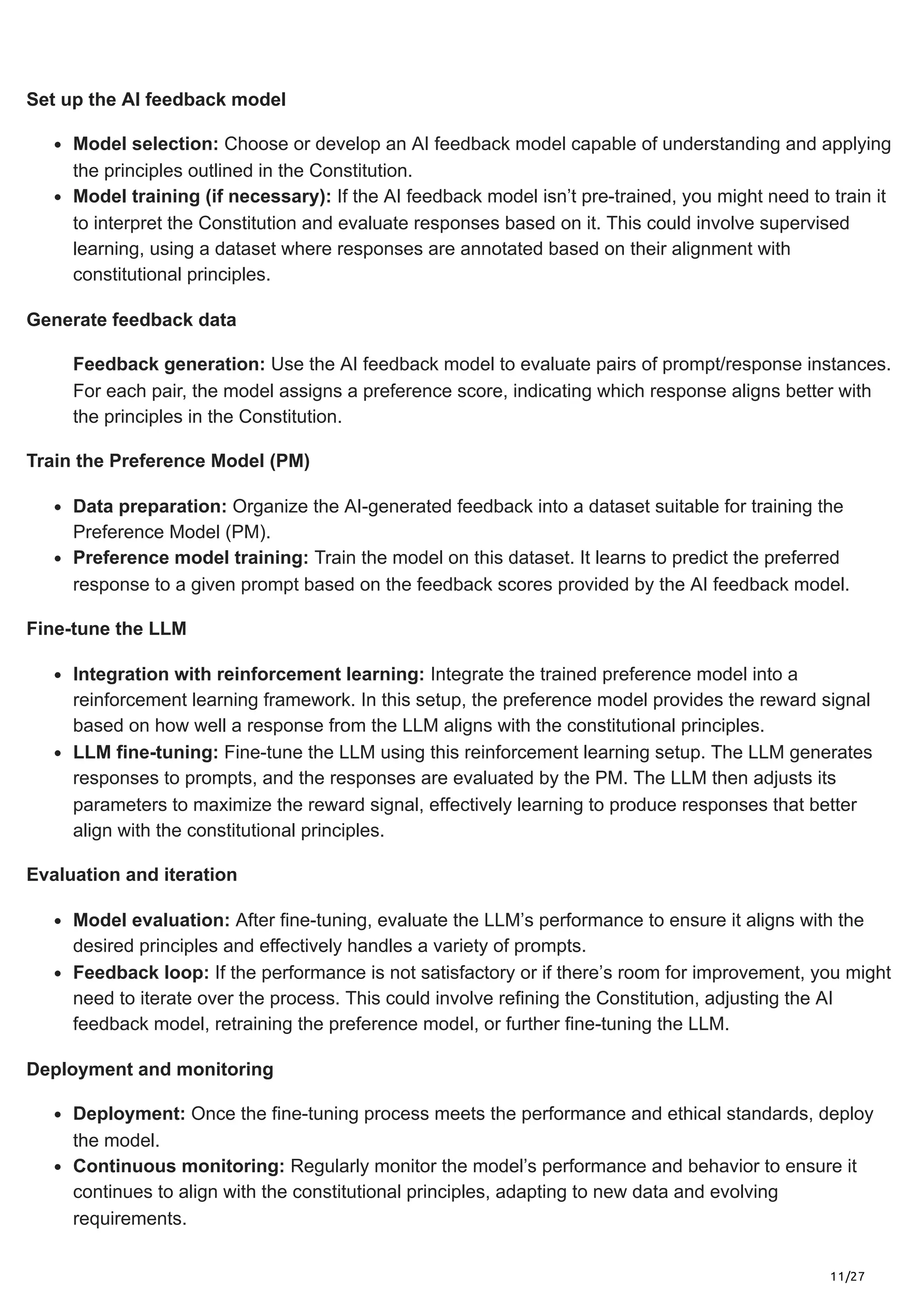

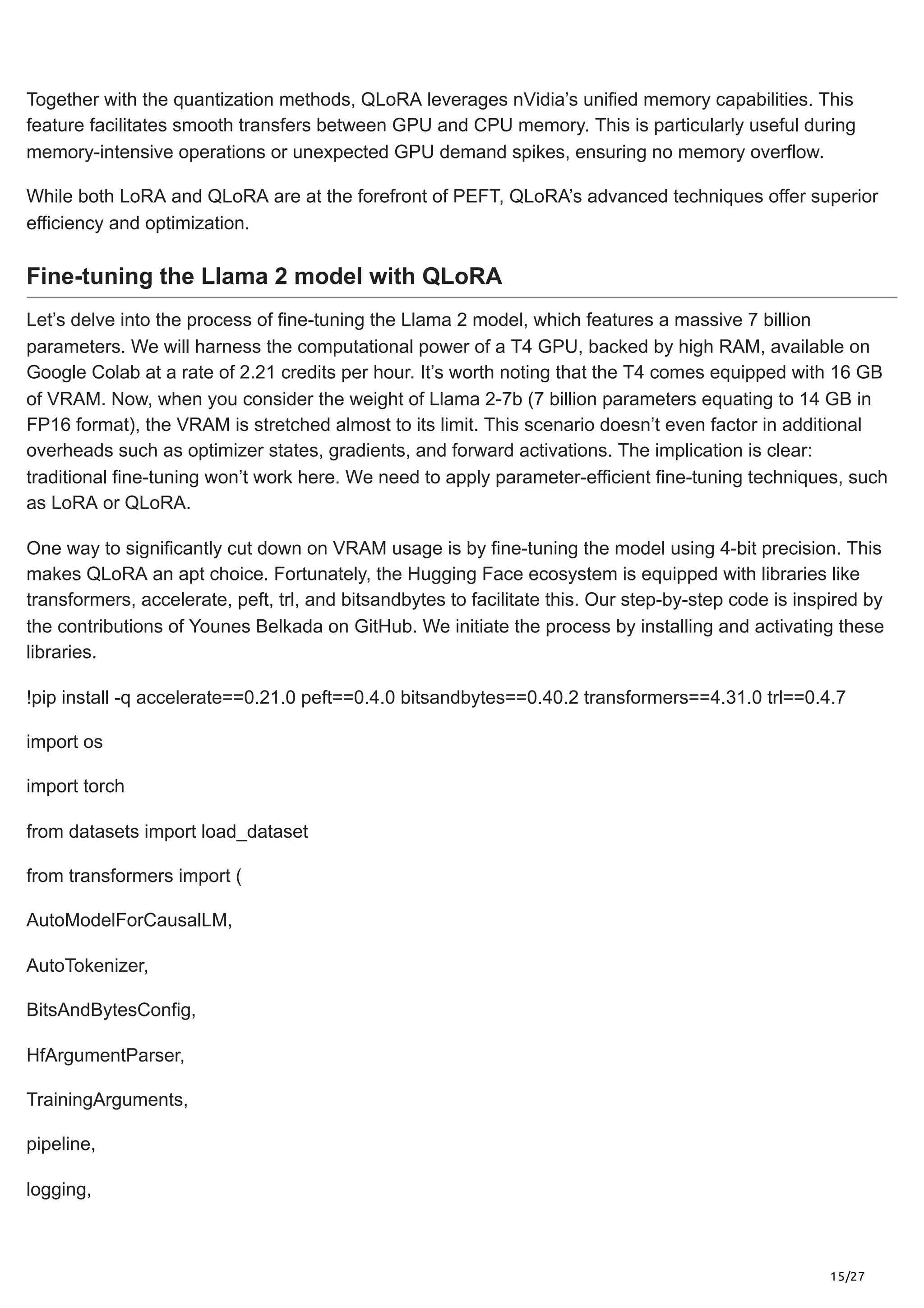

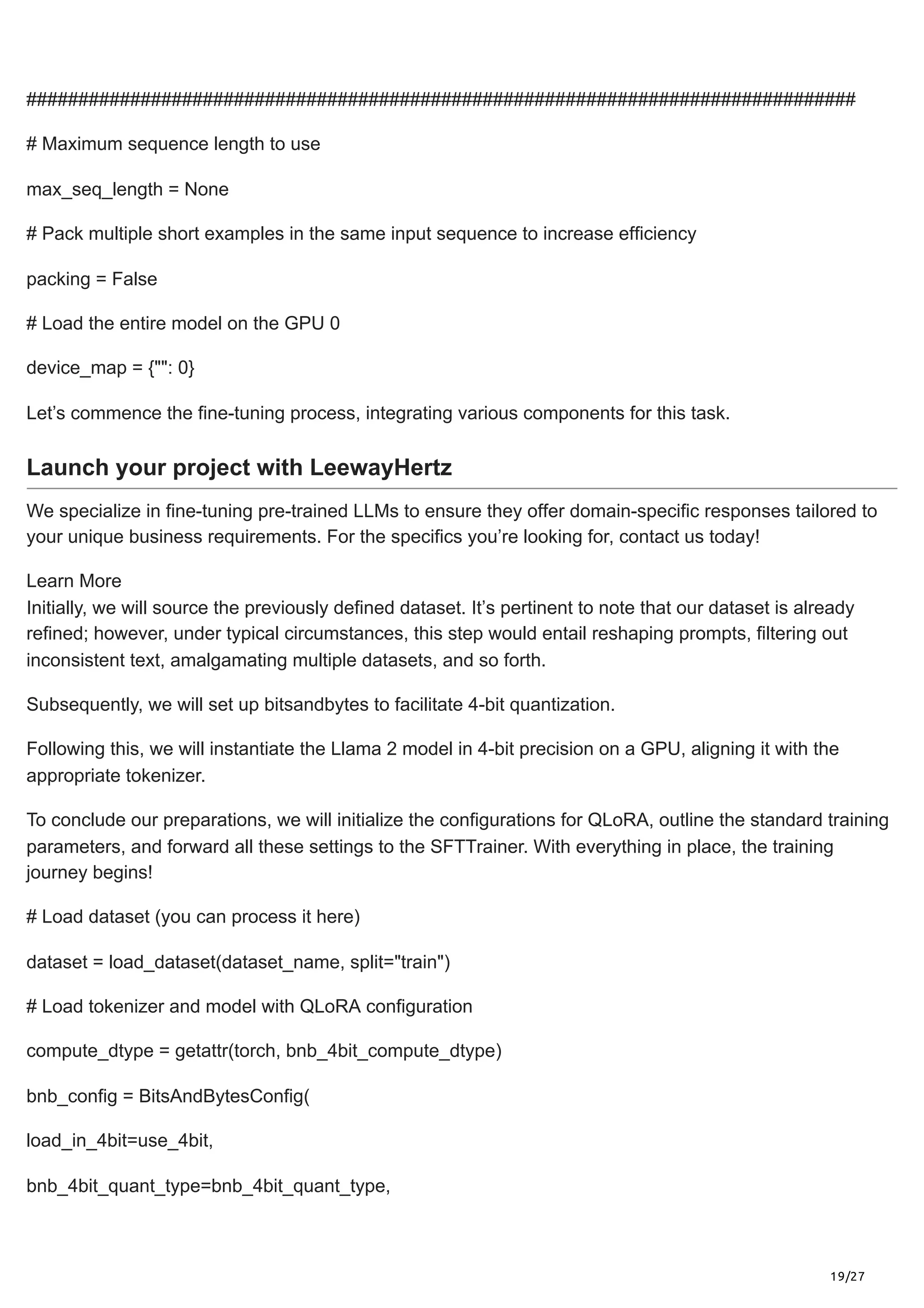

bnb_4bit_compute_dtype=compute_dtype,

bnb_4bit_use_double_quant=use_nested_quant,

)

# Check GPU compatibility with bfloat16

if compute_dtype == torch.float16 and use_4bit:

major, _ = torch.cuda.get_device_capability()

if major >= 8:

print("=" * 80)

print("Your GPU supports bfloat16: accelerate training with bf16=True")

print("=" * 80)

# Load base model

model = AutoModelForCausalLM.from_pretrained(

model_name,

quantization_config=bnb_config,

device_map=device_map

)

model.config.use_cache = False

model.config.pretraining_tp = 1

# Load LLaMA tokenizer

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

tokenizer.add_special_tokens({'pad_token': '[PAD]'})

tokenizer.pad_token = tokenizer.eos_token

tokenizer.padding_side = "right"

# Load LoRA configuration

peft_config = LoraConfig(

lora_alpha=lora_alpha,](https://image.slidesharecdn.com/fine-tuningllama2anoverview-240219064119-0a7baf3a/75/FINE-TUNING-LLAMA-2-DOMAIN-ADAPTATION-OF-A-PRE-TRAINED-MODEL-20-2048.jpg)

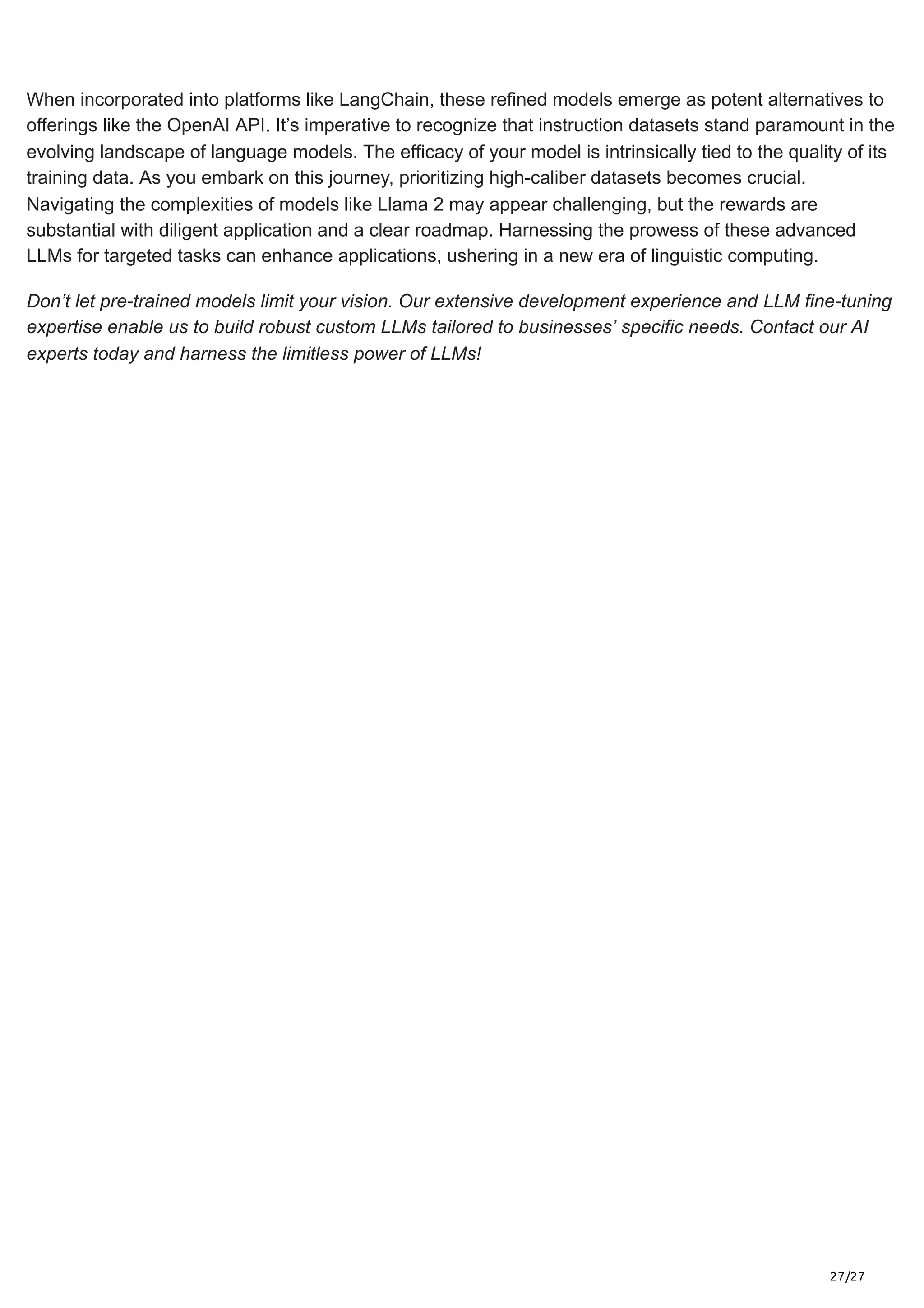

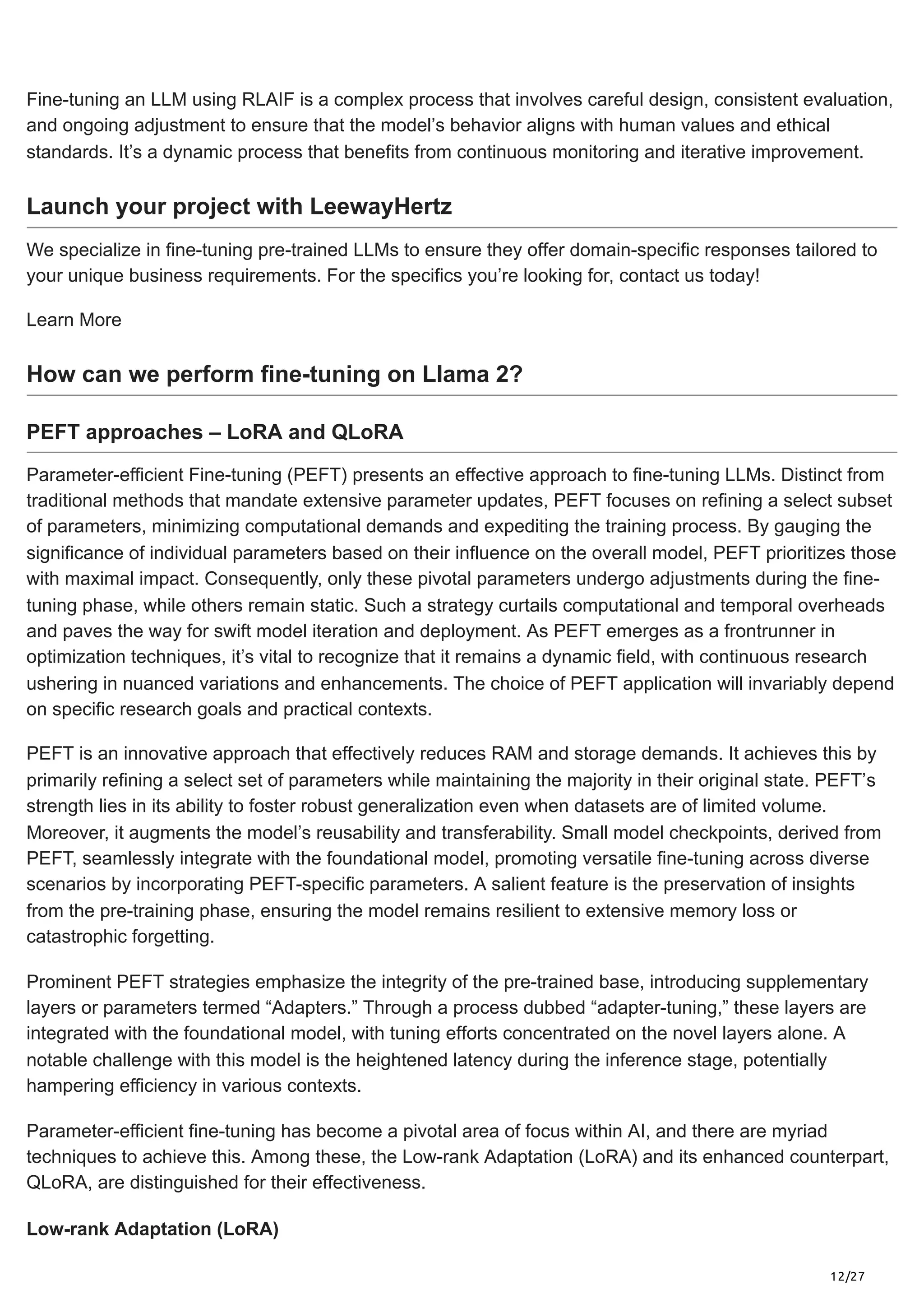

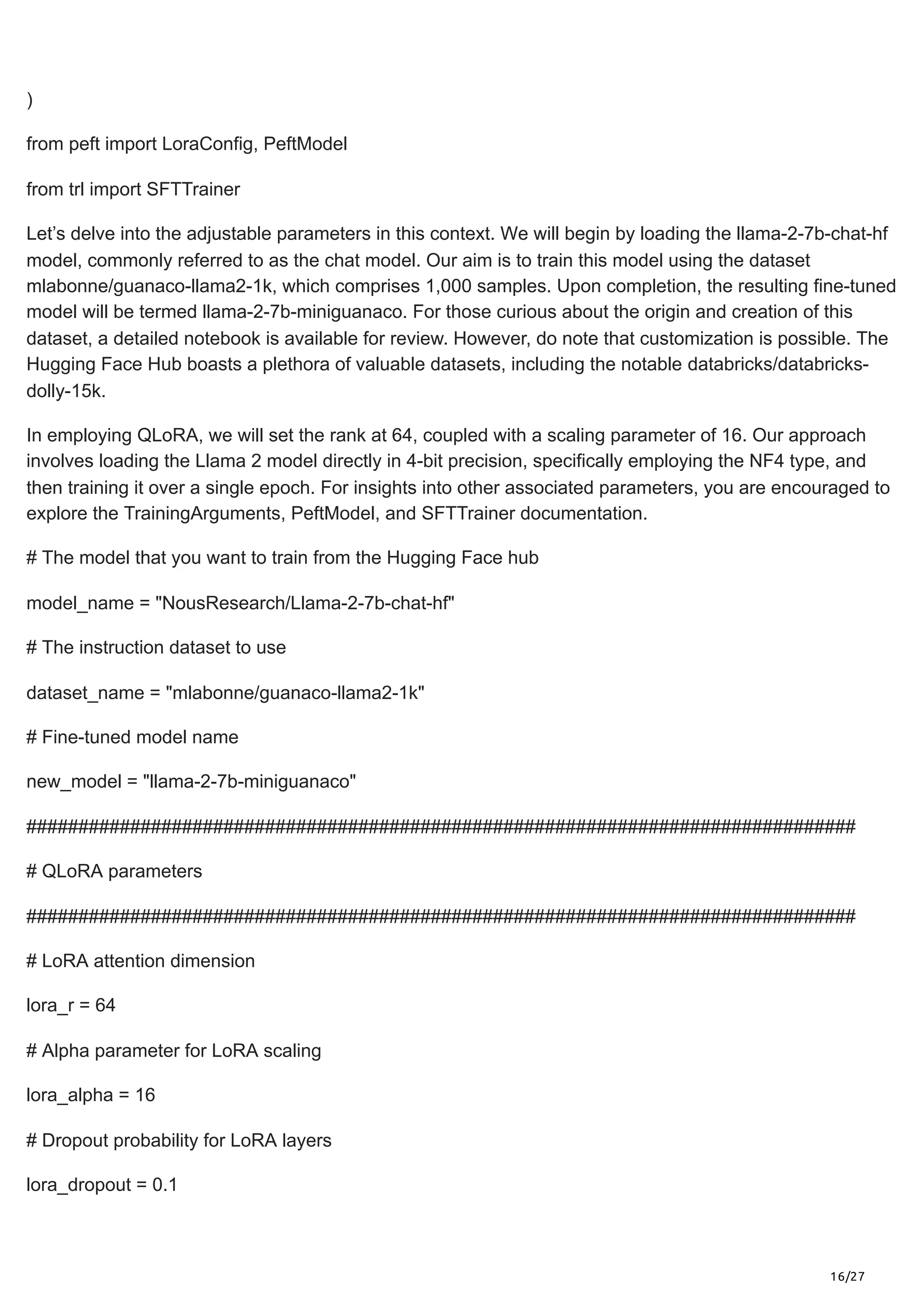

![23/27

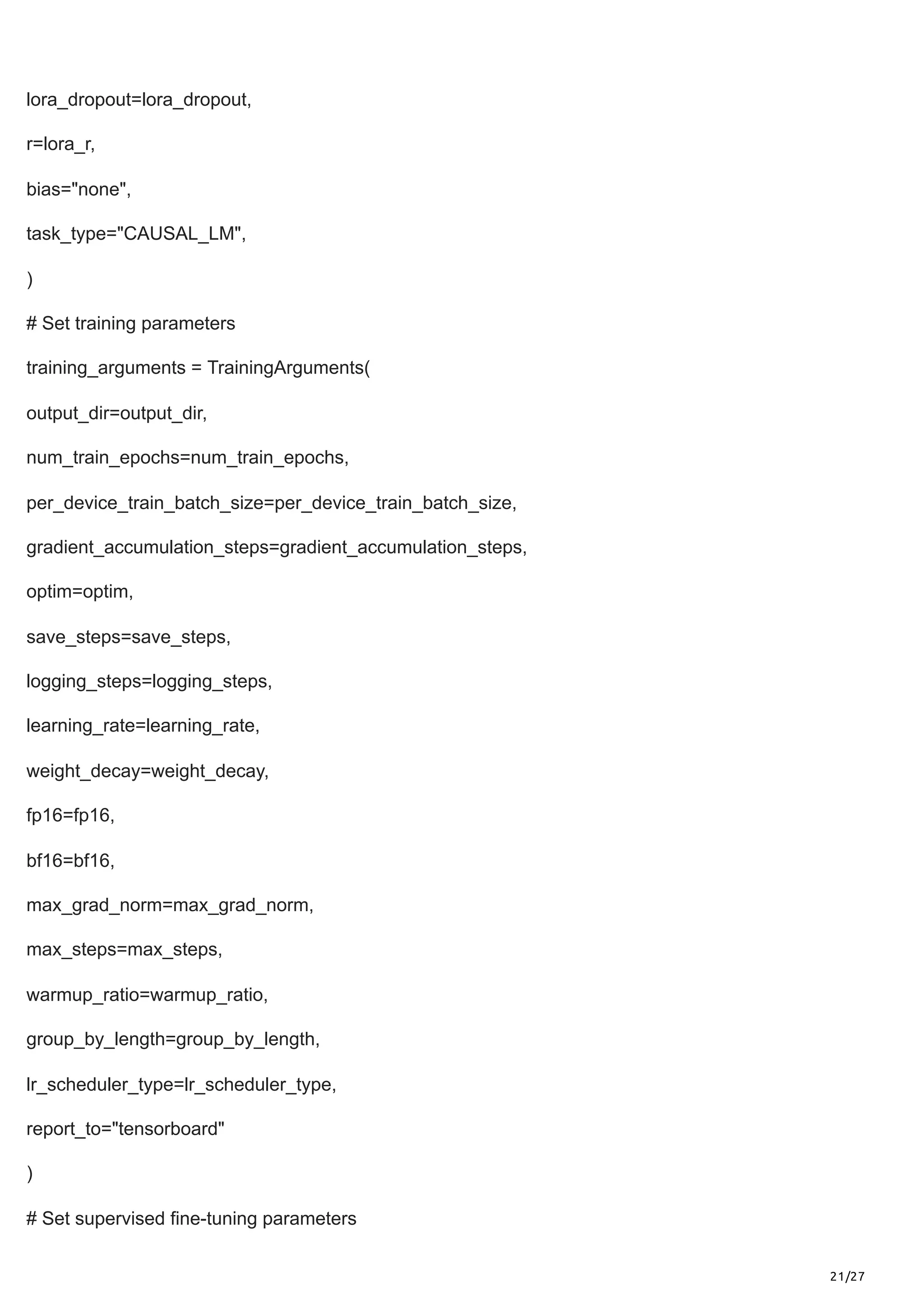

%load_ext tensorboard

%tensorboard --logdir results/runs

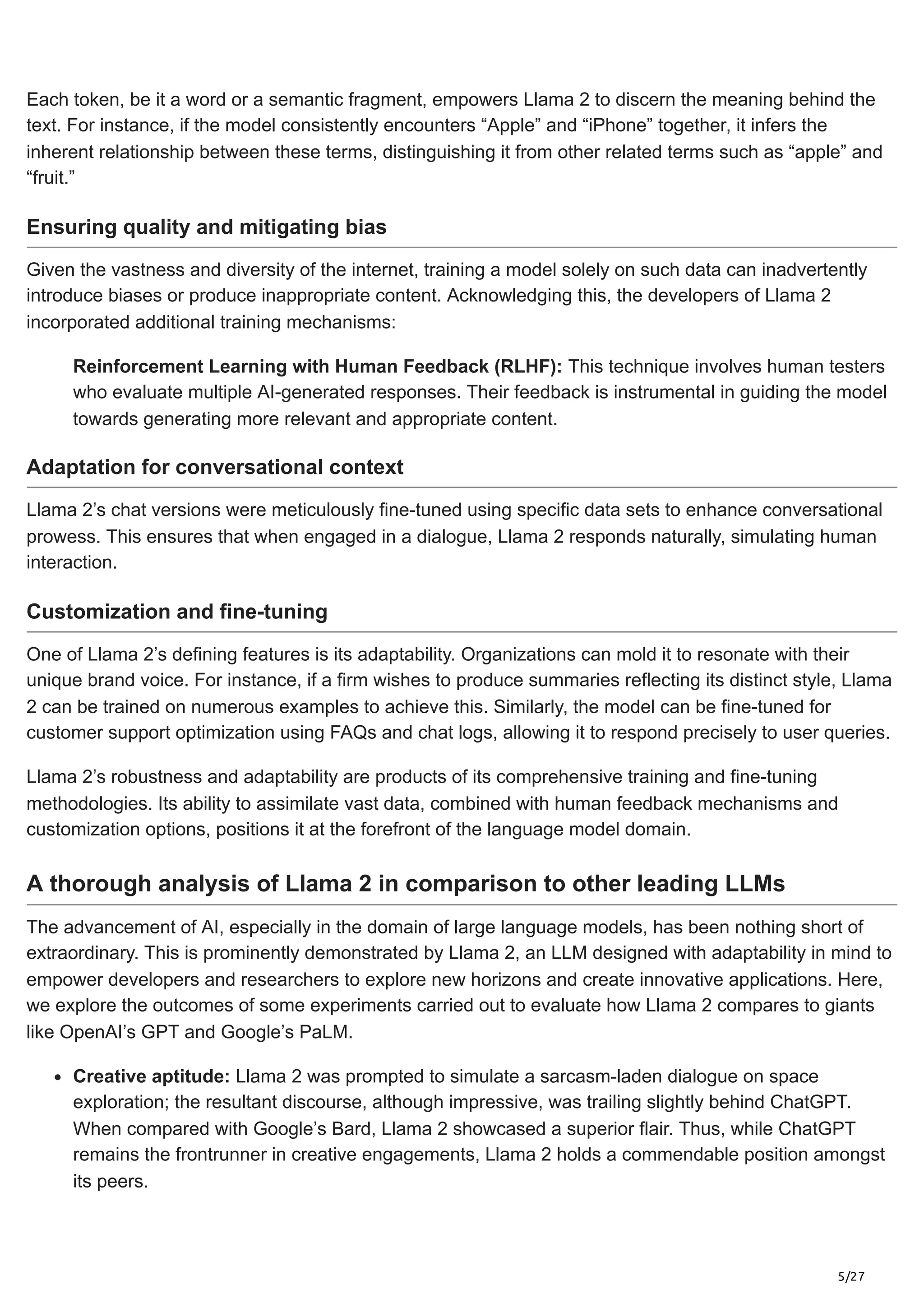

Let’s confirm that the model is operating as expected. While a comprehensive evaluation would be ideal,

we can utilize the text generation pipeline for preliminary assessments by posing questions such as,

“What is a large language model?” It’s crucial to adjust the input to align with Llama 2’s prompt structure.

# Ignore warnings

logging.set_verbosity(logging.CRITICAL)

# Run text generation pipeline with our next model

prompt = "What is a large language model?"

pipe = pipeline(task="text-generation", model=model, tokenizer=tokenizer, max_length=200)

result = pipe(f"[INST] {prompt} [/INST]")

print(result[0]['generated_text'])

The model provides the subsequent answer:

/usr/local/lib/python3.10/dist-packages/transformers/generation/utils.py:1270: UserWarning: You have

modified the pretrained model configuration to control generation. This is a deprecated strategy to control

generation and will be removed soon, in a future version. Please use a generation configuration file (see

https://huggingface.co/docs/transformers/main_classes/text_generation )

warnings.warn(

/usr/local/lib/python3.10/dist-packages/torch/utils/checkpoint.py:31: UserWarning: None of the inputs

have requires_grad=True. Gradients will be None

warnings.warn("None of the inputs have requires_grad=True. Gradients will be None")

INST] What is a large language model? [/INST] A large language model is a type of artificial intelligence

(AI) model that is trained on a large dataset of text to generate human-like language outputs. It is

designed to be able to understand and generate text in a way that is similar to human language, and can

be used for a variety of applications such as chatbots, language translation, and text summarization.

Large language models are typically trained using deep learning techniques, such as recurrent neural

networks (RNNs) or transformer models, and are often based on pre-trained models such as BERT or

RoBERTa. These models are trained on large datasets of text, such as books, articles, or websites, and

are designed to learn the patterns and structures of language.

Some examples of large language models include:

* BERT (Bidirectional Encoder Representations from Transformers):](https://image.slidesharecdn.com/fine-tuningllama2anoverview-240219064119-0a7baf3a/75/FINE-TUNING-LLAMA-2-DOMAIN-ADAPTATION-OF-A-PRE-TRAINED-MODEL-23-2048.jpg)

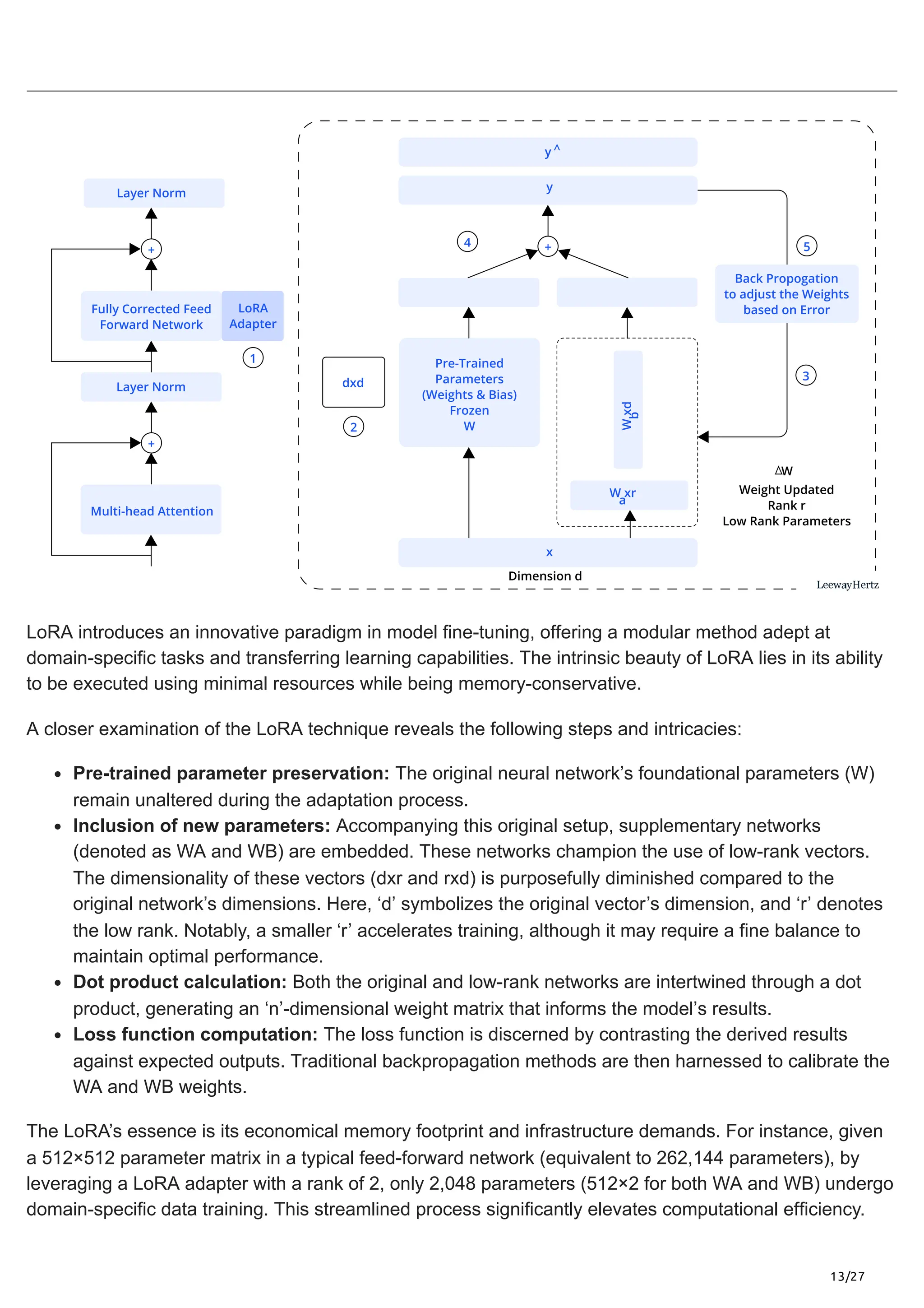

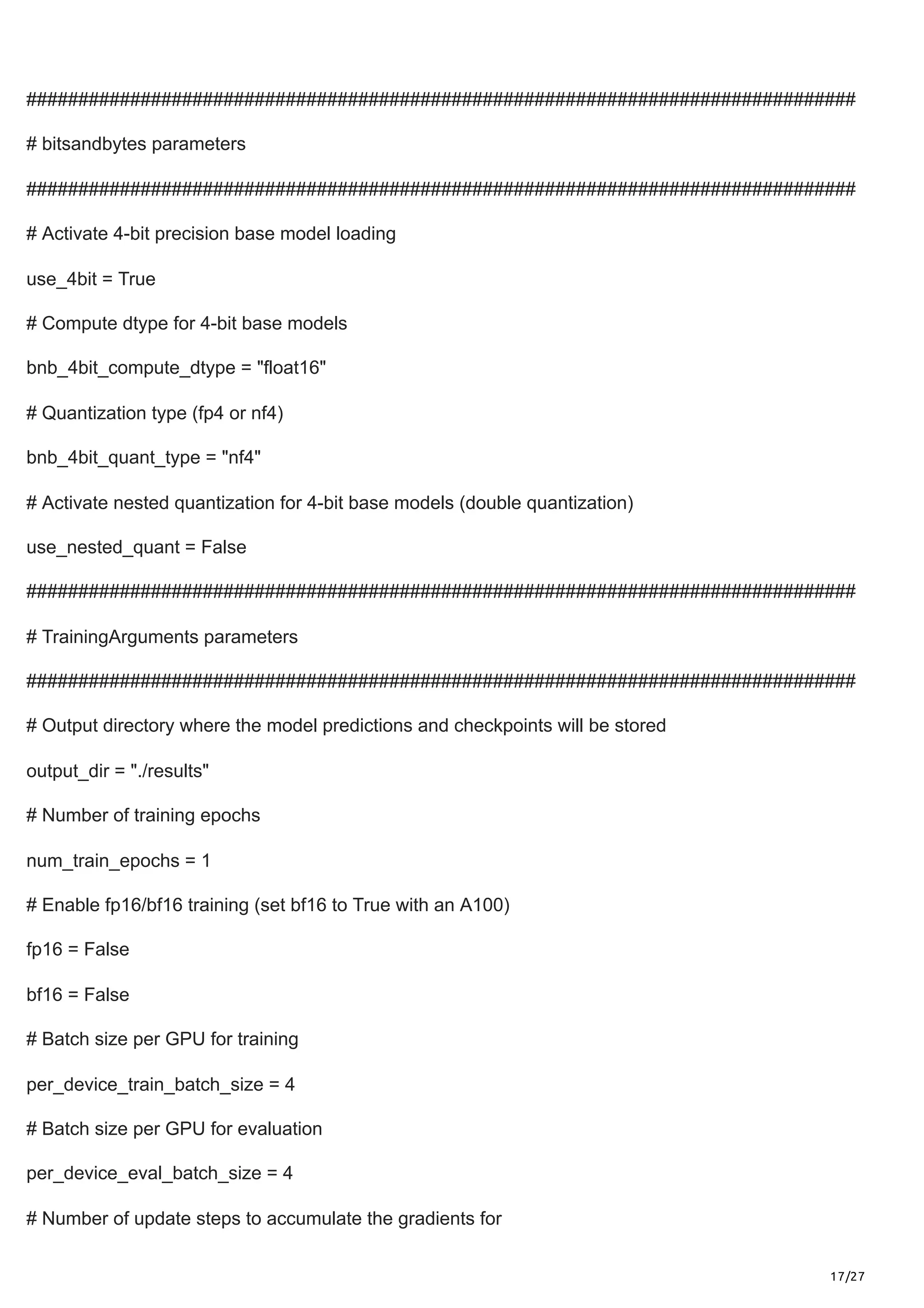

![24/27

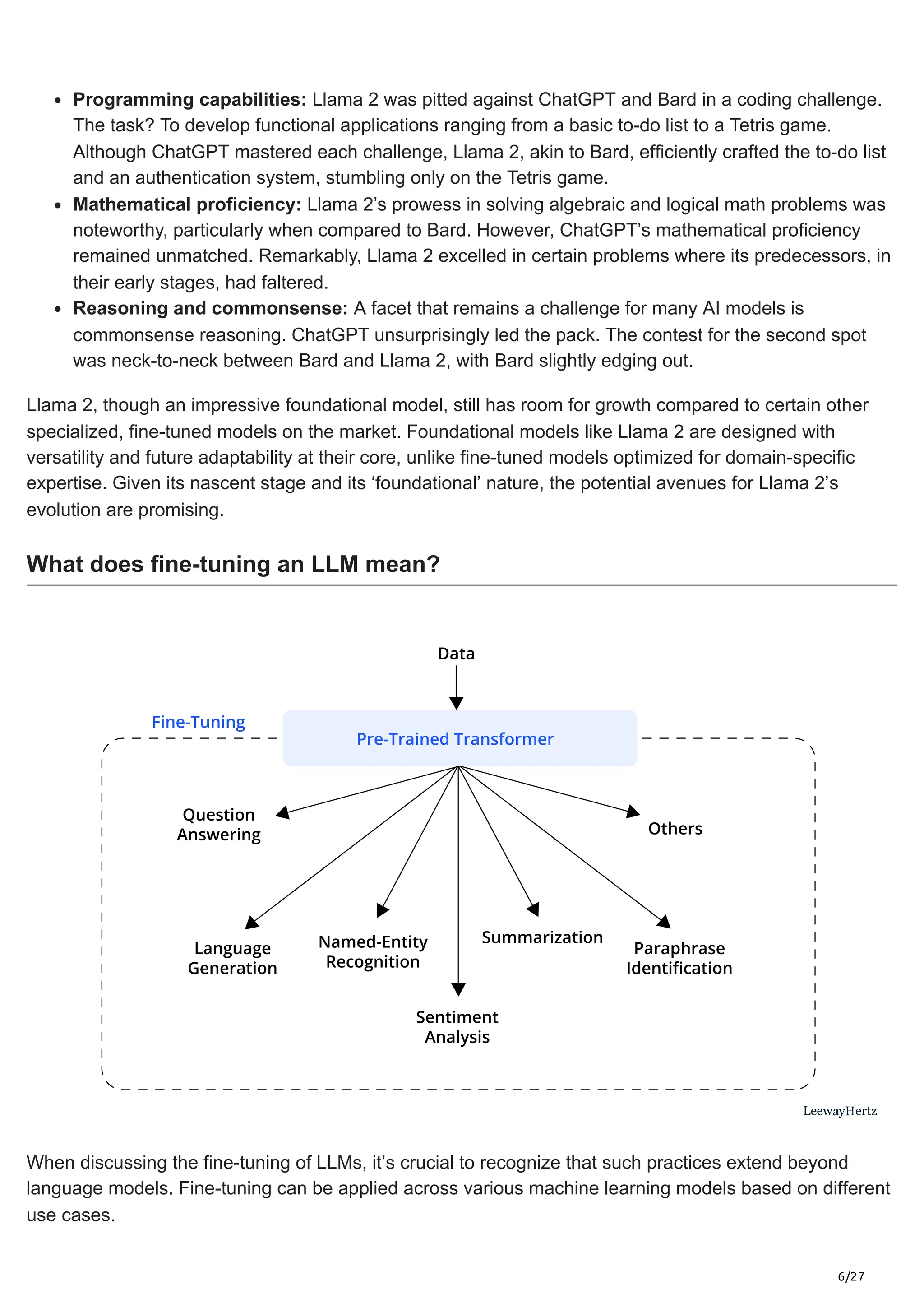

Drawing from our observations, the coherence demonstrated by a model encompassing merely 7 billion

parameters is quite impressive. Feel free to experiment further by posing more complex questions,

perhaps drawing from datasets like BigBench-Hard. Historically, the Guanaco dataset has been pivotal in

crafting top-tier models. To achieve this, consider training a Llama 2 model utilizing the

mlabonne/guanaco-llama2 dataset.

So, how do we save our refined llama-2-7b-miniguanaco model? The key lies in integrating the LoRA

weights with the foundational model. Presently, a direct, seamless method to achieve this eludes us. The

procedure involves reloading the base model in FP16 precision and harnessing the capabilities of the peft

library for amalgamation. Regrettably, this approach has occasionally been met with VRAM-related

challenges, even after its clearance. It might be beneficial to restart the notebook, initiate the primary

three cells, and then progress to the subsequent one.

# Reload model in FP16 and merge it with LoRA weights

base_model = AutoModelForCausalLM.from_pretrained(

model_name,

low_cpu_mem_usage=True,

return_dict=True,

torch_dtype=torch.float16,

device_map=device_map,

)

model = PeftModel.from_pretrained(base_model, new_model)

model = model.merge_and_unload()

# Reload tokenizer to save it

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)

tokenizer.add_special_tokens({'pad_token': '[PAD]'})

tokenizer.pad_token = tokenizer.eos_token

tokenizer.padding_side = "right"

Having successfully combined our weights and reinstated the tokenizer, we are positioned to upload the

entirety to the Hugging Face Hub, ensuring our model’s preservation.

!huggingface-cli login

model.push_to_hub(new_model, use_temp_dir=False)](https://image.slidesharecdn.com/fine-tuningllama2anoverview-240219064119-0a7baf3a/75/FINE-TUNING-LLAMA-2-DOMAIN-ADAPTATION-OF-A-PRE-TRAINED-MODEL-24-2048.jpg)