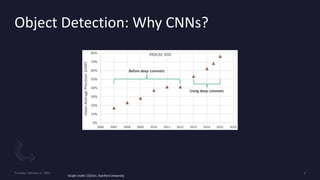

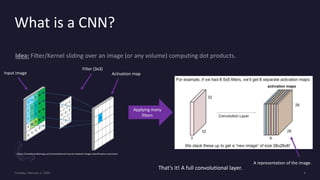

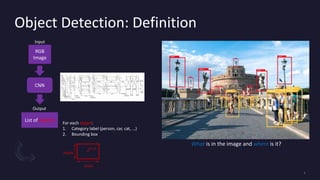

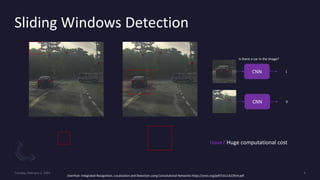

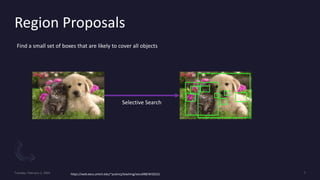

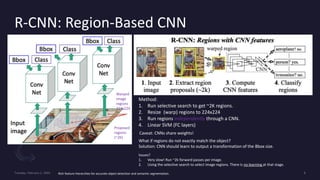

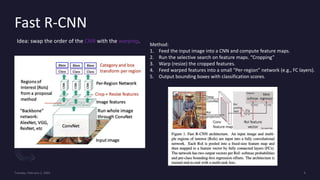

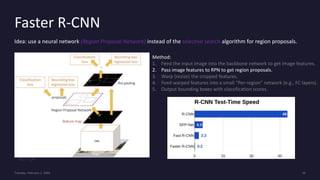

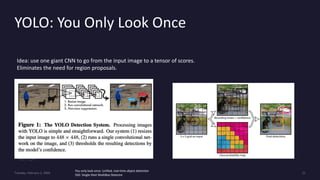

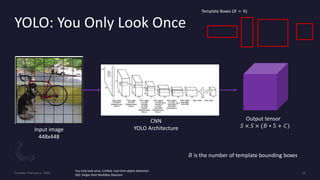

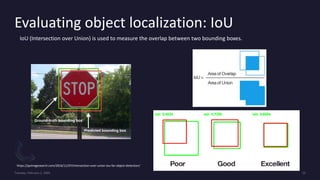

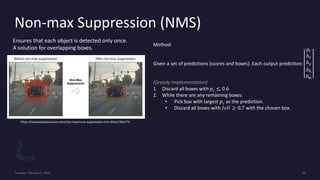

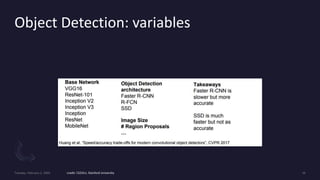

This document provides an overview of object detection using convolutional neural networks (CNNs). It discusses why CNNs are well-suited for object detection, defines object detection, and describes several popular CNN-based object detection algorithms including R-CNN, Fast R-CNN, Faster R-CNN, and YOLO. It also covers important object detection concepts like region proposals, sliding windows, IoU for evaluating localization accuracy, and NMS for removing overlapping detections. Open-source resources for implementing these algorithms are also provided.