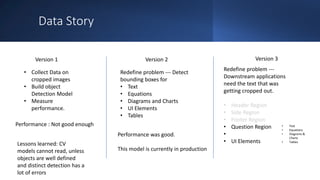

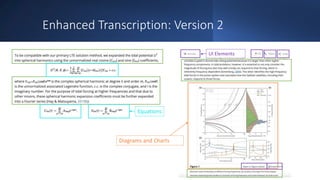

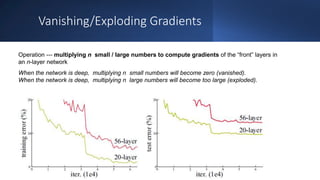

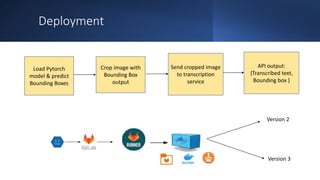

The document discusses the current state and future prospects of computer vision, covering the development and performance of object detection models with a particular focus on the YOLO (You Only Look Once) algorithm. It highlights where existing models fall short in interpreting complex images and stresses that a well-defined problem and appropriate evaluation metrics are essential for improving model performance. The key takeaways are that YOLO is effective for real-time applications and that model outputs must be integrated carefully with transcription services.