CapsuleGAN: Generative Adversarial Capsule Network

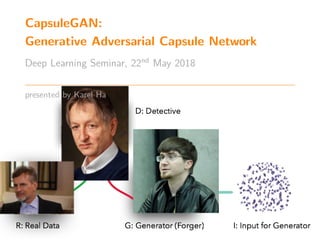

- 1. CapsuleGAN: Generative Adversarial Capsule Network Deep Learning Seminar, 22nd May 2018 presented by Karel Ha

- 3. Motivation

- 4. 1

- 5. The pooling operation used in convolutional neural networks is a big mistake and the fact that it works so well is a disaster. G. Hinton http://condo.ca/wp-content/uploads/2017/03/ Vector-director-Institute-artificial-intelligence-Toronto-MaRS-Discovery-District-H ca_.jpg 1

- 6. The pooling operation used in convolutional neural networks is a big mistake and the fact that it works so well is a disaster. G. Hinton [...] it makes much more sense to represent a pose as a small matrix that converts a vector of positional coordinates relative to the viewer into positional coordinates relative to the shape itself. G. Hinton http://condo.ca/wp-content/uploads/2017/03/ Vector-director-Institute-artificial-intelligence-Toronto-MaRS-Discovery-District-H ca_.jpg 1

- 8. Part-Whole Geometric Relationships “What is wrong with convolutional neural nets?” https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 2

- 9. Part-Whole Geometric Relationships “What is wrong with convolutional neural nets?” To a CNN (with MaxPool)... https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 2

- 10. Part-Whole Geometric Relationships “What is wrong with convolutional neural nets?” To a CNN (with MaxPool)... ...both pictures are similar, since they both contain similar elements. https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 2

- 11. Part-Whole Geometric Relationships “What is wrong with convolutional neural nets?” To a CNN (with MaxPool)... ...both pictures are similar, since they both contain similar elements. ...a mere presence of objects can be a very strong indicator to consider a face in the image. https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 2

- 12. Part-Whole Geometric Relationships “What is wrong with convolutional neural nets?” To a CNN (with MaxPool)... ...both pictures are similar, since they both contain similar elements. ...a mere presence of objects can be a very strong indicator to consider a face in the image. ...orientational and relative spatial relationships are not very important. https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 2

- 13. Part-Whole Geometric Relationships Scene Graphs from Computer Graphics http://math.hws.edu/graphicsbook/c2/scene-graph.png 3

- 14. Part-Whole Geometric Relationships Scene Graphs from Computer Graphics ...takes into account relative positions of objects. http://math.hws.edu/graphicsbook/c2/scene-graph.png 3

- 15. Part-Whole Geometric Relationships Scene Graphs from Computer Graphics ...takes into account relative positions of objects. The internal representation in computer memory: http://math.hws.edu/graphicsbook/c2/scene-graph.png 3

- 16. Part-Whole Geometric Relationships Scene Graphs from Computer Graphics ...takes into account relative positions of objects. The internal representation in computer memory: a) arrays of geometrical objects http://math.hws.edu/graphicsbook/c2/scene-graph.png 3

- 17. Part-Whole Geometric Relationships Scene Graphs from Computer Graphics ...takes into account relative positions of objects. The internal representation in computer memory: a) arrays of geometrical objects b) matrices representing their relative positions and orientations http://math.hws.edu/graphicsbook/c2/scene-graph.png 3

- 18. Part-Whole Geometric Relationships Inverse (Computer) Graphics https://d1jnx9ba8s6j9r.cloudfront.net/blog/wp-content/uploads/2017/12/ CNN-Capsule-Networks-Edureka-442x300.png 4

- 19. Part-Whole Geometric Relationships Inverse (Computer) Graphics Inverse graphics: https://d1jnx9ba8s6j9r.cloudfront.net/blog/wp-content/uploads/2017/12/ CNN-Capsule-Networks-Edureka-442x300.png 4

- 20. Part-Whole Geometric Relationships Inverse (Computer) Graphics Inverse graphics: from visual information received by eyes https://d1jnx9ba8s6j9r.cloudfront.net/blog/wp-content/uploads/2017/12/ CNN-Capsule-Networks-Edureka-442x300.png 4

- 21. Part-Whole Geometric Relationships Inverse (Computer) Graphics Inverse graphics: from visual information received by eyes deconstruct a hierarchical representation of the world around us https://d1jnx9ba8s6j9r.cloudfront.net/blog/wp-content/uploads/2017/12/ CNN-Capsule-Networks-Edureka-442x300.png 4

- 22. Part-Whole Geometric Relationships Inverse (Computer) Graphics Inverse graphics: from visual information received by eyes deconstruct a hierarchical representation of the world around us and try to match it with already learned patterns and relationships stored in the brain https://d1jnx9ba8s6j9r.cloudfront.net/blog/wp-content/uploads/2017/12/ CNN-Capsule-Networks-Edureka-442x300.png 4

- 23. Part-Whole Geometric Relationships Inverse (Computer) Graphics Inverse graphics: from visual information received by eyes deconstruct a hierarchical representation of the world around us and try to match it with already learned patterns and relationships stored in the brain relationships between 3D objects using a “pose” (= translation plus rotation) https://d1jnx9ba8s6j9r.cloudfront.net/blog/wp-content/uploads/2017/12/ CNN-Capsule-Networks-Edureka-442x300.png 4

- 24. Pose Equivariance and the Viewing Angle https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 5

- 25. Pose Equivariance and the Viewing Angle We have probably never seen these exact pictures, but we can still immediately recognize the object in it... https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 5

- 26. Pose Equivariance and the Viewing Angle We have probably never seen these exact pictures, but we can still immediately recognize the object in it... internal representation in the brain: independent of the viewing angle https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 5

- 27. Pose Equivariance and the Viewing Angle We have probably never seen these exact pictures, but we can still immediately recognize the object in it... internal representation in the brain: independent of the viewing angle quite hard for a CNN: no built-in understanding of 3D space https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 5

- 28. Pose Equivariance and the Viewing Angle We have probably never seen these exact pictures, but we can still immediately recognize the object in it... internal representation in the brain: independent of the viewing angle quite hard for a CNN: no built-in understanding of 3D space much easier for a CapsNet: these relationships are explicitly modeled https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 5

- 29. Routing by an Agreement: High-Dimensional Coincidence https://www.oreilly.com/ideas/introducing-capsule-networks 6

- 30. Routing by an Agreement: High-Dimensional Coincidence https://www.oreilly.com/ideas/introducing-capsule-networks 6

- 31. Routing by an Agreement: High-Dimensional Coincidence https://www.oreilly.com/ideas/introducing-capsule-networks 6

- 32. Routing by an Agreement: Illustrative Overview https://www.oreilly.com/ideas/introducing-capsule-networks 7

- 33. Routing by an Agreement: Illustrative Overview https://www.oreilly.com/ideas/introducing-capsule-networks 7

- 34. Routing by an Agreement: Recognizing Ambiguity in Images https://www.oreilly.com/ideas/introducing-capsule-networks 8

- 35. Routing: Lower Levels Voting for Higher-Level Feature [Sabour, Frosst, and Hinton 2017] 9

- 36. How to do it? (mathematically) 9

- 37. What Is a Capsule? a group of neurons that: perform some complicated internal computations on their inputs https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-ii-how-capsules-work-153b6ade9f66 10

- 38. What Is a Capsule? a group of neurons that: perform some complicated internal computations on their inputs encapsulate their results into a small vector of highly informative outputs https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-ii-how-capsules-work-153b6ade9f66 10

- 39. What Is a Capsule? a group of neurons that: perform some complicated internal computations on their inputs encapsulate their results into a small vector of highly informative outputs recognize an implicitly defined visual entity (over a limited domain of viewing conditions and deformations) https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-ii-how-capsules-work-153b6ade9f66 10

- 40. What Is a Capsule? a group of neurons that: perform some complicated internal computations on their inputs encapsulate their results into a small vector of highly informative outputs recognize an implicitly defined visual entity (over a limited domain of viewing conditions and deformations) encode the probability of the entity being present https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-ii-how-capsules-work-153b6ade9f66 10

- 41. What Is a Capsule? a group of neurons that: perform some complicated internal computations on their inputs encapsulate their results into a small vector of highly informative outputs recognize an implicitly defined visual entity (over a limited domain of viewing conditions and deformations) encode the probability of the entity being present encode instantiation parameters pose, lighting, deformation relative to entity’s (implicitly defined) canonical version https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-ii-how-capsules-work-153b6ade9f66 10

- 42. Output As A Vector https://www.oreilly.com/ideas/introducing-capsule-networks 11

- 43. Output As A Vector probability of presence: locally invariant E.g. if 0, 3, 2, 0, 0 leads to 0, 1, 0, 0, then 0, 0, 3, 2, 0 should also lead to 0, 1, 0, 0. https://www.oreilly.com/ideas/introducing-capsule-networks 11

- 44. Output As A Vector probability of presence: locally invariant E.g. if 0, 3, 2, 0, 0 leads to 0, 1, 0, 0, then 0, 0, 3, 2, 0 should also lead to 0, 1, 0, 0. instantiation parameters: equivariant E.g. if 0, 3, 2, 0, 0 leads to 0, 1, 0, 0, then 0, 0, 3, 2, 0 might lead to 0, 0, 1, 0. https://www.oreilly.com/ideas/introducing-capsule-networks 11

- 45. Previous Version of Capsules for illustration taken from “Transforming Auto-Encoders” [Hinton, Krizhevsky, and Wang 2011] [Hinton, Krizhevsky, and Wang 2011] 12

- 46. Previous Version of Capsules for illustration taken from “Transforming Auto-Encoders” [Hinton, Krizhevsky, and Wang 2011] three capsules of a transforming auto-encoder (that models translation) [Hinton, Krizhevsky, and Wang 2011] 12

- 49. Capsule’s Vector Flow Note: no bias (included in affine transformation matrices Wij ’s) https://cdn-images-1.medium.com/max/1250/1*GbmQ2X9NQoGuJ1M-EOD67g.png 13

- 51. Capsule Schema with Routing [Sabour, Frosst, and Hinton 2017] 14

- 52. Routing Softmax cij = exp(bij ) k exp(bik) (1) [Sabour, Frosst, and Hinton 2017] 15

- 53. Prediction Vectors ˆuj|i = Wij ui (2) [Sabour, Frosst, and Hinton 2017] 16

- 54. Total Input sj = i cij ˆuj|i (3) [Sabour, Frosst, and Hinton 2017] 17

- 55. Squashing: (vector) non-linearity vj = ||sj ||2 1 + ||sj ||2 sj ||sj || (4) [Sabour, Frosst, and Hinton 2017] 18

- 56. Squashing: (vector) non-linearity vj = ||sj ||2 1 + ||sj ||2 sj ||sj || (4) [Sabour, Frosst, and Hinton 2017] 18

- 57. Squashing: Plot for 1-D input https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-ii-how-capsules-work-153b6ade9f66 19

- 58. Squashing: Plot for 1-D input https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-ii-how-capsules-work-153b6ade9f66 19

- 59. Routing Algorithm [Sabour, Frosst, and Hinton 2017] 20

- 60. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) [Sabour, Frosst, and Hinton 2017] 20

- 61. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) 2: for all capsule i in layer l and capsule j in layer (l + 1): bij ← 0. [Sabour, Frosst, and Hinton 2017] 20

- 62. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) 2: for all capsule i in layer l and capsule j in layer (l + 1): bij ← 0. 3: for r iterations do [Sabour, Frosst, and Hinton 2017] 20

- 63. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) 2: for all capsule i in layer l and capsule j in layer (l + 1): bij ← 0. 3: for r iterations do 4: for all capsule i in layer l: ci ← softmax(bi ) softmax from Eq. 1 [Sabour, Frosst, and Hinton 2017] 20

- 64. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) 2: for all capsule i in layer l and capsule j in layer (l + 1): bij ← 0. 3: for r iterations do 4: for all capsule i in layer l: ci ← softmax(bi ) softmax from Eq. 1 5: for all capsule j in layer (l + 1): sj ← i cij ˆuj|i total input from Eq. 3 [Sabour, Frosst, and Hinton 2017] 20

- 65. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) 2: for all capsule i in layer l and capsule j in layer (l + 1): bij ← 0. 3: for r iterations do 4: for all capsule i in layer l: ci ← softmax(bi ) softmax from Eq. 1 5: for all capsule j in layer (l + 1): sj ← i cij ˆuj|i total input from Eq. 3 6: for all capsule j in layer (l + 1): vj ← squash(sj ) squash from Eq. 4 [Sabour, Frosst, and Hinton 2017] 20

- 66. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) 2: for all capsule i in layer l and capsule j in layer (l + 1): bij ← 0. 3: for r iterations do 4: for all capsule i in layer l: ci ← softmax(bi ) softmax from Eq. 1 5: for all capsule j in layer (l + 1): sj ← i cij ˆuj|i total input from Eq. 3 6: for all capsule j in layer (l + 1): vj ← squash(sj ) squash from Eq. 4 7: for all capsule i in layer l and capsule j in layer (l + 1): bij ← bij + ˆuj|i .vj [Sabour, Frosst, and Hinton 2017] 20

- 67. Routing Algorithm Algorithm Dynamic Routing between Capsules 1: procedure Routing(ˆuj|i , r, l) 2: for all capsule i in layer l and capsule j in layer (l + 1): bij ← 0. 3: for r iterations do 4: for all capsule i in layer l: ci ← softmax(bi ) softmax from Eq. 1 5: for all capsule j in layer (l + 1): sj ← i cij ˆuj|i total input from Eq. 3 6: for all capsule j in layer (l + 1): vj ← squash(sj ) squash from Eq. 4 7: for all capsule i in layer l and capsule j in layer (l + 1): bij ← bij + ˆuj|i .vj return vj [Sabour, Frosst, and Hinton 2017] 20

- 69. Average Change of Each Routing Logit bij (by each routing iteration during training) [Sabour, Frosst, and Hinton 2017] 21

- 70. Average Change of Each Routing Logit bij (by each routing iteration during training) [Sabour, Frosst, and Hinton 2017] 21

- 71. Log Scale of Final Differences [Sabour, Frosst, and Hinton 2017] 22

- 72. Training Loss of CapsNet on CIFAR10 (batch size of 128) The CapsNet with 3 routing iterations optimizes the loss faster and converges to a lower loss at the end. [Sabour, Frosst, and Hinton 2017] 23

- 73. Training Loss of CapsNet on CIFAR10 (batch size of 128) The CapsNet with 3 routing iterations optimizes the loss faster and converges to a lower loss at the end. [Sabour, Frosst, and Hinton 2017] 23

- 74. Training Loss of CapsNet on CIFAR10 (batch size of 128) The CapsNet with 3 routing iterations optimizes the loss faster and converges to a lower loss at the end. [Sabour, Frosst, and Hinton 2017] 23

- 75. Architecture: Encoder-Decoder encoder: [Sabour, Frosst, and Hinton 2017] 24

- 76. Architecture: Encoder-Decoder encoder: decoder: [Sabour, Frosst, and Hinton 2017] 24

- 77. Encoder: CapsNet with 3 Layers [Sabour, Frosst, and Hinton 2017] 25

- 78. Encoder: CapsNet with 3 Layers input: 28 by 28 MNIST digit image [Sabour, Frosst, and Hinton 2017] 25

- 79. Encoder: CapsNet with 3 Layers input: 28 by 28 MNIST digit image output: 16-dimensional vector of instantiation parameters [Sabour, Frosst, and Hinton 2017] 25

- 80. Encoder Layer 1: (Standard) Convolutional Layer [Sabour, Frosst, and Hinton 2017] 26

- 81. Encoder Layer 1: (Standard) Convolutional Layer input: 28 × 28 image (one color channel) [Sabour, Frosst, and Hinton 2017] 26

- 82. Encoder Layer 1: (Standard) Convolutional Layer input: 28 × 28 image (one color channel) output: 20 × 20 × 256 [Sabour, Frosst, and Hinton 2017] 26

- 83. Encoder Layer 1: (Standard) Convolutional Layer input: 28 × 28 image (one color channel) output: 20 × 20 × 256 256 kernels with size of 9 × 9 × 1 [Sabour, Frosst, and Hinton 2017] 26

- 84. Encoder Layer 1: (Standard) Convolutional Layer input: 28 × 28 image (one color channel) output: 20 × 20 × 256 256 kernels with size of 9 × 9 × 1 stride 1 [Sabour, Frosst, and Hinton 2017] 26

- 85. Encoder Layer 1: (Standard) Convolutional Layer input: 28 × 28 image (one color channel) output: 20 × 20 × 256 256 kernels with size of 9 × 9 × 1 stride 1 ReLU activation [Sabour, Frosst, and Hinton 2017] 26

- 86. Encoder Layer 2: PrimaryCaps [Sabour, Frosst, and Hinton 2017] 27

- 87. Encoder Layer 2: PrimaryCaps input: 20 × 20 × 256 basic features detected by the convolutional layer [Sabour, Frosst, and Hinton 2017] 27

- 88. Encoder Layer 2: PrimaryCaps input: 20 × 20 × 256 basic features detected by the convolutional layer output: 6 × 6 × 8 × 32 vector (activation) outputs of primary capsules [Sabour, Frosst, and Hinton 2017] 27

- 89. Encoder Layer 2: PrimaryCaps input: 20 × 20 × 256 basic features detected by the convolutional layer output: 6 × 6 × 8 × 32 vector (activation) outputs of primary capsules 32 primary capsules [Sabour, Frosst, and Hinton 2017] 27

- 90. Encoder Layer 2: PrimaryCaps input: 20 × 20 × 256 basic features detected by the convolutional layer output: 6 × 6 × 8 × 32 vector (activation) outputs of primary capsules 32 primary capsules each applies eight 9 × 9 × 256 convolutional kernels to the 20 × 20 × 256 input to produce 6 × 6 × 8 output [Sabour, Frosst, and Hinton 2017] 27

- 91. Encoder Layer 3: DigitCaps [Sabour, Frosst, and Hinton 2017] 28

- 92. Encoder Layer 3: DigitCaps input: 6 × 6 × 8 × 32 (6 × 6 × 32)-many 8-dimensional vector activations [Sabour, Frosst, and Hinton 2017] 28

- 93. Encoder Layer 3: DigitCaps input: 6 × 6 × 8 × 32 (6 × 6 × 32)-many 8-dimensional vector activations output: 16 × 10 [Sabour, Frosst, and Hinton 2017] 28

- 94. Encoder Layer 3: DigitCaps input: 6 × 6 × 8 × 32 (6 × 6 × 32)-many 8-dimensional vector activations output: 16 × 10 10 digit capsules [Sabour, Frosst, and Hinton 2017] 28

- 95. Encoder Layer 3: DigitCaps input: 6 × 6 × 8 × 32 (6 × 6 × 32)-many 8-dimensional vector activations output: 16 × 10 10 digit capsules input vectors gets their own 8 × 16 weight matrix Wij that maps 8-dimensional input space to the 16-dimensional capsule output space [Sabour, Frosst, and Hinton 2017] 28

- 96. Margin Loss for a Digit Existence https://medium.com/@pechyonkin/part-iv-capsnet-architecture-6a64422f7dce 29

- 97. Margin Loss to Train the Whole Encoder In other words, each DigitCap c has loss: [Sabour, Frosst, and Hinton 2017] 30

- 98. Margin Loss to Train the Whole Encoder In other words, each DigitCap c has loss: Lc = max(0, m+ − ||vc||)2 iff a digit of class c is present, λ max(0, ||vc|| − m− )2 otherwise. [Sabour, Frosst, and Hinton 2017] 30

- 99. Margin Loss to Train the Whole Encoder In other words, each DigitCap c has loss: Lc = max(0, m+ − ||vc||)2 iff a digit of class c is present, λ max(0, ||vc|| − m− )2 otherwise. m+ = 0.9: The loss is 0 iff the correct DigitCap predicts the correct label with probability ≥ 0.9. [Sabour, Frosst, and Hinton 2017] 30

- 100. Margin Loss to Train the Whole Encoder In other words, each DigitCap c has loss: Lc = max(0, m+ − ||vc||)2 iff a digit of class c is present, λ max(0, ||vc|| − m− )2 otherwise. m+ = 0.9: The loss is 0 iff the correct DigitCap predicts the correct label with probability ≥ 0.9. m− = 0.1: The loss is 0 iff the mismatching DigitCap predicts an incorrect label with probability ≤ 0.1. [Sabour, Frosst, and Hinton 2017] 30

- 101. Margin Loss to Train the Whole Encoder In other words, each DigitCap c has loss: Lc = max(0, m+ − ||vc||)2 iff a digit of class c is present, λ max(0, ||vc|| − m− )2 otherwise. m+ = 0.9: The loss is 0 iff the correct DigitCap predicts the correct label with probability ≥ 0.9. m− = 0.1: The loss is 0 iff the mismatching DigitCap predicts an incorrect label with probability ≤ 0.1. λ = 0.5 is down-weighting of the loss for absent digit classes. It stops the initial learning from shrinking the lengths of the activity vectors. [Sabour, Frosst, and Hinton 2017] 30

- 102. Margin Loss to Train the Whole Encoder In other words, each DigitCap c has loss: Lc = max(0, m+ − ||vc||)2 iff a digit of class c is present, λ max(0, ||vc|| − m− )2 otherwise. m+ = 0.9: The loss is 0 iff the correct DigitCap predicts the correct label with probability ≥ 0.9. m− = 0.1: The loss is 0 iff the mismatching DigitCap predicts an incorrect label with probability ≤ 0.1. λ = 0.5 is down-weighting of the loss for absent digit classes. It stops the initial learning from shrinking the lengths of the activity vectors. Squares? Because there are L2 norms in the loss function? [Sabour, Frosst, and Hinton 2017] 30

- 103. Margin Loss to Train the Whole Encoder In other words, each DigitCap c has loss: Lc = max(0, m+ − ||vc||)2 iff a digit of class c is present, λ max(0, ||vc|| − m− )2 otherwise. m+ = 0.9: The loss is 0 iff the correct DigitCap predicts the correct label with probability ≥ 0.9. m− = 0.1: The loss is 0 iff the mismatching DigitCap predicts an incorrect label with probability ≤ 0.1. λ = 0.5 is down-weighting of the loss for absent digit classes. It stops the initial learning from shrinking the lengths of the activity vectors. Squares? Because there are L2 norms in the loss function? The total loss is the sum of the losses of all digit capsules. [Sabour, Frosst, and Hinton 2017] 30

- 104. Margin Loss Function Value for Positive and for Negative Class For the correct DigitCap, the loss is 0 iff it predicts the correct label with probability ≥ 0.9. For the mismatching DigitCap, the loss is 0 iff it predicts an incorrect label with probability ≤ 0.1. https://medium.com/@pechyonkin/part-iv-capsnet-architecture-6a64422f7dce 31

- 105. Margin Loss Function Value for Positive and for Negative Class For the correct DigitCap, the loss is 0 iff it predicts the correct label with probability ≥ 0.9. For the mismatching DigitCap, the loss is 0 iff it predicts an incorrect label with probability ≤ 0.1. https://medium.com/@pechyonkin/part-iv-capsnet-architecture-6a64422f7dce 31

- 106. Decoder: Regularization of CapsNets [Sabour, Frosst, and Hinton 2017] 32

- 107. Decoder: Regularization of CapsNets Decoder is used for regularization: decodes input from DigitCaps to recreate an image of a (28 × 28)-pixels digit [Sabour, Frosst, and Hinton 2017] 32

- 108. Decoder: Regularization of CapsNets Decoder is used for regularization: decodes input from DigitCaps to recreate an image of a (28 × 28)-pixels digit with the loss function being the Euclidean distance [Sabour, Frosst, and Hinton 2017] 32

- 109. Decoder: Regularization of CapsNets Decoder is used for regularization: decodes input from DigitCaps to recreate an image of a (28 × 28)-pixels digit with the loss function being the Euclidean distance ignores the negative classes [Sabour, Frosst, and Hinton 2017] 32

- 110. Decoder: Regularization of CapsNets Decoder is used for regularization: decodes input from DigitCaps to recreate an image of a (28 × 28)-pixels digit with the loss function being the Euclidean distance ignores the negative classes forces capsules to learn features useful for reconstruction [Sabour, Frosst, and Hinton 2017] 32

- 111. Decoder: 3 Fully Connected Layers [Sabour, Frosst, and Hinton 2017] 33

- 112. Decoder: 3 Fully Connected Layers Layer 4: from 16 × 10 input to 512 output, ReLU activations [Sabour, Frosst, and Hinton 2017] 33

- 113. Decoder: 3 Fully Connected Layers Layer 4: from 16 × 10 input to 512 output, ReLU activations Layer 5: from 512 input to 1024 output, ReLU activations [Sabour, Frosst, and Hinton 2017] 33

- 114. Decoder: 3 Fully Connected Layers Layer 4: from 16 × 10 input to 512 output, ReLU activations Layer 5: from 512 input to 1024 output, ReLU activations Layer 6: from 1024 input to 784 output, sigmoid activations (after reshaping it produces a (28 × 28)-pixels decoded image) [Sabour, Frosst, and Hinton 2017] 33

- 116. MNIST Reconstructions (CapsNet, 3 routing iterations) Label: 8 5 5 5 Prediction: 8 5 3 3 Reconstruction: 8 5 5 3 Input: Output: [Sabour, Frosst, and Hinton 2017] 35

- 117. Dimension Perturbations one of the 16 dimensions, by intervals of 0.05 in the range [−0.25, 0.25]: [Sabour, Frosst, and Hinton 2017] 36

- 118. Dimension Perturbations one of the 16 dimensions, by intervals of 0.05 in the range [−0.25, 0.25]: Interpretation Reconstructions after perturbing “scale and thickness” “localized part” [Sabour, Frosst, and Hinton 2017] 36

- 119. Dimension Perturbations one of the 16 dimensions, by intervals of 0.05 in the range [−0.25, 0.25]: Interpretation Reconstructions after perturbing “scale and thickness” “localized part” “stroke thickness” “localized skew” [Sabour, Frosst, and Hinton 2017] 36

- 120. Dimension Perturbations one of the 16 dimensions, by intervals of 0.05 in the range [−0.25, 0.25]: Interpretation Reconstructions after perturbing “scale and thickness” “localized part” “stroke thickness” “localized skew” “width and translation” “localized part” [Sabour, Frosst, and Hinton 2017] 36

- 121. Dimension Perturbations: Latent Codes of 0 and 1 rows: DigitCaps dimensions columns (from left to right): + {−0.25, −0.2, −0.15, −0.1, −0.05, 0, 0.05, 0.1, 0.15, 0.2, 0.25} https://github.com/XifengGuo/CapsNet-Keras 37

- 122. Dimension Perturbations: Latent Codes of 2 and 3 rows: DigitCaps dimensions columns (from left to right): + {−0.25, −0.2, −0.15, −0.1, −0.05, 0, 0.05, 0.1, 0.15, 0.2, 0.25} https://github.com/XifengGuo/CapsNet-Keras 38

- 123. Dimension Perturbations: Latent Codes of 4 and 5 rows: DigitCaps dimensions columns (from left to right): + {−0.25, −0.2, −0.15, −0.1, −0.05, 0, 0.05, 0.1, 0.15, 0.2, 0.25} https://github.com/XifengGuo/CapsNet-Keras 39

- 124. Dimension Perturbations: Latent Codes of 6 and 7 rows: DigitCaps dimensions columns (from left to right): + {−0.25, −0.2, −0.15, −0.1, −0.05, 0, 0.05, 0.1, 0.15, 0.2, 0.25} https://github.com/XifengGuo/CapsNet-Keras 40

- 125. Dimension Perturbations: Latent Codes of 8 and 9 rows: DigitCaps dimensions columns (from left to right): + {−0.25, −0.2, −0.15, −0.1, −0.05, 0, 0.05, 0.1, 0.15, 0.2, 0.25} https://github.com/XifengGuo/CapsNet-Keras 41

- 126. MultiMNIST Reconstructions (CapsNet, 3 routing iterations) R:(2, 7) R:(6, 0) R:(6, 8) R:(7, 1) L:(2, 7) L:(6, 0) L:(6, 8) L:(7, 1) [Sabour, Frosst, and Hinton 2017] 42

- 127. MultiMNIST Reconstructions (CapsNet, 3 routing iterations) R:(2, 7) R:(6, 0) R:(6, 8) R:(7, 1) L:(2, 7) L:(6, 0) L:(6, 8) L:(7, 1) *R:(5, 7) *R:(2, 3) R:(2, 8) R:P:(2, 7) L:(5, 0) L:(4, 3) L:(2, 8) L:(2, 8) [Sabour, Frosst, and Hinton 2017] 42

- 128. MultiMNIST Reconstructions (CapsNet, 3 routing iterations) R:(8, 7) R:(9, 4) R:(9, 5) R:(8, 4) L:(8, 7) L:(9, 4) L:(9, 5) L:(8, 4) [Sabour, Frosst, and Hinton 2017] 43

- 129. MultiMNIST Reconstructions (CapsNet, 3 routing iterations) R:(8, 7) R:(9, 4) R:(9, 5) R:(8, 4) L:(8, 7) L:(9, 4) L:(9, 5) L:(8, 4) *R:(0, 8) *R:(1, 6) R:(4, 9) R:P:(4, 0) L:(1, 8) L:(7, 6) L:(4, 9) L:(4, 9) [Sabour, Frosst, and Hinton 2017] 43

- 130. Results on MNIST and MultiMNIST CapsNet classification test accuracy: [Sabour, Frosst, and Hinton 2017] 44

- 131. Results on MNIST and MultiMNIST CapsNet classification test accuracy: Method Routing Reconstruction MNIST (%) MultiMNIST (%) Baseline - - 0.39 8.1 CapsNet 1 no 0.34±0.032 - CapsNet 1 yes 0.29±0.011 7.5 CapsNet 3 no 0.35±0.036 - CapsNet 3 yes 0.25±0.005 5.2 (The MNIST average and standard deviation results are reported from 3 trials.) [Sabour, Frosst, and Hinton 2017] 44

- 132. Results on Other Datasets CIFAR10 10.6% test error [Sabour, Frosst, and Hinton 2017] 45

- 133. Results on Other Datasets CIFAR10 10.6% test error ensemble of 7 models [Sabour, Frosst, and Hinton 2017] 45

- 134. Results on Other Datasets CIFAR10 10.6% test error ensemble of 7 models 3 routing iterations [Sabour, Frosst, and Hinton 2017] 45

- 135. Results on Other Datasets CIFAR10 10.6% test error ensemble of 7 models 3 routing iterations 24 × 24 patches of the image [Sabour, Frosst, and Hinton 2017] 45

- 136. Results on Other Datasets CIFAR10 10.6% test error ensemble of 7 models 3 routing iterations 24 × 24 patches of the image about what standard convolutional nets achieved when they were first applied to CIFAR10 (Zeiler and Fergus [2013]) [Sabour, Frosst, and Hinton 2017] 45

- 137. CapsuleGAN

- 138. Loss Function of CapsuleGAN min G max D V (D, G) = [Jaiswal, AbdAlmageed, and Natarajan 2018] 46

- 139. Loss Function of CapsuleGAN min G max D V (D, G) = Ex∼pdata(x) [−LM(D(x), T = 1)] [Jaiswal, AbdAlmageed, and Natarajan 2018] 46

- 140. Loss Function of CapsuleGAN min G max D V (D, G) = Ex∼pdata(x) [−LM(D(x), T = 1)] + Ez∼pz (z) [−LM(D(G(z)), T = 0)] [Jaiswal, AbdAlmageed, and Natarajan 2018] 46

- 141. Loss Function of CapsuleGAN min G max D V (D, G) = Ex∼pdata(x) [−LM(D(x), T = 1)] + Ez∼pz (z) [−LM(D(G(z)), T = 0)] [Jaiswal, AbdAlmageed, and Natarajan 2018] 46

- 142. Loss Function of CapsuleGAN min G max D V (D, G) = Ex∼pdata(x) [−LM(D(x), T = 1)] + Ez∼pz (z) [−LM(D(G(z)), T = 0)] In practice: [Jaiswal, AbdAlmageed, and Natarajan 2018] 46

- 143. Loss Function of CapsuleGAN min G max D V (D, G) = Ex∼pdata(x) [−LM(D(x), T = 1)] + Ez∼pz (z) [−LM(D(G(z)), T = 0)] In practice: generator trained to minimize LM(D(G(z)), T = 1) rather than −LM(D(G(z)), T = 0), [Jaiswal, AbdAlmageed, and Natarajan 2018] 46

- 144. Loss Function of CapsuleGAN min G max D V (D, G) = Ex∼pdata(x) [−LM(D(x), T = 1)] + Ez∼pz (z) [−LM(D(G(z)), T = 0)] In practice: generator trained to minimize LM(D(G(z)), T = 1) rather than −LM(D(G(z)), T = 0), the down-weighting factor λ in LM is eliminated (no capsules in the generator). [Jaiswal, AbdAlmageed, and Natarajan 2018] 46

- 145. Randomly Generated MNIST Images [Jaiswal, AbdAlmageed, and Natarajan 2018] 47

- 146. Randomly Generated MNIST Images (a) GAN [Jaiswal, AbdAlmageed, and Natarajan 2018] 47

- 147. Randomly Generated MNIST Images (a) GAN (b) CapsuleGAN [Jaiswal, AbdAlmageed, and Natarajan 2018] 47

- 148. Randomly Generated CIFAR-10 Images [Jaiswal, AbdAlmageed, and Natarajan 2018] 48

- 149. Randomly Generated CIFAR-10 Images (a) GAN [Jaiswal, AbdAlmageed, and Natarajan 2018] 48

- 150. Randomly Generated CIFAR-10 Images (a) GAN (b) CapsuleGAN [Jaiswal, AbdAlmageed, and Natarajan 2018] 48

- 151. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 152. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 153. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) each generator against the opponent’s discriminator: [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 154. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) each generator against the opponent’s discriminator: G1 against D2 [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 155. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) each generator against the opponent’s discriminator: G1 against D2 G2 against D1 [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 156. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) each generator against the opponent’s discriminator: G1 against D2 G2 against D1 classification accuracies rather than errors (numerical problems) [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 157. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) each generator against the opponent’s discriminator: G1 against D2 G2 against D1 classification accuracies rather than errors (numerical problems) [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 158. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) each generator against the opponent’s discriminator: G1 against D2 G2 against D1 classification accuracies rather than errors (numerical problems) rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 159. Generative Adversarial Metrics [Im et al. 2016] https://github.com/jiwoongim/GRAN/battle.py pairwise comparison between 2 GAN models M1 = (G1, D1) and M2 = (G2, D2) each generator against the opponent’s discriminator: G1 against D2 G2 against D1 classification accuracies rather than errors (numerical problems) rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) [Jaiswal, AbdAlmageed, and Natarajan 2018] 49

- 160. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 161. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 162. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 163. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 164. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 165. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 on CIFAR-10: rsamples = 1.0 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 166. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 on CIFAR-10: rsamples = 1.0 rtest 1 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 167. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 on CIFAR-10: rsamples = 1.0 rtest 1 on MNIST: rtest = 1 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 168. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 on CIFAR-10: rsamples = 1.0 rtest 1 on MNIST: rtest = 1 on CIFAR-10: rtest = 0.72 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 169. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 on CIFAR-10: rsamples = 1.0 rtest 1 on MNIST: rtest = 1 on CIFAR-10: rtest = 0.72 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 170. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 on CIFAR-10: rsamples = 1.0 rtest 1 on MNIST: rtest = 1 on CIFAR-10: rtest = 0.72 ⇒ CapsuleGAN > GAN on MNIST [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 171. Generative Adversarial Metrics [Im et al. 2016] (standard) convolutional GAN vs. CapsuleGAN rsamples = A(DGAN(GCapsuleGAN(z))) A(DCapsuleGAN(GGAN(z))) rtest = A(DGAN(xtest)) A(DCapsuleGAN(xtest)) CapsuleGAN is winning against GAN when: rsamples < 1 on MNIST: rsamples = 0.79 on CIFAR-10: rsamples = 1.0 rtest 1 on MNIST: rtest = 1 on CIFAR-10: rtest = 0.72 ⇒ CapsuleGAN > GAN on MNIST and comparable on CIFAR-10 [Jaiswal, AbdAlmageed, and Natarajan 2018] 50

- 172. Semi-Supervised Classification: MNIST Model Error Rate n = 100 n = 1,000 n = 10,000 [Jaiswal, AbdAlmageed, and Natarajan 2018] 51

- 173. Semi-Supervised Classification: MNIST Model Error Rate n = 100 n = 1,000 n = 10,000 GAN 0.2900 0.1539 0.0702 [Jaiswal, AbdAlmageed, and Natarajan 2018] 51

- 174. Semi-Supervised Classification: MNIST Model Error Rate n = 100 n = 1,000 n = 10,000 GAN 0.2900 0.1539 0.0702 CapsuleGAN 0.2724 0.1142 0.0531 [Jaiswal, AbdAlmageed, and Natarajan 2018] 51

- 175. Semi-Supervised Classification: CIFAR-10 Model Error Rate n = 100 n = 1,000 n = 10,000 [Jaiswal, AbdAlmageed, and Natarajan 2018] 52

- 176. Semi-Supervised Classification: CIFAR-10 Model Error Rate n = 100 n = 1,000 n = 10,000 GAN 0.8305 0.7587 0.7209 [Jaiswal, AbdAlmageed, and Natarajan 2018] 52

- 177. Semi-Supervised Classification: CIFAR-10 Model Error Rate n = 100 n = 1,000 n = 10,000 GAN 0.8305 0.7587 0.7209 CapsuleGAN 0.7983 0.7496 0.7102 [Jaiswal, AbdAlmageed, and Natarajan 2018] 52

- 178. Conclusion

- 180. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 181. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 182. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 183. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 184. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm one of the reasons: computers not powerful enough in the pre-GPU-based era https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 185. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm one of the reasons: computers not powerful enough in the pre-GPU-based era capable of learning to achieve state-of-the art performance by only using a fraction of the data compared to CNN https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 186. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm one of the reasons: computers not powerful enough in the pre-GPU-based era capable of learning to achieve state-of-the art performance by only using a fraction of the data compared to CNN task of telling digits apart: the human brain needs a couple of dozens of examples (hundreds at most). https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 187. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm one of the reasons: computers not powerful enough in the pre-GPU-based era capable of learning to achieve state-of-the art performance by only using a fraction of the data compared to CNN task of telling digits apart: the human brain needs a couple of dozens of examples (hundreds at most). On the other hand, CNNs typically need tens of thousands of them. https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 188. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm one of the reasons: computers not powerful enough in the pre-GPU-based era capable of learning to achieve state-of-the art performance by only using a fraction of the data compared to CNN task of telling digits apart: the human brain needs a couple of dozens of examples (hundreds at most). On the other hand, CNNs typically need tens of thousands of them. https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 189. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm one of the reasons: computers not powerful enough in the pre-GPU-based era capable of learning to achieve state-of-the art performance by only using a fraction of the data compared to CNN task of telling digits apart: the human brain needs a couple of dozens of examples (hundreds at most). On the other hand, CNNs typically need tens of thousands of them. Downsides: https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 190. Capsules Benefits: a new building block usable in deep learning to better model hierarchical relationships representations similar to scene graphs in computer graphics no algorithm to implement and train a capsule network: now dynamic routing algorithm one of the reasons: computers not powerful enough in the pre-GPU-based era capable of learning to achieve state-of-the art performance by only using a fraction of the data compared to CNN task of telling digits apart: the human brain needs a couple of dozens of examples (hundreds at most). On the other hand, CNNs typically need tens of thousands of them. Downsides: current implementations: much slower than other modern deep learning models https://medium.com/ai-theory-practice-business/ understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b 53

- 191. CapsuleGAN In summary: [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 192. CapsuleGAN In summary: active GAN research: [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 193. CapsuleGAN In summary: active GAN research: easier and more stable training [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 194. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 195. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 196. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 197. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 198. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 199. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 200. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 201. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: according to the generative adversarial metric [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 202. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: according to the generative adversarial metric at semi-supervised classification [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 203. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: according to the generative adversarial metric at semi-supervised classification with a large number of unlabeled generated images [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 204. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: according to the generative adversarial metric at semi-supervised classification with a large number of unlabeled generated images and a small number of real labeled ones [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 205. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: according to the generative adversarial metric at semi-supervised classification with a large number of unlabeled generated images and a small number of real labeled ones on MNIST and CIFAR-10 datasets [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 206. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: according to the generative adversarial metric at semi-supervised classification with a large number of unlabeled generated images and a small number of real labeled ones on MNIST and CIFAR-10 datasets [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 207. CapsuleGAN In summary: active GAN research: easier and more stable training improving GAN itself CapsuleGAN: a GAN variant CapsNets instead of CNNs as discriminators design guidelines updated objective function CapsuleGANs outperform convolutional GANs: according to the generative adversarial metric at semi-supervised classification with a large number of unlabeled generated images and a small number of real labeled ones on MNIST and CIFAR-10 datasets [Jaiswal, AbdAlmageed, and Natarajan 2018] 54

- 208. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 209. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 210. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 211. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 212. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules Future work: [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 213. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules Future work: theoretical analysis of the margin loss in the GAN objective [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 214. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules Future work: theoretical analysis of the margin loss in the GAN objective incorporate GAN training tricks [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 215. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules Future work: theoretical analysis of the margin loss in the GAN objective incorporate GAN training tricks CapsNets for encoders (in GAN variants like BiGAN) [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 216. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules Future work: theoretical analysis of the margin loss in the GAN objective incorporate GAN training tricks CapsNets for encoders (in GAN variants like BiGAN) [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 217. CapsuleGAN CapsNets as potential alternatives to CNNs in future GAN models: for designing discriminators for other inference modules Future work: theoretical analysis of the margin loss in the GAN objective incorporate GAN training tricks CapsNets for encoders (in GAN variants like BiGAN) [Jaiswal, AbdAlmageed, and Natarajan 2018] 55

- 219. Backup Slides

- 220. Results on Other Datasets smallNORB (LeCun et al. [2004]) 2.7% test error [Sabour, Frosst, and Hinton 2017]

- 221. Results on Other Datasets smallNORB (LeCun et al. [2004]) 2.7% test error 96 × 96 stereo grey-scale images resized to 48 × 48; during training processed random 32 × 32 crops; during test the central 32 × 32 patch [Sabour, Frosst, and Hinton 2017]

- 222. Results on Other Datasets smallNORB (LeCun et al. [2004]) 2.7% test error 96 × 96 stereo grey-scale images resized to 48 × 48; during training processed random 32 × 32 crops; during test the central 32 × 32 patch same CapsNet architecture as for MNIST [Sabour, Frosst, and Hinton 2017]

- 223. Results on Other Datasets smallNORB (LeCun et al. [2004]) 2.7% test error 96 × 96 stereo grey-scale images resized to 48 × 48; during training processed random 32 × 32 crops; during test the central 32 × 32 patch same CapsNet architecture as for MNIST on-par with the state-of-the-art (Ciresan et al. [2011]) [Sabour, Frosst, and Hinton 2017]

- 224. Results on Other Datasets SVHN (Netzer et al. [2011]) 4.3% test error [Sabour, Frosst, and Hinton 2017]

- 225. Results on Other Datasets SVHN (Netzer et al. [2011]) 4.3% test error only 73257 images! [Sabour, Frosst, and Hinton 2017]

- 226. Results on Other Datasets SVHN (Netzer et al. [2011]) 4.3% test error only 73257 images! the number of first convolutional layer channels reduced to 64 [Sabour, Frosst, and Hinton 2017]

- 227. Results on Other Datasets SVHN (Netzer et al. [2011]) 4.3% test error only 73257 images! the number of first convolutional layer channels reduced to 64 the primary capsule layer: to 16 6D-capsules [Sabour, Frosst, and Hinton 2017]

- 228. Results on Other Datasets SVHN (Netzer et al. [2011]) 4.3% test error only 73257 images! the number of first convolutional layer channels reduced to 64 the primary capsule layer: to 16 6D-capsules final capsule layer: 8D-capsules [Sabour, Frosst, and Hinton 2017]

- 229. Further Reading i

- 230. References i Hinton, Geoffrey E, Alex Krizhevsky, and Sida D Wang (2011). “Transforming Auto-Encoders”. In: International Conference on Artificial Neural Networks. Springer, pp. 44–51. Im, Daniel Jiwoong et al. (2016). Generative Adversarial Metric. Jaiswal, Ayush, Wael AbdAlmageed, and Premkumar Natarajan (2018). “CapsuleGAN: Generative Adversarial Capsule Network”. In: arXiv preprint arXiv:1802.06167. Sabour, Sara, Nicholas Frosst, and Geoffrey E Hinton (2017). “Dynamic Routing Between Capsules”. In: Advances in Neural Information Processing Systems, pp. 3859–3869.