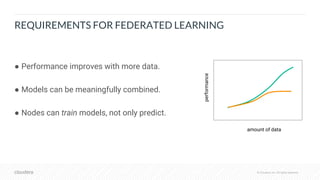

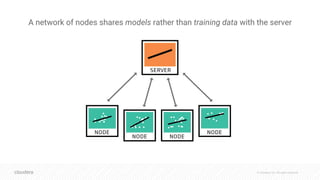

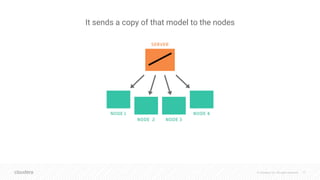

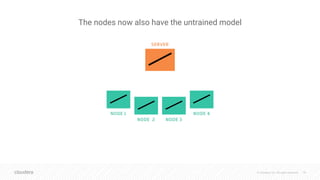

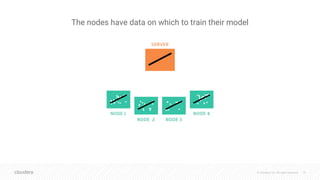

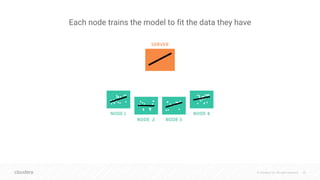

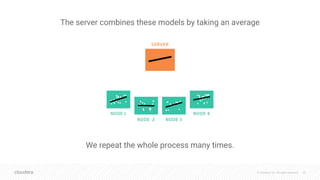

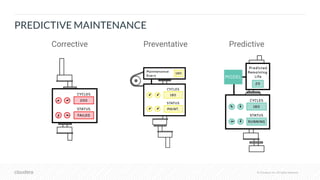

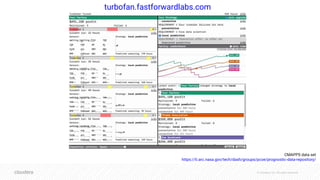

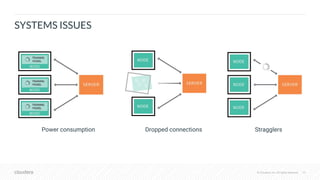

The document discusses federated learning, a decentralized approach to machine learning in which nodes train on their own data and share only model updates, not the training data itself, which improves privacy. It outlines the benefits, challenges, and tools associated with federated learning, including the performance gains that come from training on more data and the trade-offs around privacy and communication efficiency. Use cases include predictive maintenance, healthcare, and enterprise IT applications.
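
To make the idea of sharing model updates instead of training data concrete, here is a minimal sketch in plain Python. It assumes federated averaging (a common aggregation scheme that the summary above does not name) and a toy one-dimensional linear model. The three clients, their data, and the function names `local_update`, `federated_average`, and `run_rounds` are all hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

Weights = List[float]                # [slope, intercept]
Dataset = List[Tuple[float, float]]  # (x, y) pairs that stay on the client


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 5) -> Weights:
    """Run a few gradient-descent steps on one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error for y_hat = w * x + b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Average client weights, weighted by each client's sample count."""
    total = sum(sizes)
    return [
        sum(u[i] * s for u, s in zip(updates, sizes)) / total
        for i in range(len(updates[0]))
    ]


def run_rounds(clients: List[Dataset], rounds: int = 200) -> Weights:
    """Server loop: broadcast weights, collect updated weights, average."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        # Only weights cross the network; the raw (x, y) pairs never do.
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Three hypothetical clients whose private data all follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    [(-1.0, -1.0), (0.5, 2.0)],
]
model = run_rounds(clients)
print(model)  # slope and intercept should land close to 2 and 1
```

In this sketch each round sends two floats per client, which hints at why communication efficiency matters once the model has millions of parameters. Sharing weights alone is not a privacy guarantee, so real deployments typically add protections such as secure aggregation or differential privacy.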