This document summarizes Amazon RDS for PostgreSQL, including:

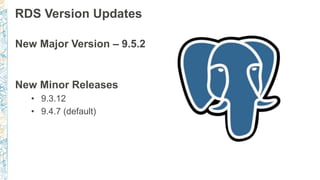

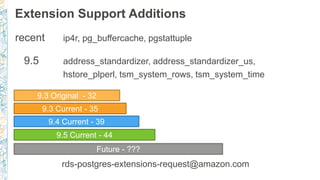

- New major and minor version releases including 9.5.2 and support for additional extensions

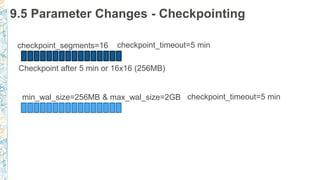

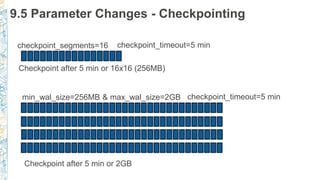

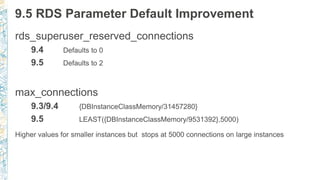

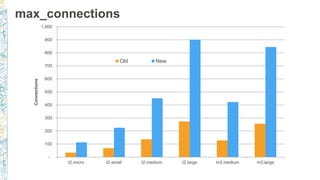

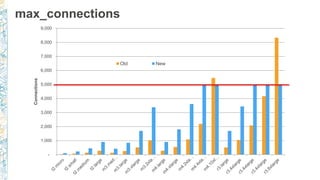

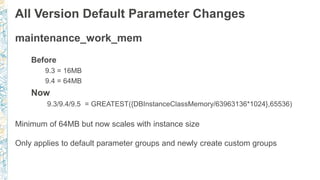

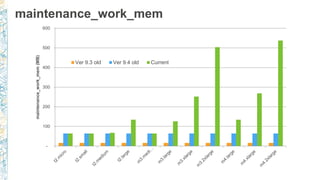

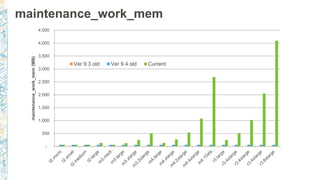

- Changes to default parameters in 9.5 including increased max_connections and maintenance_work_mem

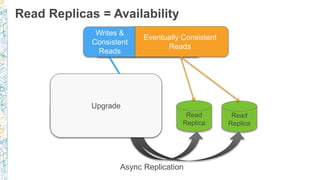

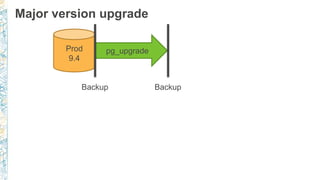

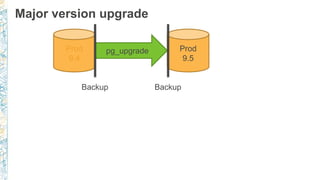

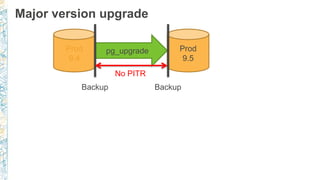

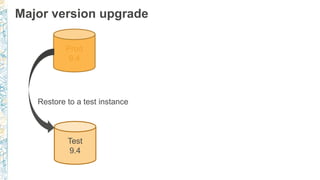

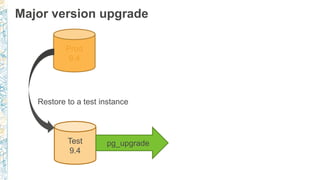

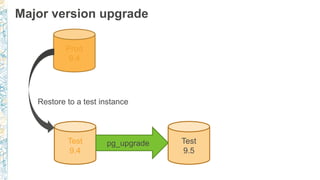

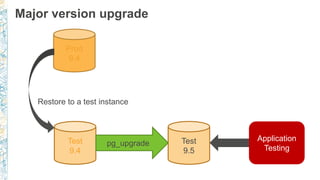

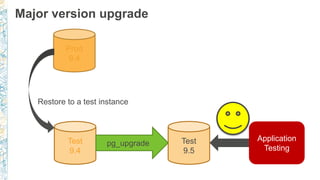

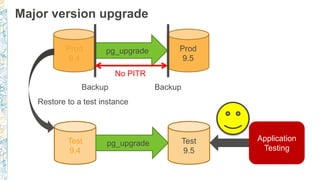

- Details on performing major version upgrades safely with pg_upgrade, and on testing them

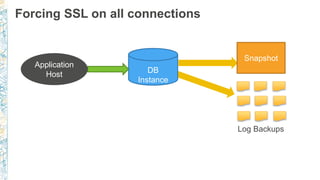

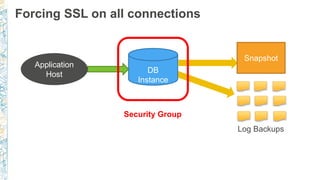

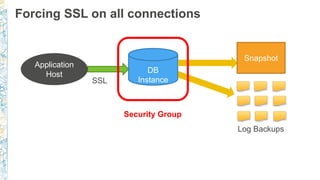

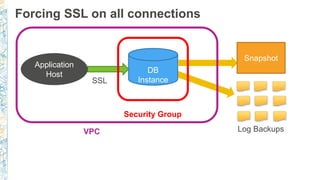

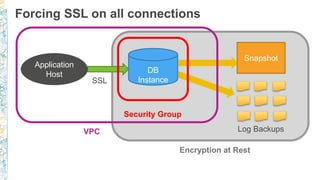

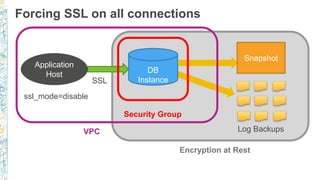

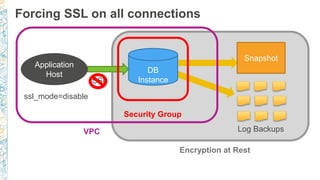

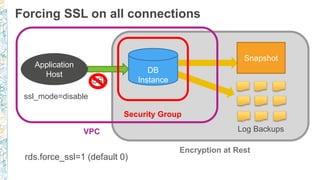

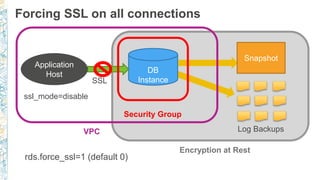

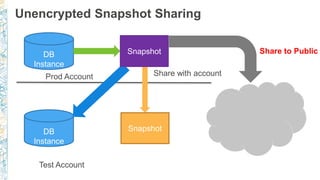

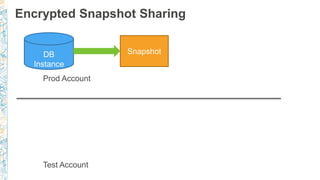

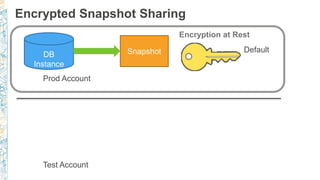

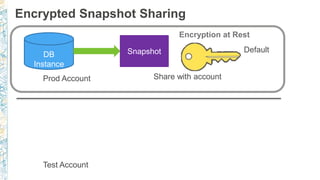

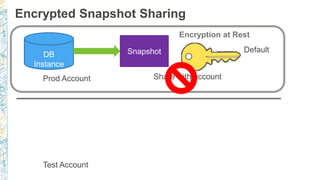

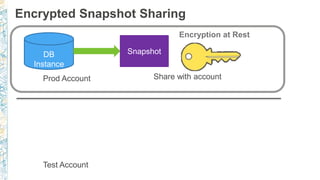

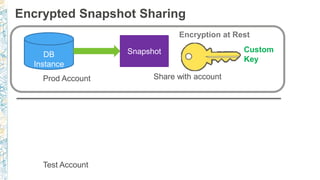

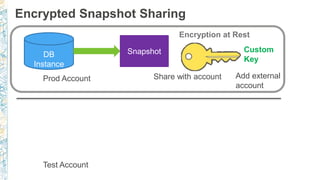

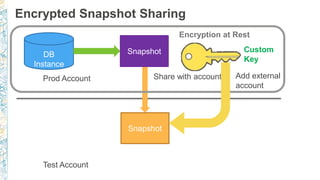

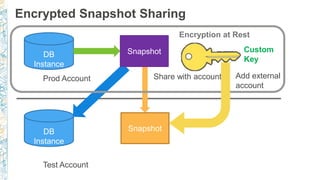

- New security features such as forcing SSL on all connections and encrypted snapshot sharing

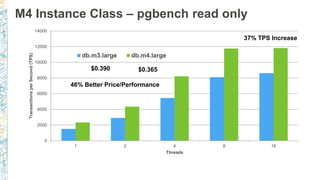

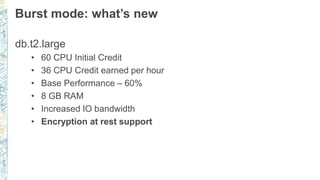

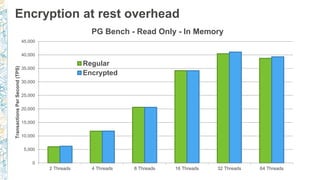

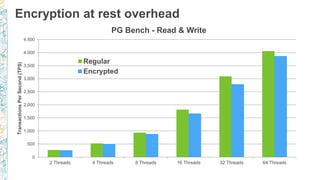

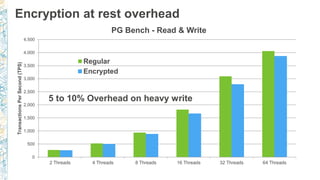

- Performance testing showing little overhead from encryption at rest

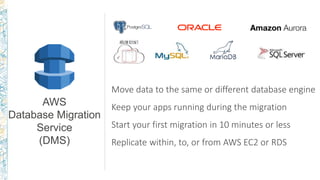

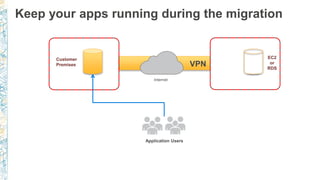

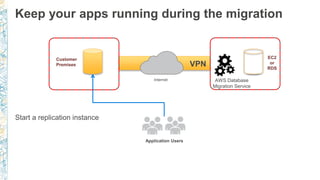

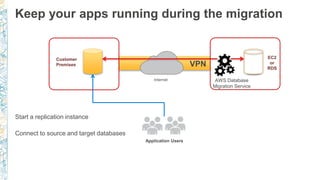

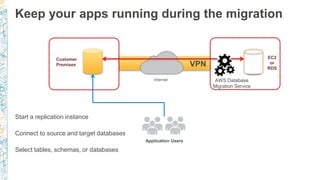

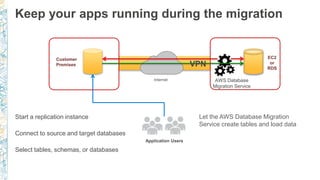

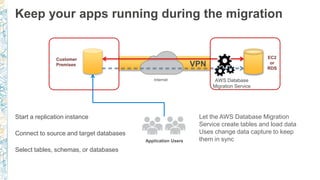

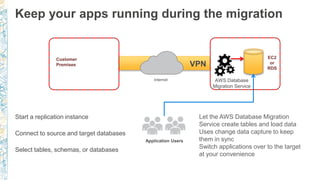

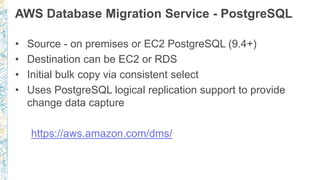

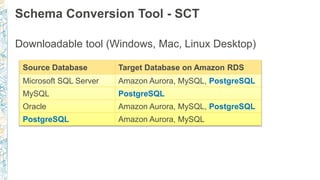

- Data migration options using the Database Migration Service

![RDS autovacuum logging (9.4.5+)](https://image.slidesharecdn.com/amazonrdspostgresqllessonslearnedanddeepdiveonnewfeaturesny2016exploded-160428210220/85/Amazon-RDS-for-PostgreSQL-PGConf-2016-67-320.jpg)

RDS autovacuum logging (9.4.5+):

log_autovacuum_min_duration = 5000 (i.e. 5 seconds)

rds.force_autovacuum_logging_level = LOG

…[14638]:ERROR: canceling autovacuum task

…[14638]:CONTEXT: automatic vacuum of table "postgres.public.pgbench_tellers"

…[14638]:LOG: skipping vacuum of "pgbench_branches" --- lock not available
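
Once autovacuum logging is enabled, messages like the ones on this slide can be picked out of a log file with a short script. The following is a minimal sketch, not part of the original presentation: the regular expression, the `autovacuum_events` helper, and the assumption that each line ends in a `[pid]:LEVEL: message` tail are all illustrative. The log prefix elided as "…" on the slide is left as-is and simply ignored by the pattern.

```python
import re
from collections import Counter

# Assumed layout: "<elided prefix>[pid]:LEVEL: message". Whatever precedes
# the bracketed PID (shown as "…" on the slide) is ignored.
LINE_RE = re.compile(r"\[(?P<pid>\d+)\]:(?P<level>[A-Z]+):\s*(?P<msg>.*)$")


def autovacuum_events(lines):
    """Yield (pid, level, message) for log lines that mention vacuum activity."""
    for line in lines:
        match = LINE_RE.search(line)
        if match and "vacuum" in match.group("msg"):
            yield int(match.group("pid")), match.group("level"), match.group("msg")


# The three log lines shown on the slide, copied verbatim.
sample = [
    '…[14638]:ERROR: canceling autovacuum task',
    '…[14638]:CONTEXT: automatic vacuum of table "postgres.public.pgbench_tellers"',
    '…[14638]:LOG: skipping vacuum of "pgbench_branches" --- lock not available',
]

events = list(autovacuum_events(sample))
levels = Counter(level for _, level, _ in events)

for pid, level, msg in events:
    print(pid, level, msg)
```

Counting events by level (`levels`) gives a quick view of how often autovacuum is being cancelled or skipped because of lock conflicts, which is the situation the slide's sample output illustrates.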