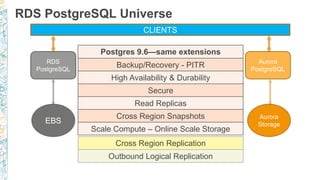

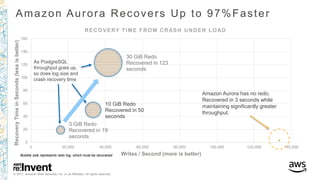

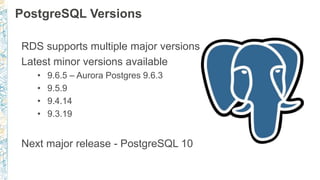

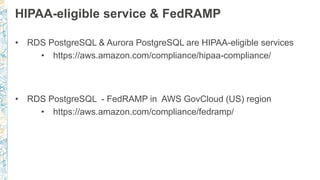

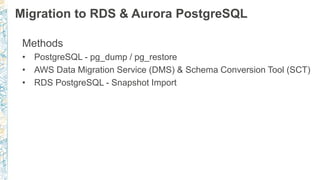

The document provides an in-depth overview of the Amazon RDS PostgreSQL and Aurora PostgreSQL services, including features such as multi-AZ replication, storage scaling, and backup/recovery options. It discusses performance metrics, recovery times, and various PostgreSQL extensions supported by RDS. Additionally, it touches on security features, compliance with HIPAA and FedRAMP, and auditing capabilities.

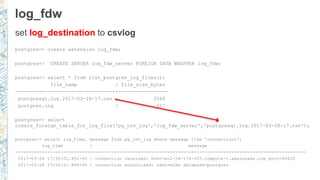

![log_fdw - continued

can be done without csv

postgres=> select create_foreign_table_for_log_file('pg_log','log_fdw_server','postgresql.log.2017-03-28-17');

postgres=> select log_entry from pg_log where log_entry like '%connection%';

log_entry

----------------------------------------------------------------------------------------------------------------------------------------------------

2017-03-28 17:50:01 UTC:ec2-54-174.compute-1.amazonaws.com(45626):[unknown]@[unknown]:[20434]:LOG: connection received: host=ec2-54-174-205..amazonaws.com

2017-03-28 17:50:01 UTC:ec2-54-174.compute-1.amazonaws.com(45626):mike@postgres:[20434]:LOG: connection authorized: user=mike database=postgres

2017-03-28 17:57:44 UTC:ec2-54-174.compute-1.amazonaws.com(45626):mike@postgres:[20434]:ERROR: column "connection" does not exist at character 143](https://image.slidesharecdn.com/deepdiveintotherdspostgresqluniverseaustin2017finalsplit-171205195950/85/Deep-dive-into-the-Rds-PostgreSQL-Universe-Austin-2017-56-320.jpg)
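
Each `log_entry` value in the sample output is one colon-separated string: timestamp, client host and port, `user@database`, process ID in brackets, severity, then the message. The following is a minimal Python sketch for splitting such a string into fields once it has been fetched from `pg_log`. It assumes the prefix layout visible in the slide output; the function name `parse_log_entry` and the regular expression are illustrative and not part of log_fdw or RDS.

```python
import re

# Assumed layout, taken from the sample rows on the slide:
#   <timestamp> <tz>:<host>(<port>):<user>@<database>:[<pid>]:<LEVEL>: <message>
# Assumption: the client host contains no ':' (an IPv6 literal would break this).
LOG_ENTRY = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2} \w+):"
    r"(?P<remote>[^:]*):"
    r"(?P<user>[^@:]*)@(?P<database>[^:]*):"
    r"\[(?P<pid>\d+)\]:"
    r"(?P<level>[A-Z]+):\s*(?P<message>.*)$"
)


def parse_log_entry(entry):
    """Return the fields of one log entry as a dict, or None if it does not match."""
    match = LOG_ENTRY.match(entry)
    if match is None:
        return None
    fields = match.groupdict()
    fields["pid"] = int(fields["pid"])  # process ID as an integer for grouping
    return fields


if __name__ == "__main__":
    sample = (
        "2017-03-28 17:50:01 UTC:ec2-54-174.compute-1.amazonaws.com(45626):"
        "mike@postgres:[20434]:LOG: connection authorized: user=mike database=postgres"
    )
    print(parse_log_entry(sample))
```

Continuation lines of multi-line messages do not carry the prefix, so the sketch returns `None` for them and leaves it to the caller to decide whether to append them to the previous entry.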

![PostgreSQL Audit : pgaudit (9.6.3+)

CREATE ROLE rds_pgaudit;

Add pgaudit to shared_preload_libraries in parameter group

SET pgaudit.role = rds_pgaudit;

CREATE EXTENSION pgaudit;

For tables to be enabled for auditing:

GRANT SELECT ON table1 TO rds_pgaudit;

Database logs will show entry as follows:

2017-06-12 19:09:49 UTC:…:pgadmin@postgres:[11701]:LOG: AUDIT: OBJECT,1,1,READ,SELECT,TABLE,public.t1,select * from t1; ...](https://image.slidesharecdn.com/deepdiveintotherdspostgresqluniverseaustin2017finalsplit-171205195950/85/Deep-dive-into-the-Rds-PostgreSQL-Universe-Austin-2017-72-320.jpg)
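
The part of the log entry after `AUDIT:` is a comma-separated record. A minimal Python sketch for turning it into named fields might look like the following. The field names follow the order described in the pgaudit documentation (audit type, statement ID, substatement ID, class, command, object type, object name, statement, parameter); the function name `parse_pgaudit_message` is hypothetical, and the sketch assumes the message has already been separated from the log line prefix.

```python
import csv

# Field order as described in the pgaudit documentation.
PGAUDIT_FIELDS = (
    "audit_type",
    "statement_id",
    "substatement_id",
    "class",
    "command",
    "object_type",
    "object_name",
    "statement",
    "parameter",
)


def parse_pgaudit_message(message):
    """Parse 'AUDIT: ...' text into a dict; return None for non-audit messages."""
    text = message.strip()
    if not text.startswith("AUDIT:"):
        return None
    payload = text[len("AUDIT:"):].strip()
    # csv handles statements that contain commas and are therefore quoted.
    values = next(csv.reader([payload]))
    # zip stops at the shorter sequence, so truncated records yield fewer keys.
    return dict(zip(PGAUDIT_FIELDS, values))


if __name__ == "__main__":
    record = parse_pgaudit_message(
        "AUDIT: OBJECT,1,1,READ,SELECT,TABLE,public.t1,select * from t1;"
    )
    print(record["object_name"], record["command"])
```

Using the `csv` module rather than `str.split(",")` is a deliberate choice here, since an audited statement can itself contain commas.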

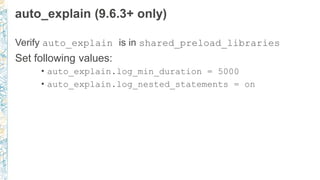

![RDS autovacuum logging (9.4.5+)

log_autovacuum_min_duration = 5000 (i.e. 5 secs)

rds.force_autovacuum_logging_level = LOG

…[14638]:ERROR: canceling autovacuum task

…[14638]:CONTEXT: automatic vacuum of table "postgres.public.pgbench_tellers"

…[14638]:LOG: skipping vacuum of "pgbench_branches" --- lock not available](https://image.slidesharecdn.com/deepdiveintotherdspostgresqluniverseaustin2017finalsplit-171205195950/85/Deep-dive-into-the-Rds-PostgreSQL-Universe-Austin-2017-123-320.jpg)
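
With autovacuum logging enabled, the log shows which tables had an autovacuum canceled and which were skipped because a lock was unavailable. A rough Python sketch for tallying both cases from a list of log lines follows. It assumes the message wording shown on the slide and pairs each `canceling autovacuum task` error with the next `CONTEXT` line from the same process ID; the function name `summarize_autovacuum_problems` is illustrative only.

```python
import re
from collections import Counter

PID = re.compile(r"\[(\d+)\]:")
CANCEL_CONTEXT = re.compile(r'CONTEXT:\s+automatic vacuum of table "([^"]+)"')
SKIPPED = re.compile(r'LOG:\s+skipping vacuum of "([^"]+)" --- lock not available')


def summarize_autovacuum_problems(lines):
    """Return (canceled, skipped) Counters keyed by table name."""
    canceled = Counter()
    skipped = Counter()
    pending = set()  # PIDs that logged a cancel and still await their CONTEXT line
    for line in lines:
        pid_match = PID.search(line)
        pid = pid_match.group(1) if pid_match else None
        if "ERROR:" in line and "canceling autovacuum task" in line:
            pending.add(pid)
            continue
        context = CANCEL_CONTEXT.search(line)
        if context and pid in pending:
            canceled[context.group(1)] += 1
            pending.discard(pid)
            continue
        skip = SKIPPED.search(line)
        if skip:
            skipped[skip.group(1)] += 1
    return canceled, skipped


if __name__ == "__main__":
    sample = [
        "…[14638]:ERROR: canceling autovacuum task",
        '…[14638]:CONTEXT: automatic vacuum of table "postgres.public.pgbench_tellers"',
        '…[14638]:LOG: skipping vacuum of "pgbench_branches" --- lock not available',
    ]
    canceled, skipped = summarize_autovacuum_problems(sample)
    print(dict(canceled), dict(skipped))
```

Tables that show up repeatedly in either tally are candidates for a closer look at conflicting locks or long-running transactions.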