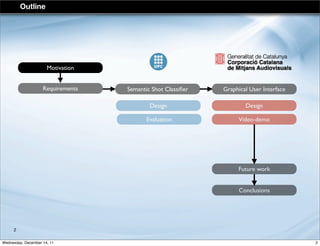

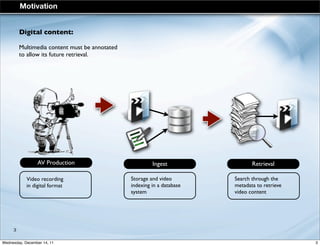

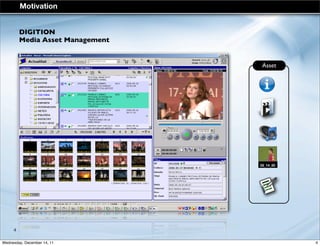

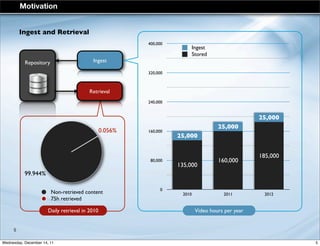

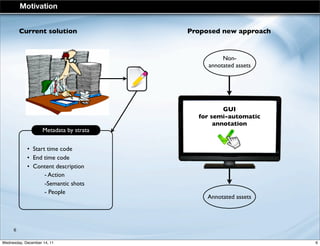

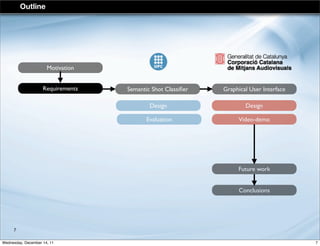

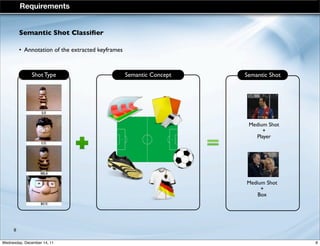

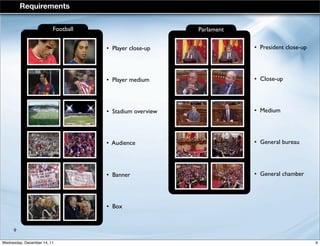

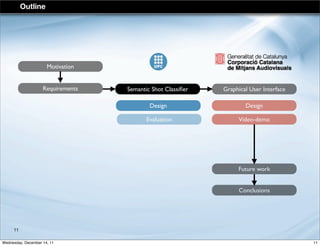

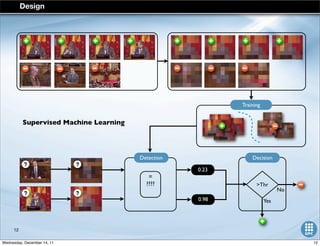

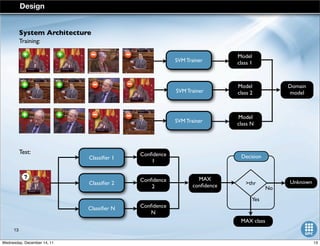

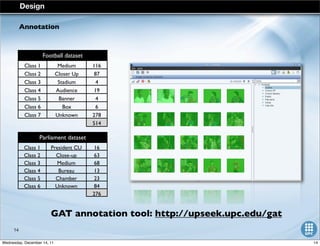

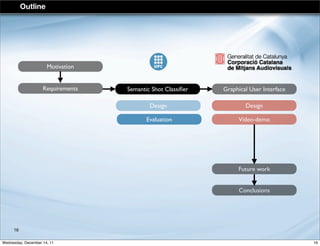

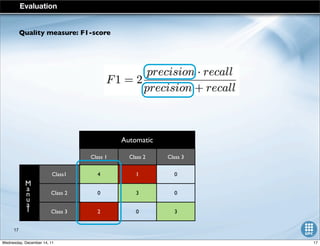

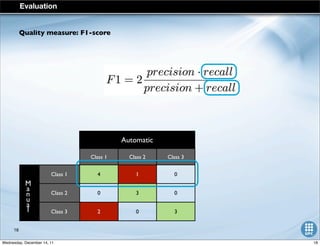

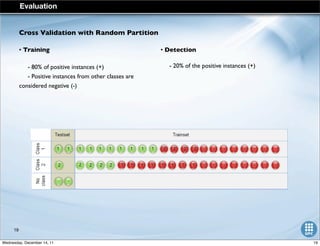

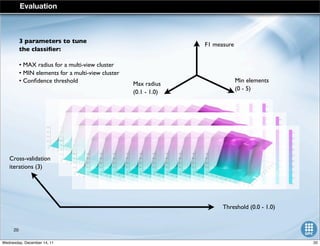

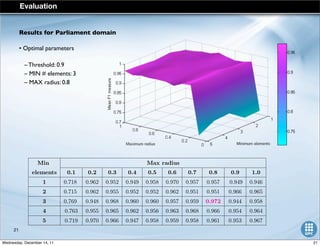

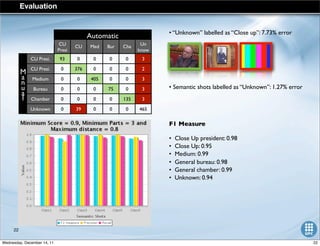

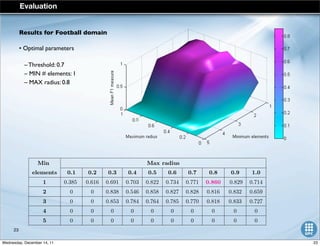

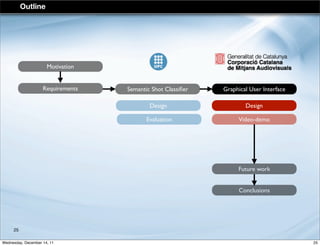

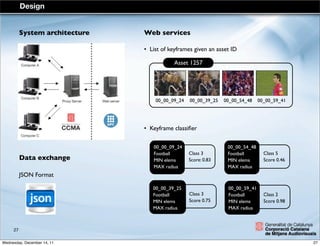

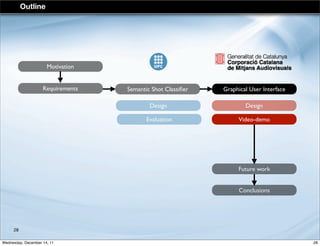

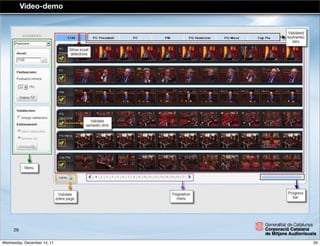

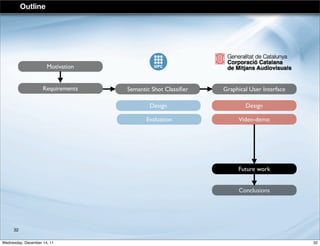

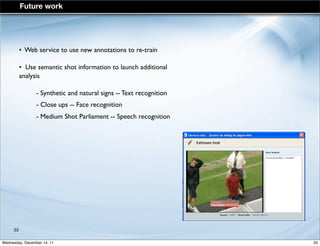

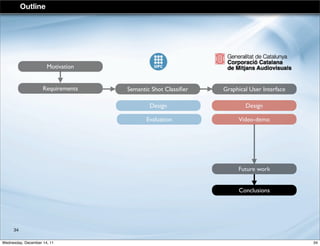

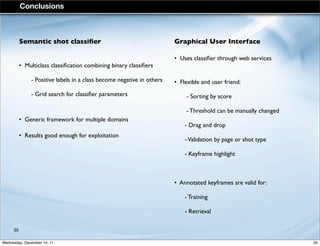

The document presents a rich internet application for semi-automatic annotation of semantic shots in keyframes, covering its motivation, design, and evaluation methods. The semantic shot classifier uses supervised machine learning to label multimedia content, with the aim of improving digital content retrieval. Future work involves extending the system to other kinds of analysis, including face and speech recognition.