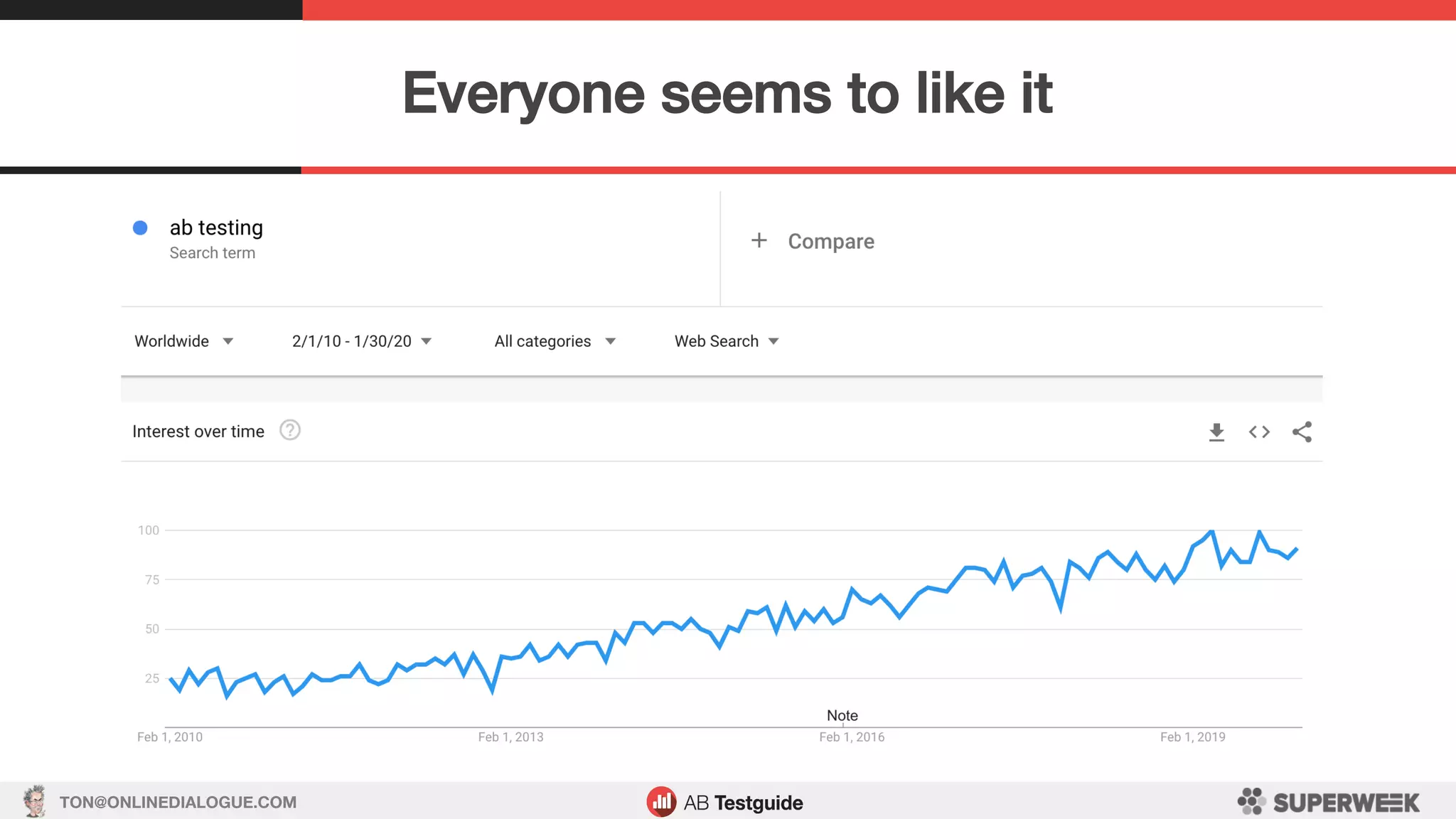

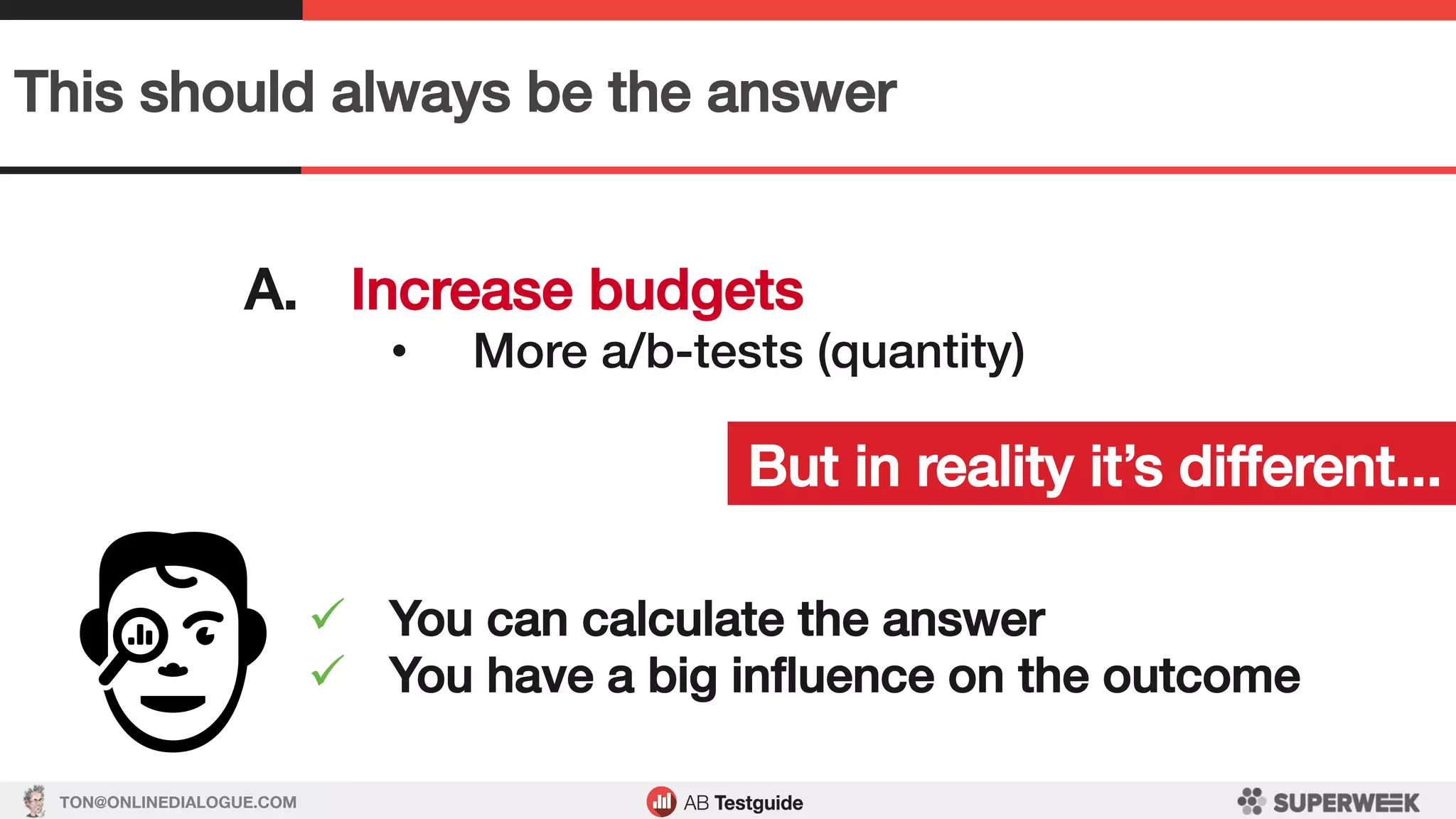

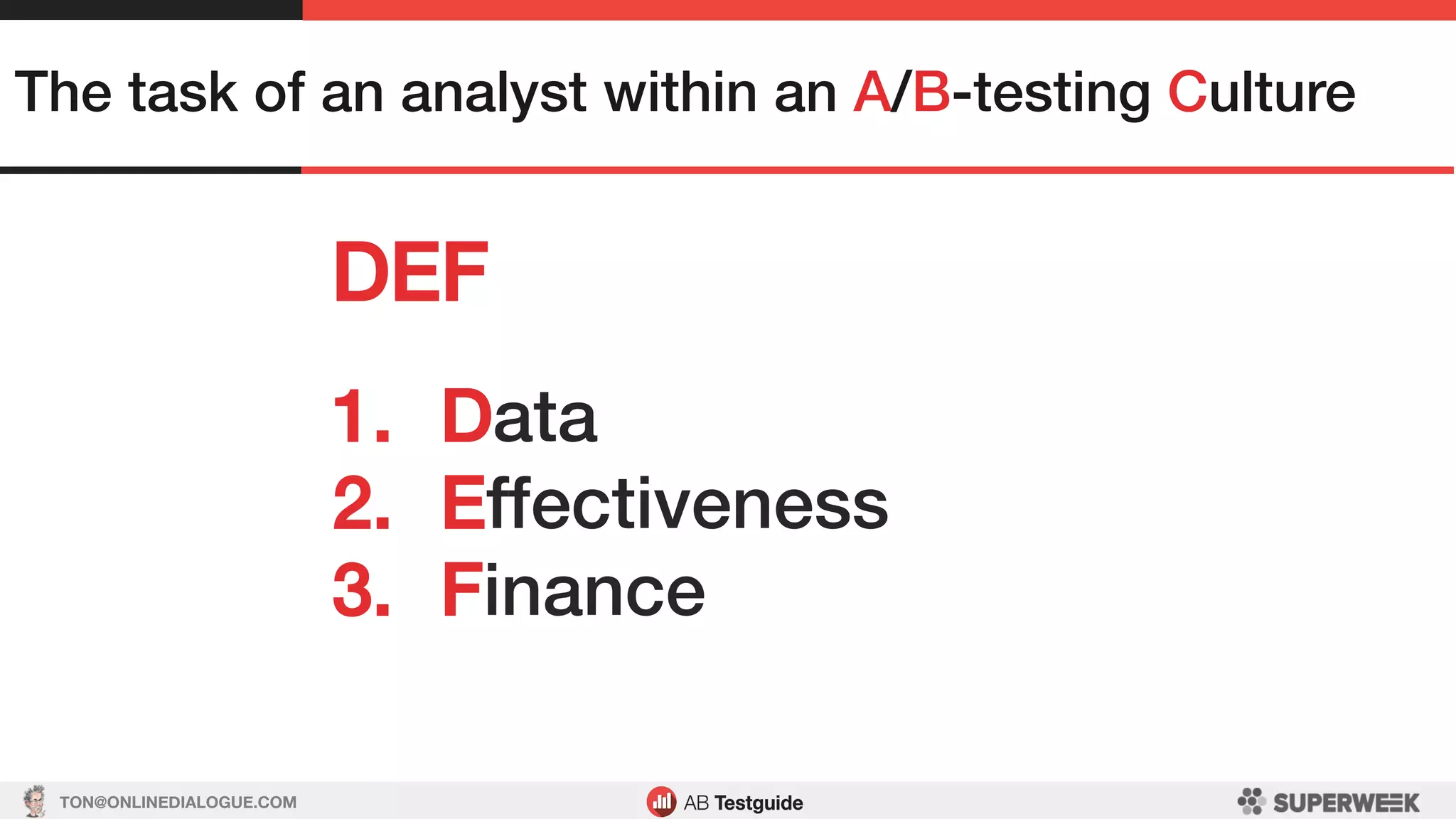

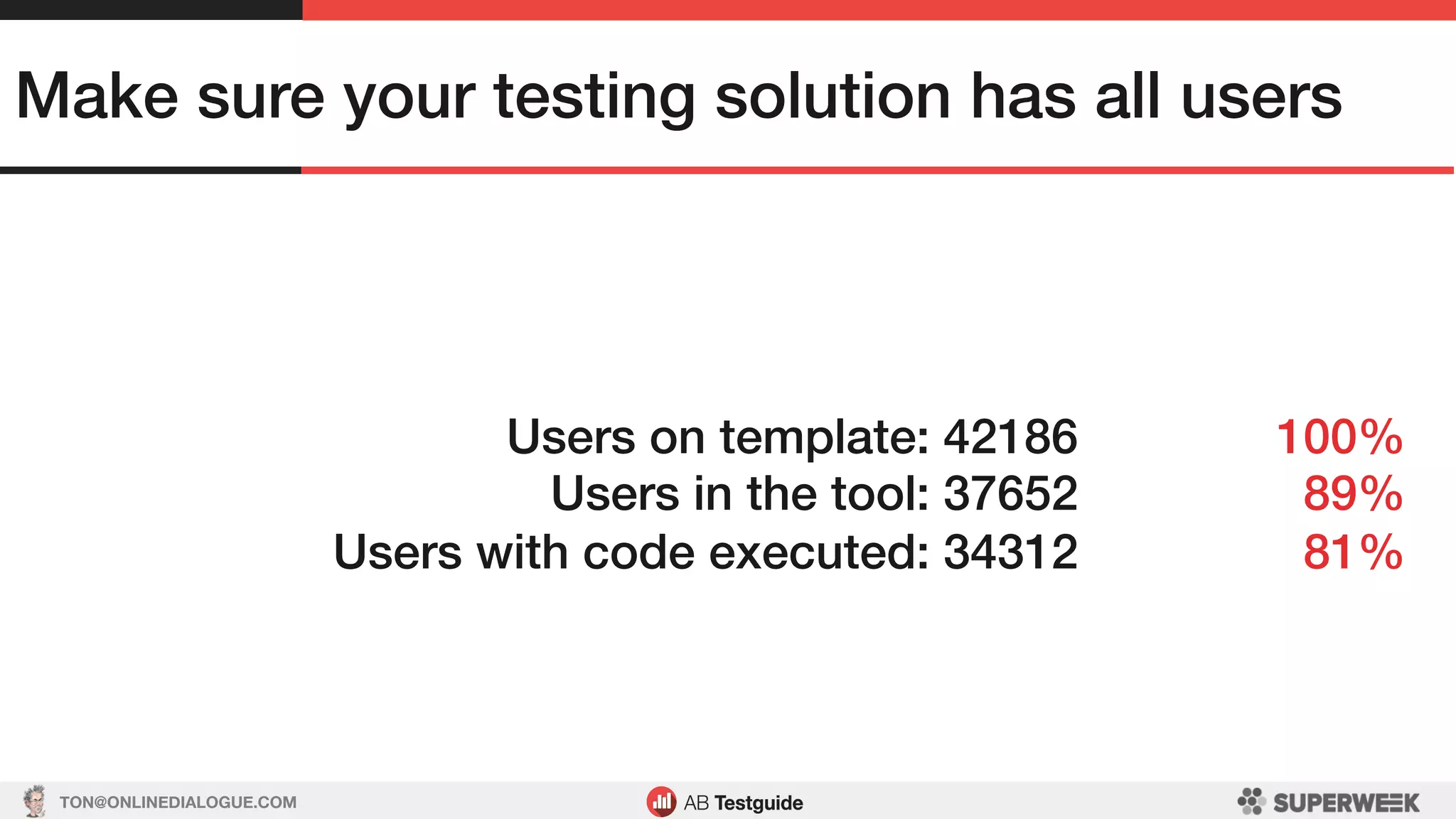

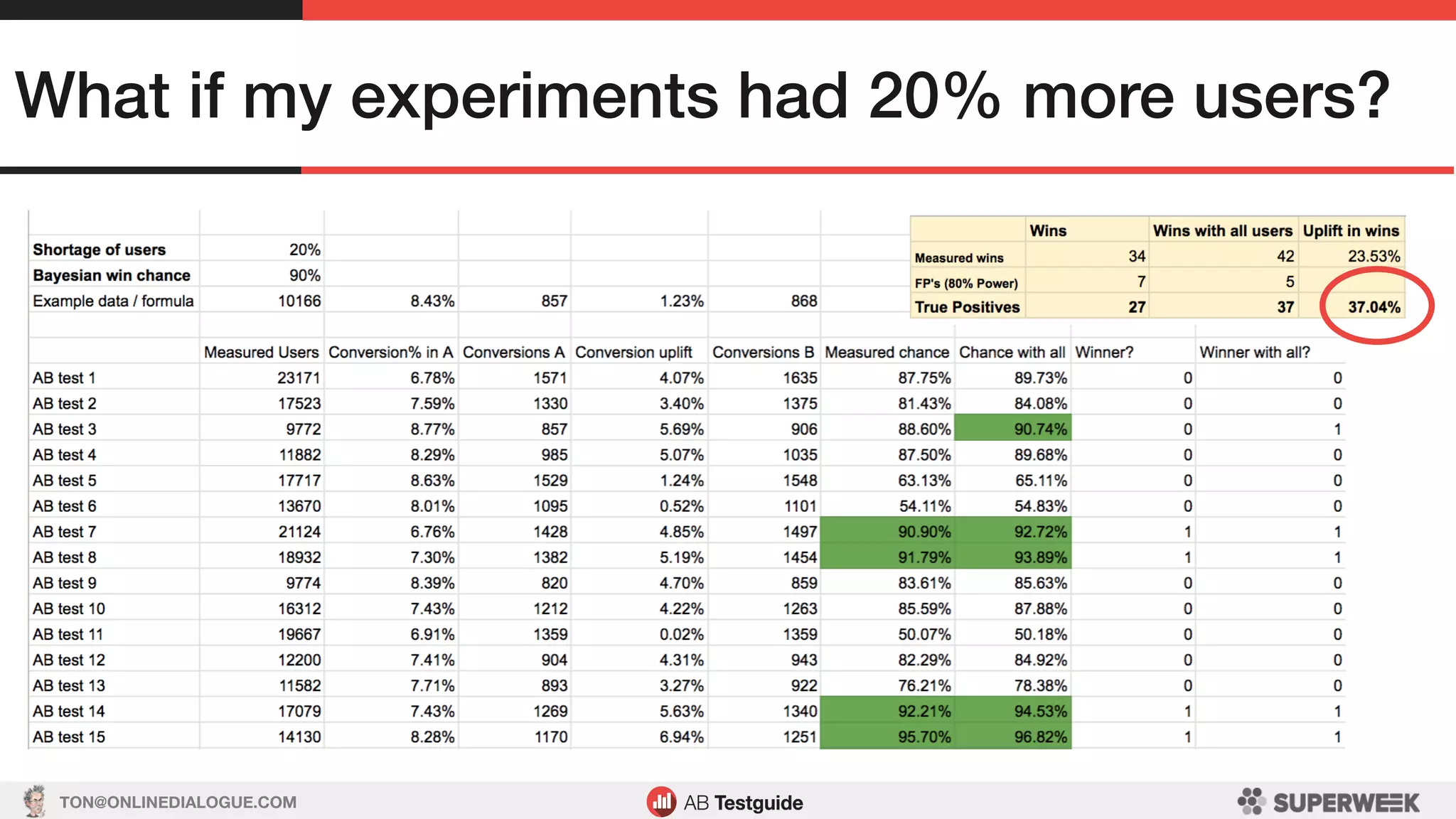

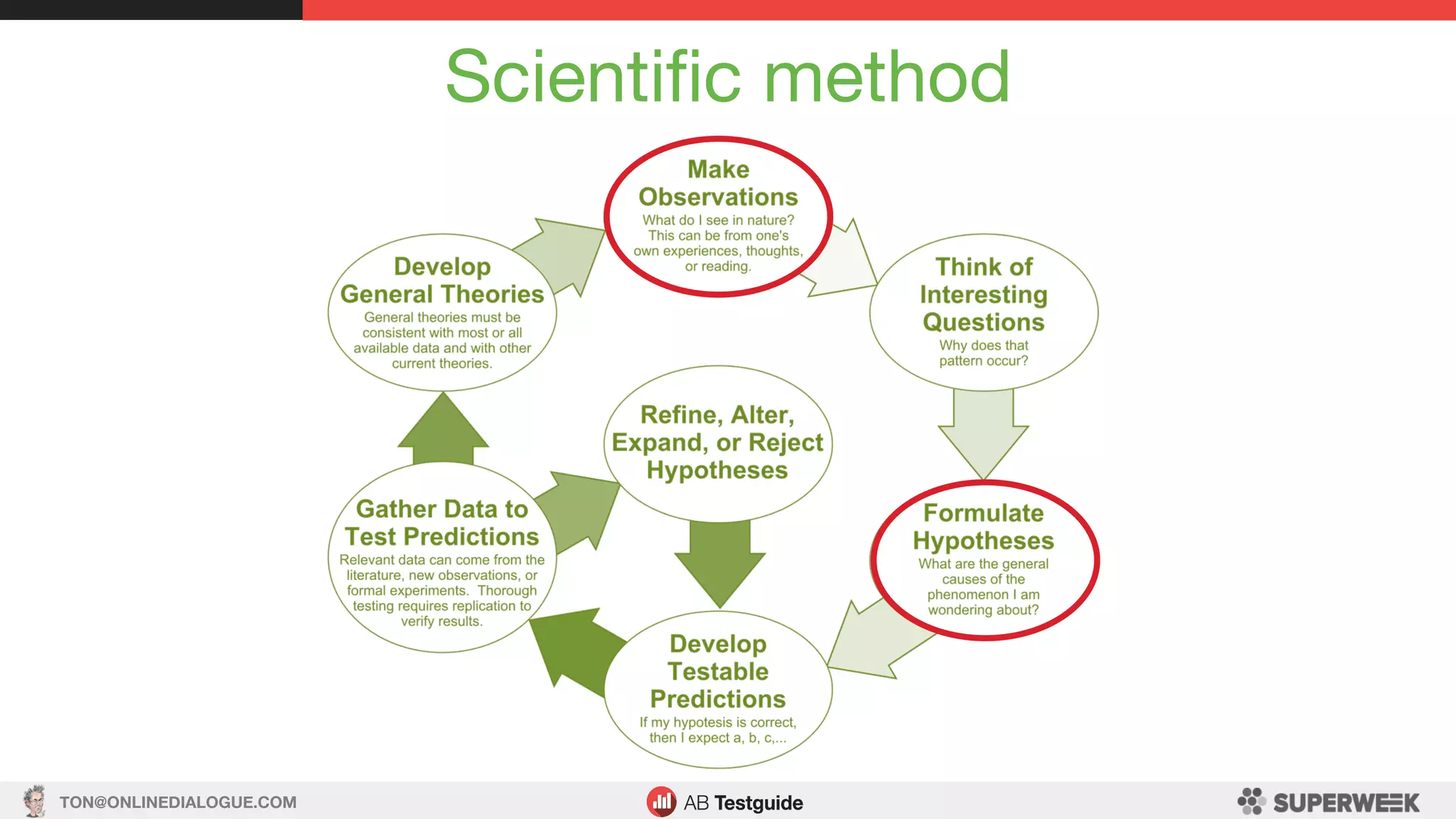

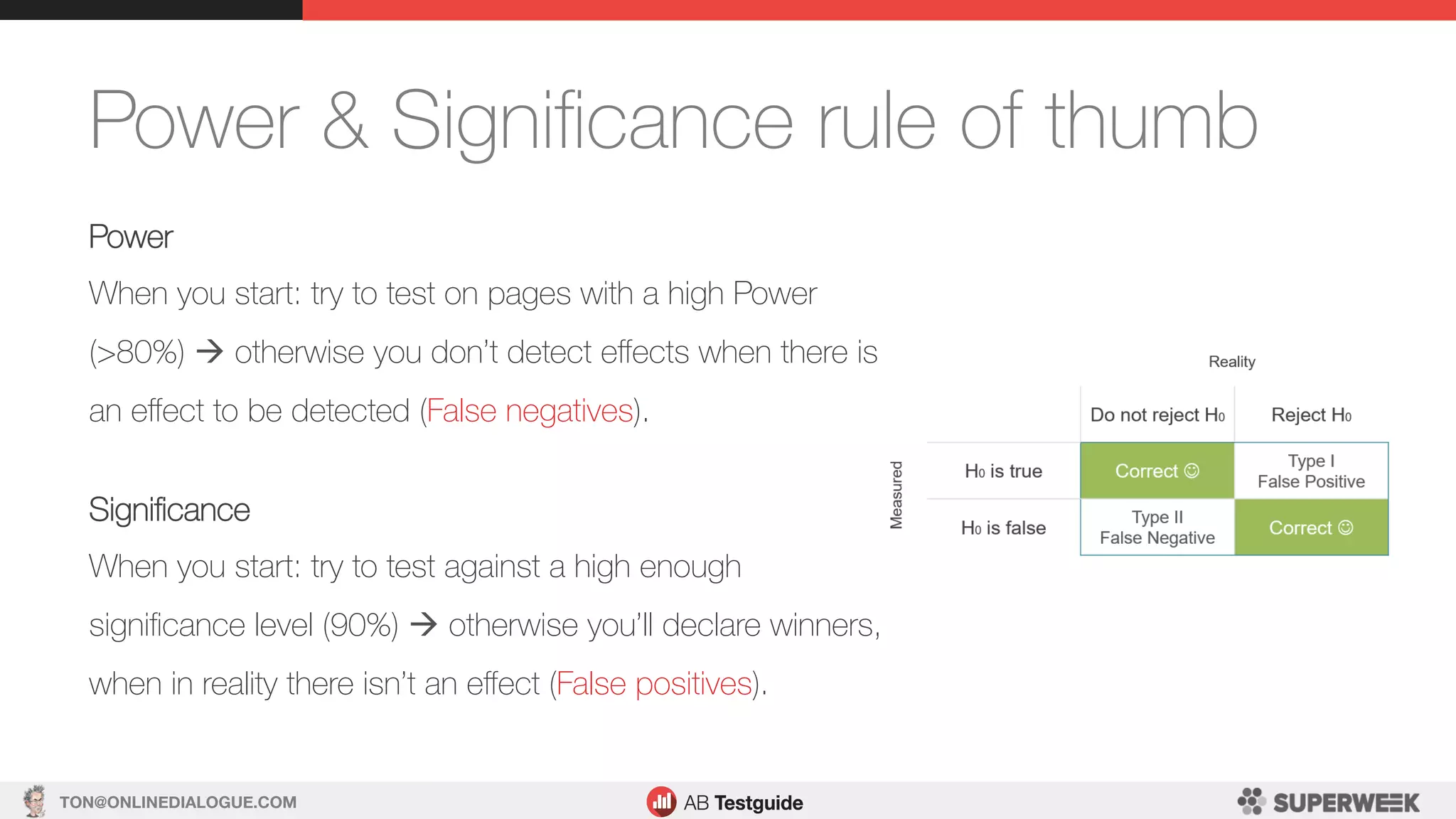

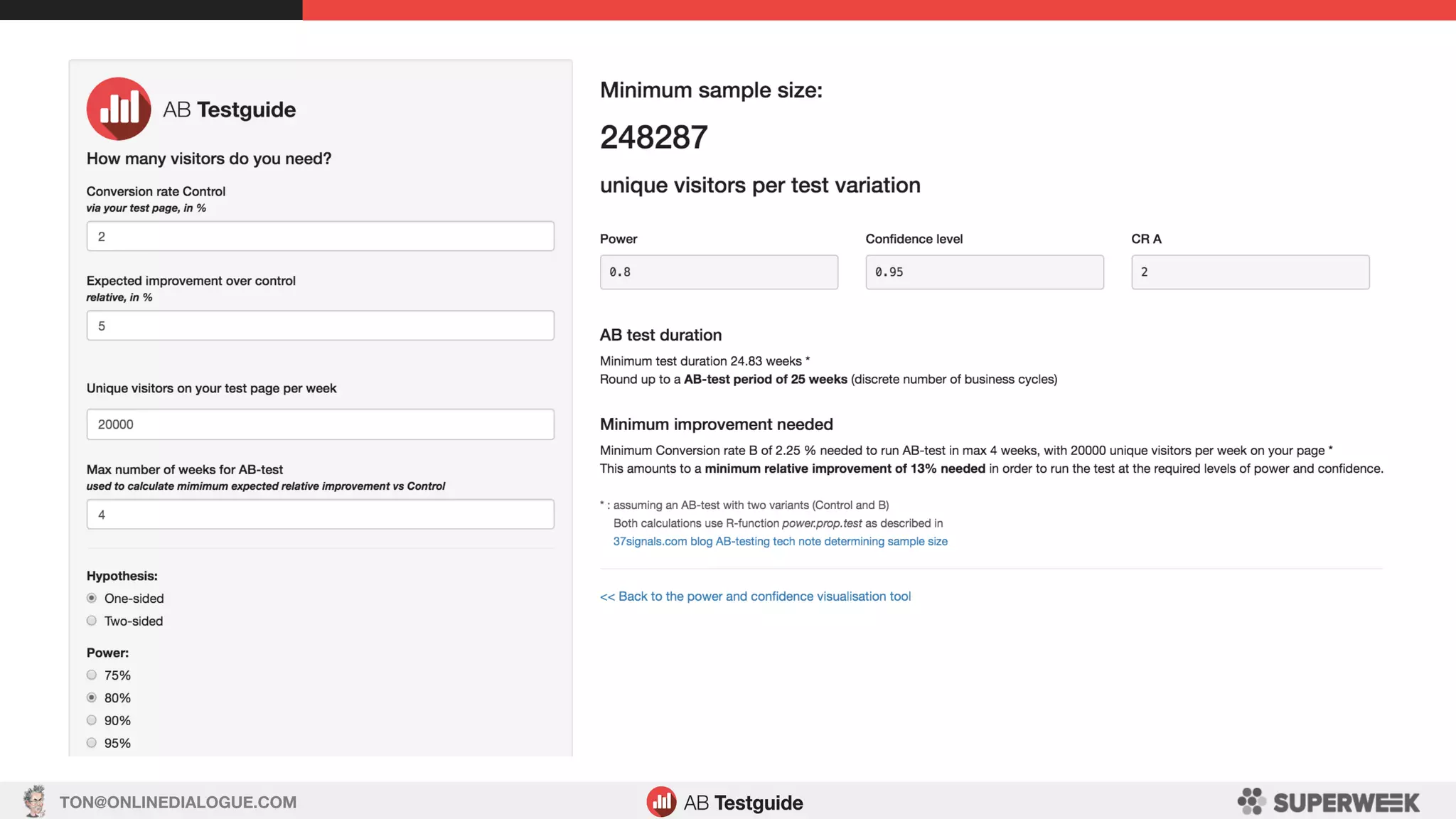

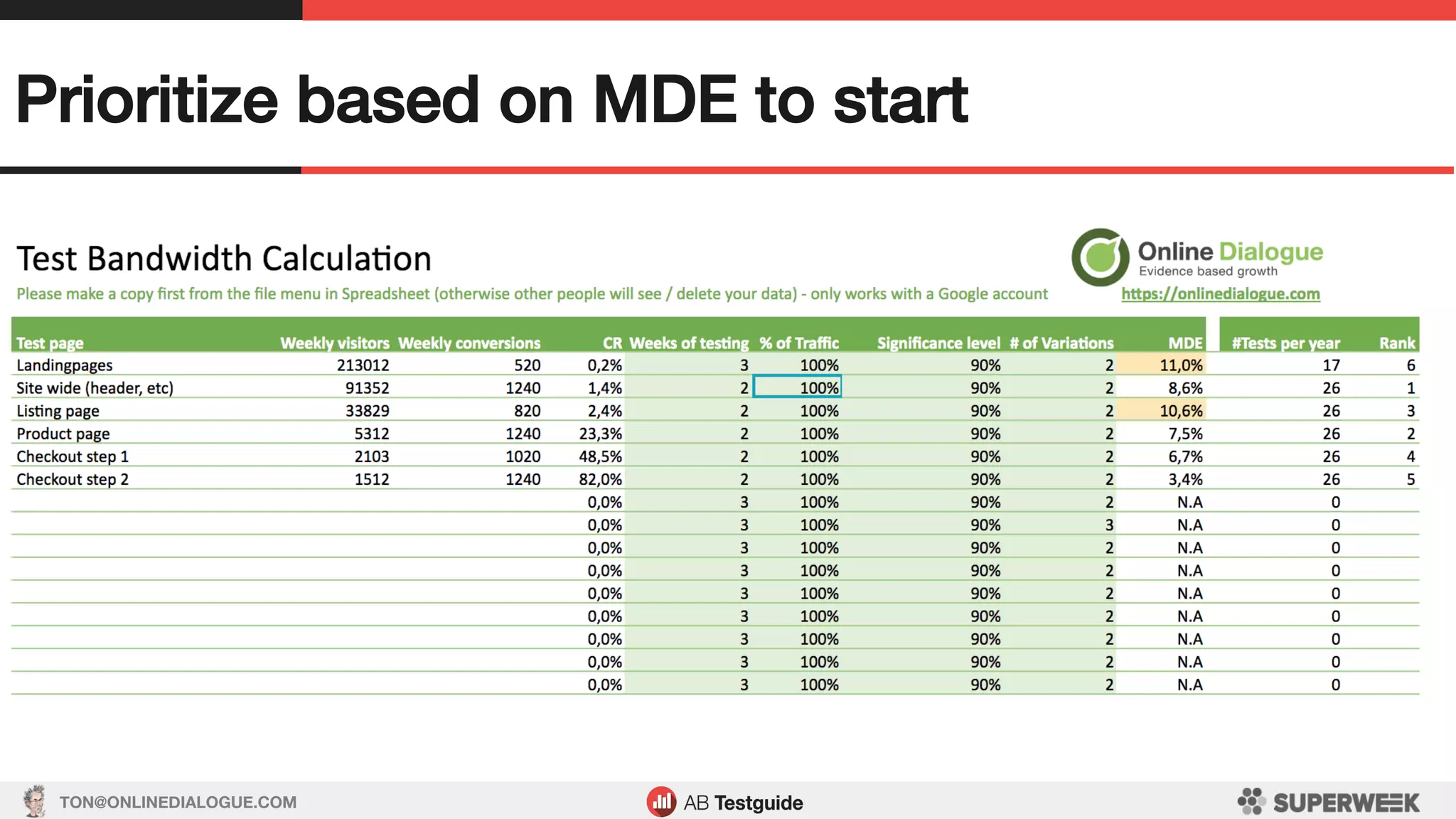

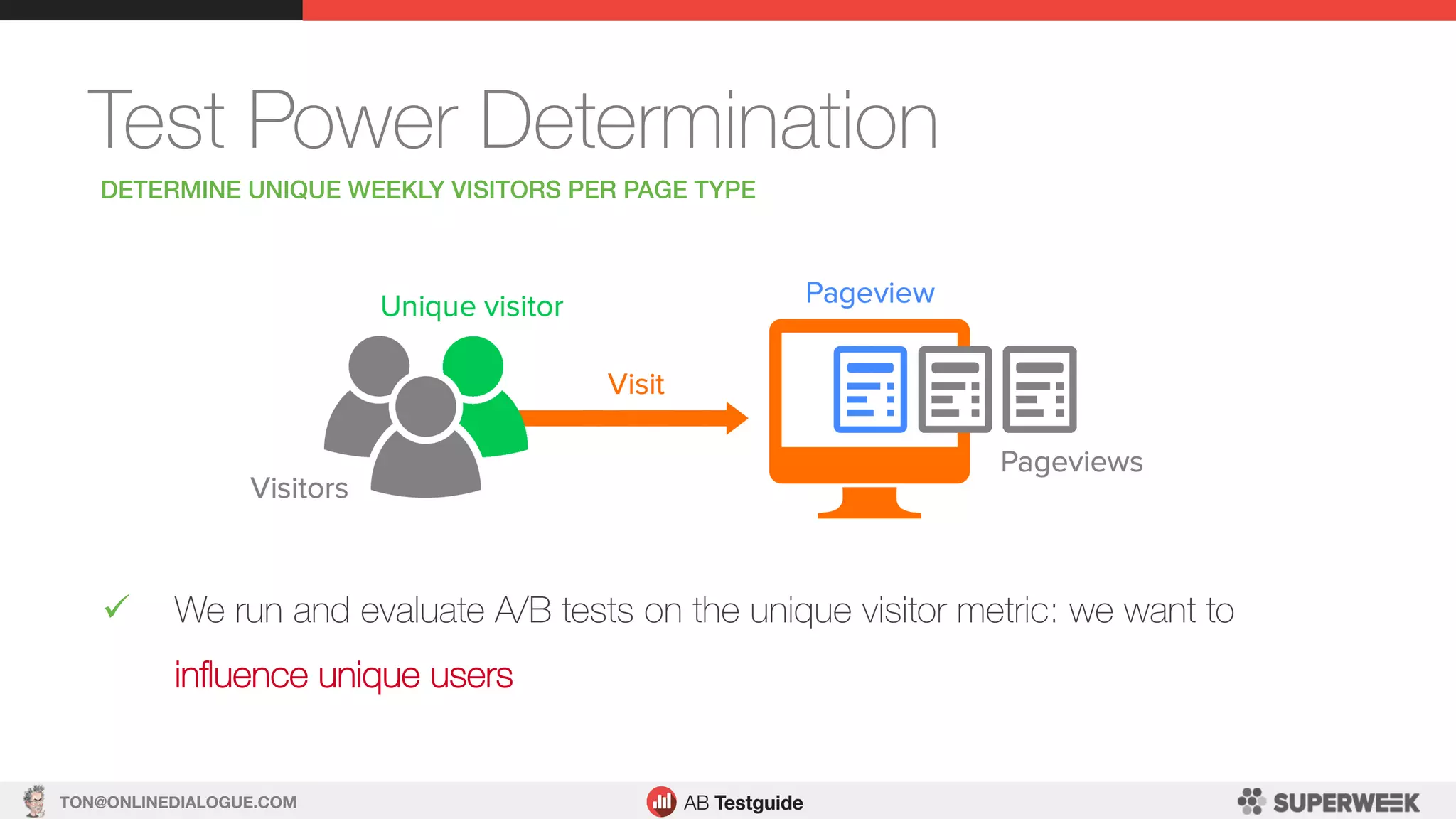

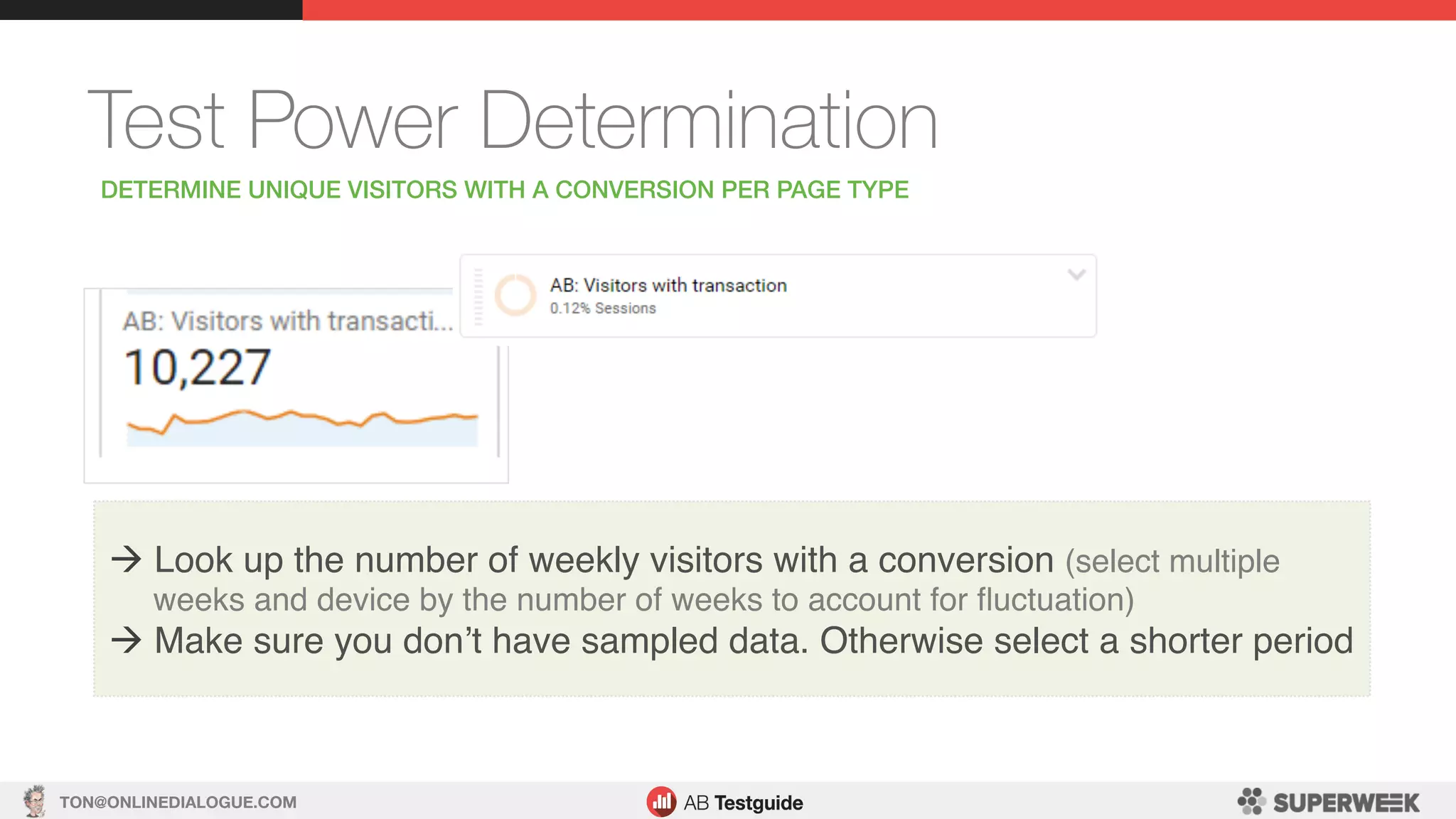

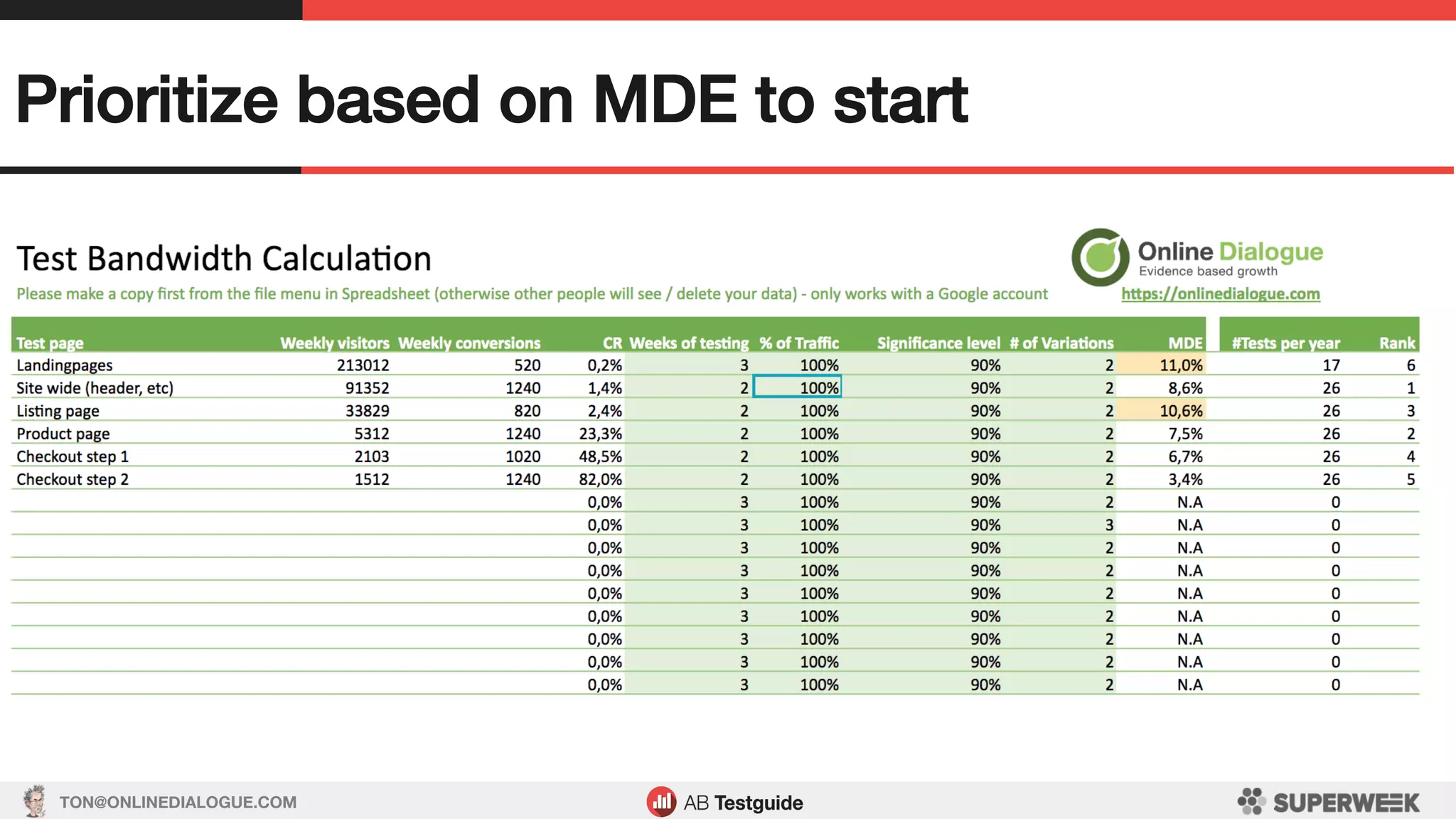

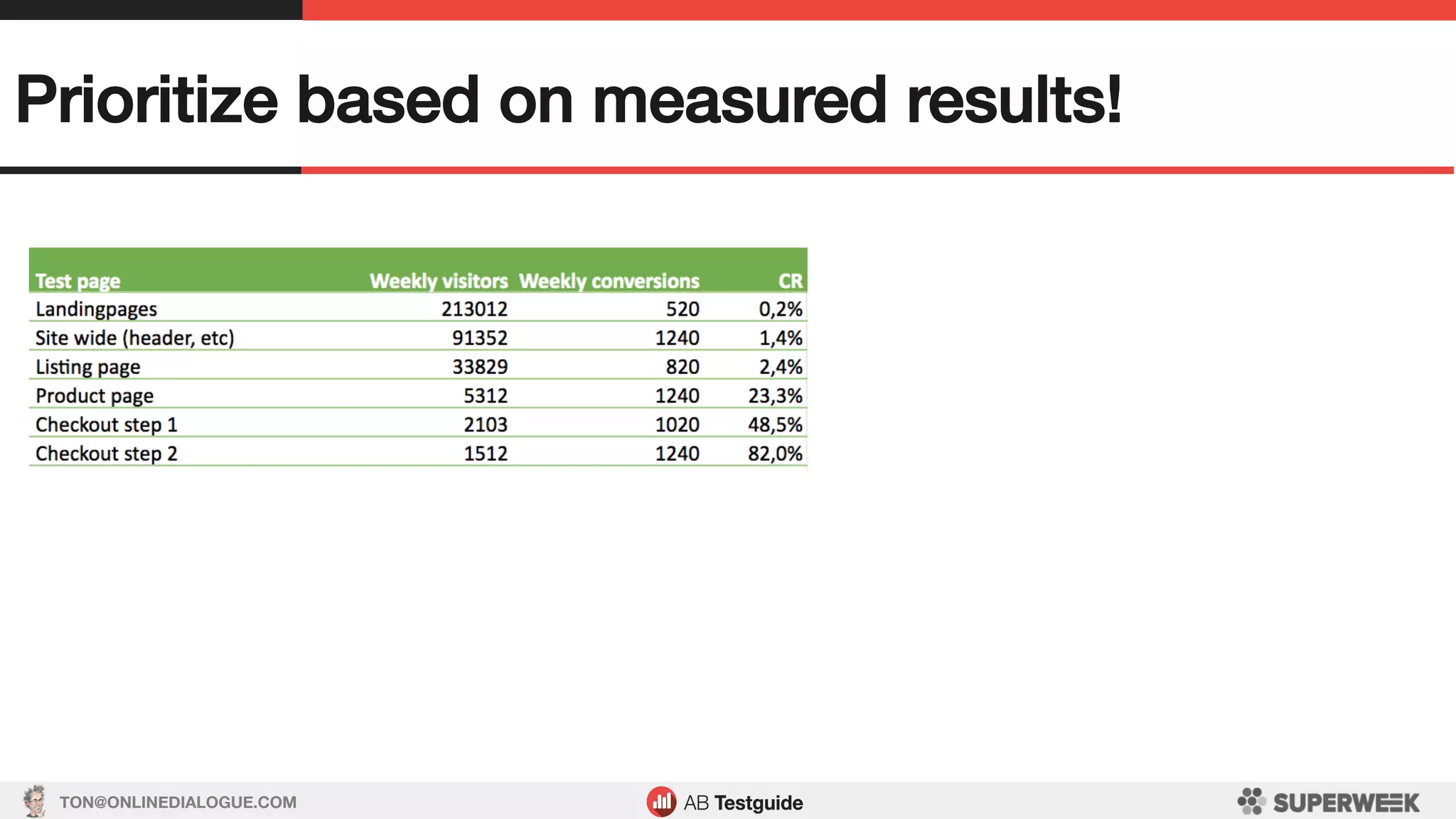

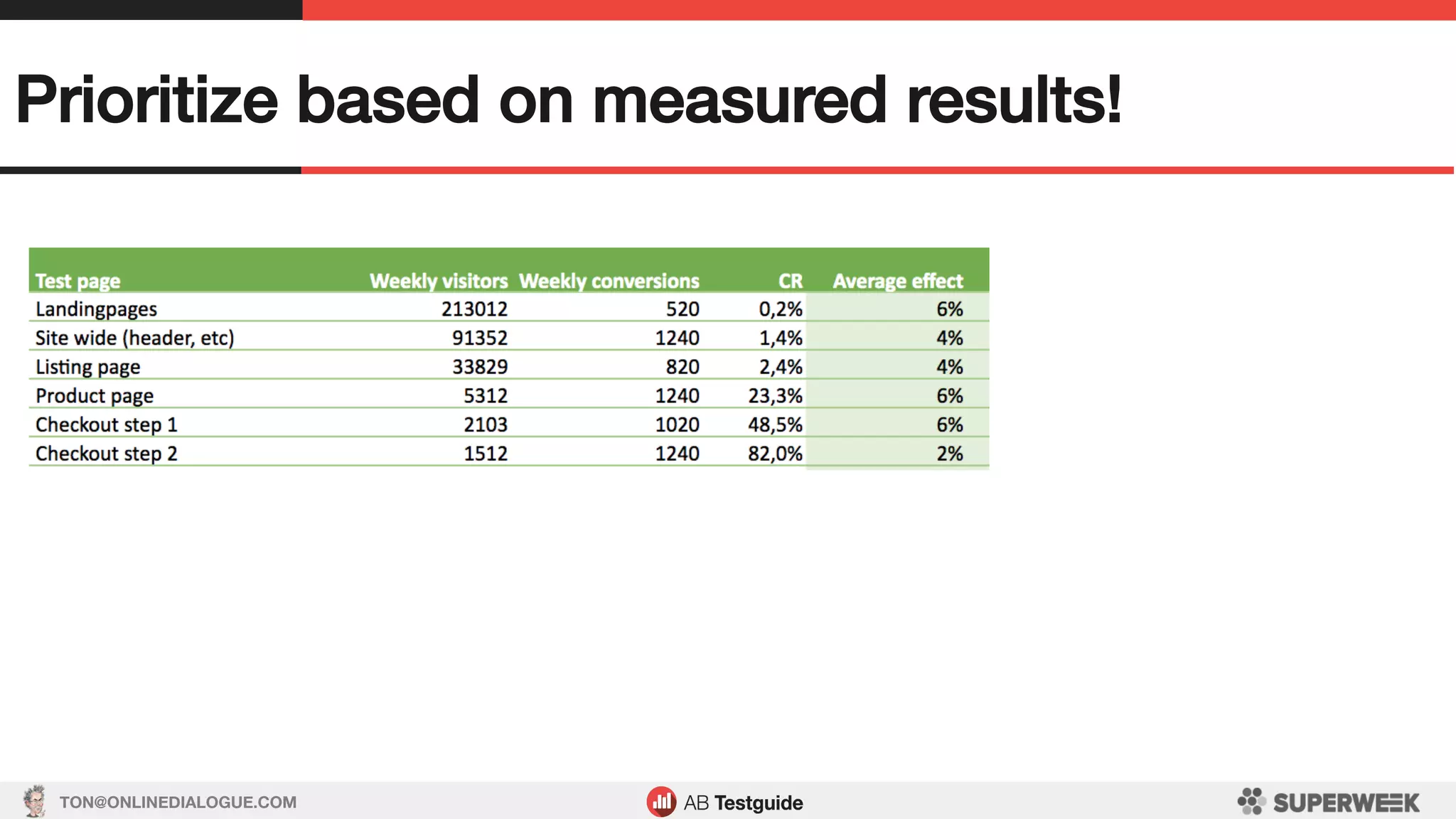

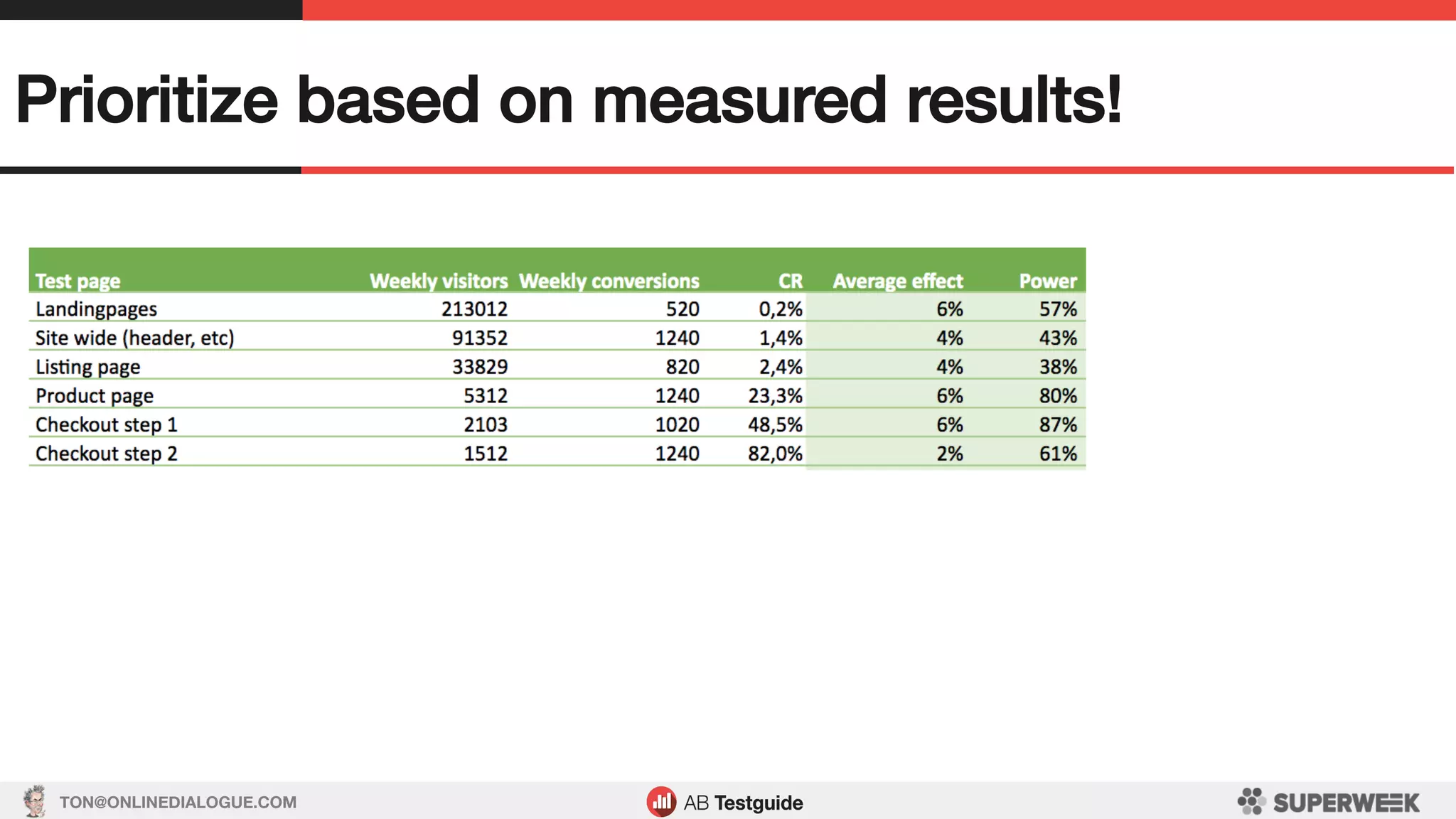

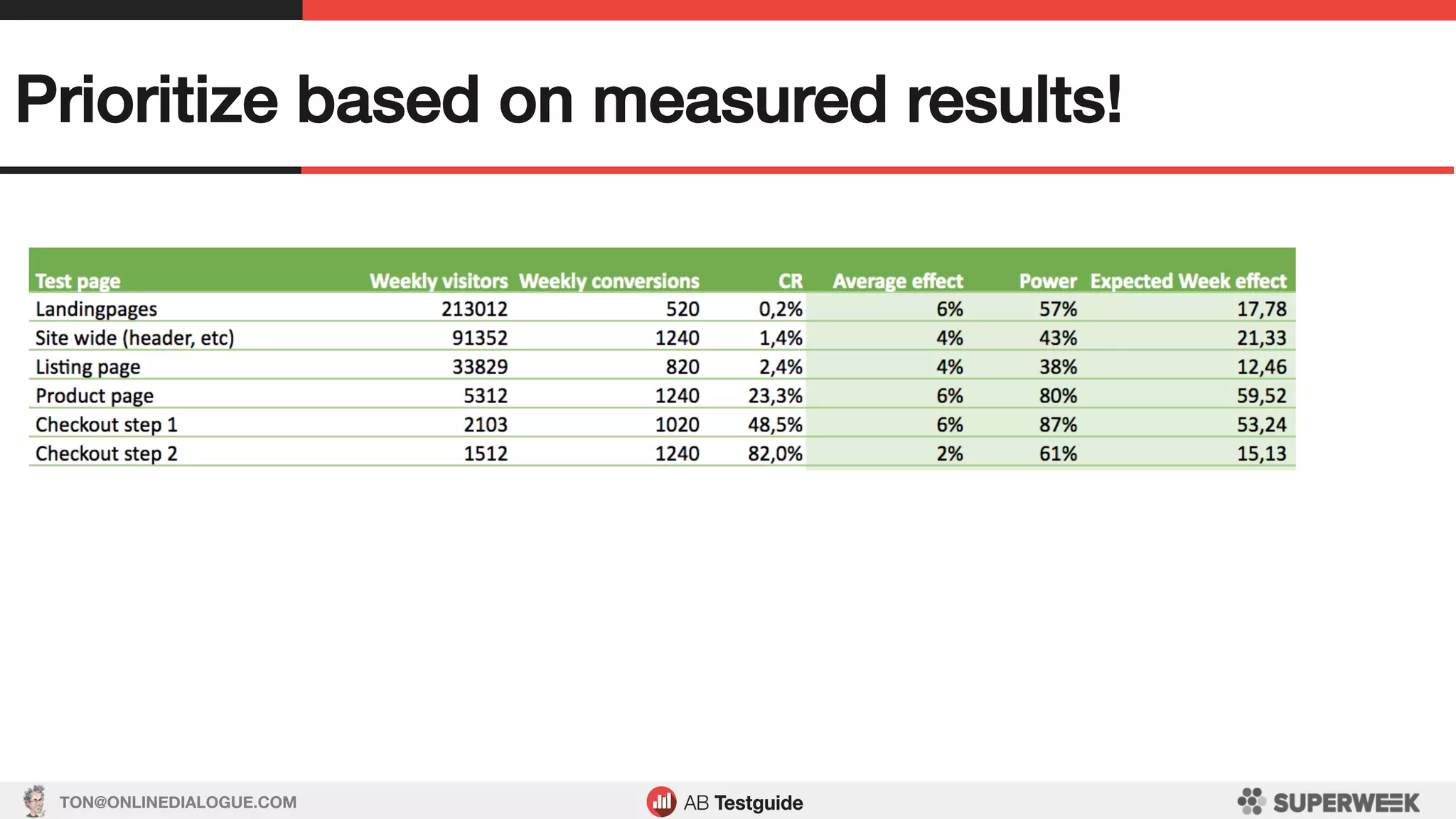

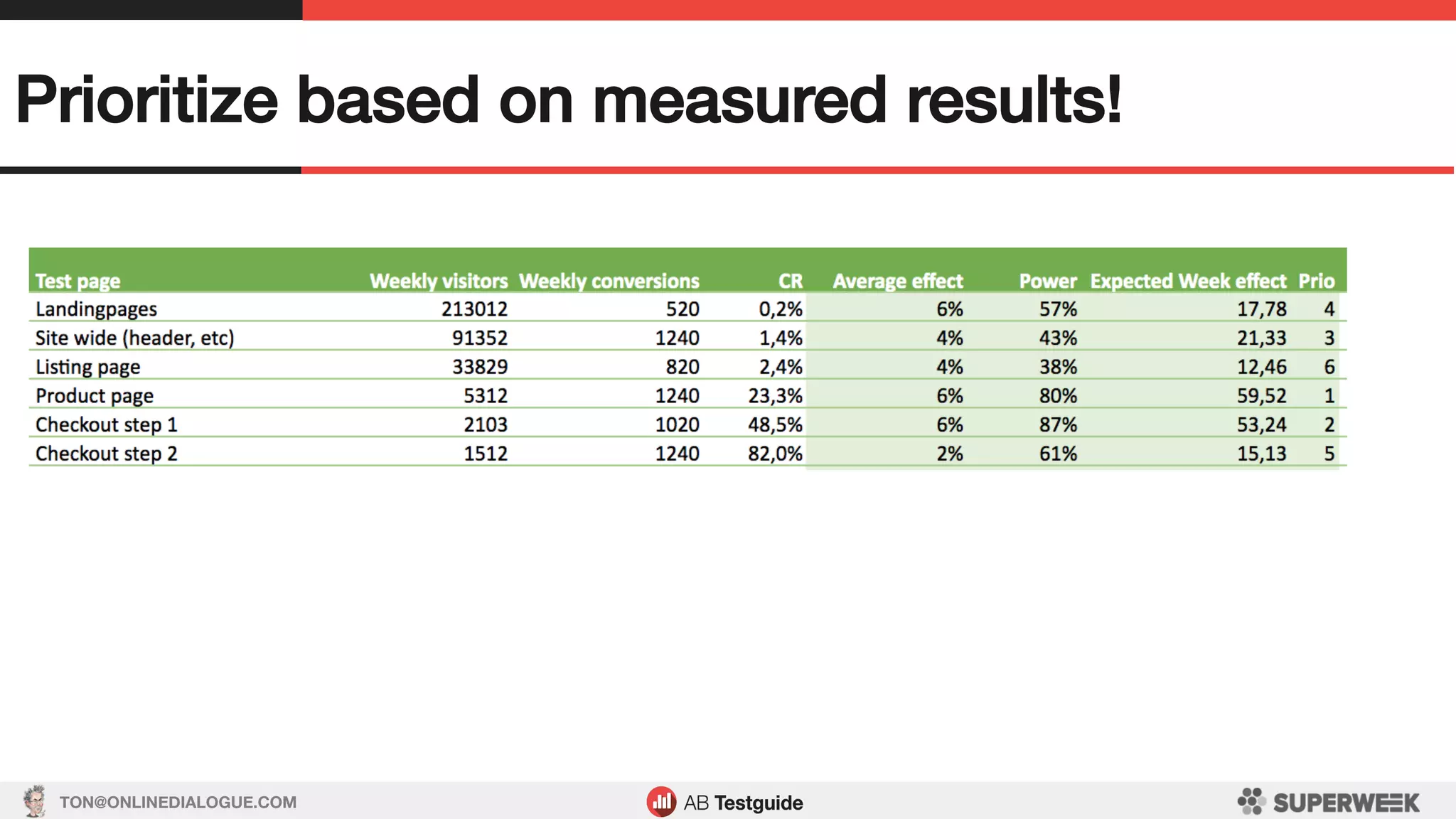

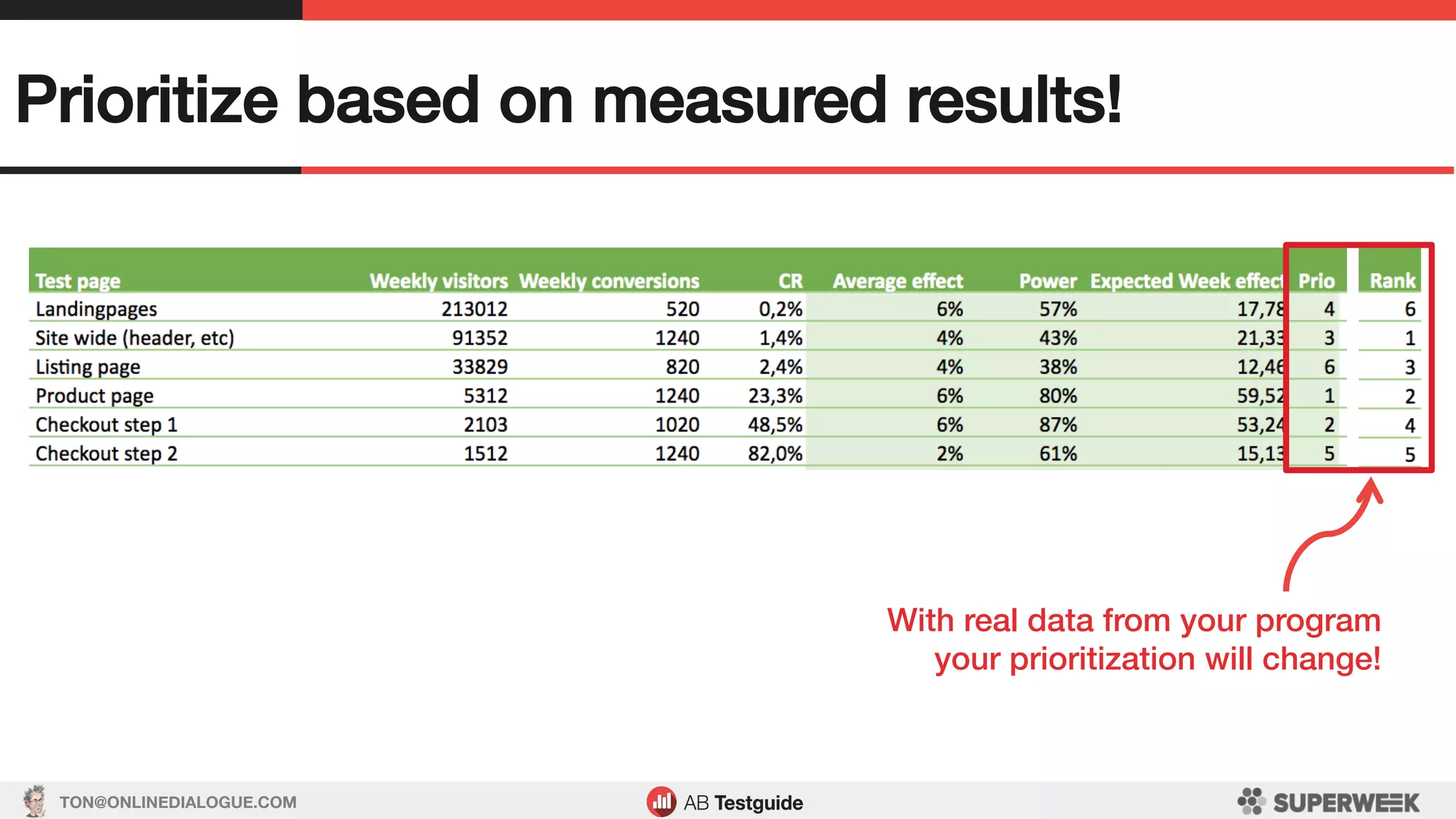

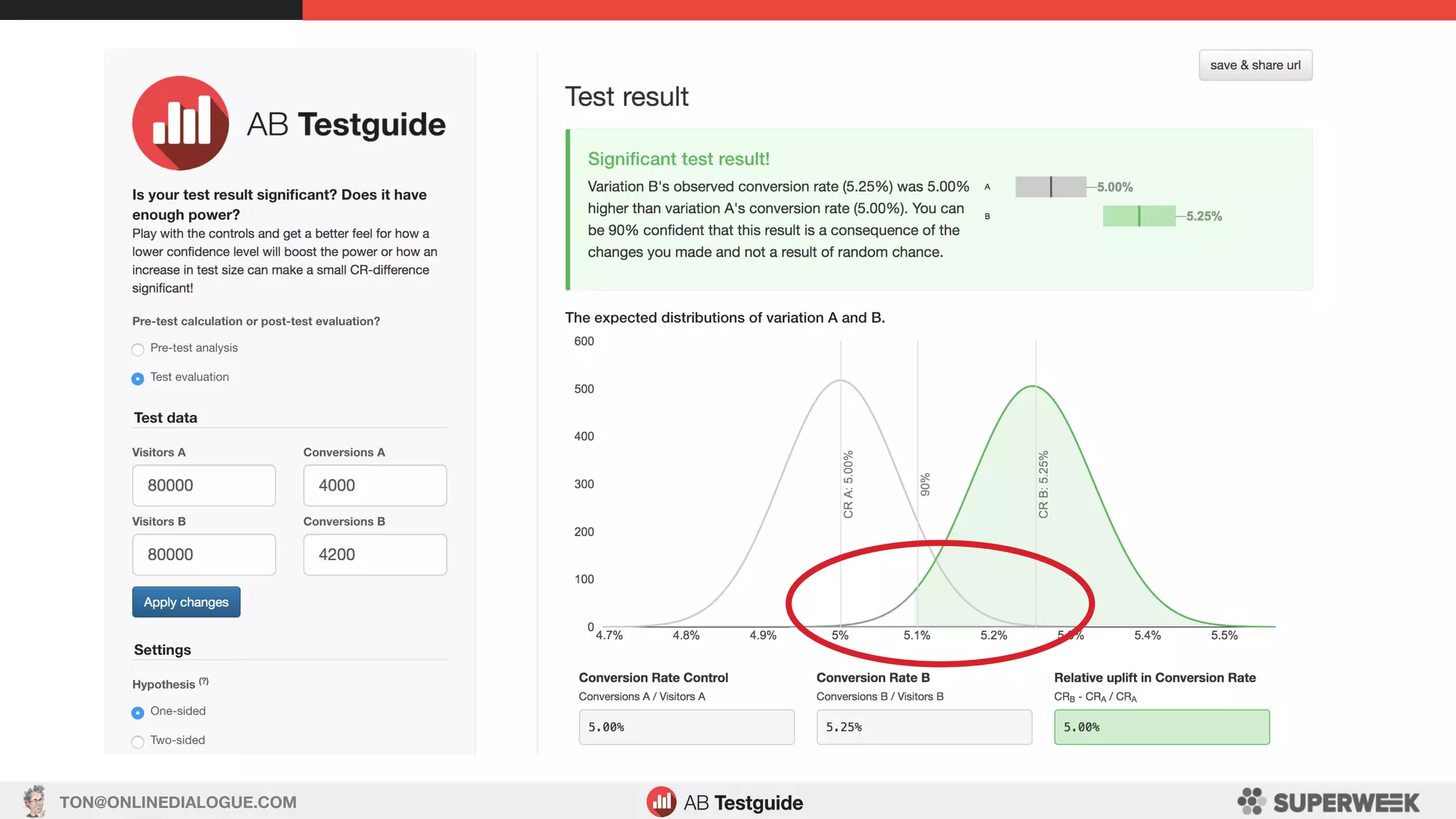

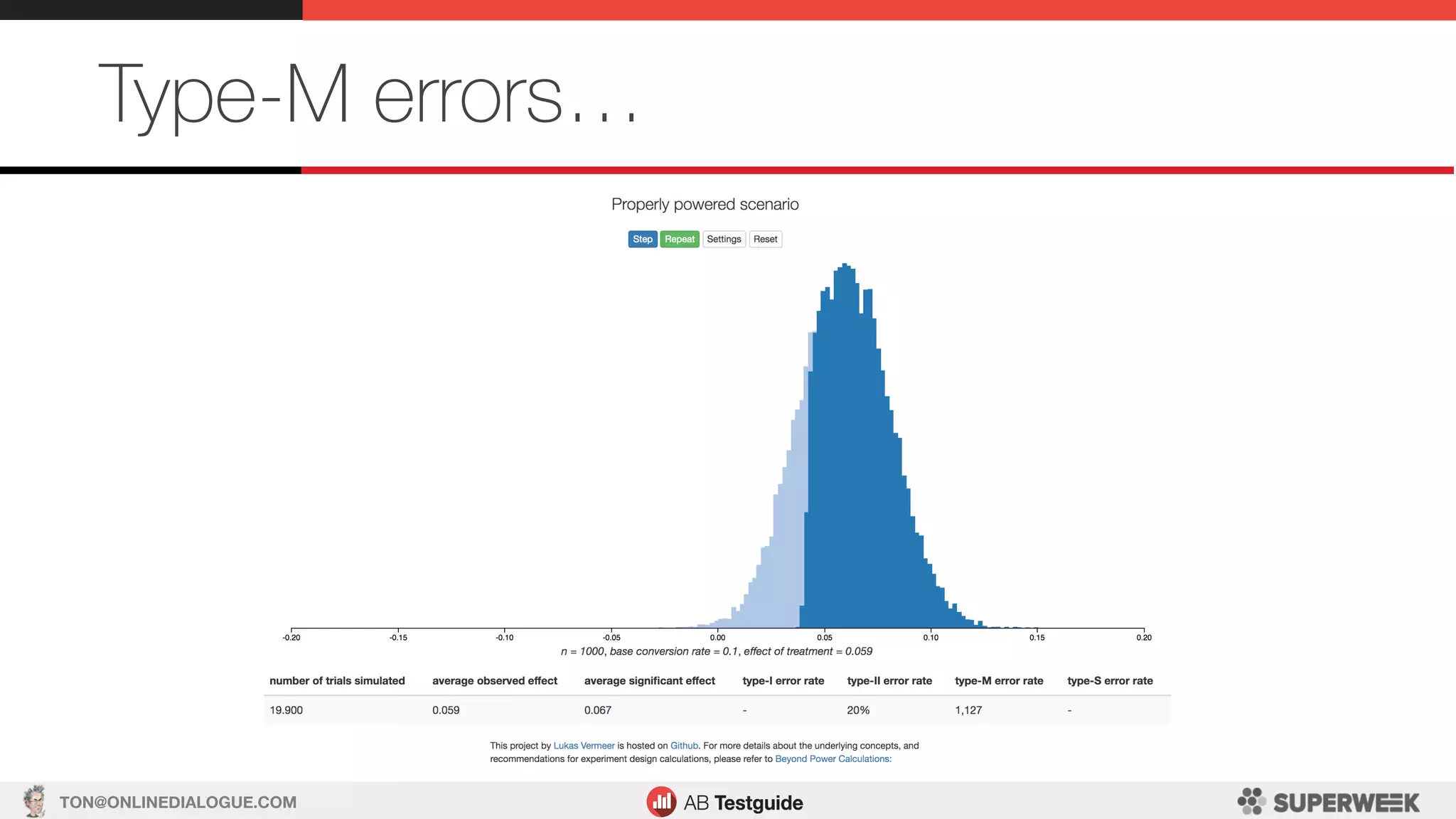

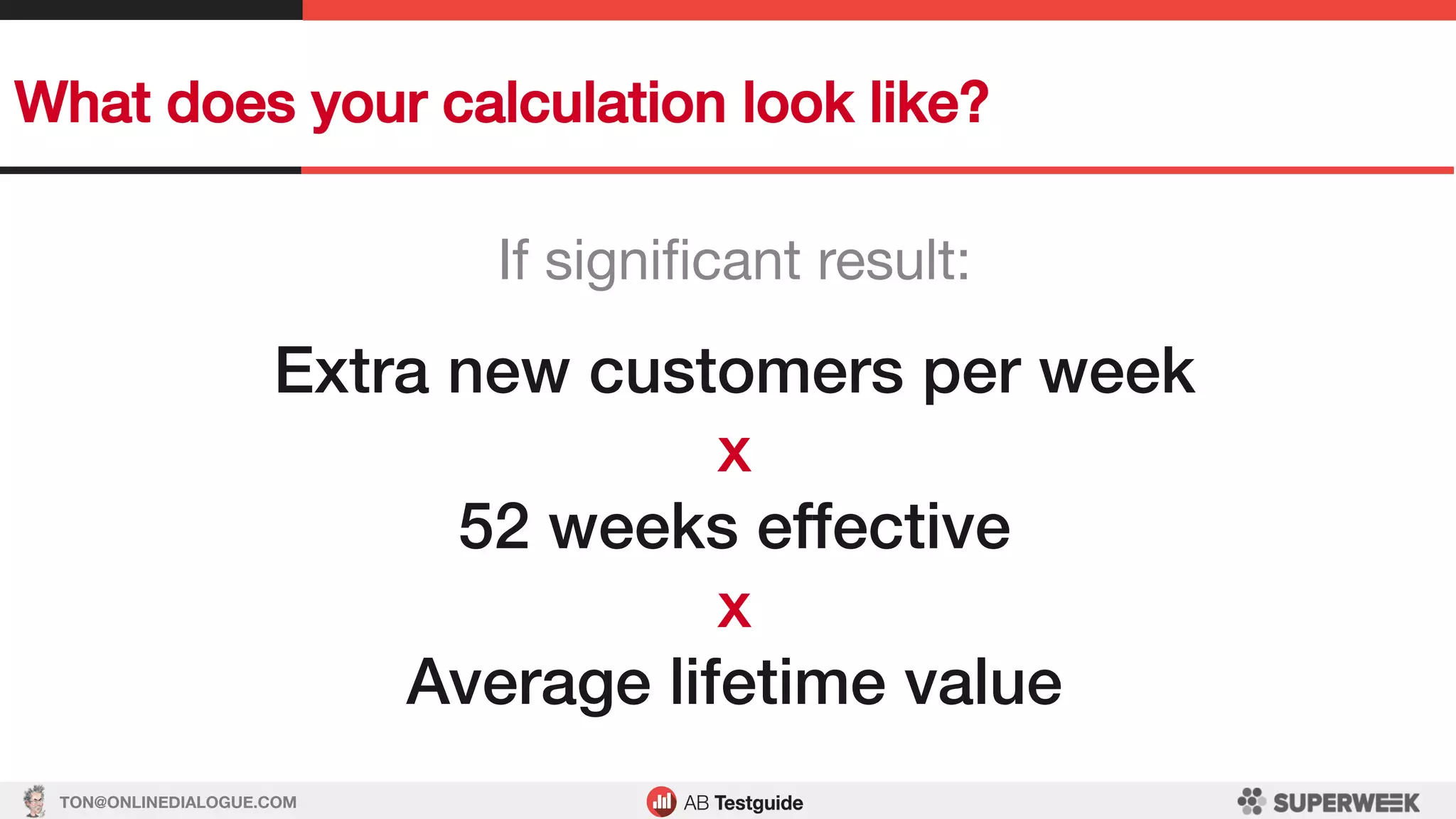

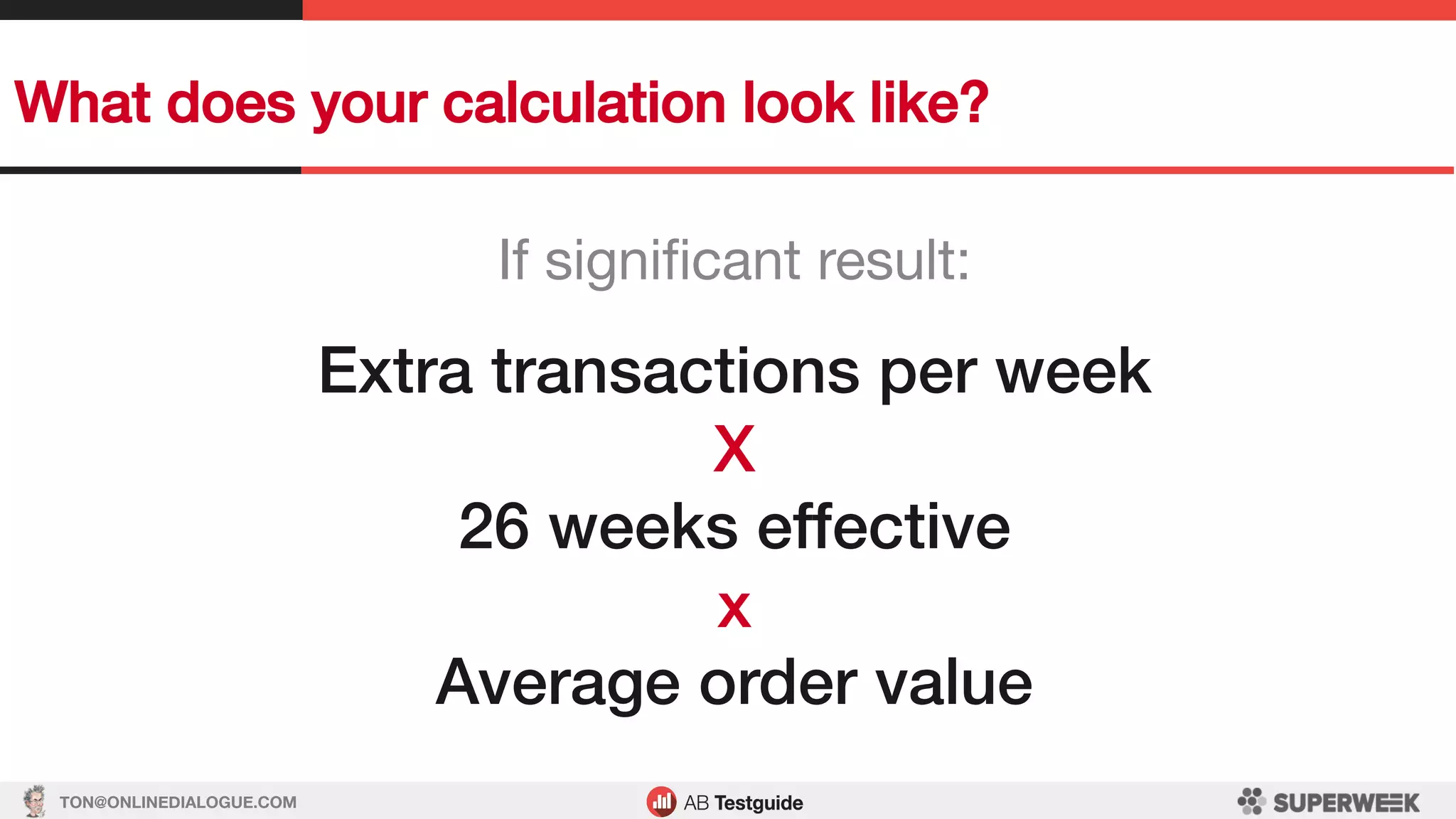

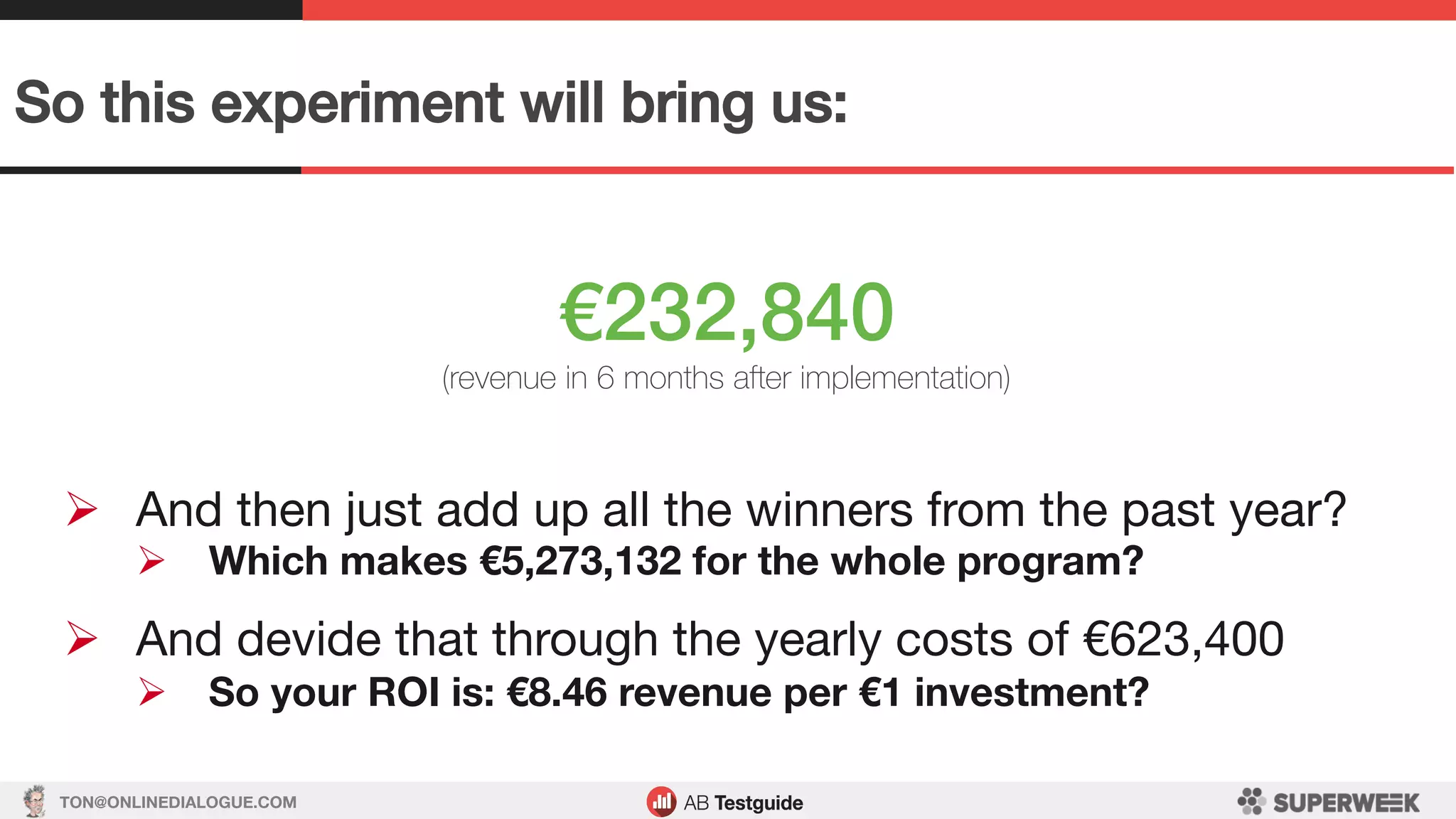

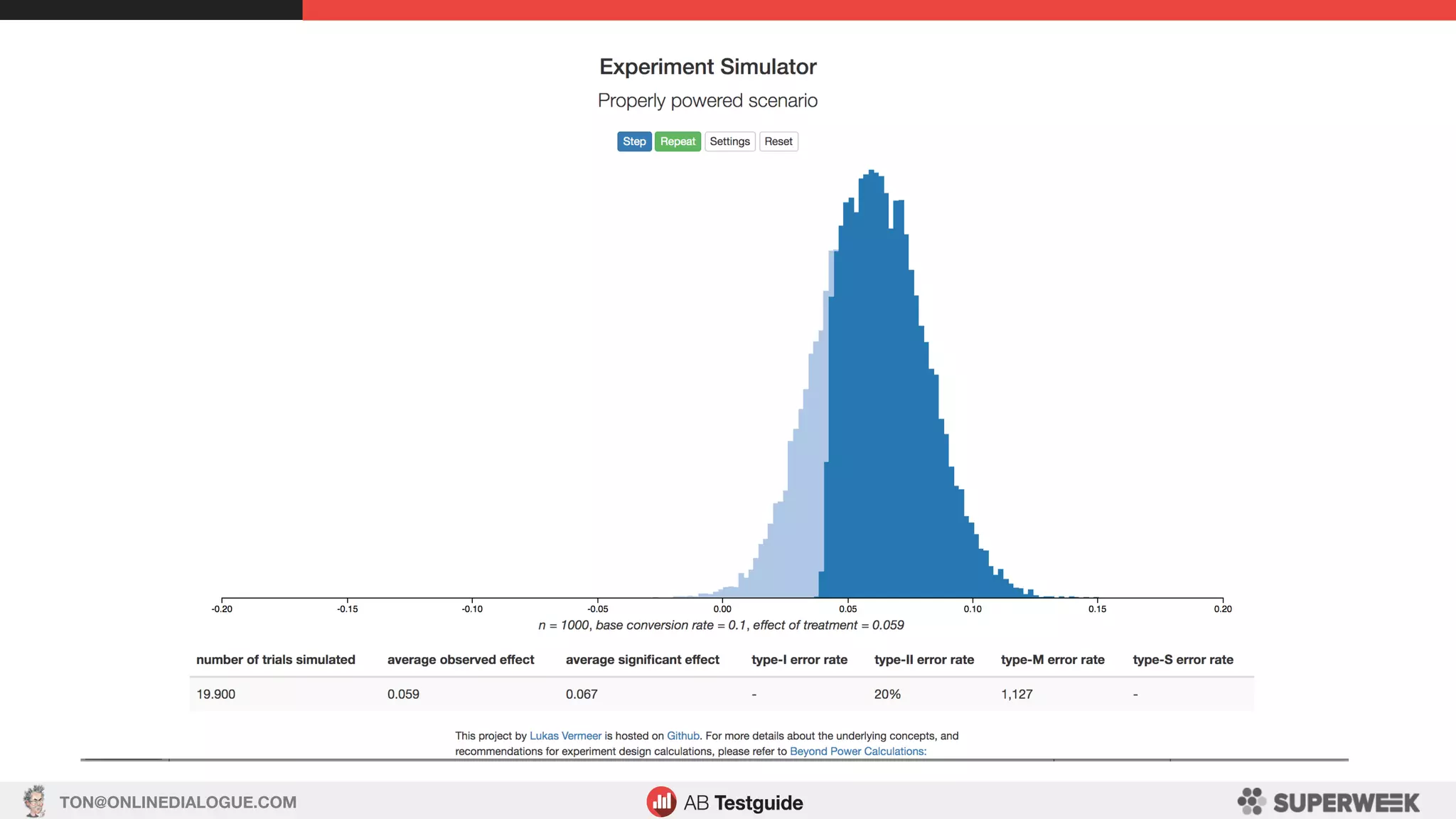

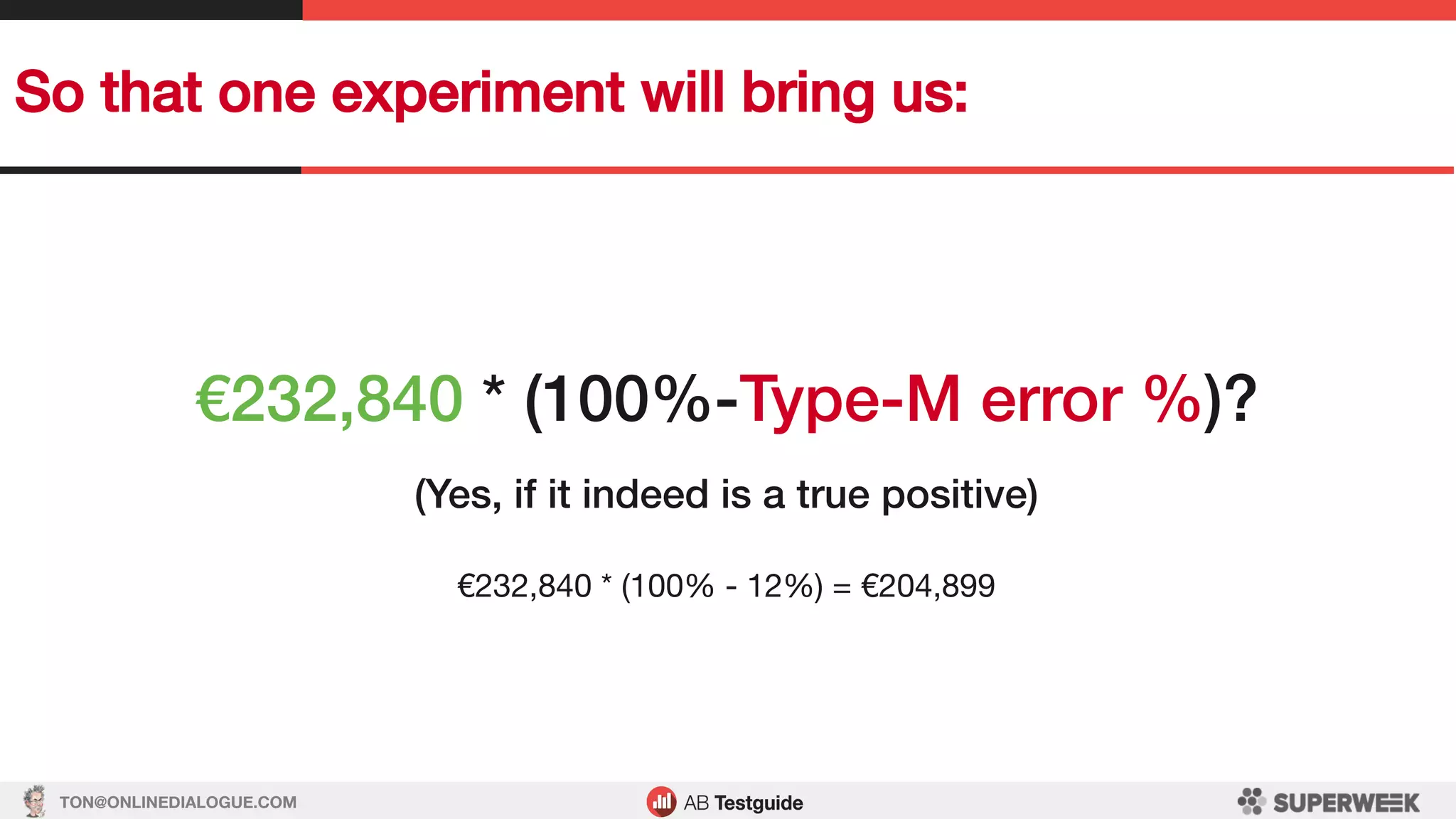

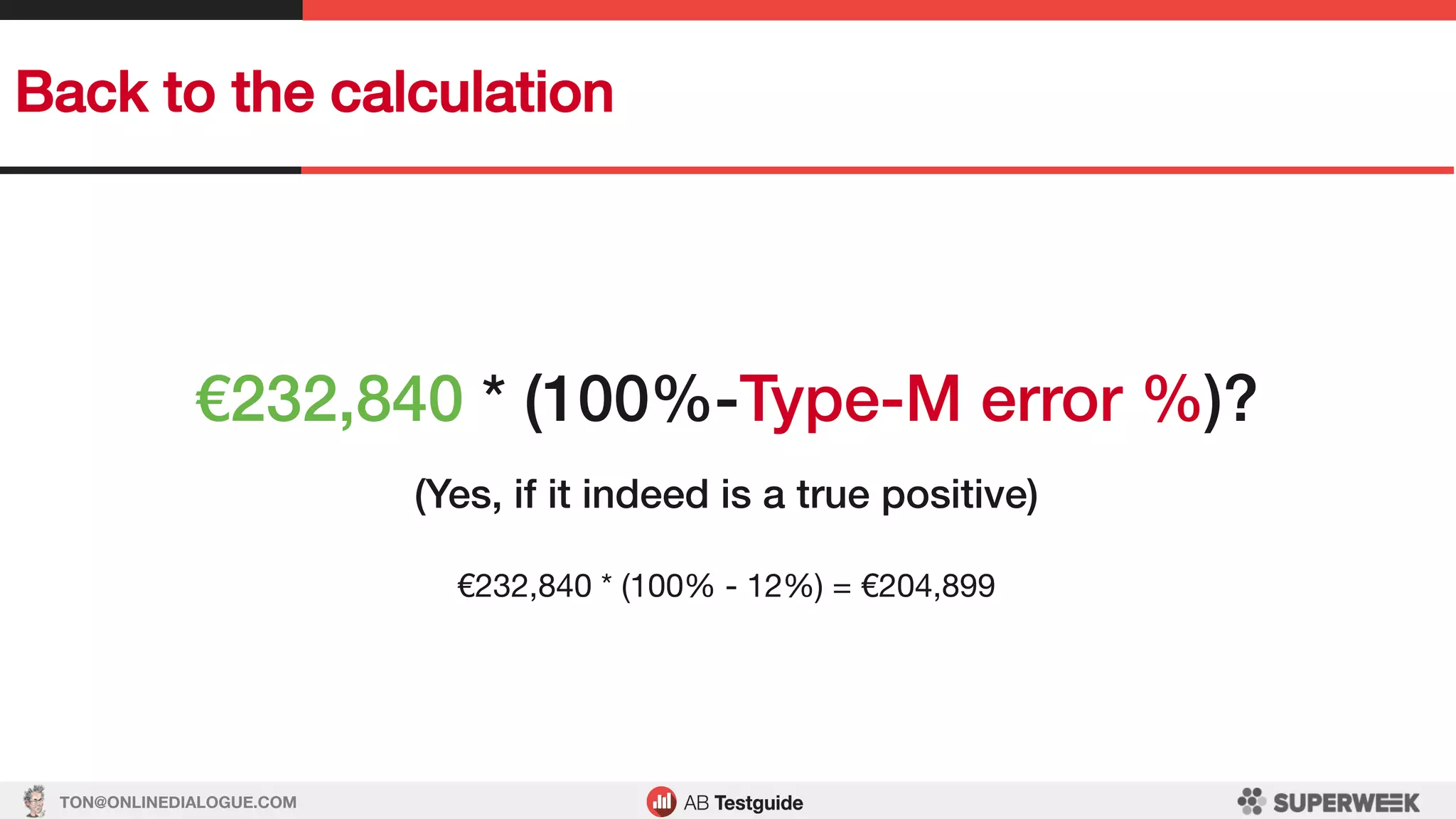

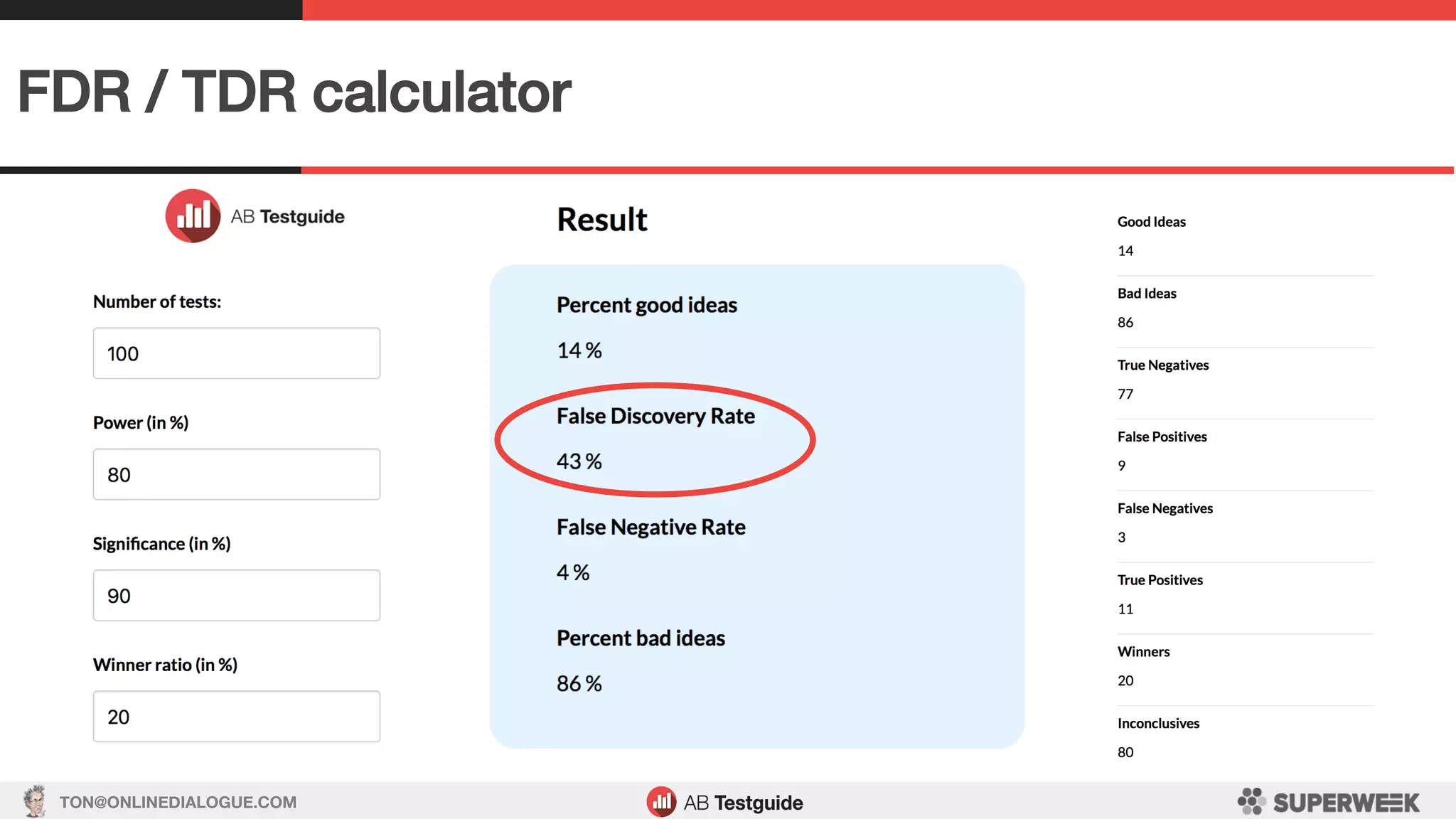

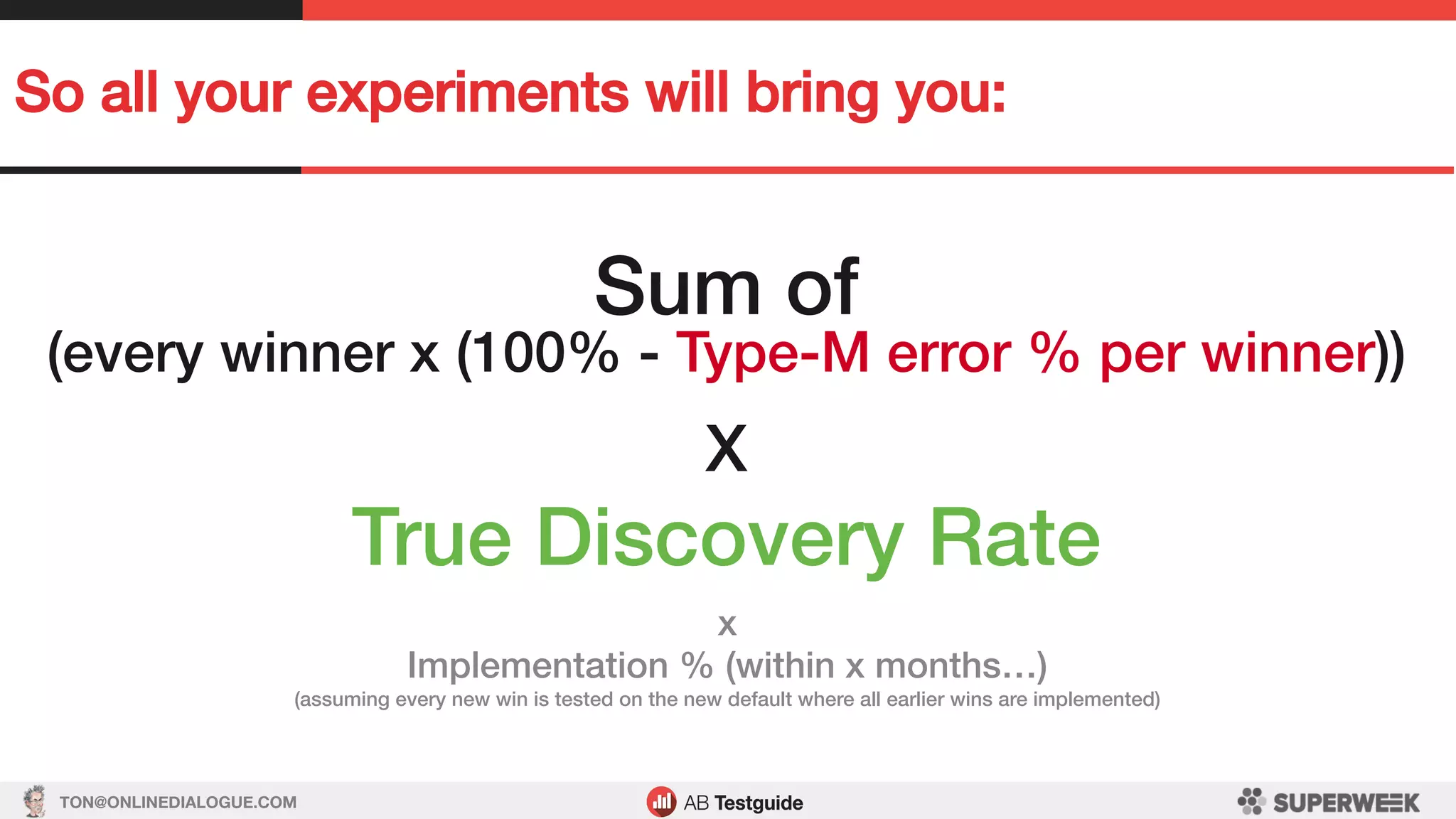

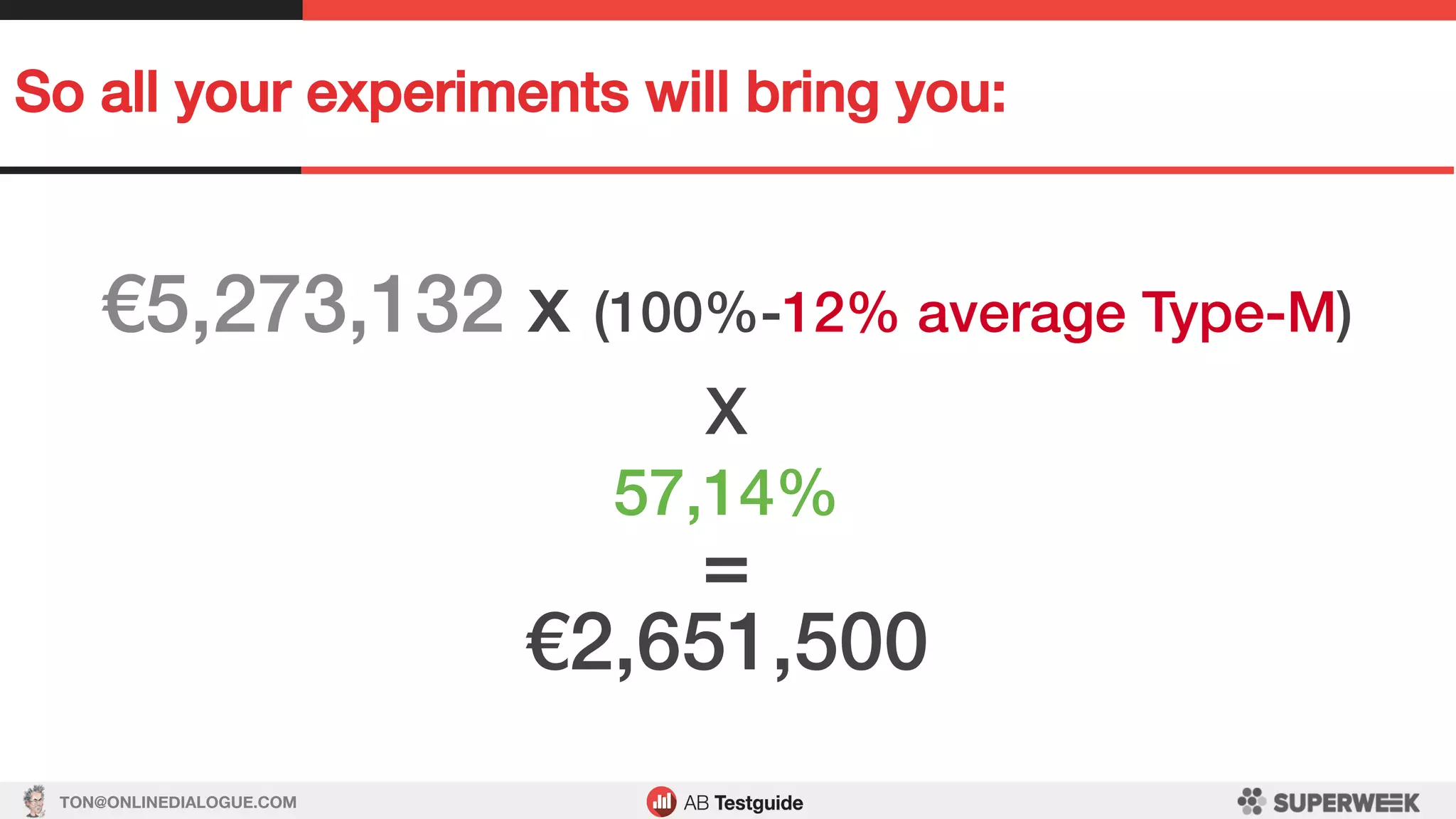

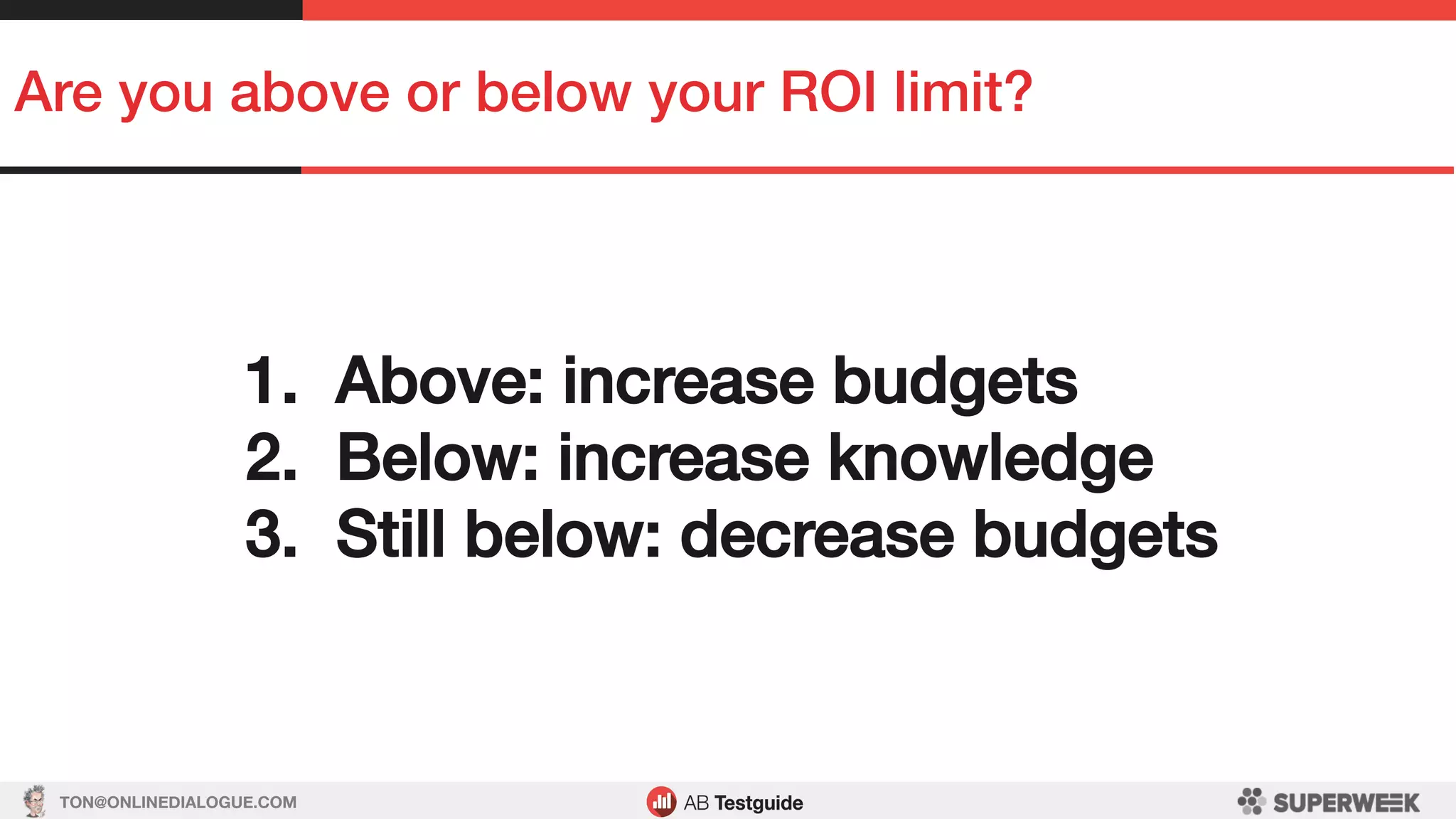

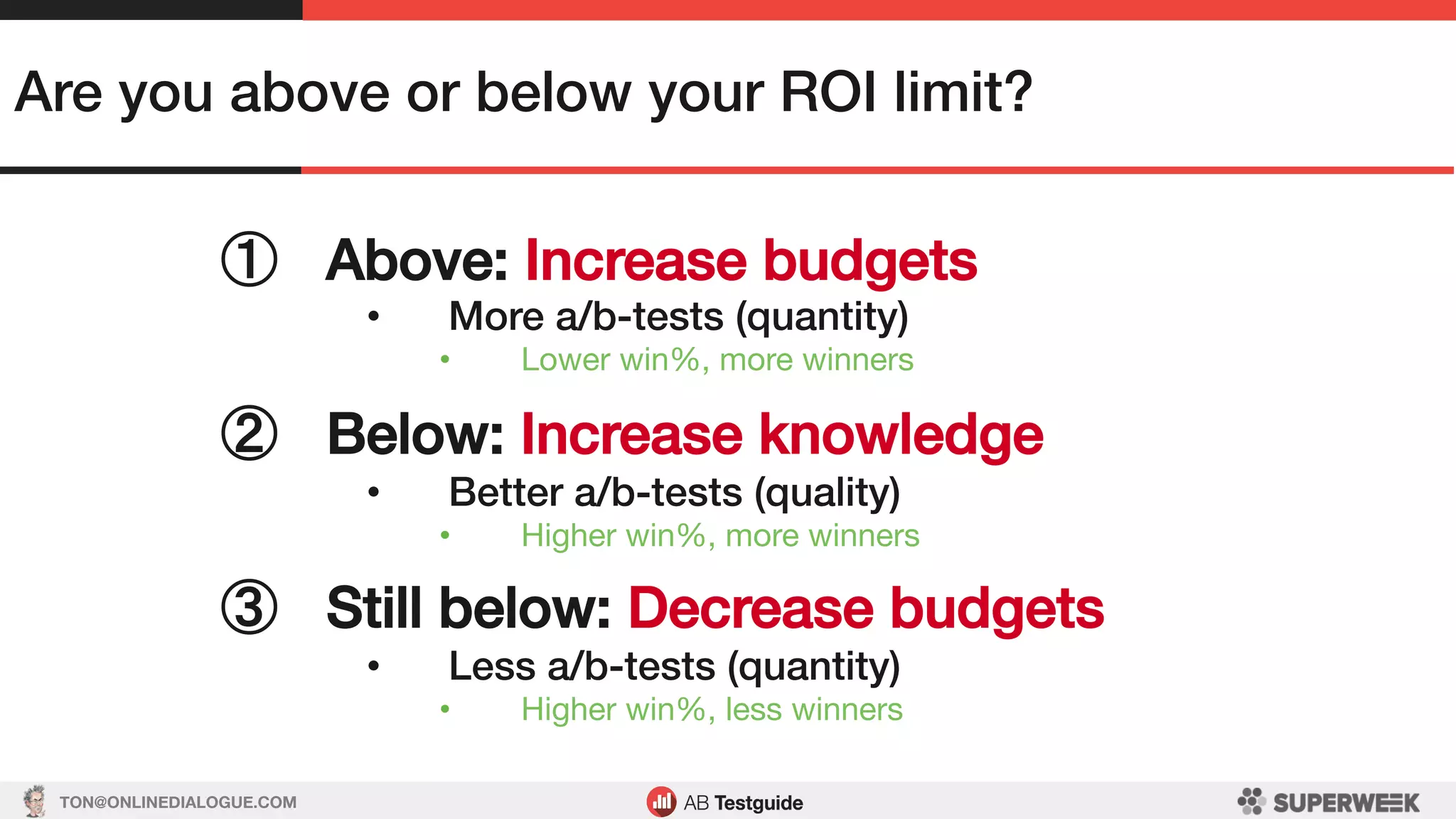

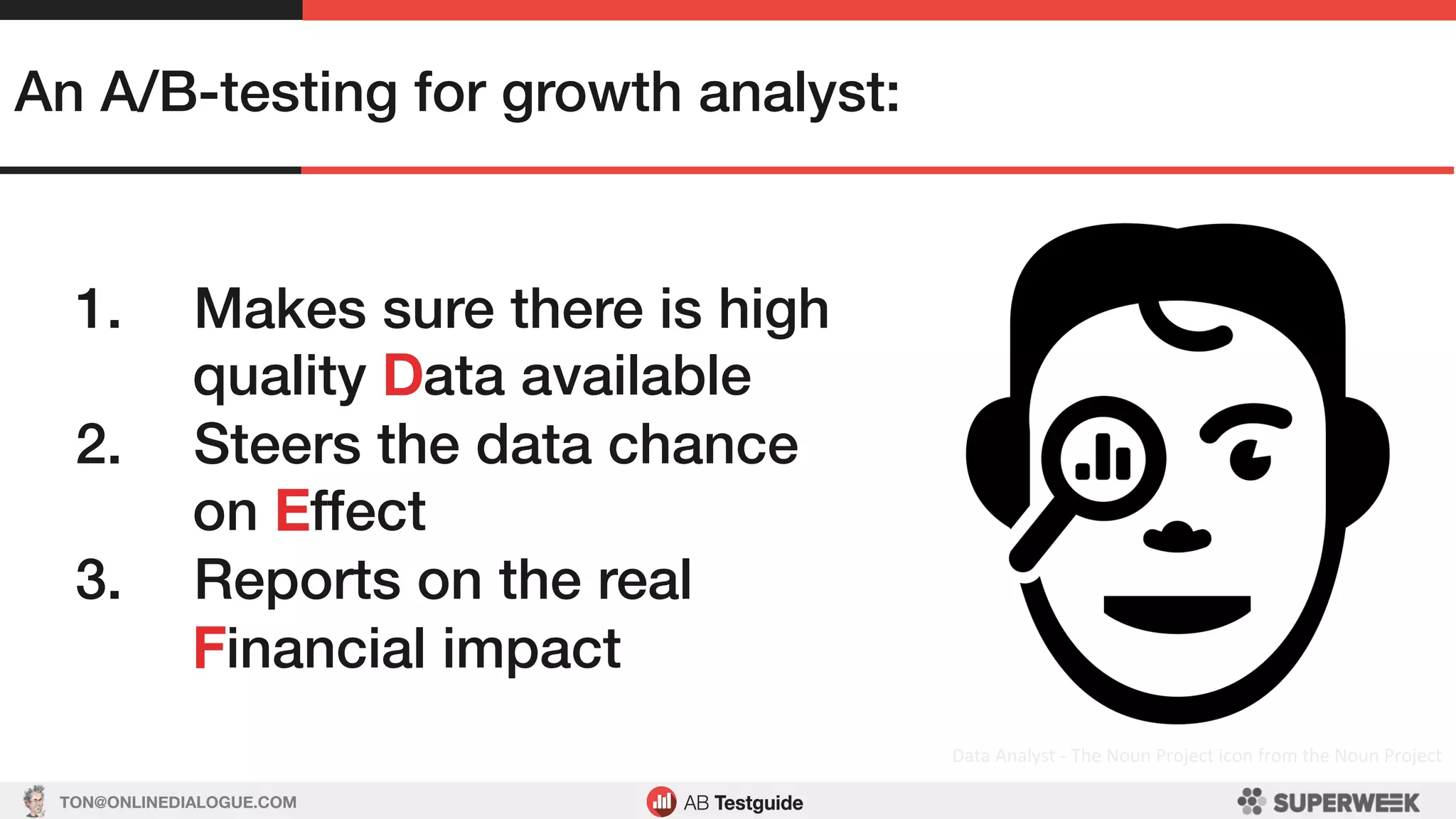

The document discusses the role of a data analyst in an A/B testing culture. It identifies three main tasks for the analyst:

1. Ensuring that high-quality data is collected.
2. Prioritizing the tests with the highest potential impact.
3. Performing business case calculations to assess the financial impact of experiments.

In practice, the analyst decides which experiments to run based on statistical power, ranks candidate experiments by their predicted minimum detectable effect (MDE), and calculates the return on investment of successful experiments so that the company grows as much as possible within its budget.
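The power-based prioritization and the business case can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method shown in the slides: it assumes conversion-rate tests analysed with a two-sided two-proportion z-test (normal approximation, alpha = 0.05, power = 0.80), a relative uplift that stays constant for 12 months, and revenue computed as conversions times an average order value. The names `relative_mde`, `Candidate`, `prioritize`, `business_case`, and `roi`, and all example numbers, are hypothetical.

```python
from dataclasses import dataclass
from statistics import NormalDist


def relative_mde(baseline_rate: float, visitors_per_variant: int,
                 alpha: float = 0.05, power: float = 0.80) -> float:
    """Approximate relative MDE of a two-sided two-proportion z-test.

    Planning estimate only: it uses the normal approximation and assumes
    both variants share the baseline variance p * (1 - p).
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = std_normal.inv_cdf(power)          # quantile for the target power
    std_error = (2 * baseline_rate * (1 - baseline_rate) / visitors_per_variant) ** 0.5
    return (z_alpha + z_power) * std_error / baseline_rate


@dataclass
class Candidate:
    """A hypothetical test idea; all fields are analyst inputs."""
    name: str
    baseline_rate: float        # current conversion rate of the tested page
    visitors_per_variant: int   # traffic each variant receives during the test
    expected_uplift: float      # guessed relative effect, e.g. 0.10 for +10%


def prioritize(candidates: list[Candidate]) -> list[tuple[str, float, bool]]:
    """Rank ideas: those whose expected uplift clears the MDE first, then by MDE."""
    scored = []
    for c in candidates:
        mde = relative_mde(c.baseline_rate, c.visitors_per_variant)
        scored.append((c.name, mde, c.expected_uplift >= mde))
    # False sorts before True, so `not powered` puts powered tests first.
    return sorted(scored, key=lambda row: (not row[2], row[1]))


def business_case(baseline_rate: float, monthly_visitors: int, order_value: float,
                  uplift: float, months: int = 12) -> float:
    """Extra revenue if a relative uplift holds for `months` (assumed constant)."""
    extra_conversions_per_month = monthly_visitors * baseline_rate * uplift
    return extra_conversions_per_month * order_value * months


def roi(extra_revenue: float, cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    return (extra_revenue - cost) / cost


if __name__ == "__main__":
    ideas = [
        Candidate("checkout button", 0.05, 20_000, 0.15),
        Candidate("footer link", 0.01, 2_000, 0.05),
    ]
    for name, mde, powered in prioritize(ideas):
        print(f"{name}: MDE {mde:.1%}, expected uplift detectable: {powered}")
    gain = business_case(0.05, 100_000, 50.0, 0.10)
    print(f"12-month extra revenue: {gain:,.0f}, ROI: {roi(gain, 60_000):.0%}")
```

With a 5% baseline and 20,000 visitors per variant, the sketch gives a relative MDE of roughly 12%. Quadrupling the traffic halves the MDE, which is why low-traffic pages need a much larger true effect before a test on them is worth its cost.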