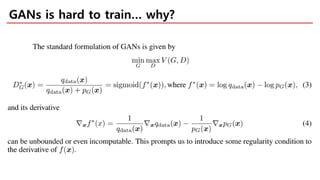

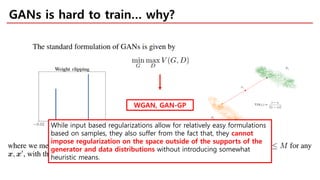

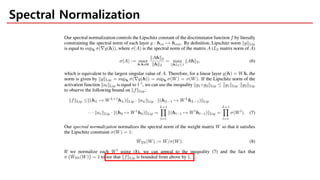

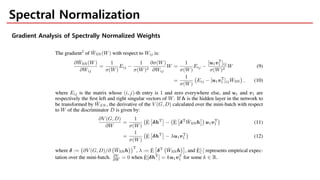

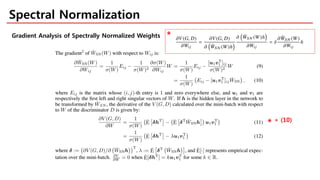

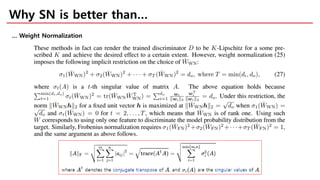

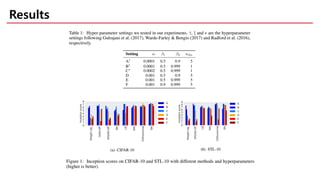

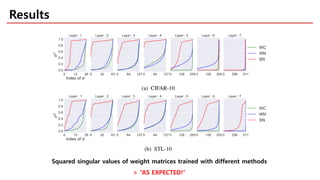

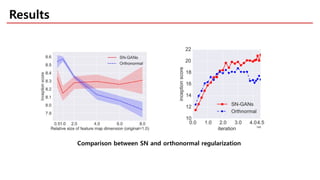

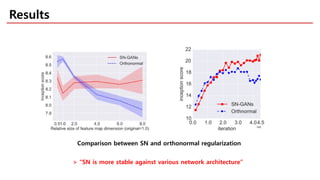

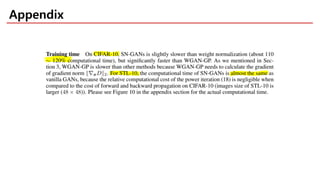

This document presents spectral normalization, a technique proposed to stabilize the training of the discriminator in generative adversarial networks (GANs). The method is simple to implement and has a single hyper-parameter to tune, the Lipschitz constant. In the comparative results, spectral normalization trains more stably than the other regularization methods considered, across a range of network architectures and datasets.
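To make the idea concrete, here is a minimal NumPy sketch of the core operation: estimate the largest singular value of a weight matrix by power iteration, then divide the weights by it so the layer's spectral norm is close to 1. The function name `spectral_normalize` and the parameters `n_iters` and `seed` are illustrative assumptions, not names taken from the document, and the sketch covers a single dense weight matrix rather than a full discriminator.

```python
import numpy as np


def spectral_normalize(W, n_iters=200, seed=0):
    """Return (W / sigma, sigma), where sigma approximates the largest
    singular value of W via power iteration.

    Hypothetical helper for illustration only; assumes W is a 2-D array.
    """
    rng = np.random.default_rng(seed)
    # Random starting vector in the output space of W.
    u = rng.standard_normal(W.shape[0])
    u /= np.linalg.norm(u)

    for _ in range(n_iters):
        # Alternate between the right and left singular vector estimates.
        v = W.T @ u
        v /= np.linalg.norm(v)
        u = W @ v
        u /= np.linalg.norm(u)

    # Rayleigh-quotient style estimate of the top singular value.
    sigma = float(u @ W @ v)
    return W / sigma, sigma


if __name__ == "__main__":
    W = np.random.default_rng(1).standard_normal((64, 32))
    W_sn, sigma = spectral_normalize(W)
    print("estimated sigma:", sigma)
    print("spectral norm after normalization:", np.linalg.norm(W_sn, 2))
```

The sketch runs many iterations from a fresh random vector so that it is easy to check in isolation. Inside a training loop it is common to keep `u` between updates and run only one iteration per step, which makes the extra cost small.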