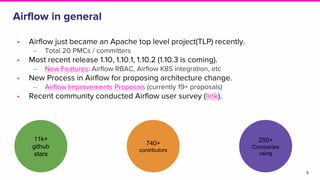

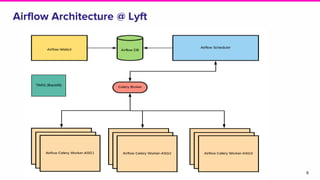

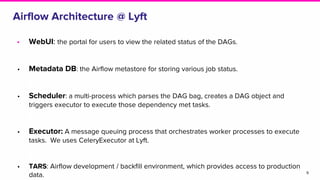

Tao Feng gave a presentation on how Lyft uses Apache Airflow. Some key points:

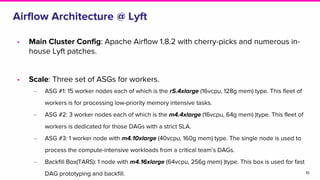

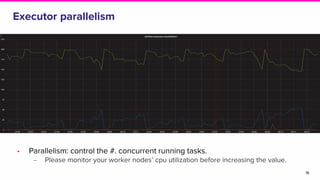

1) Lyft uses Apache Airflow for ETL workflows with over 600 DAGs and 800 DAG runs daily across three AWS Auto Scaling Groups of worker nodes.

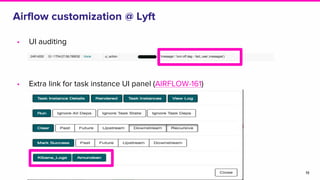

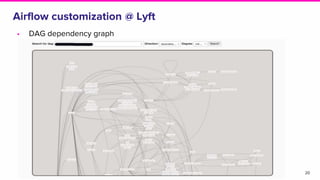

2) Lyft has customized Airflow with additional UI links, DAG dependency graphs, and integration with internal tools.

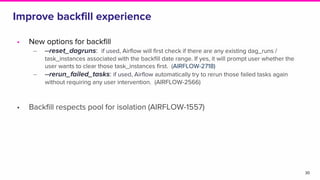

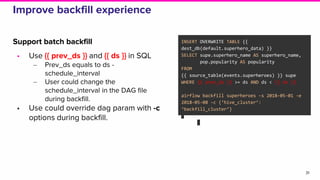

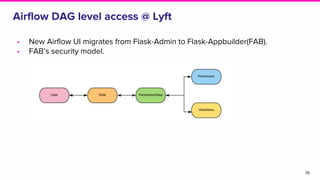

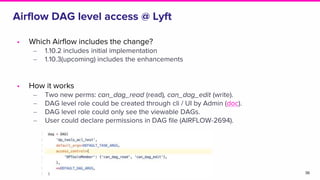

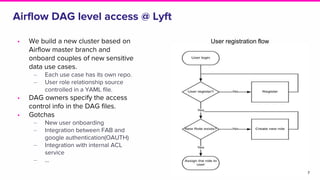

3) Lyft is working to improve the backfill experience, support DAG-level access controls, and explore running Airflow with the Kubernetes executor.

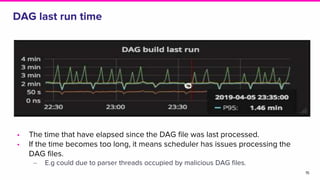

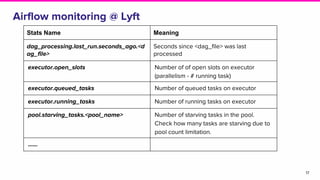

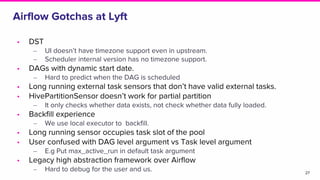

4) Tao discussed challenges such as daylight saving time issues and long-running tasks occupying slots, and thanked the other Lyft engineers who contribute to Airflow.
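The talk summary does not spell out which daylight saving time problems Lyft ran into. As a hedged illustration of the general class of issue, the sketch below uses only the Python standard library (not Airflow's own APIs) to show three common effects: a job pinned to a fixed UTC time drifts by an hour in local time, a "daily" job pinned to local time is sometimes only 23 hours apart, and some local times never occur at all. The time zone, dates, and helper names are assumptions chosen for illustration. It requires Python 3.9+ with time zone data available.

```python
from datetime import datetime, timedelta
from zoneinfo import ZoneInfo

UTC = ZoneInfo("UTC")
# Illustrative zone choice (assumption); any zone that observes DST shows the same effects.
PACIFIC = ZoneInfo("America/Los_Angeles")


def local_fire_time(utc_dt: datetime, tz: ZoneInfo) -> datetime:
    """Hypothetical helper: wall-clock time in tz at which a run scheduled at a fixed UTC instant fires."""
    return utc_dt.astimezone(tz)


def elapsed_between_local_runs(first: datetime, second: datetime, tz: ZoneInfo) -> timedelta:
    """Hypothetical helper: real elapsed time between two runs scheduled at local wall-clock times.

    Both datetimes are converted to UTC before subtracting, because subtracting two
    aware datetimes that share the same tzinfo compares wall-clock values and would
    always report a whole day here.
    """
    a = first.replace(tzinfo=tz).astimezone(UTC)
    b = second.replace(tzinfo=tz).astimezone(UTC)
    return b - a


def is_nonexistent(local_naive: datetime, tz: ZoneInfo) -> bool:
    """Hypothetical helper: True if the wall-clock time is skipped when clocks spring forward."""
    roundtrip = local_naive.replace(tzinfo=tz).astimezone(UTC).astimezone(tz)
    return roundtrip.replace(tzinfo=None) != local_naive


if __name__ == "__main__":
    # A fixed 10:00 UTC schedule fires at 02:00 local in winter but 03:00 local in summer.
    print(local_fire_time(datetime(2019, 1, 15, 10, 0, tzinfo=UTC), PACIFIC).time())
    print(local_fire_time(datetime(2019, 7, 15, 10, 0, tzinfo=UTC), PACIFIC).time())
    # A "daily" 03:00 local schedule is only 23 hours apart across the spring-forward night.
    print(elapsed_between_local_runs(datetime(2019, 3, 9, 3, 0), datetime(2019, 3, 10, 3, 0), PACIFIC))
    # 02:30 local never happens on 2019-03-10 in this zone.
    print(is_nonexistent(datetime(2019, 3, 10, 2, 30), PACIFIC))
```

Converting to UTC before doing any arithmetic is the design choice that keeps the interval calculation honest; scheduling in UTC avoids skipped or repeated local times, at the cost of jobs shifting by an hour in local time twice a year.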