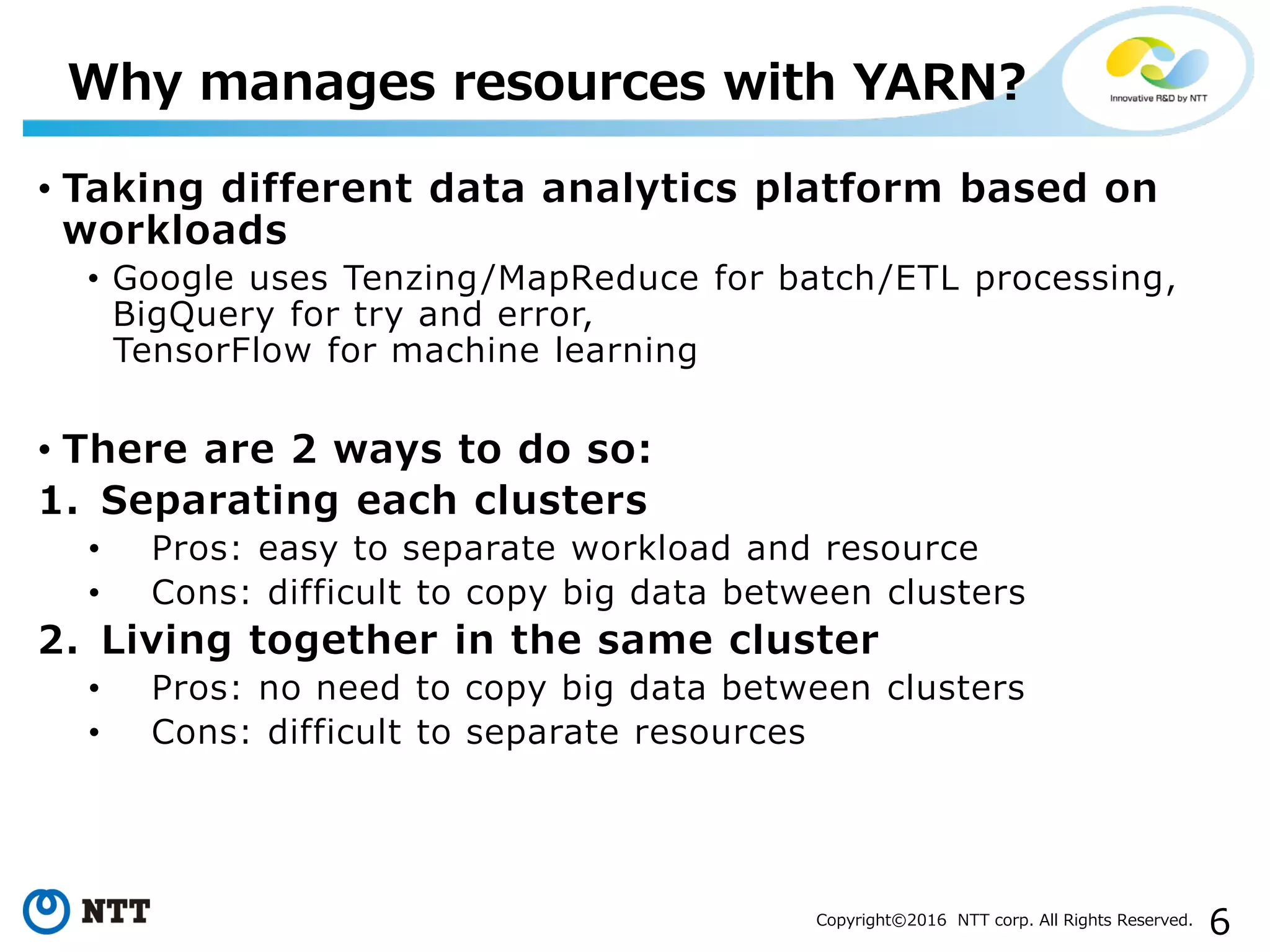

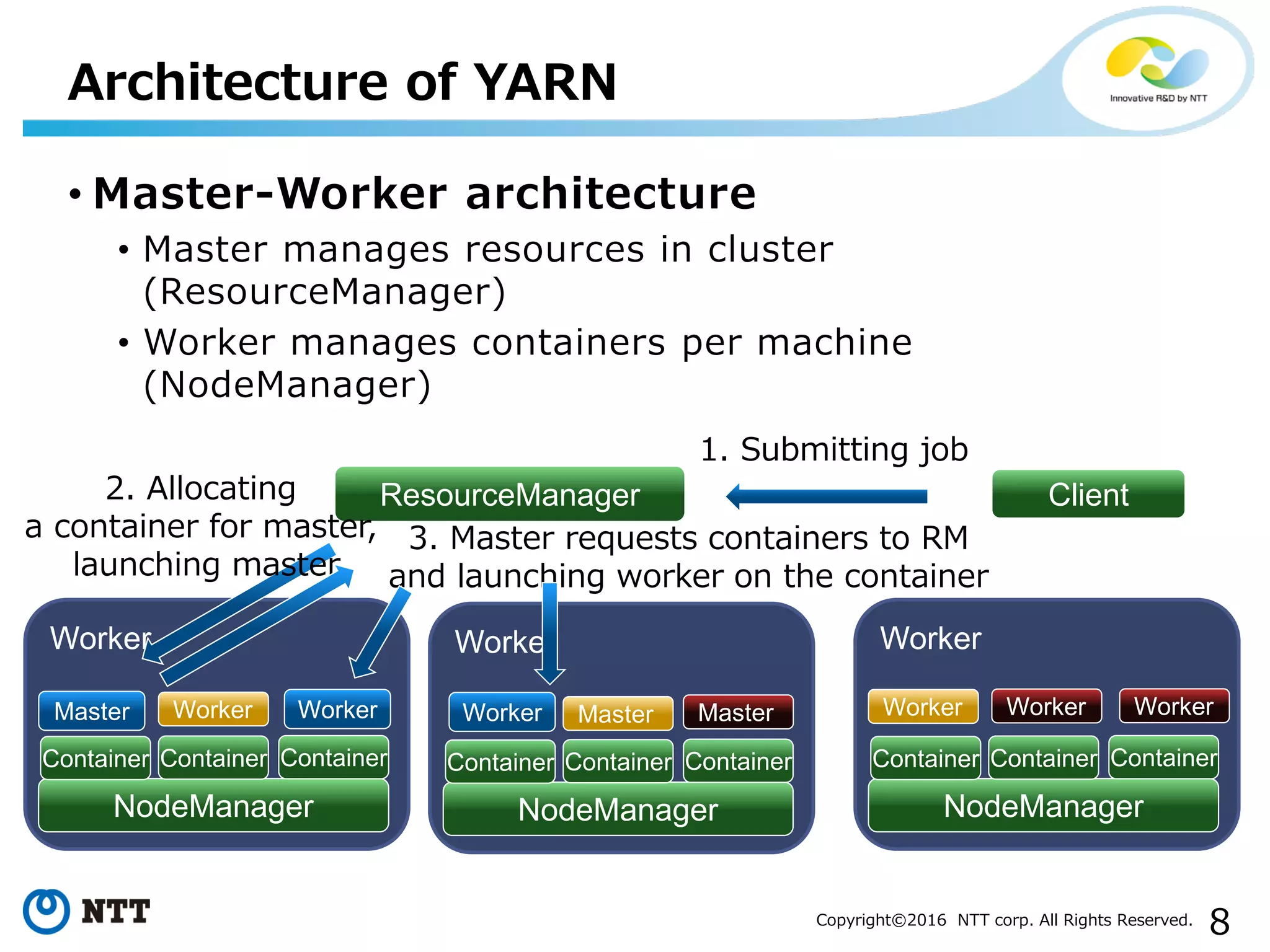

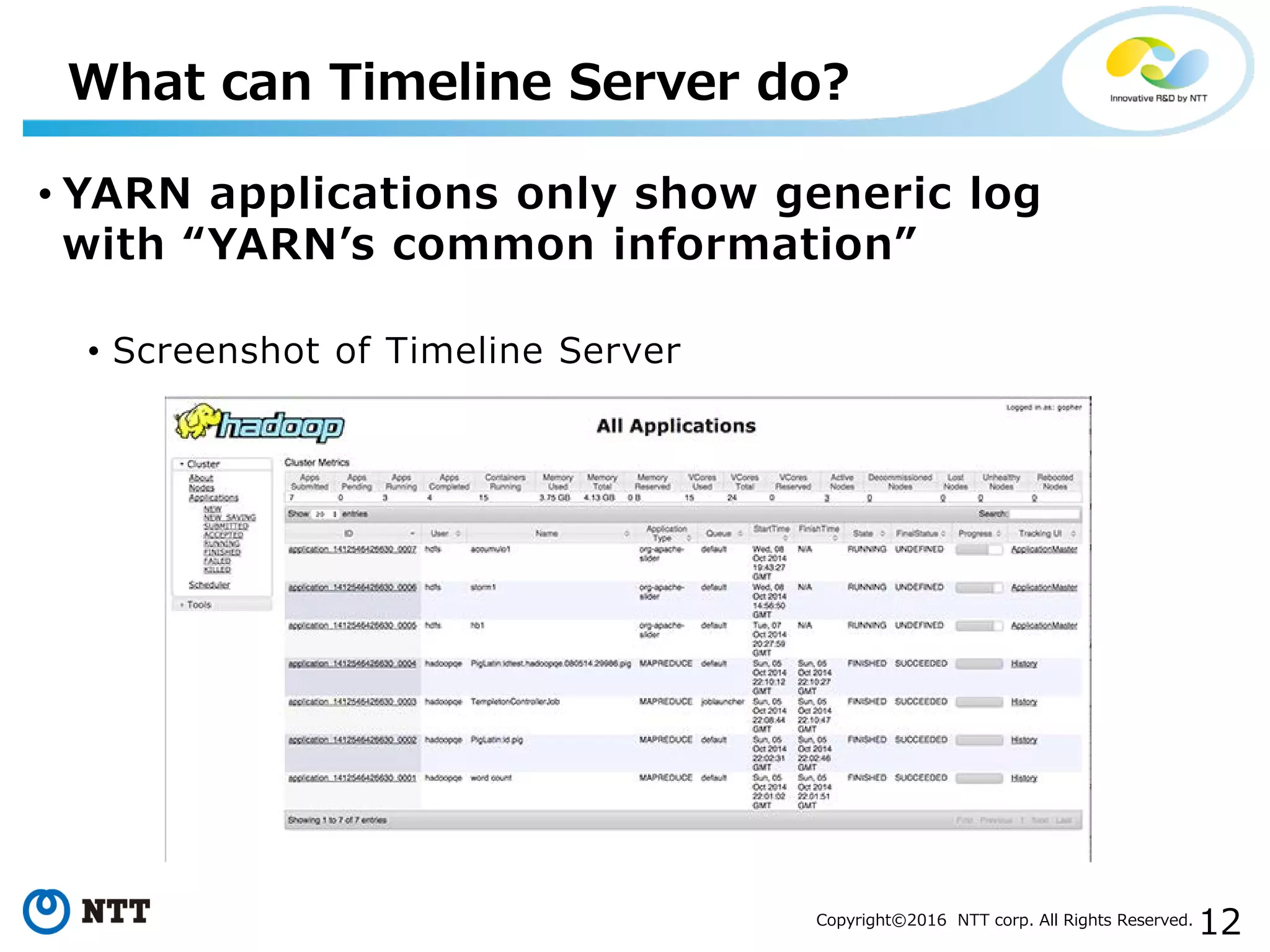

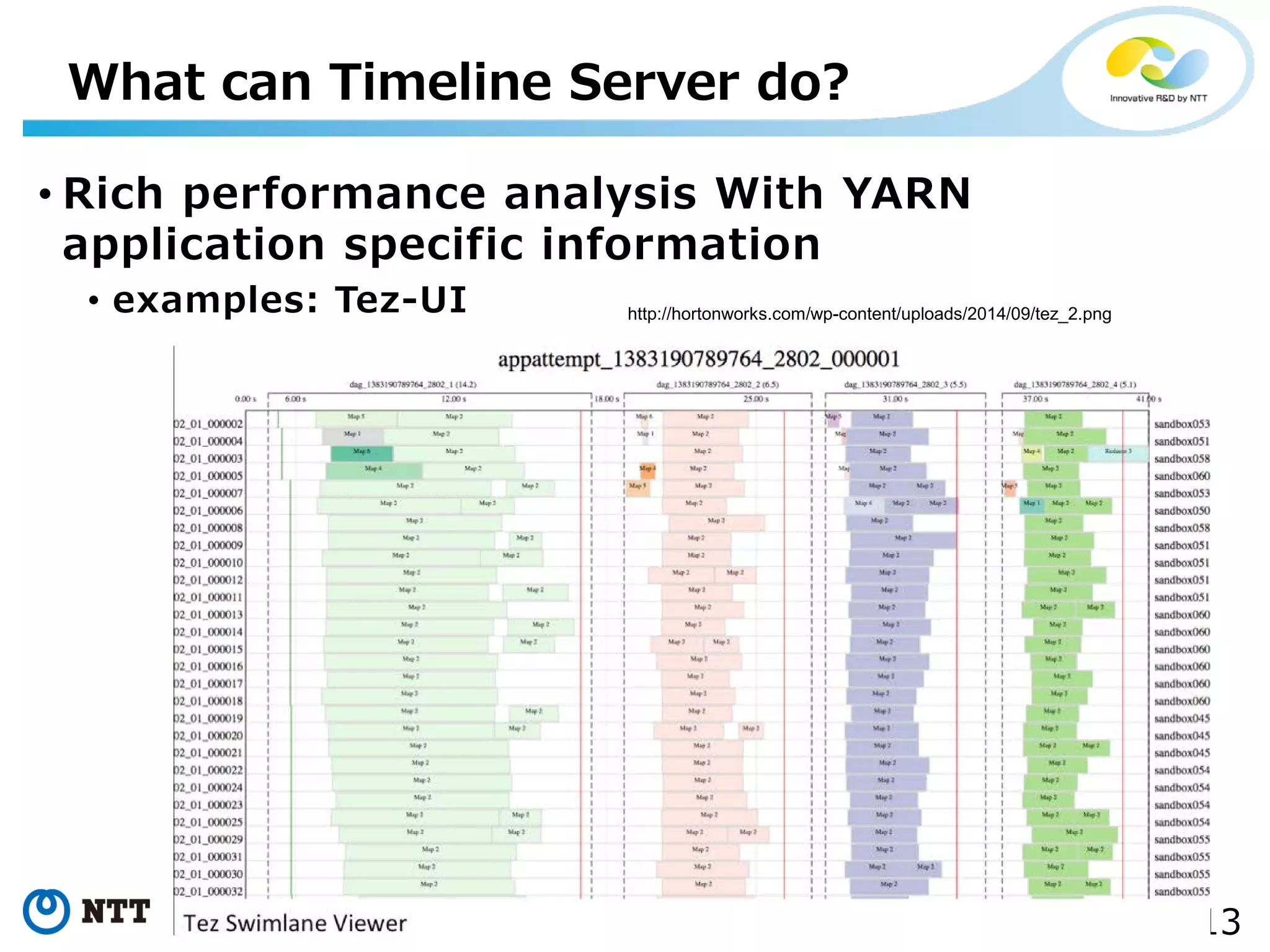

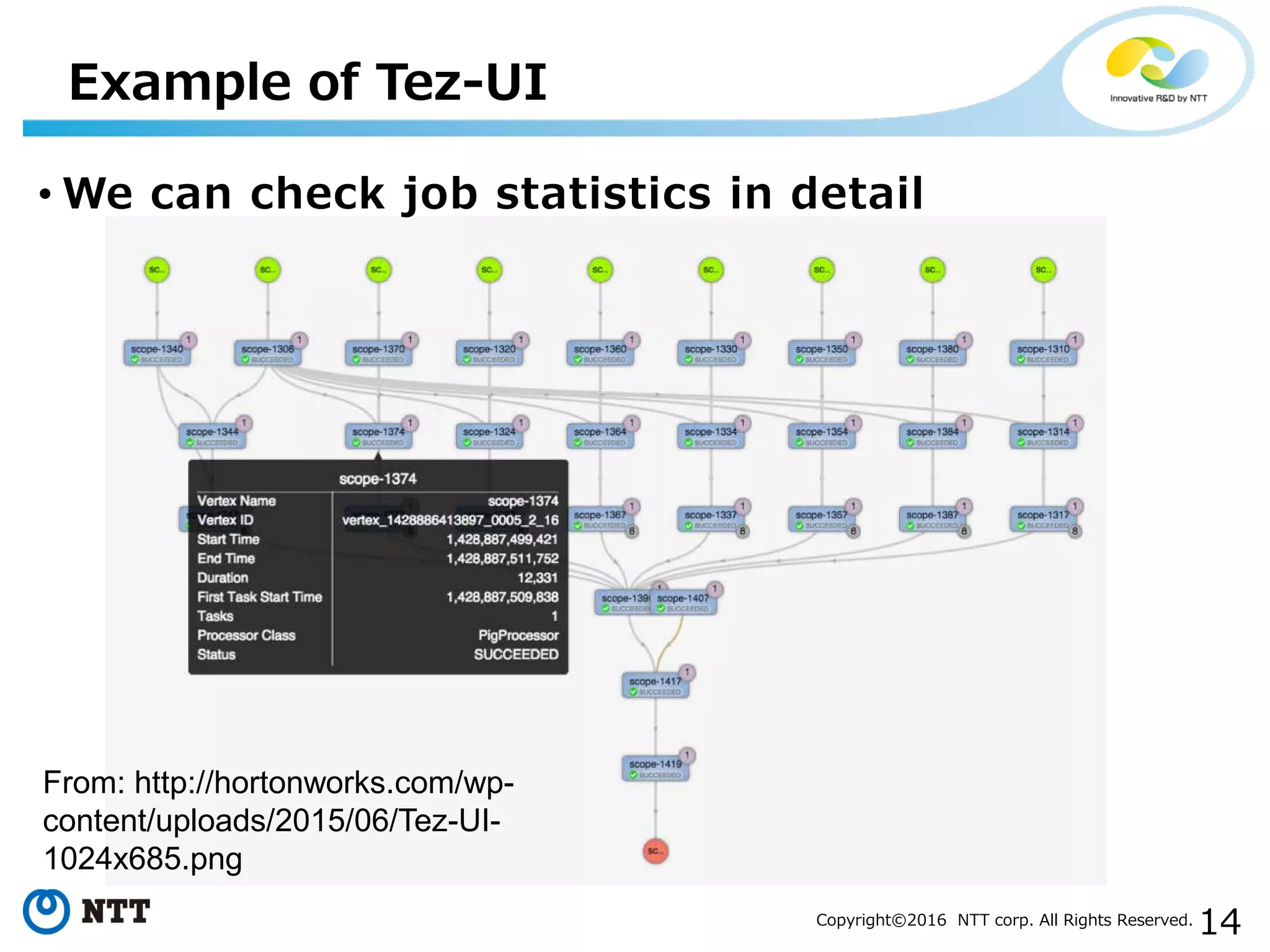

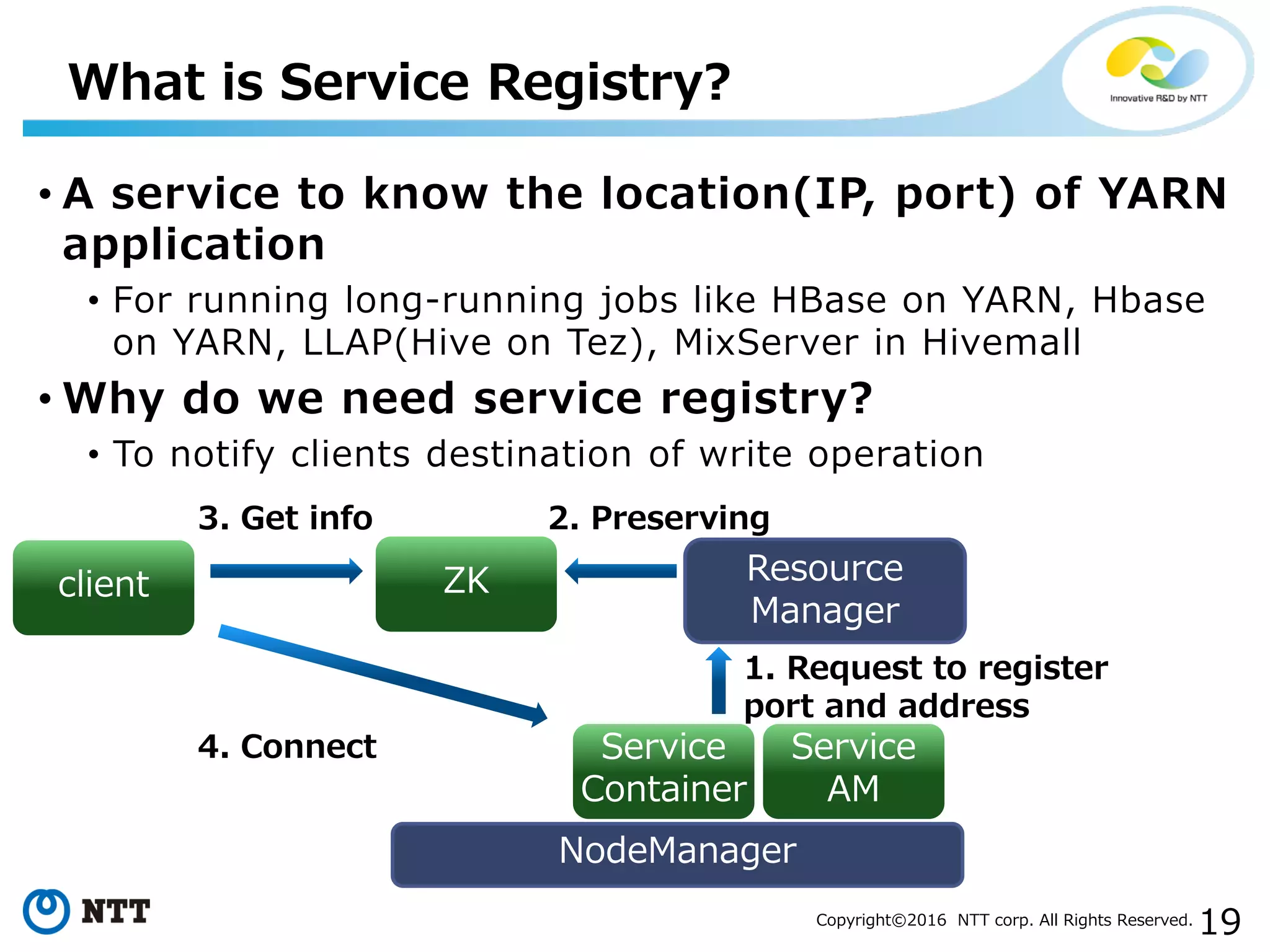

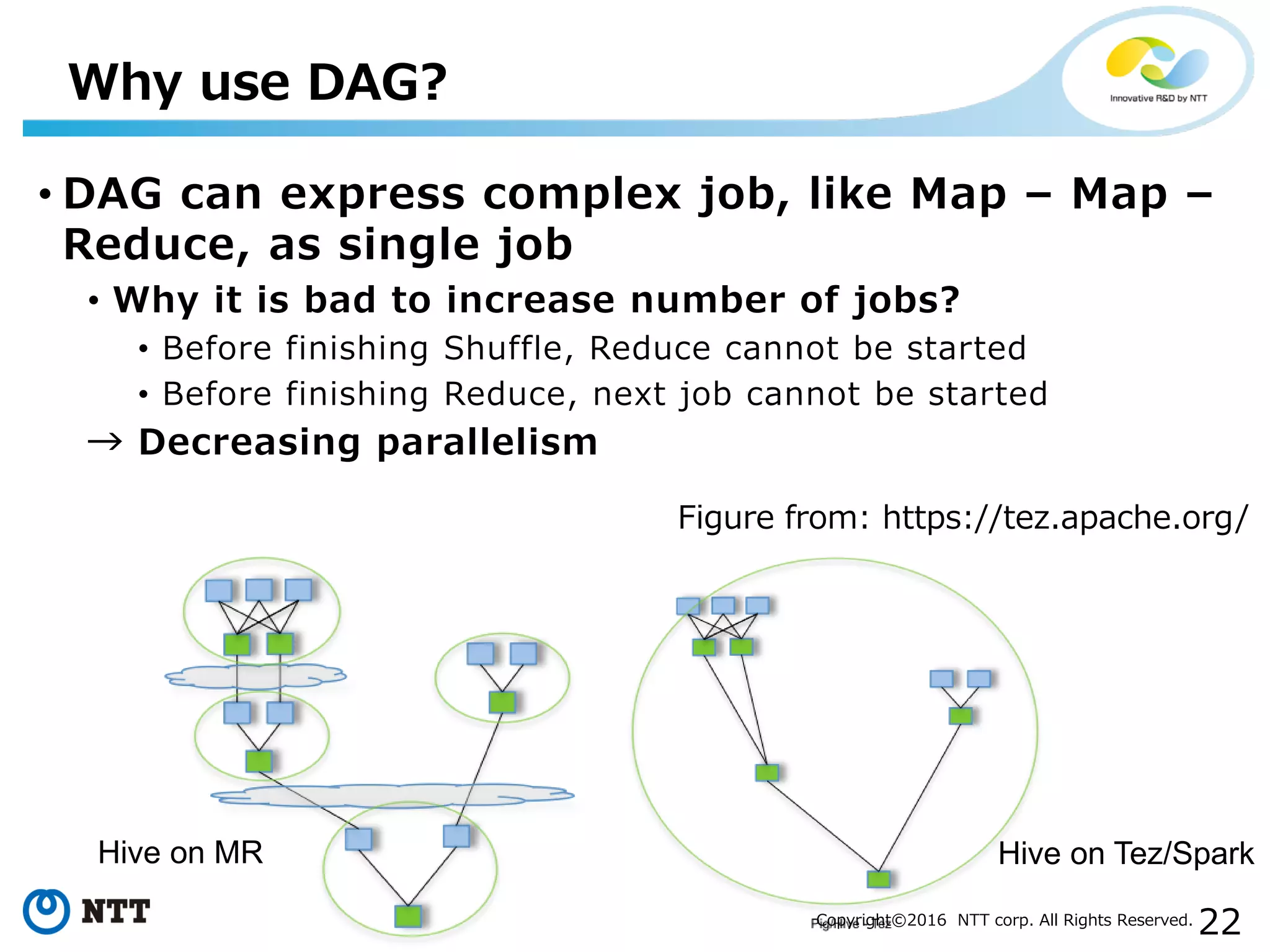

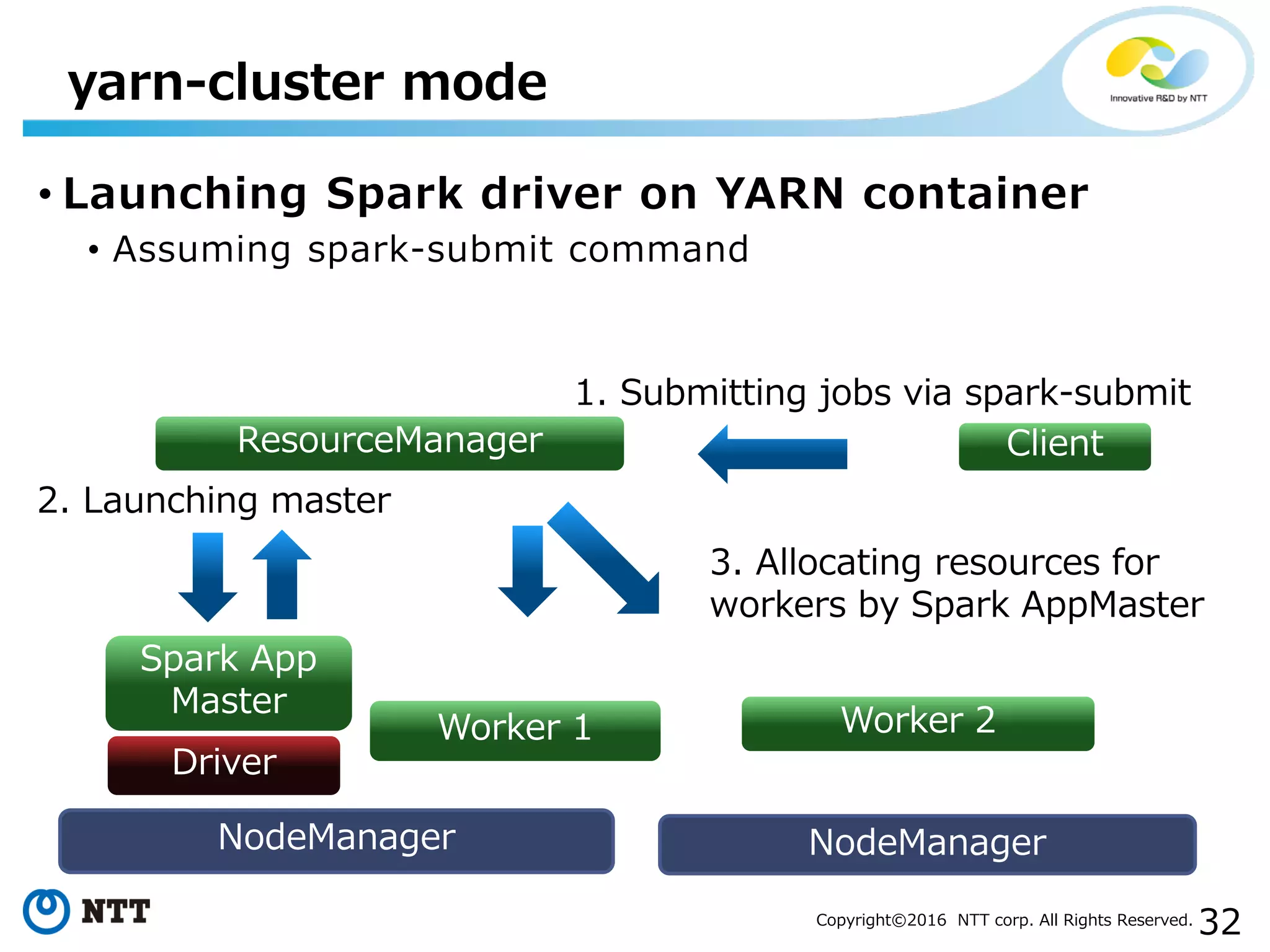

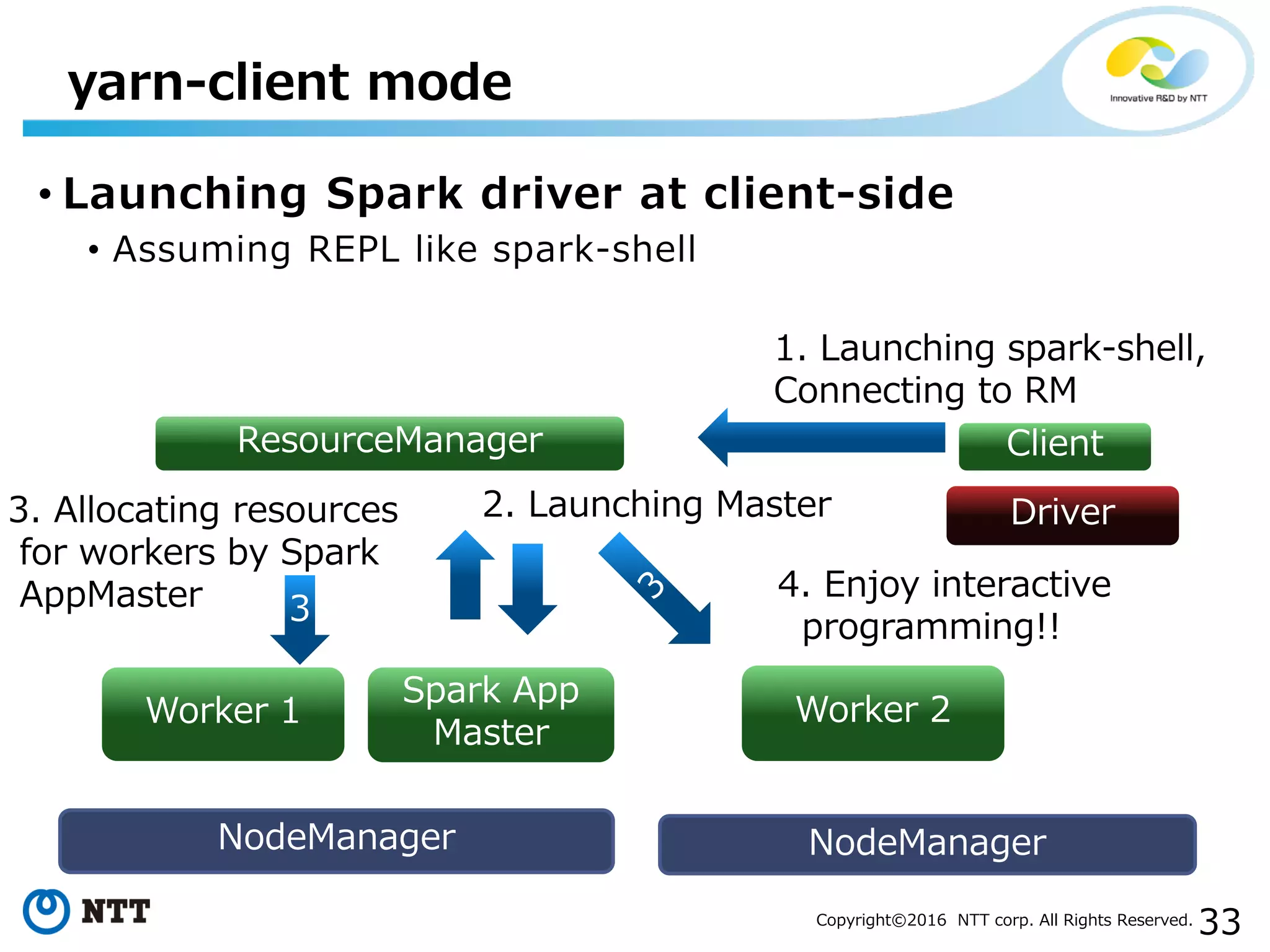

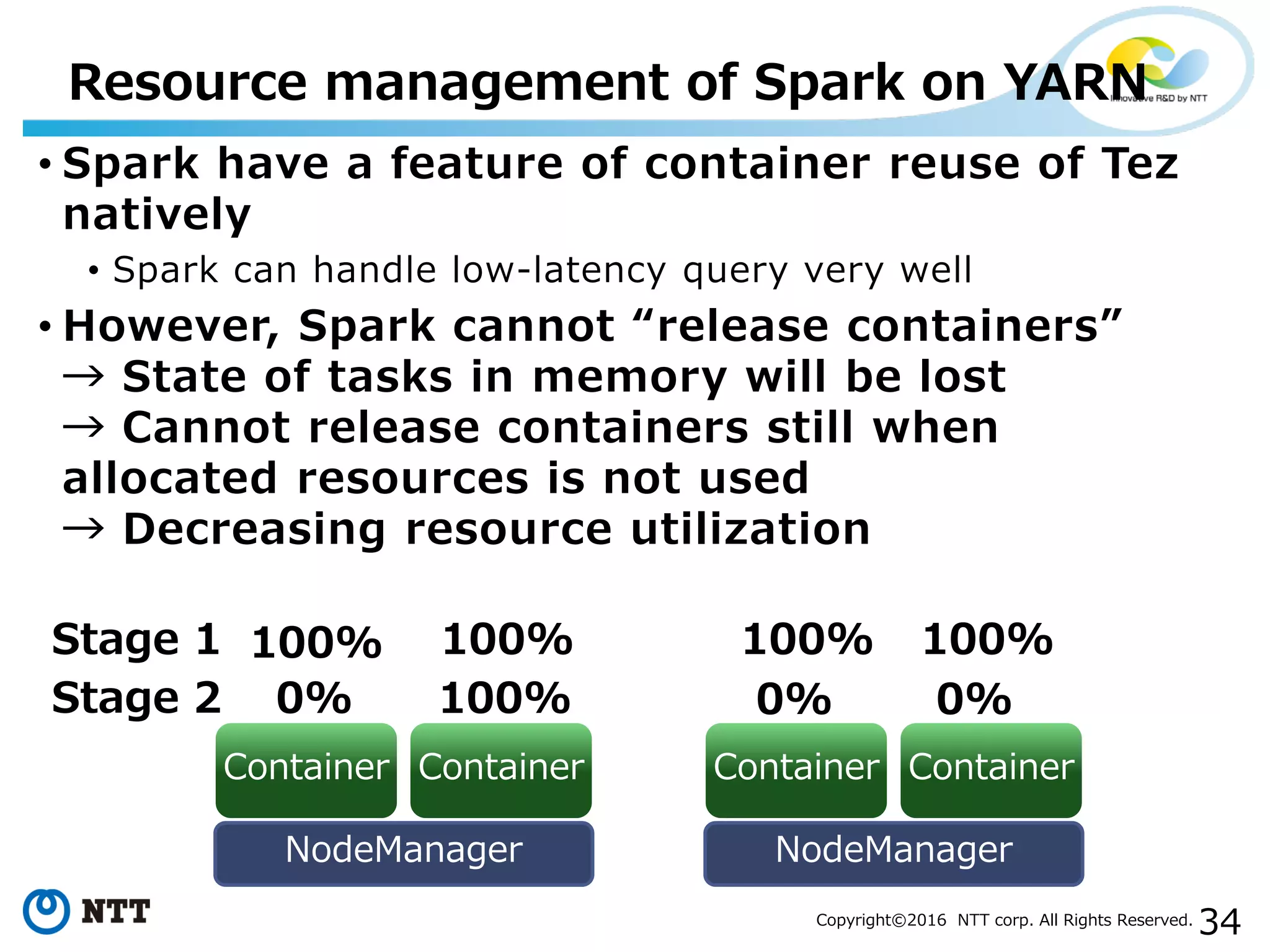

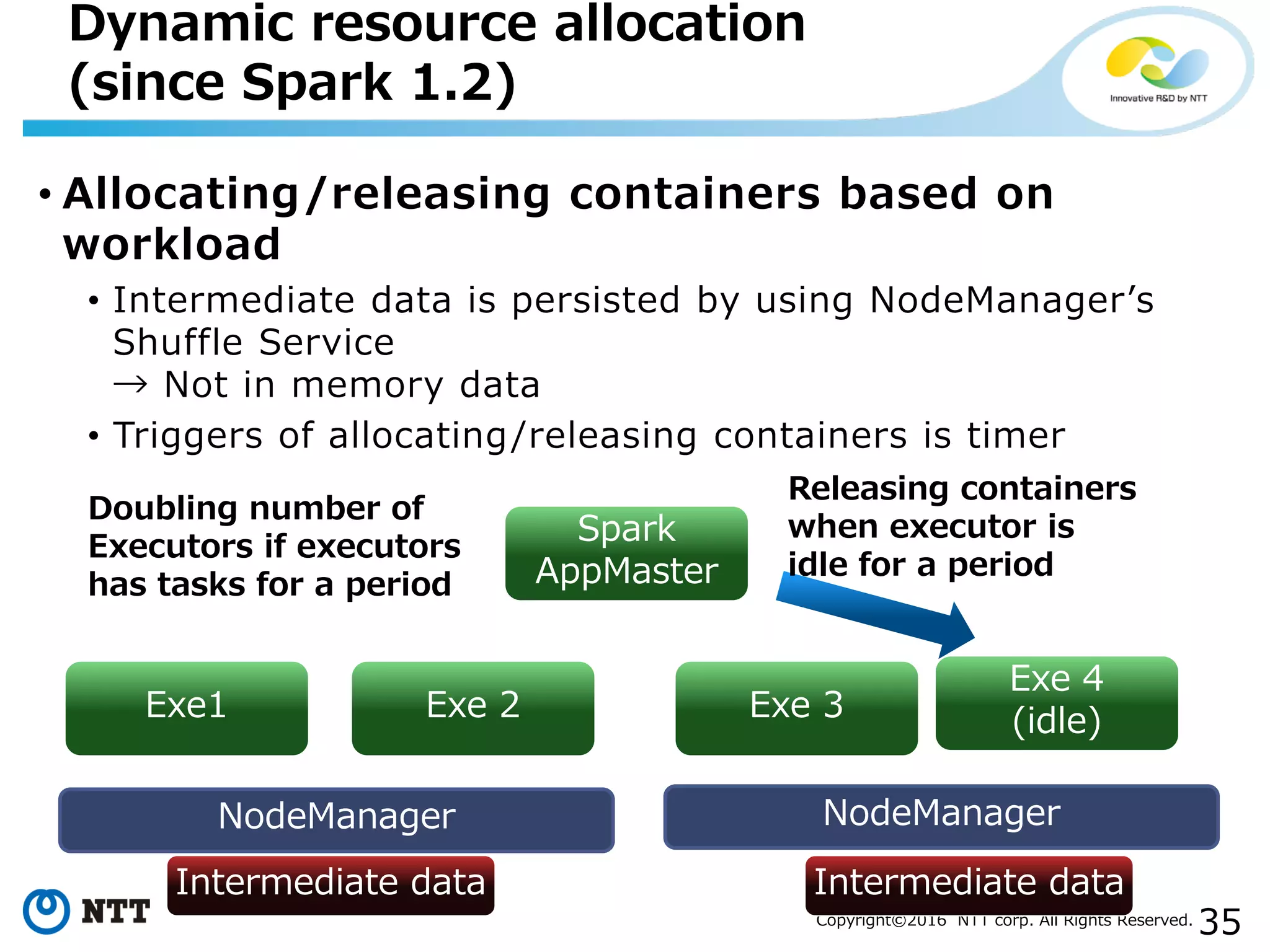

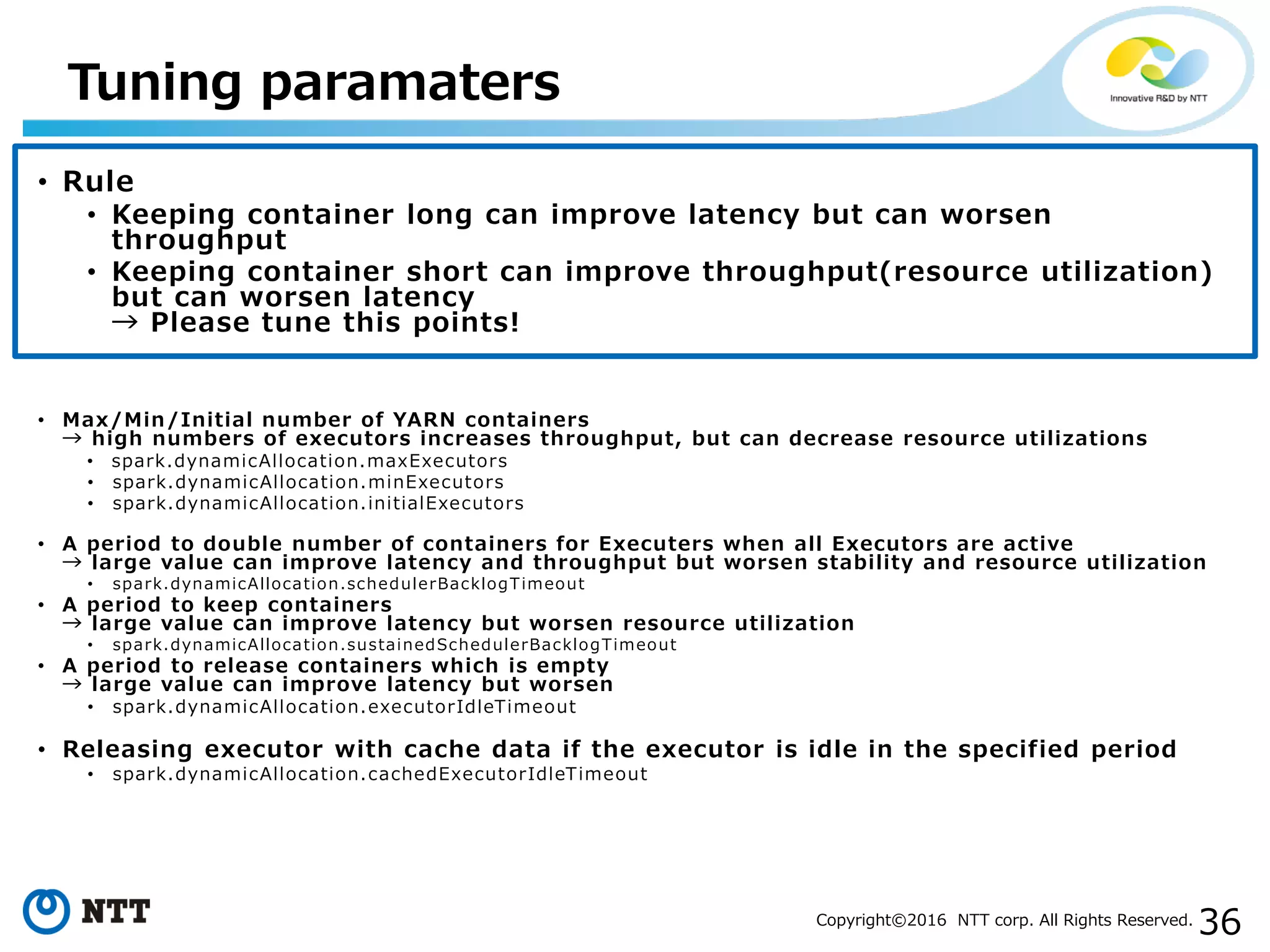

The document discusses YARN, the resource manager for Apache Hadoop. It gives an overview of YARN and three of its key features: (1) managing cluster resources, (2) managing application history logs, and (3) a service registry mechanism. It then describes how distributed processing frameworks such as Tez and Spark run on YARN, focusing on their directed acyclic graph (DAG) execution models and on techniques for improving performance on YARN, such as container reuse.
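To make the DAG model and container reuse concrete, here is a minimal toy sketch in Python. It is not Tez, Spark, or YARN code: the vertex names, task counts, and the functions `topo_order` and `containers_launched` are all hypothetical. It assumes vertices run one at a time in dependency order and that every container is interchangeable, which ignores locality, container sizing, and idle timeouts that a real scheduler would have to consider.

```python
from collections import deque


def topo_order(dag):
    """Return the vertices of a DAG in dependency order (Kahn's algorithm).

    `dag` maps each vertex to the list of vertices that consume its output.
    """
    indegree = {v: 0 for v in dag}
    for downstream in dag.values():
        for v in downstream:
            indegree[v] += 1
    # Start with vertices that have no inputs; sort for a deterministic order.
    ready = deque(sorted(v for v, d in indegree.items() if d == 0))
    order = []
    while ready:
        v = ready.popleft()
        order.append(v)
        for w in dag[v]:
            indegree[w] -= 1
            if indegree[w] == 0:
                ready.append(w)
    if len(order) != len(dag):
        raise ValueError("graph has a cycle, so it is not a DAG")
    return order


def containers_launched(dag, tasks_per_vertex, reuse):
    """Count container launches when vertices run one after another.

    Without reuse, every task launches a fresh container.
    With reuse, containers freed by a finished vertex go back to an idle
    pool and are handed to the next vertex before any new one is launched.
    """
    idle_pool = 0
    launched = 0
    for v in topo_order(dag):
        need = tasks_per_vertex[v]
        if reuse:
            new = max(0, need - idle_pool)  # launch only what the pool lacks
            launched += new
            idle_pool += new  # all containers return to the pool afterwards
        else:
            launched += need
    return launched


# Hypothetical four-vertex job: two inputs feed a join, then an aggregate.
dag = {
    "map": ["join"],
    "filter": ["join"],
    "join": ["aggregate"],
    "aggregate": [],
}
tasks = {"map": 4, "filter": 2, "join": 3, "aggregate": 1}

without_reuse = containers_launched(dag, tasks, reuse=False)
with_reuse = containers_launched(dag, tasks, reuse=True)
print(topo_order(dag), without_reuse, with_reuse)
```

Under these assumptions the job launches 10 containers without reuse and 4 with reuse, which illustrates why avoiding repeated container allocation and startup can matter for jobs made of many short tasks.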