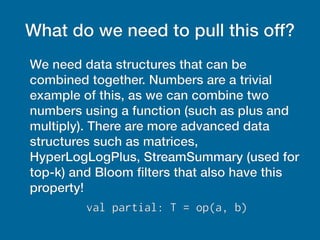

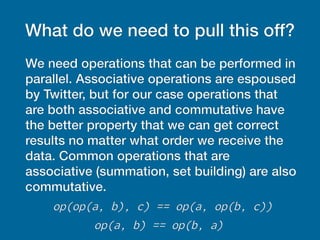

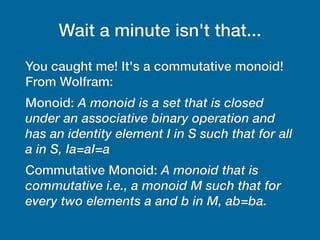

This document discusses techniques for pre-computing aggregations over large datasets using associative and commutative functions. It describes how to map input data to combinable structures, reduce the structures using associative operations, and store the results in Accumulo in a way that allows efficient querying. Examples are given of counting occurrences and finding top-k relationships between fields in the data. Considerations for more complex aggregations, security, and querying capabilities are also outlined.

![What does our Accumulo Iterator

look like?

●

We can re-use Accumulo's Combiner type here:

override def reduce:(key: Key, values:

Iterator[Value]) Value = {

// deserialize and combine all intermediate

// values. This logic should be identical to

// what is in the mr.Combiner and Reducer

}

●

Our function has to be commutative because major

compactions will often pick smaller files to combine,

which means we only see discrete subsets of data in an

iterator invocation](https://image.slidesharecdn.com/xxxtremeaggregation-140926162102-phpapp01/85/Xxx-treme-aggregation-10-320.jpg)

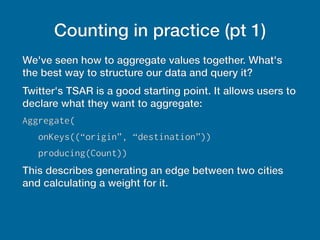

![Counting in practice (pt 2)

With that declaration, we can infer that the user wants their

operation to be summing over each instance of a given

pairing, so we can say the base value is 1 (sounds a bit like

word count, huh?). We need a key for each base value and

partial computation to be reduced with. For this simple

pairing we can have a schema like:

<field_1>0<value_1>0...<field_n>0<value_n> count: “”

[] <serialized long>

I recently traveled from Baltimore to Denver. Here's what that

trip would look like:

origin0bwi0destination0dia count: “” [] x01](https://image.slidesharecdn.com/xxxtremeaggregation-140926162102-phpapp01/85/Xxx-treme-aggregation-12-320.jpg)

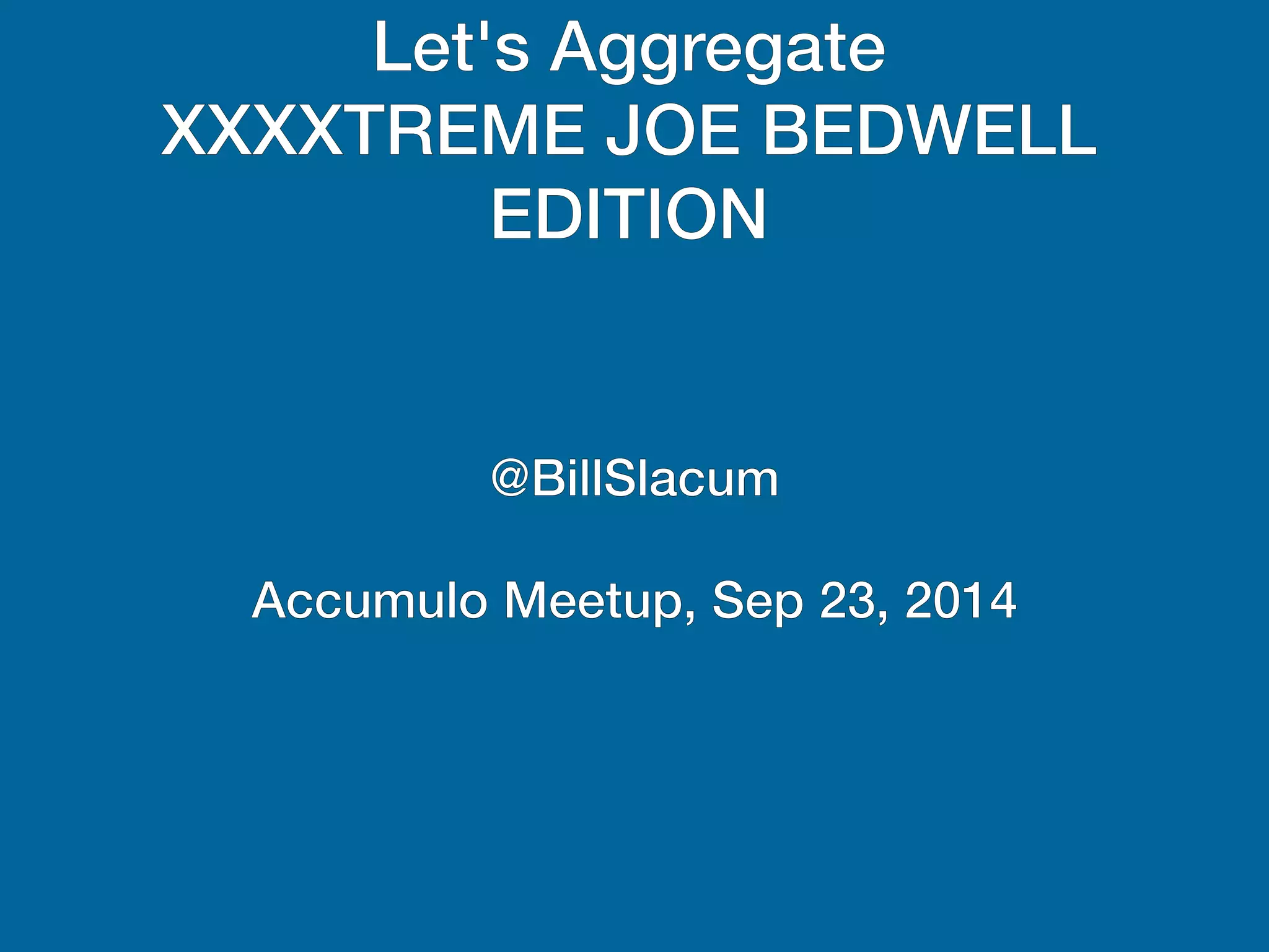

![How you like me now?

● Aggregate(

onKeys((“origin”))

producing(TopK(“destination”)))

● <field1>0<value1>0...<fieldN>0<valueN> <op>:

<relation> [] <serialized data structure>

●

Let's use my Baltimore->Denver trip as an

example:

origin0BWI topk: destination [] {“DIA”: 1}](https://image.slidesharecdn.com/xxxtremeaggregation-140926162102-phpapp01/85/Xxx-treme-aggregation-15-320.jpg)