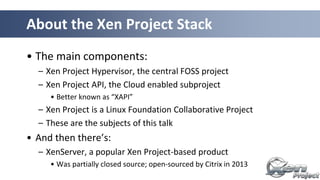

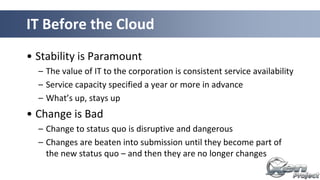

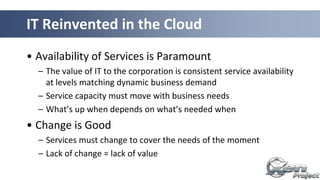

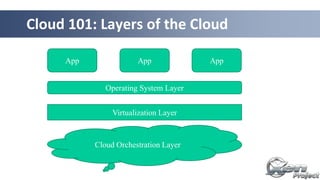

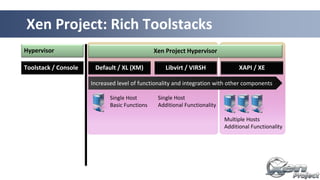

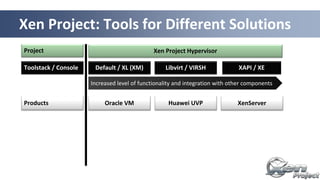

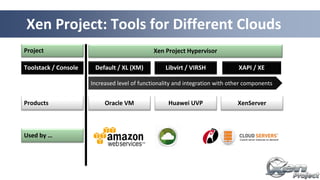

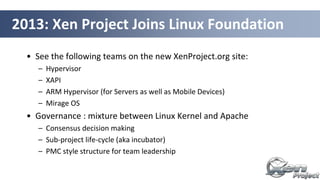

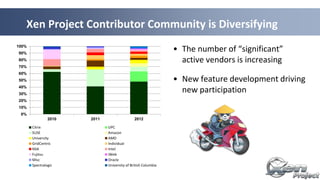

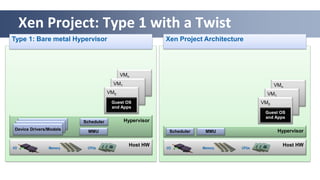

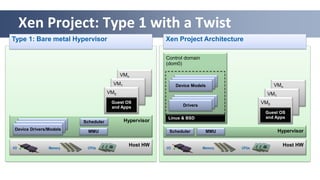

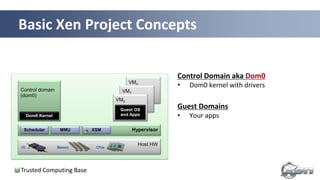

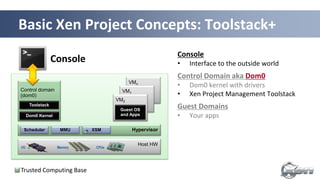

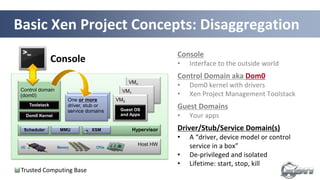

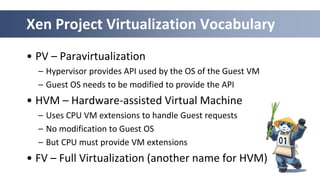

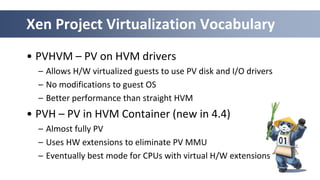

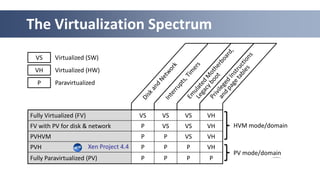

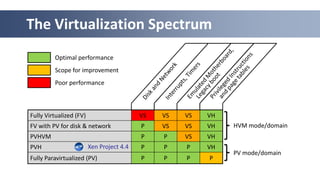

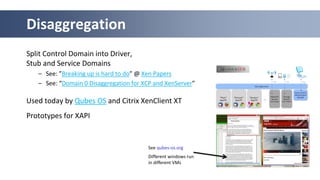

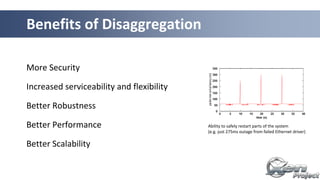

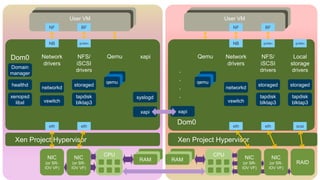

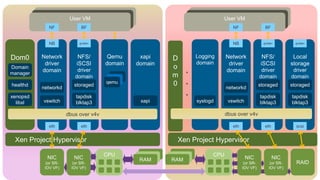

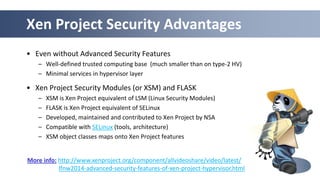

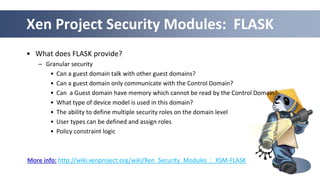

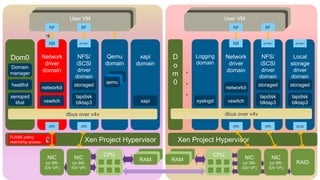

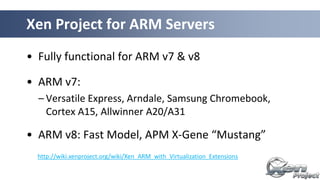

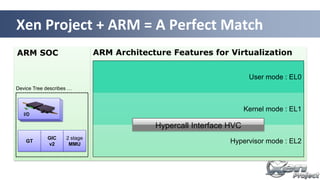

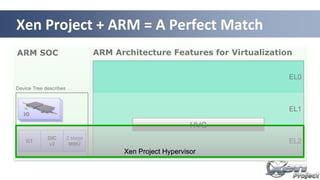

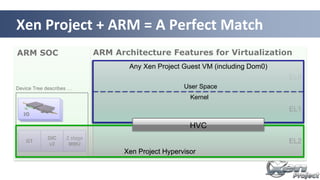

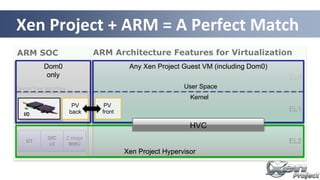

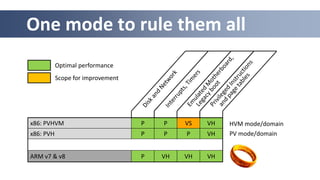

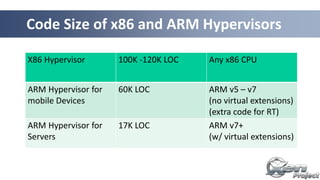

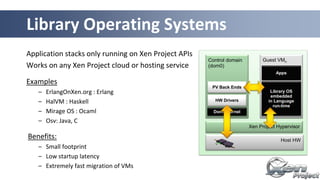

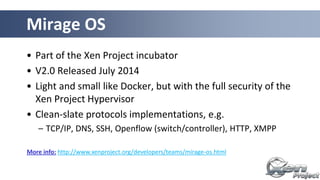

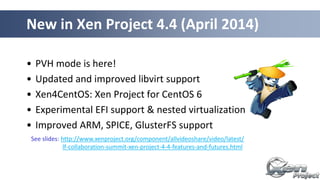

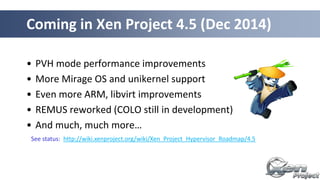

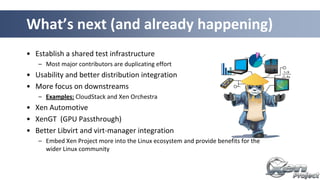

Russell Pavlicek, an evangelist for the Xen Project, walks through the project's components, including the hypervisor and its API, and stresses the importance of stability and adaptability in cloud services. The Xen Project is a collaborative effort hosted by the Linux Foundation that focuses on security, configuration at scale, and multi-tenancy without vendor lock-in. Key features include several virtualization methods, disaggregation for increased security and performance, and support for the ARM architecture. The talk also points to a growing community and potential for further innovation.