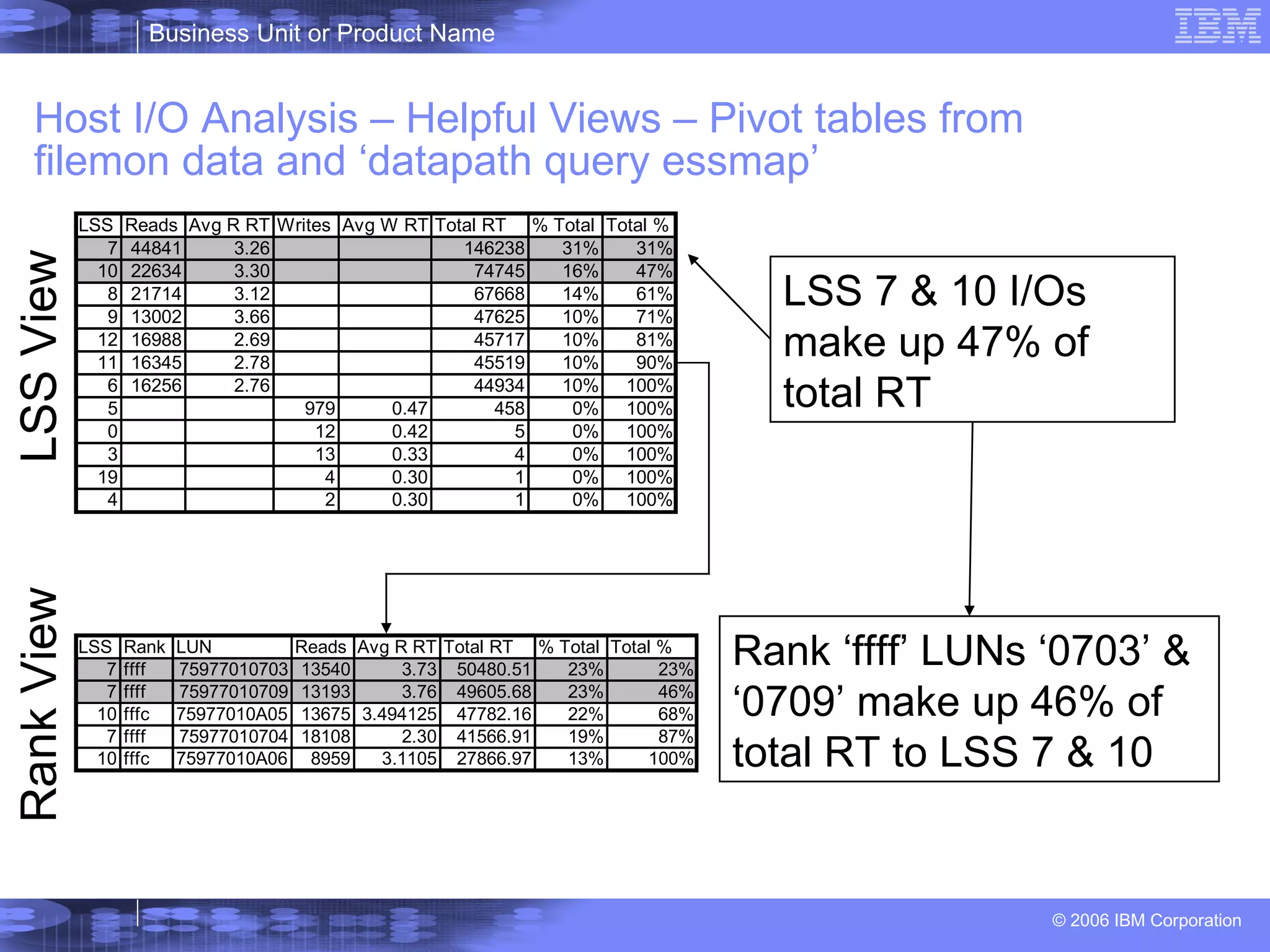

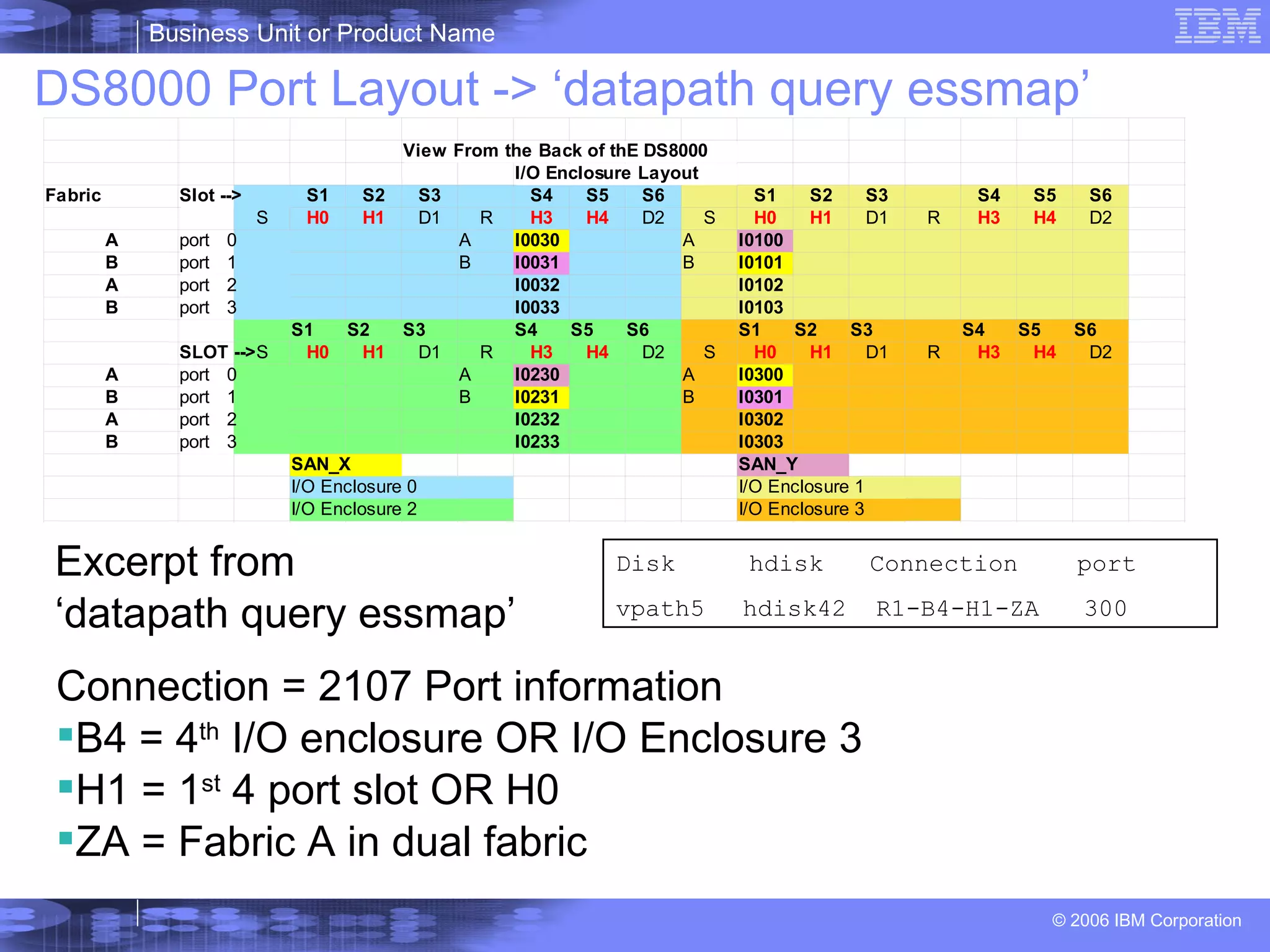

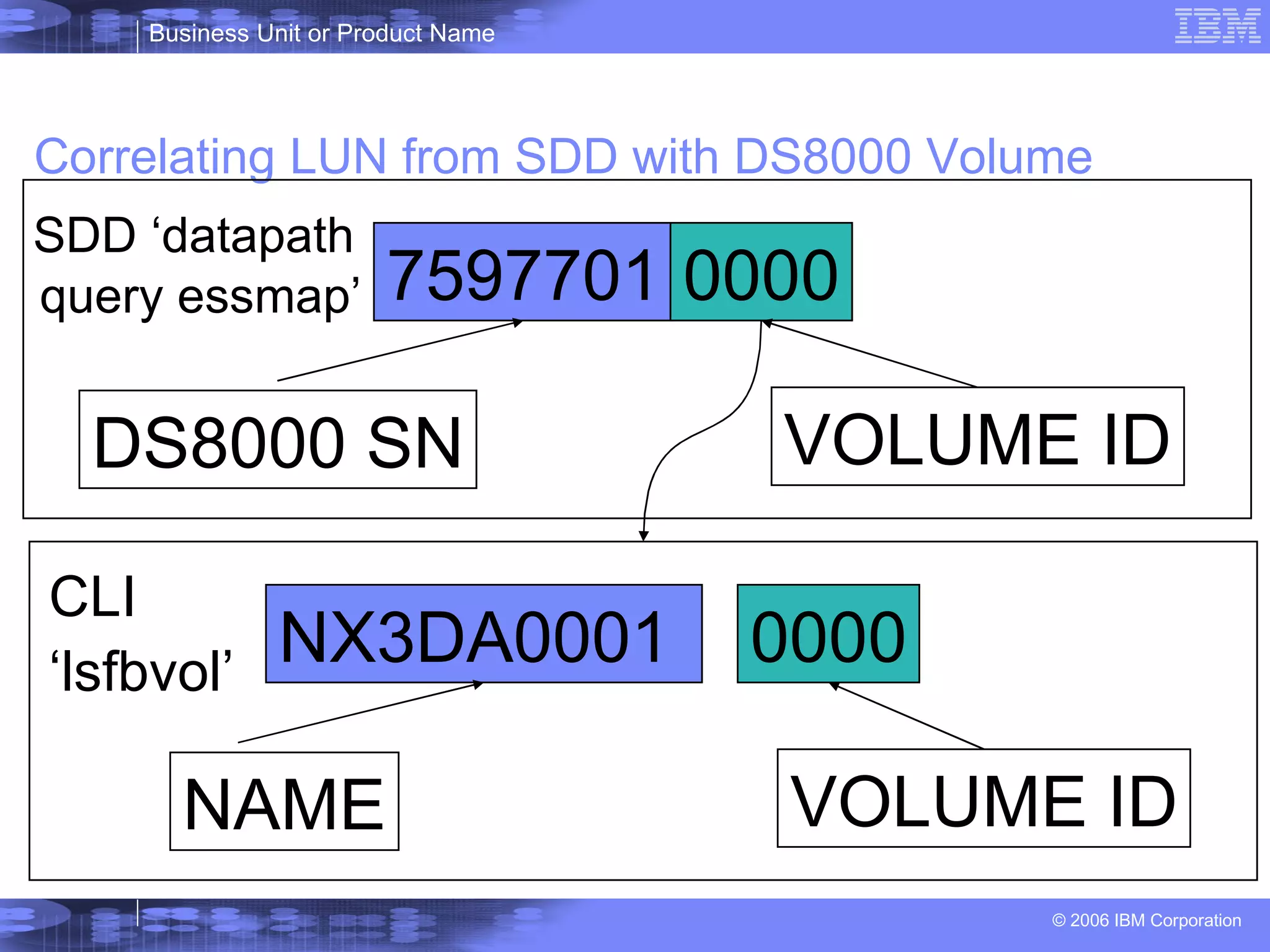

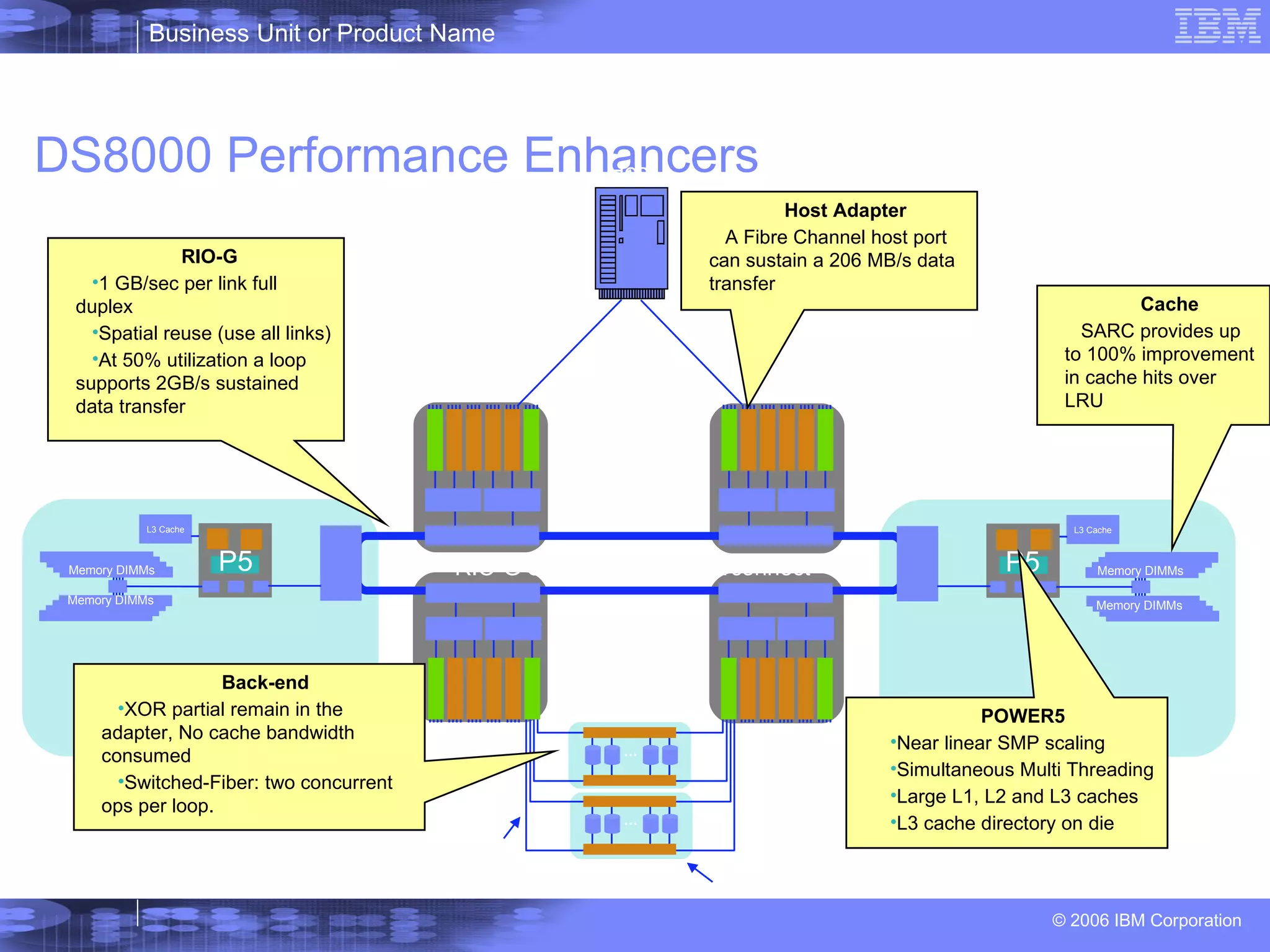

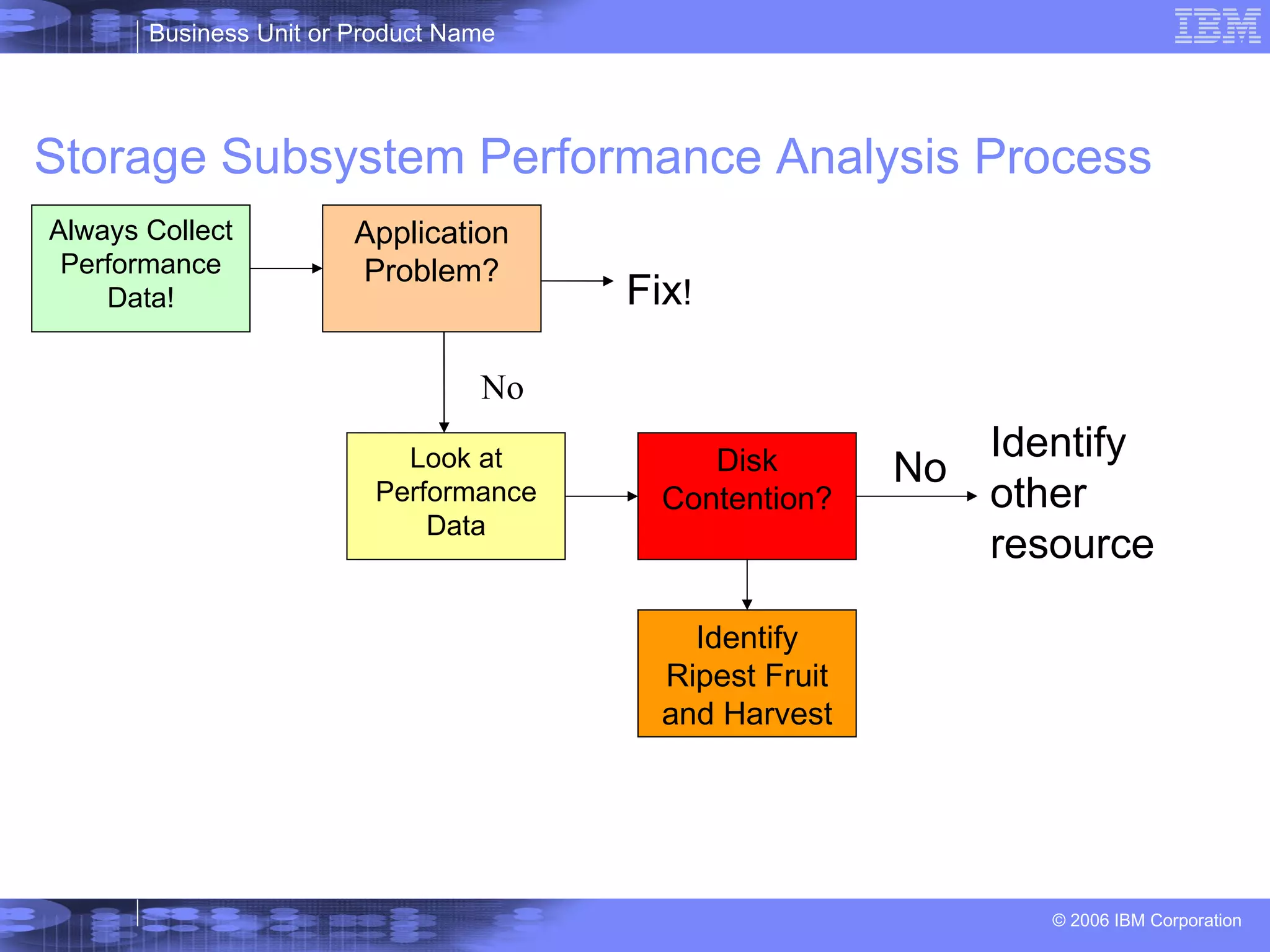

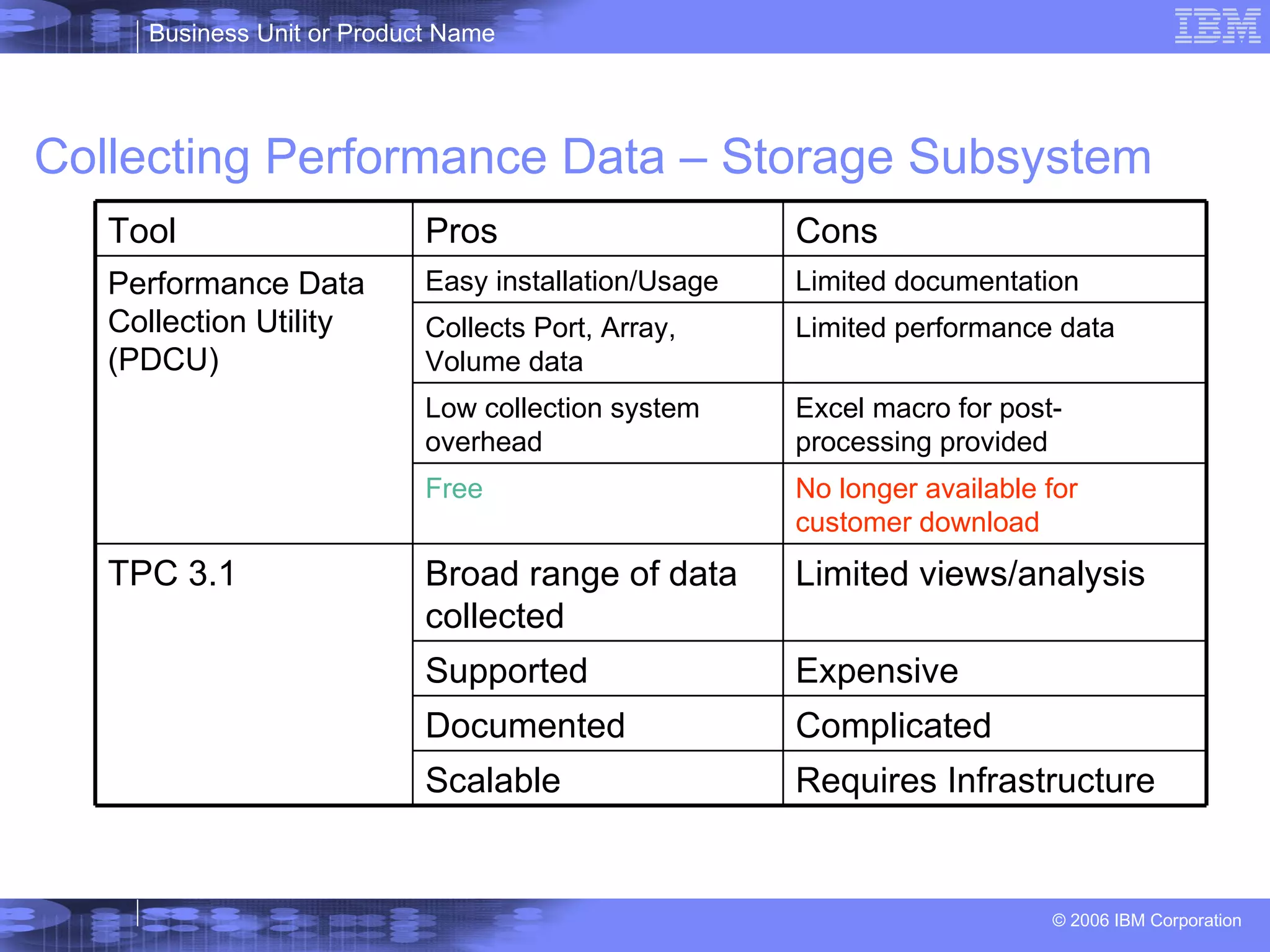

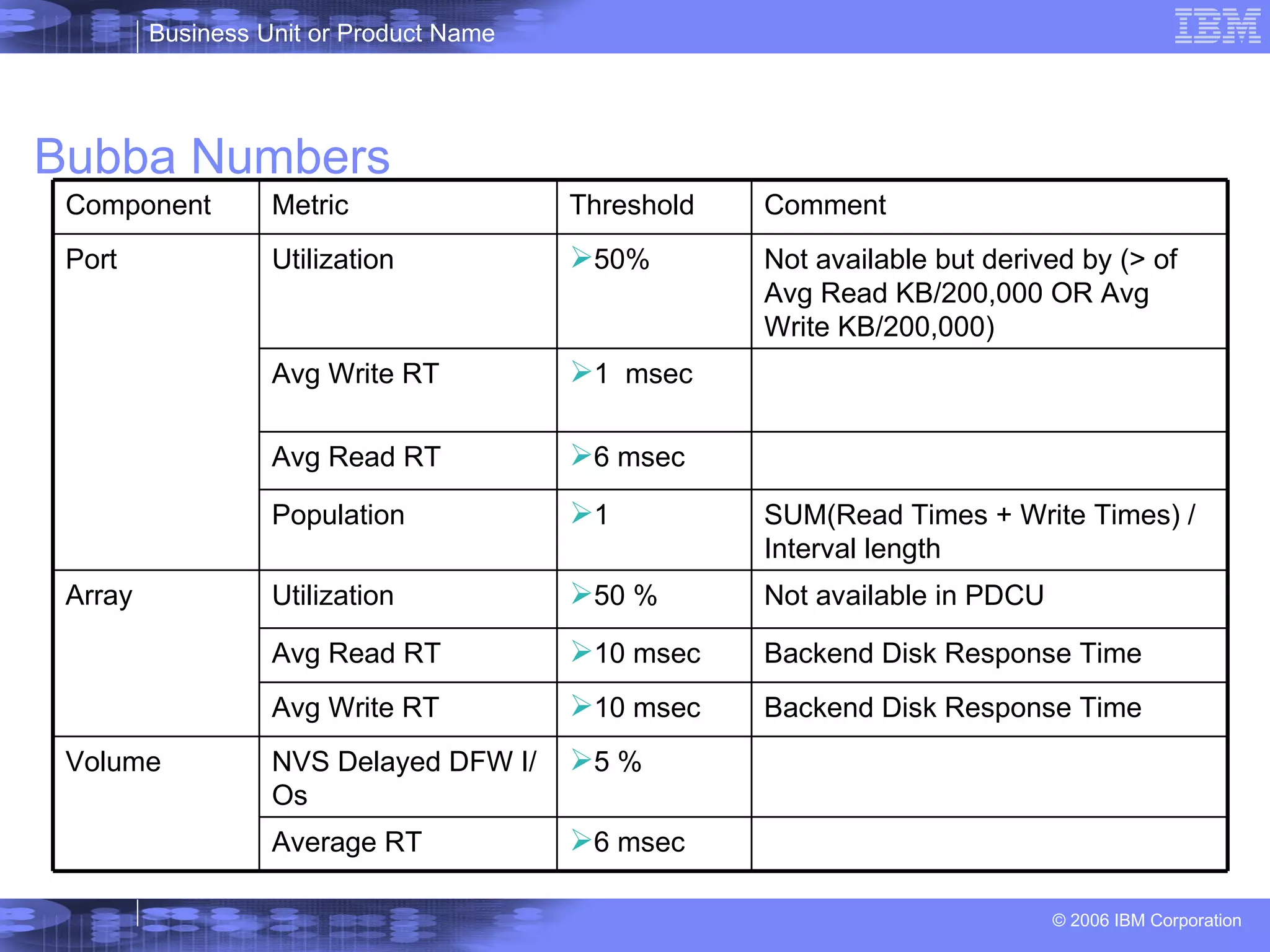

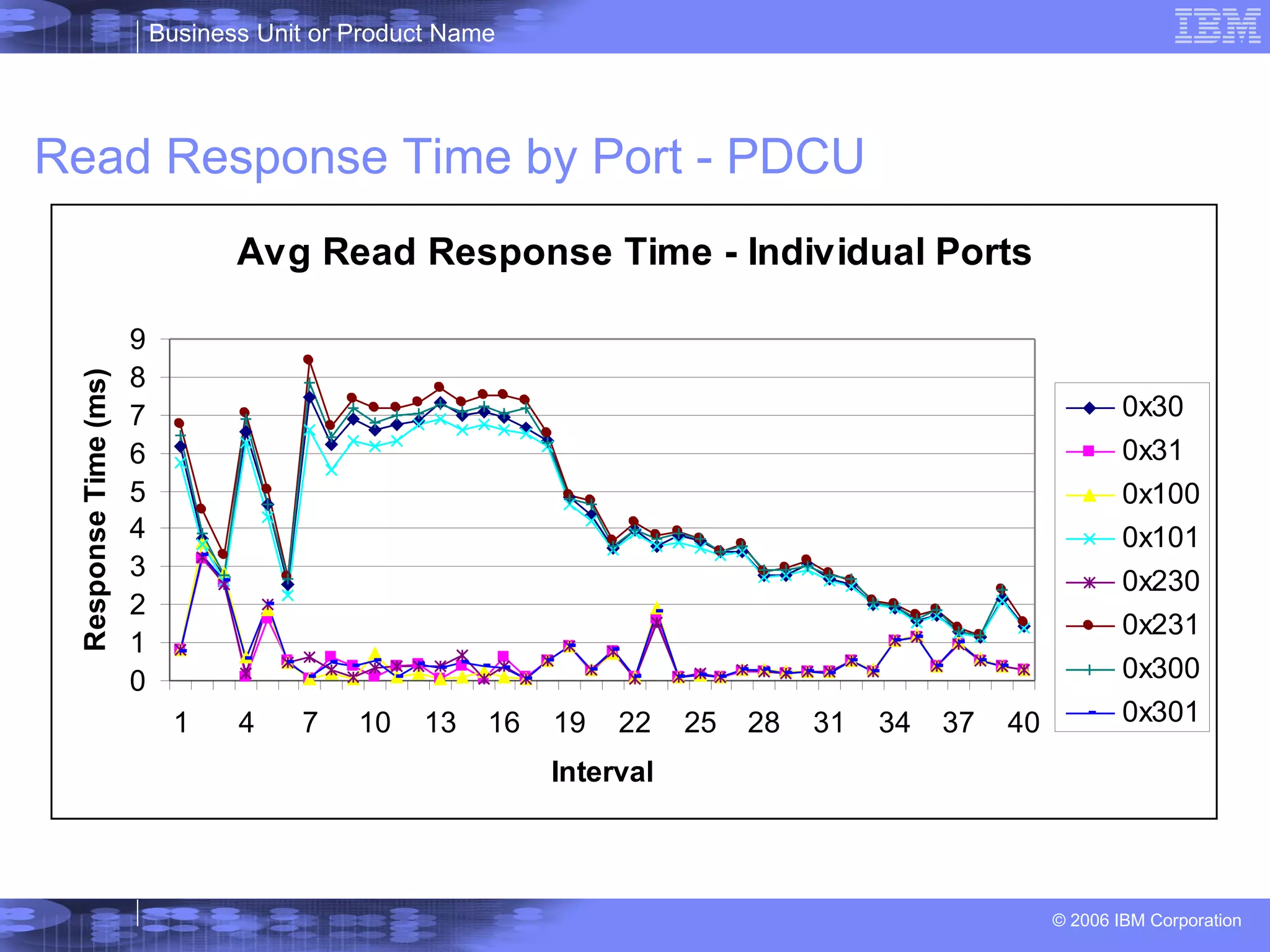

The document provides an overview and agenda for a 5-day training course on performance analysis of IBM DS8000 storage subsystems. The course covers a hardware overview, performance implications, the Disk Magic performance modeling tool, performance analysis techniques and common metrics, and the features that enhance DS8000 performance, such as memory, cache, disks, and host adapters.

![Host I/O Analysis - Example of AIX Server](https://image.slidesharecdn.com/ds8000practicalperformanceanalysisp0420060718-12674563198092-phpapp01/75/Ds8000-Practical-Performance-Analysis-P04-20060718-22-2048.jpg)

Host I/O Analysis - Example of AIX Server

Gather LUN -> hdisk information (see Appendix A):

| Disk | Path | P Location | Adapter | LUN SN | Type |
|---|---|---|---|---|---|
| vpath197 | hdisk42 | 09-08-01[FC] | fscsi0 | 75977014E01 | IBM 2107-900 |

Gather response time data with `filemon` (see Appendix B). Excerpt from the Detailed Physical Volume Stats (512 byte blocks):

- VOLUME: /dev/hdisk42, description: IBM FC 2107
- reads: 1723 (0 errs)

| Metric | avg | min | max | sdev |
|---|---|---|---|---|
| read sizes (blks) | 180.9 | 8 | 512 | 151.0 |
| read times (msec) | 4.058 | 0.163 | 39.335 | 4.284 |

Format the data (email me for the filemon-DS8000map.pl script):

| AVG_READ_KB | READ_TIMES | #READS | HDISK | LUN | DS8000 | SERVER | TIME | DATE |
|---|---|---|---|---|---|---|---|---|
| 91.8 | 2.868 | 1978 | hdisk1278 | 75977010604 | 7597701 | test1 | 18:04:05 | May/30/2006 |
| 93.3 | 3.832 | 1605 | hdisk42 | 75977014E01 | 7597701 | test1 | 18:04:05 | May/30/2006 |

Note: The formatted data can be used in Excel pivot tables to perform top-down examination of I/O subsystem performance.
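The formatting step on this slide relies on the author's filemon-DS8000map.pl script, which is not reproduced here. As a rough illustration of the idea only, the following Python sketch parses a `filemon` physical volume excerpt and attaches the LUN serial number from the hdisk mapping. The function name `parse_filemon`, the dictionary keys, and the exact whitespace layout of the sample are assumptions made for this example; real `filemon` output may need looser patterns.

```python
import re

# Sample text modeled on the filemon excerpt from the slide.
# The exact spacing is an assumption; real output may differ.
FILEMON_SAMPLE = """\
VOLUME: /dev/hdisk42  description: IBM FC 2107
reads:                  1723    (0 errs)
  read sizes (blks):    avg   180.9 min       8 max     512 sdev   151.0
  read times (msec):    avg   4.058 min   0.163 max  39.335 sdev   4.284
"""

# hdisk -> LUN serial number, as gathered in the LUN -> hdisk step.
LUN_MAP = {"hdisk42": "75977014E01"}

VOLUME_RE = re.compile(r"^VOLUME:\s+/dev/(\S+)")
READS_RE = re.compile(r"^\s*reads:\s+(\d+)")
SIZE_RE = re.compile(r"^\s*read sizes \(blks\):\s+avg\s+([\d.]+)")
TIME_RE = re.compile(r"^\s*read times \(msec\):\s+avg\s+([\d.]+)")


def parse_filemon(text, lun_map):
    """Return one dict per VOLUME section with read statistics and LUN."""
    rows = []
    current = None
    for line in text.splitlines():
        m = VOLUME_RE.match(line)
        if m:
            # A new VOLUME line starts a new record.
            hdisk = m.group(1)
            current = {"hdisk": hdisk, "lun": lun_map.get(hdisk, "unknown")}
            rows.append(current)
            continue
        if current is None:
            continue  # skip anything before the first VOLUME line
        if (m := READS_RE.match(line)):
            current["reads"] = int(m.group(1))
        elif (m := SIZE_RE.match(line)):
            # filemon reports sizes in 512-byte blocks; convert to KB.
            current["avg_read_kb"] = float(m.group(1)) * 512 / 1024
        elif (m := TIME_RE.match(line)):
            current["avg_read_ms"] = float(m.group(1))
    return rows


if __name__ == "__main__":
    for row in parse_filemon(FILEMON_SAMPLE, LUN_MAP):
        print(row)
```

Each returned row can be written out as one CSV line and loaded into a spreadsheet for pivot-table analysis. Because the sizes are in 512-byte blocks, an average read of 180.9 blocks corresponds to about 90.45 KB.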