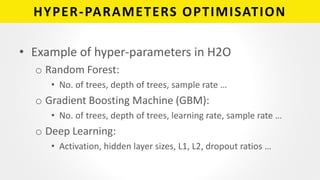

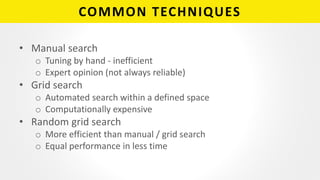

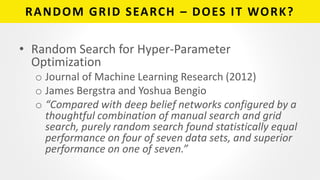

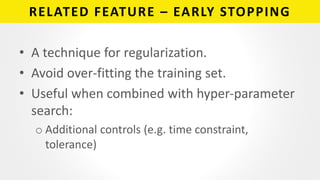

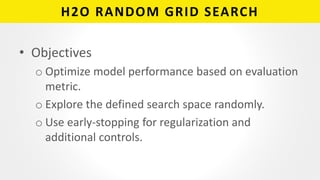

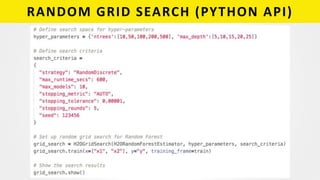

This presentation covers H2O Random Grid Search, an efficient technique for tuning machine learning model hyperparameters. Instead of trying every combination, it samples combinations at random from a user-defined hyperparameter space and automatically searches for the best model. The speaker explains that hyperparameter tuning matters for model performance, yet it is commonly done by hand or with an exhaustive grid search, both of which are inefficient. H2O Random Grid Search explores the space randomly and uses early stopping to regularize the models and make the search more effective. The slides also include examples of the technique in H2O's Python API.
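
As a rough illustration of the approach described above, here is a minimal sketch of a random grid search in H2O's Python API. It is not taken from the slides: the choice of a GBM classifier, the candidate values, the stopping settings, and the helper name `run_random_grid` are all assumptions made for the example. The H2O imports sit inside the function so the search-space definitions can be inspected without a running H2O cluster.

```python
import math

# Hyperparameter space: each key is a GBM parameter, each list holds the
# candidate values. All values here are illustrative assumptions.
hyper_params = {
    "max_depth": [3, 5, 7, 9],
    "learn_rate": [0.01, 0.05, 0.1],
    "sample_rate": [0.6, 0.8, 1.0],
    "col_sample_rate": [0.6, 0.8, 1.0],
}

# Number of combinations an exhaustive (Cartesian) grid search would train.
grid_size = math.prod(len(values) for values in hyper_params.values())

# "RandomDiscrete" samples combinations at random instead of enumerating them.
# The search ends at the model or time budget, or earlier if the best models
# stop improving (stopping_* keys). The budgets are illustrative assumptions.
search_criteria = {
    "strategy": "RandomDiscrete",
    "max_models": 20,
    "max_runtime_secs": 600,
    "seed": 1234,
    "stopping_rounds": 5,
    "stopping_metric": "AUC",
    "stopping_tolerance": 1e-3,
}


def run_random_grid(train_path, response):
    """Hypothetical helper: run the random search on a CSV file and return
    the model with the best validation AUC (binary classification assumed)."""
    # Imported here so the definitions above work without H2O installed.
    import h2o
    from h2o.estimators.gbm import H2OGradientBoostingEstimator
    from h2o.grid.grid_search import H2OGridSearch

    h2o.init()
    data = h2o.import_file(train_path)
    data[response] = data[response].asfactor()  # treat the target as categorical
    train, valid = data.split_frame(ratios=[0.8], seed=1234)
    predictors = [col for col in data.columns if col != response]

    # Per-model early stopping: stop adding trees once validation AUC plateaus.
    base_model = H2OGradientBoostingEstimator(
        ntrees=500,
        stopping_rounds=5,
        stopping_metric="AUC",
        stopping_tolerance=1e-3,
        score_tree_interval=10,
        seed=1234,
    )

    grid = H2OGridSearch(
        model=base_model,
        hyper_params=hyper_params,
        search_criteria=search_criteria,
    )
    grid.train(x=predictors, y=response,
               training_frame=train, validation_frame=valid)

    # Rank the trained models by AUC, best first.
    ranked = grid.get_grid(sort_by="auc", decreasing=True)
    return ranked.models[0]
```

With these assumed values the full grid has 108 combinations, while the random search trains at most 20 of them. Calling `run_random_grid("train.csv", "label")` (both arguments are placeholders) would start a local H2O cluster and run the search.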