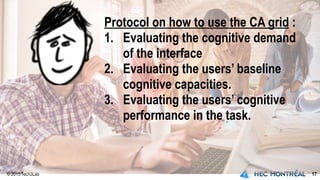

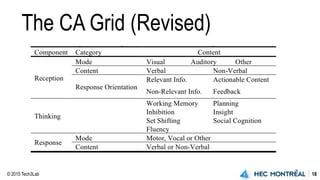

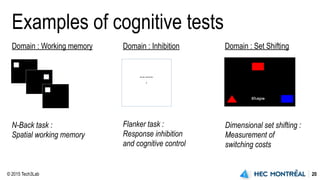

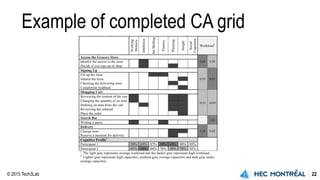

The document discusses the cognitive analysis grid (CA grid), a tool for evaluating information systems design by assessing cognitive demands and user performance. It describes a pilot study protocol involving cognitive evaluations that focus on the executive functions targeted by clinical trials and research. The aim is to develop psychometrically robust tools for measuring executive function, in order to standardize assessments and improve understanding of executive functioning across neurobehavioral conditions.