![Final slide: Thank you for your attention! / Acknowledgments](https://image.slidesharecdn.com/iwann2023coarsefineclassification-230620135311-3c0aace3/85/Unsupervised-Topic-Modeling-with-BERTopic-for-Coarse-and-Fine-Grained-News-Classification-12-320.jpg)

Thank you for your attention!

Acknowledgments

GENTIO project funded by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility and Technology (BMK) via the ICT of the Future Program (GA No. 873992).

DWBI project funded by the Vienna Science and Technology Fund (WWTF) [10.47379/ICT20096].

Images: Copyright © IPTC, HuggingFace, Mohamad Al Sayed

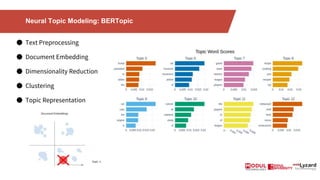

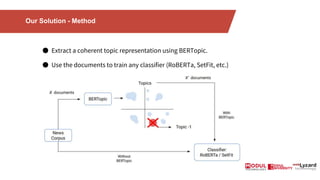

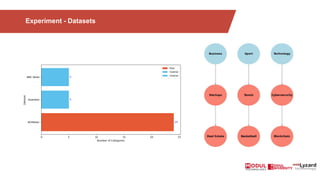

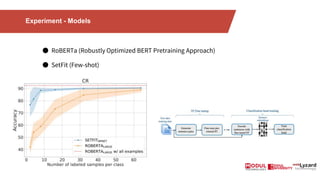

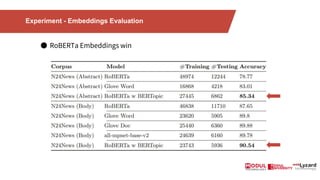

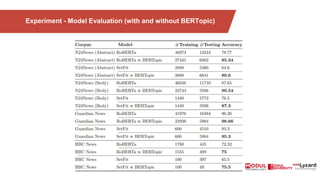

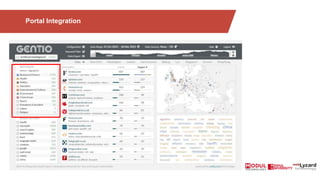

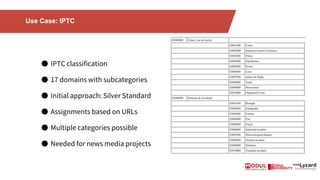

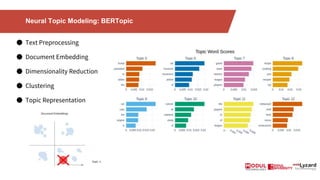

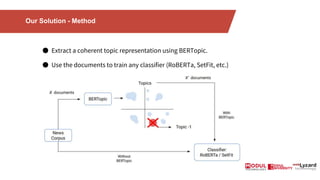

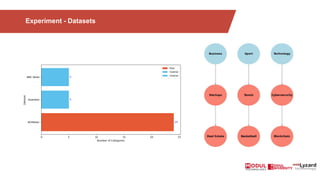

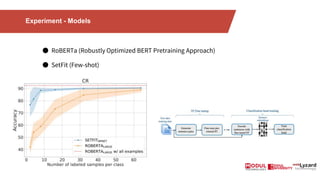

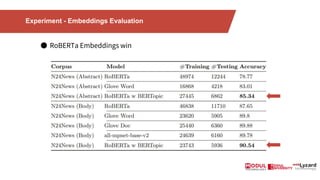

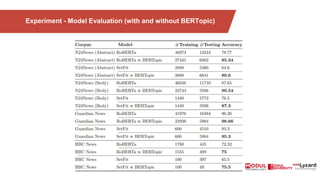

The presentation describes how BERTopic can be used for unsupervised topic modeling to classify news into 17 coarse-grained domains and their fine-grained subcategories. It highlights challenges of current text classification methods, such as data quality and model overfitting. The proposed method first uses BERTopic to derive topic representations and then trains classifiers such as RoBERTa and SetFit; in the experiments, RoBERTa embeddings outperform the other embeddings tested. The conclusion emphasizes the benefits for news media projects: improved content understanding, customization, and analytics.
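
BERTopic represents each topic with a class-based TF-IDF (c-TF-IDF): all documents assigned to a topic are merged into one class document, and a term's weight is its L1-normalised in-class frequency multiplied by log(1 + A / f_t), where A is the average number of words per class and f_t is the term's frequency summed over all classes. The snippet below is a minimal pure-Python sketch of that weighting, assuming the topic assignments already exist (in BERTopic they come from clustering document embeddings). The function name `c_tf_idf`, the whitespace tokenisation and the toy headlines are hypothetical illustrations, and details such as stop-word handling and rounding of A differ from the library.

```python
import math
from collections import Counter


def c_tf_idf(docs, topics, top_n=3):
    """Return the top_n highest-weighted terms per topic using c-TF-IDF.

    docs   -- list of document strings
    topics -- one topic id per document; assumed to come from a prior
              clustering step (not implemented in this sketch)
    """
    # 1. Merge all documents of a topic into one "class document" and count terms.
    class_counts = {}
    for doc, topic in zip(docs, topics):
        class_counts.setdefault(topic, Counter()).update(doc.lower().split())

    # 2. f_t: frequency of each term summed over all classes.
    term_freq = Counter()
    for counts in class_counts.values():
        term_freq.update(counts)

    # 3. A: average number of words per class.
    avg_words = sum(sum(c.values()) for c in class_counts.values()) / len(class_counts)

    representation = {}
    for topic, counts in class_counts.items():
        total = sum(counts.values())
        # W_{t,c} = tf_{t,c} * log(1 + A / f_t), with tf L1-normalised per class.
        weights = {
            term: (count / total) * math.log(1 + avg_words / term_freq[term])
            for term, count in counts.items()
        }
        # Sort by descending weight; break ties alphabetically for stable output.
        ranked = sorted(weights.items(), key=lambda kv: (-kv[1], kv[0]))
        representation[topic] = [term for term, _ in ranked[:top_n]]
    return representation


if __name__ == "__main__":
    headlines = [
        "the election vote",
        "the parliament vote",
        "the football goal",
        "the league goal",
    ]
    print(c_tf_idf(headlines, [0, 0, 1, 1]))
```

On the toy headlines, "the" occurs twice in each topic, yet it is ranked below single-occurrence words such as "election" because it is spread across every class; this down-weighting of terms shared between topics is what makes the resulting keywords usable as topic labels.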
