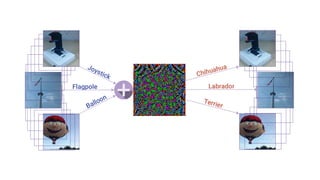

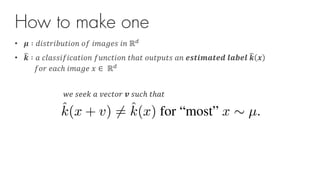

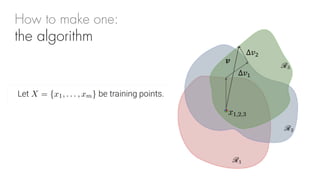

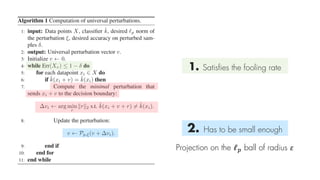

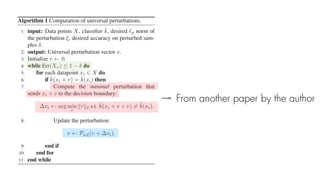

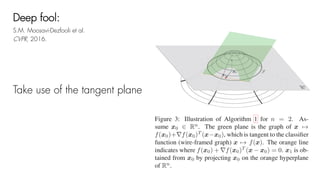

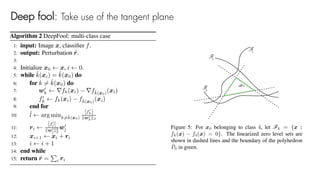

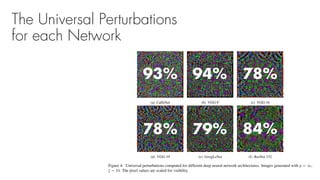

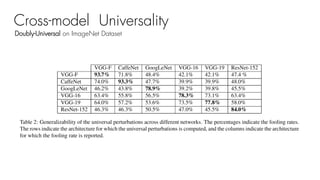

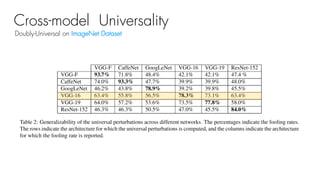

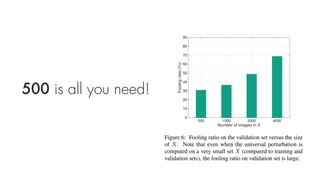

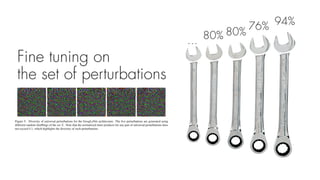

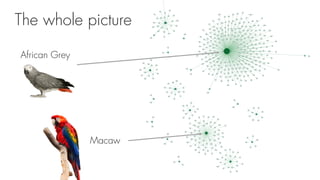

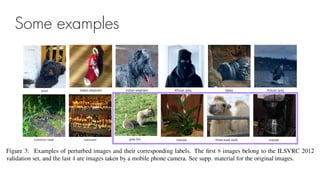

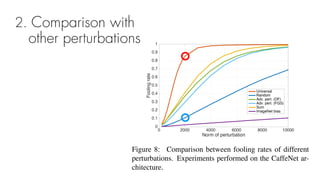

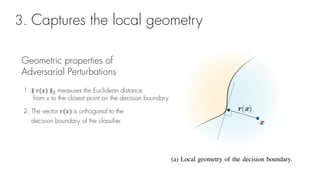

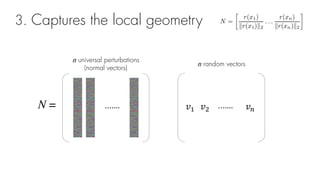

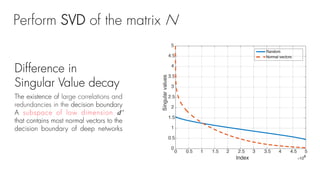

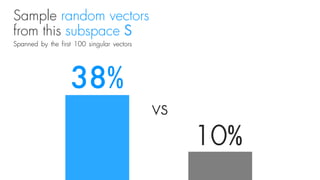

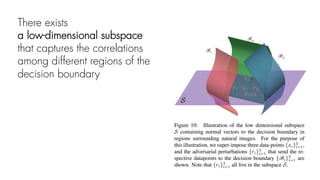

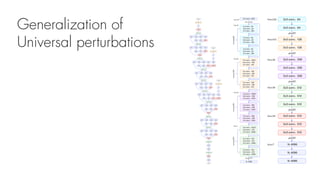

This document summarizes a seminar on universal adversarial perturbations. It begins with a brief introduction to adversarial attack methods such as DeepFool. It then introduces universal adversarial perturbations: single perturbations that cause a neural network to misclassify most images. The document explains how a universal perturbation is crafted to reach a target fooling rate while keeping its norm small. It shows that one perturbation can achieve high fooling rates across different network architectures, and it discusses how universal perturbations exploit the local geometry of, and the correlations among, the decision boundaries of neural networks.
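The two requirements named in the summary (a high fooling rate and a small perturbation) can be made concrete with a minimal sketch. Everything here is an illustrative assumption rather than the seminar's code: `fooling_rate`, `project_linf`, the bound `xi`, and the toy classifier `toy_predict` are hypothetical names, and the max-norm is only one possible way to measure "small".

```python
import numpy as np

def fooling_rate(predict, images, v):
    """Fraction of images whose predicted label changes when v is added."""
    clean = predict(images)
    perturbed = predict(images + v)
    return float(np.mean(clean != perturbed))

def project_linf(v, xi):
    """Clip v elementwise so that its max-norm is at most xi (assumed size constraint)."""
    return np.clip(v, -xi, xi)

def toy_predict(x):
    """Hypothetical stand-in classifier: label 1 when the feature sum is positive."""
    return (x.sum(axis=1) > 0).astype(int)

images = np.array([[0.2, 0.1], [-0.3, 0.1], [0.5, 0.4], [-0.1, -0.2]])

# One perturbation shared by every image, kept small by the projection.
v = project_linf(np.array([-1.0, -1.0]), xi=0.3)
rate = fooling_rate(toy_predict, images, v)
print(f"max-norm of v: {np.max(np.abs(v))}, fooling rate: {rate}")
```

The same `v` is added to every image, which is what distinguishes a universal perturbation from a per-image attack; a real evaluation would replace `toy_predict` with a trained network.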

![Fast Gradient Sign Method [FGSM]. Explaining and Harnessing Adversarial Examples, I.J. Goodfellow et al., ICLR, 2015.](https://image.slidesharecdn.com/presentationfinaleupload-180305022101/85/Universal-Adversarial-Perturbation-9-320.jpg)

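![Fast Gradient Sign Method [FGSM]: the adversarial input is the original input plus a perturbation computed from the gradient of the cost function](https://image.slidesharecdn.com/presentationfinaleupload-180305022101/85/Universal-Adversarial-Perturbation-10-320.jpg)

$$\tilde{x} = x + \eta, \qquad \eta = \epsilon \cdot \operatorname{sign}\left(\nabla_x J(\theta, x, y)\right)$$

Here $\eta$ is the perturbation and $\nabla_x J(\theta, x, y)$ is the gradient of the cost function with respect to the input $x$.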
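As a rough illustration of the FGSM update on this slide, the sketch below applies one step to a tiny logistic-regression model, chosen only because its input gradient has a closed form. The weights `w`, bias `b`, input `x`, label `y`, and `eps=0.25` are made-up values for the example, not figures from the seminar or the paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_gradient_wrt_input(w, b, x, y):
    """Gradient of binary cross-entropy with respect to x, for p = sigmoid(w.x + b)."""
    p = sigmoid(w @ x + b)
    return (p - y) * w

def fgsm(w, b, x, y, eps):
    """One FGSM step: x + eps * sign(gradient of the cost with respect to x)."""
    eta = eps * np.sign(loss_gradient_wrt_input(w, b, x, y))
    return x + eta

# Hypothetical model and input, for illustration only.
w = np.array([2.0, -1.0, 0.5])
b = 0.0
x = np.array([0.3, 0.1, 0.2])
y = 1.0

x_adv = fgsm(w, b, x, y, eps=0.25)
p_clean = sigmoid(w @ x + b)      # probability of class 1 on the clean input
p_adv = sigmoid(w @ x_adv + b)    # probability of class 1 after the attack
print(p_clean > 0.5, p_adv > 0.5)
```

Because only the sign of the gradient is used, every component of the input moves by exactly `eps`, so `eps` directly bounds the max-norm of the perturbation.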

![Fast Gradient Sign Method [FGSM]: raw images vs. attacked images with a maxout network. Misclassification rate on MNIST: 89.4%](https://image.slidesharecdn.com/presentationfinaleupload-180305022101/85/Universal-Adversarial-Perturbation-11-320.jpg)

![Fast Gradient Sign Method [FGSM]: raw images vs. attacked images with a convolutional maxout network. Misclassification rate on CIFAR-10: 87.2%](https://image.slidesharecdn.com/presentationfinaleupload-180305022101/85/Universal-Adversarial-Perturbation-12-320.jpg)

![Fast Gradient Sign Method [FGSM]. ε: intensity of the attack](https://image.slidesharecdn.com/presentationfinaleupload-180305022101/85/Universal-Adversarial-Perturbation-13-320.jpg)