This document discusses research instruments and their development. It covers:

1. Drafting measurement questions such as open-ended, closed-ended, dichotomous, and rating scale questions for surveys and checklists.

2. Assembling, pre-testing, and revising instruments to confirm that questions are appropriate and to identify problems before full data collection.

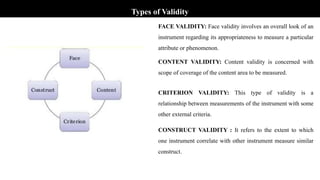

3. Testing instruments for reliability (whether they give consistent results over time) and for validity (whether they accurately measure the intended constructs).
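One common way to check consistency over time is a test-retest comparison: the same respondents complete the instrument twice, and the two sets of scores are correlated. The sketch below is illustrative only and is not taken from the document. The function name `pearson_r` and the score lists are hypothetical, and the Pearson correlation is just one of several coefficients that could be used for this purpose.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two score lists of equal length (at least 2)")
    n = len(first)
    mean_first = sum(first) / n
    mean_second = sum(second) / n
    # Sum of cross-products of deviations from each mean
    cross = sum((a - mean_first) * (b - mean_second)
                for a, b in zip(first, second))
    # Sums of squared deviations for each administration
    ss_first = sum((a - mean_first) ** 2 for a in first)
    ss_second = sum((b - mean_second) ** 2 for b in second)
    if ss_first == 0 or ss_second == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cross / sqrt(ss_first * ss_second)


# Hypothetical total scores for five respondents, measured twice
# (made-up numbers for illustration, not real data).
first_administration = [12, 15, 9, 20, 17]
second_administration = [13, 14, 10, 19, 18]

r = pearson_r(first_administration, second_administration)
print(f"test-retest correlation: {r:.2f}")
```

A coefficient close to 1 suggests that respondents kept roughly the same relative standing across the two administrations. What counts as acceptable depends on the instrument and the field, so any cut-off should be justified rather than assumed.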

Characteristics of good research instruments and the process of designing questionnaires are also outlined.