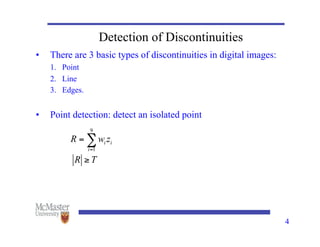

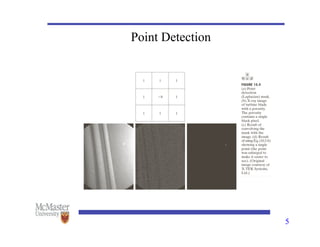

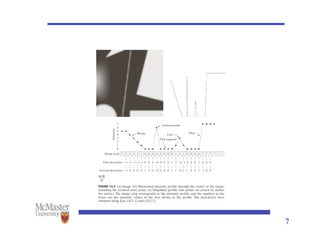

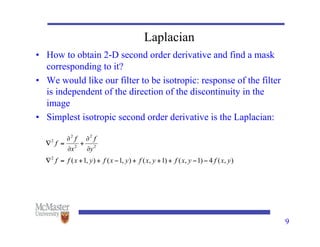

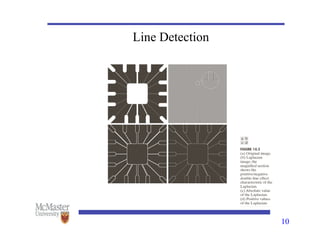

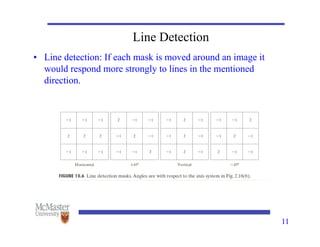

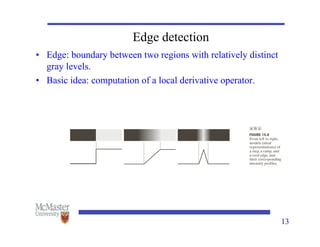

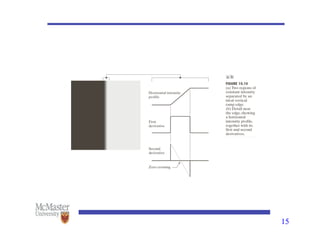

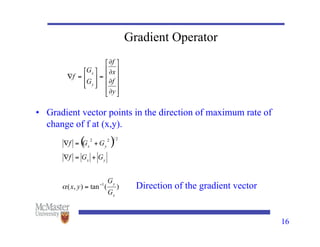

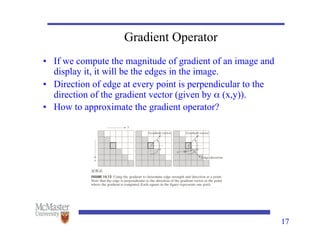

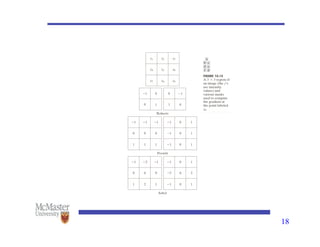

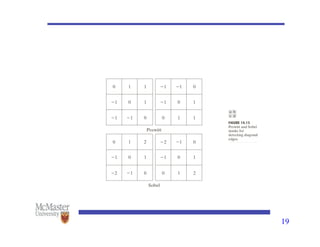

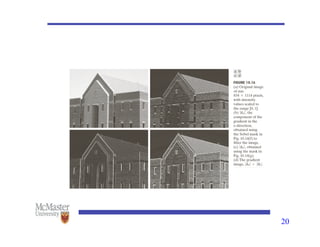

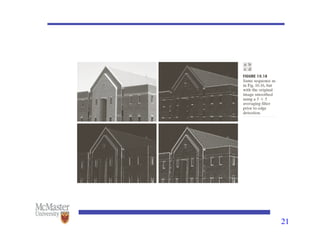

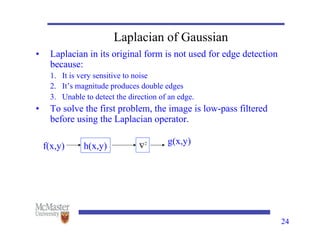

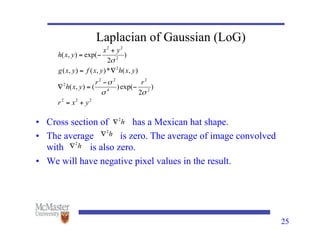

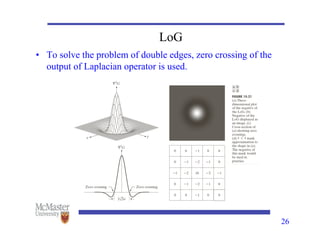

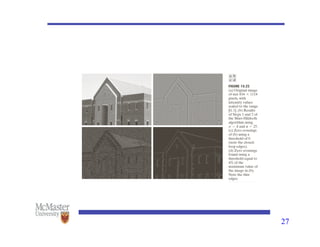

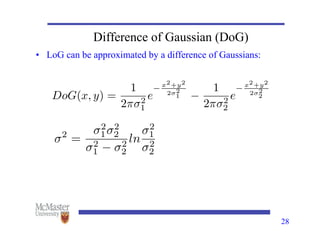

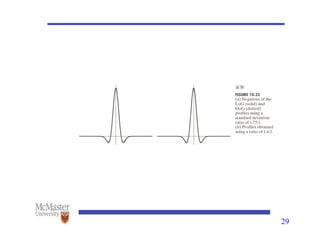

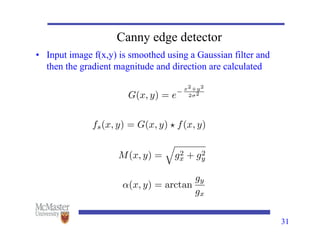

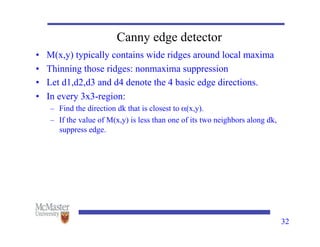

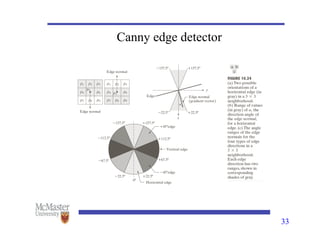

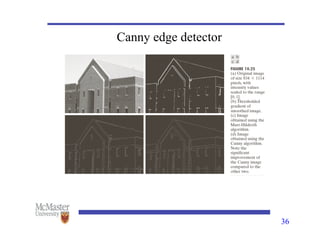

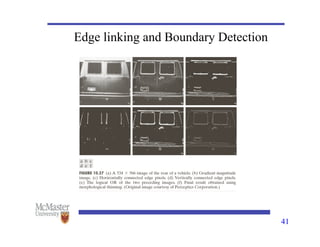

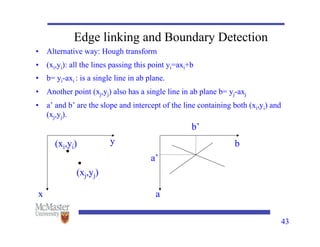

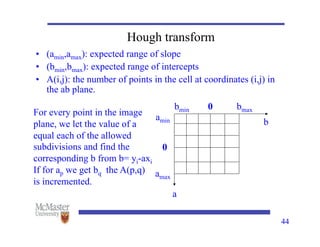

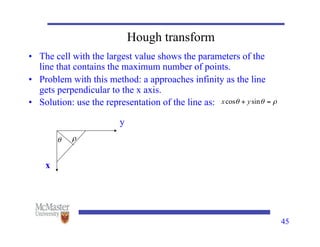

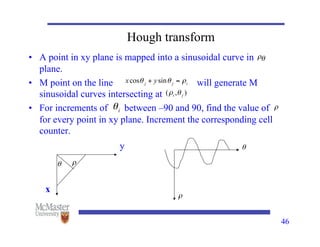

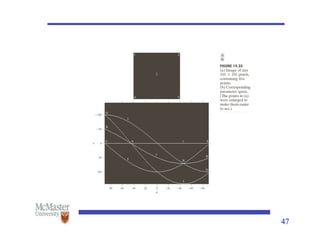

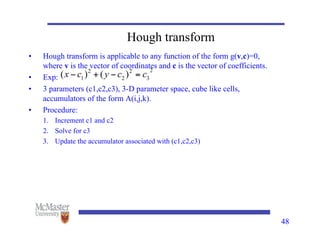

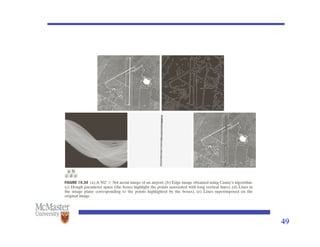

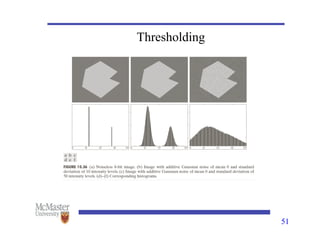

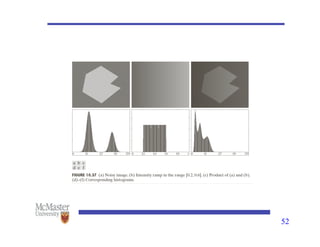

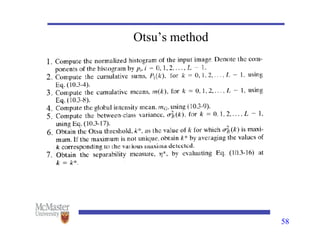

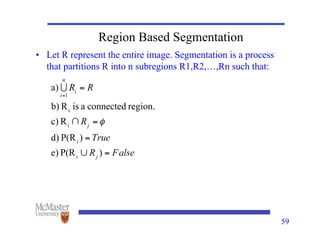

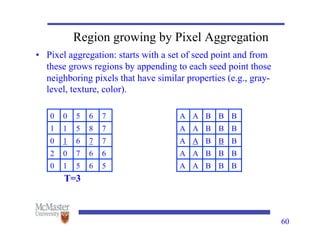

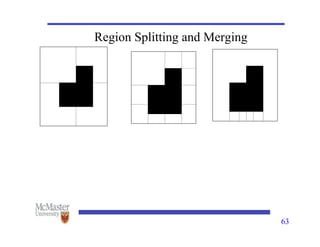

This document discusses image segmentation: techniques for subdividing an image into its constituent parts, with a primary focus on autonomous segmentation. It introduces the two properties on which segmentation algorithms are based, discontinuity and similarity. For discontinuity-based methods it covers edge detection, including the Canny edge detector, along with edge linking and boundary detection, where the Hough transform is used. For similarity-based methods it covers thresholding, region growing, and region splitting and merging, all of which segment regions according to pixel properties. The challenges that arise in each of these steps are discussed alongside the methods.
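To make the distinction between discontinuity and similarity concrete, here is a minimal sketch in plain Python. It is not taken from the slides: the function names (`horizontal_jumps`, `global_threshold`, `region_grow`), the tiny test image, and the parameter values are all illustrative assumptions. The discontinuity example is a bare intensity-difference test, far simpler than the Canny detector, and the region growing compares each candidate pixel to the seed value only, which is one of several possible similarity criteria.

```python
from collections import deque


def horizontal_jumps(image, min_jump):
    """Discontinuity: mark (row, col) where the right neighbour differs by >= min_jump."""
    edges = set()
    for r, row in enumerate(image):
        for c in range(len(row) - 1):
            if abs(row[c + 1] - row[c]) >= min_jump:
                edges.add((r, c))
    return edges


def global_threshold(image, t):
    """Similarity: label pixels with intensity >= t as 1 (object), others as 0."""
    return [[1 if p >= t else 0 for p in row] for row in image]


def region_grow(image, seed, tol):
    """Similarity: grow a 4-connected region of pixels within tol of the seed intensity."""
    rows, cols = len(image), len(image[0])
    seed_val = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_val) <= tol:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


# Hypothetical 3x4 grayscale image: a dark area on the left, a bright area on the right.
image = [
    [10, 12, 11, 200],
    [11, 10, 13, 210],
    [12, 11, 205, 208],
]

edges = horizontal_jumps(image, min_jump=50)   # positions just left of a large jump
mask = global_threshold(image, t=128)          # binary object/background labels
dark = region_grow(image, seed=(0, 0), tol=5)  # connected dark region around the seed
```

On this image the three approaches agree on where the dark area ends, but they would diverge on noisy or unevenly lit images, which is where the more robust methods named above come in.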