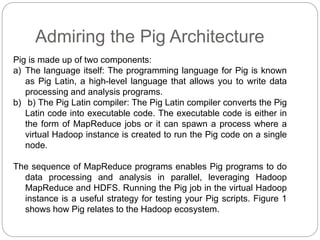

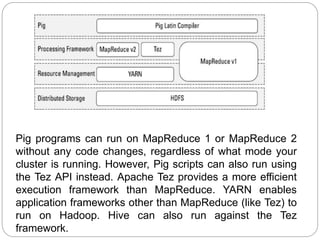

Pig is a platform that makes Hadoop programming accessible to users who do not write Java. Its high-level dataflow language, Pig Latin, is compiled into MapReduce jobs, so users can express data analysis logic as a sequence of transformations without writing low-level MapReduce code. Pig was developed at Yahoo! so that users could focus on analyzing large datasets rather than on writing Java. It also optimizes scripts automatically and can be extended with user-defined functions.
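
To make the idea of compiling a dataflow into MapReduce more concrete, here is a minimal Python sketch. It is not Pig itself: it only simulates, in memory, the map, shuffle, and reduce phases that a simple group-and-count dataflow could translate to. The function names (`map_phase`, `shuffle`, `reduce_phase`, `count_visits`), the sample records, and the Pig Latin shown in the comments are illustrative assumptions, not taken from the original.

```python
from collections import defaultdict

# Hypothetical input: (user, url) page-visit records.
RECORDS = [
    ("alice", "/home"),
    ("bob", "/home"),
    ("alice", "/cart"),
    ("carol", "/home"),
]

# Roughly the dataflow a Pig Latin script might express (illustrative only):
#   visits  = LOAD 'visits' AS (user, url);
#   grouped = GROUP visits BY url;
#   counts  = FOREACH grouped GENERATE group, COUNT(visits);


def map_phase(records):
    """Emit a (url, 1) pair for each record."""
    for _user, url in records:
        yield url, 1


def shuffle(pairs):
    """Collect mapped values by key, as happens between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Sum the collected values for each key."""
    return {key: sum(values) for key, values in groups.items()}


def count_visits(records):
    """Run the three simulated phases end to end."""
    return reduce_phase(shuffle(map_phase(records)))


if __name__ == "__main__":
    print(count_visits(RECORDS))
```

The three commented Pig Latin statements stand in for all of the Python above, which is the kind of low-level plumbing Pig is meant to hide from the user.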