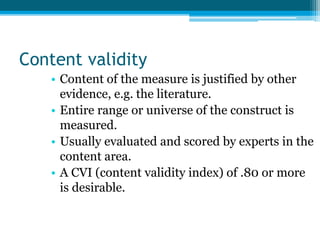

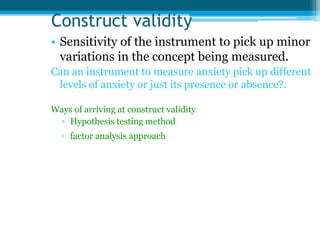

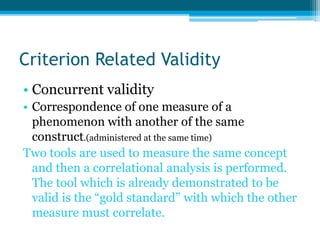

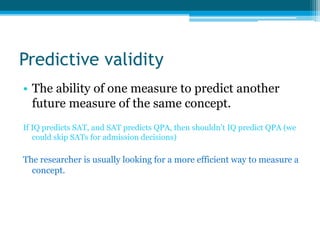

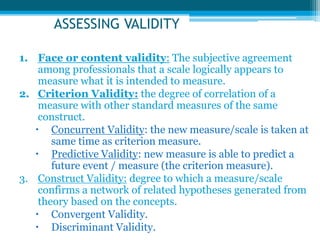

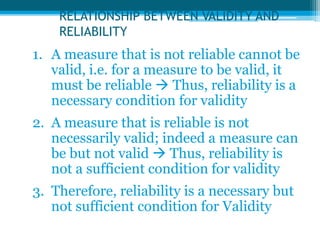

This document discusses validity and reliability in measurement. Validity is the accuracy of a measure: the extent to which it measures the concept it is intended to measure. Reliability is the degree to which a measure produces consistent results. Several types of validity are discussed: face validity, content validity, criterion validity (concurrent and predictive), and construct validity. Reliability can be assessed through test-retest, parallel-forms, and internal-consistency methods. A measure must be reliable to be valid, but reliability alone does not ensure validity.
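The reliability methods listed above can be illustrated with a minimal sketch. It assumes numeric scores are held in plain Python lists, and the function names `pearson_r` and `cronbach_alpha` are hypothetical helpers, not something defined in the source. Test-retest and parallel-forms reliability are commonly estimated as the Pearson correlation between two sets of scores from the same respondents. Internal consistency is commonly estimated with Cronbach's alpha, α = k/(k − 1) · (1 − Σσᵢ² / σ_X²), where k is the number of items, σᵢ² is the variance of item i, and σ_X² is the variance of respondents' total scores.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores.

    Used here (as an assumption) for test-retest reliability (same test,
    two occasions) or parallel-forms reliability (two forms of a test).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha for internal consistency.

    `items` is a list of k lists; each inner list holds one item's scores,
    one score per respondent, in the same respondent order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least 2 items")
    n = len(items[0])
    # Total score for each respondent across all items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    # Sum of the individual item variances (sample variance).
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


if __name__ == "__main__":
    # Hypothetical scores for five respondents tested on two occasions.
    time1 = [10, 12, 15, 18, 20]
    time2 = [11, 13, 14, 19, 21]
    print(f"test-retest r = {pearson_r(time1, time2):.3f}")

    # Hypothetical three-item scale answered by four respondents.
    items = [[2, 3, 4, 5], [1, 3, 4, 5], [2, 2, 5, 4]]
    print(f"Cronbach's alpha = {cronbach_alpha(items):.3f}")
```

A high correlation or alpha indicates consistency only. Consistent with the point above, it says nothing on its own about whether the scale measures the intended concept.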