ChatGPT

تسجيل الدخول

أنت قلت:

Give me a title and description for this presentation: Recursive Neural Network and title is 40 characters and description is 3000 characters

قال ChatGPT:

Sure! Here's a title that fits the 40-character limit and a detailed description up to 3000 characters:

Title:

Understanding Recursive Neural Networks

Description:

Recursive Neural Networks (RecNNs) are a class of deep learning models that apply the same set of weights recursively over structured input, such as trees. Unlike traditional feedforward or recurrent neural networks that handle flat or sequential data, RecNNs are designed to work on hierarchical structures, making them especially powerful in areas like natural language processing, sentiment analysis, and syntactic parsing.

This presentation delves into the foundational concepts, architecture, and applications of Recursive Neural Networks. We begin by exploring the motivation behind using recursive models, particularly in scenarios where input data has an inherent recursive or tree-like structure—such as parsing a sentence into its grammatical components or analyzing nested expressions in programming languages.

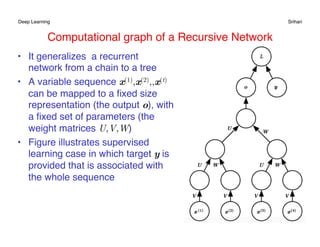

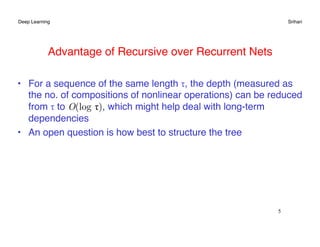

We will examine the architecture of a basic RecNN, where the same function is applied at each node of a tree, and how it differs from Recurrent Neural Networks (RNNs). Special attention will be given to the compositional nature of RecNNs, where child nodes are combined to produce parent node representations, allowing the model to learn complex structural dependencies.

The presentation also covers advanced variants like the Tree-LSTM, which enhances the standard RecNN by incorporating long short-term memory units to better capture long-range dependencies and reduce vanishing gradient issues. We'll explore how these models are trained using gradient-based optimization techniques and how backpropagation through structure (BPTS) is applied in this context.

Real-world applications of RecNNs are showcased, particularly in sentiment classification where the model interprets sentiment based on the syntactic structure of sentences rather than just the presence of keywords. We’ll look into benchmark datasets such as the Stanford Sentiment Treebank and how RecNNs outperform traditional models in capturing nuanced meanings.

Challenges and limitations of RecNNs will also be discussed, including issues with scalability, training complexity, and the need for structured input like parse trees. We will touch upon recent research efforts aimed at overcoming these hurdles, including hybrid models and unsupervised approaches to structure learning.

By the end of the presentation, attendees will have a comprehensive understanding of Recursive Neural Networks, their mathematical underpinnings, practical uses, and potential for future research. This talk is geared toward students, researchers, and professionals with a basic understanding of neural networks who are interested in exploring more structured.