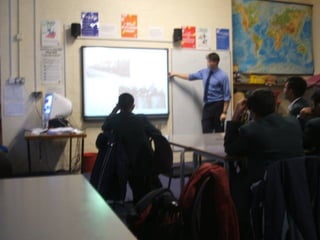

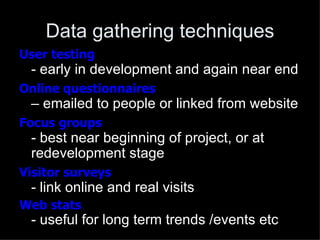

The document discusses user testing of online educational resources in classroom settings. It argues that classroom testing reveals insights that conventional user testing alone cannot. Testing with real students and teachers surfaces issues that may not be apparent in isolated testing, such as usability problems that arise when content is viewed by an entire class rather than by one person. Although classroom testing limits what can be observed, it reflects the real context of use better than controlled one-on-one testing, and it is important for ensuring that online resources meet classroom needs.

![The ‘long tail’: An example of a power law graph showing popularity ranking. To the right is the long tail; to the left are the few that dominate. Notice that the areas of both regions match. [Wikipedia: Long Tail]](https://image.slidesharecdn.com/understandingonlineaudiencescreatingcapacity19june2012-120714122246-phpapp02/85/Understanding-online-audiences-creating-capacity-19-june-2012-97-320.jpg)

![The ‘long tail’: The tail becomes bigger and longer in new markets (depicted in red). In other words, whereas traditional retailers have focused on the area to the left of the chart, online bookstores derive more sales from the area to the right. [Wikipedia: Long Tail]](https://image.slidesharecdn.com/understandingonlineaudiencescreatingcapacity19june2012-120714122246-phpapp02/85/Understanding-online-audiences-creating-capacity-19-june-2012-98-320.jpg)