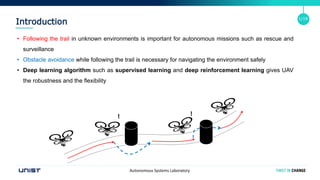

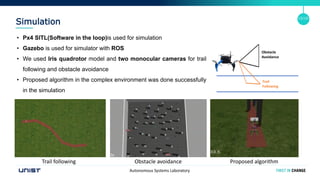

The document describes a learning-based algorithm for drone trail following with obstacle avoidance. The algorithm combines two deep learning approaches: a convolutional neural network (CNN), which handles trail following by classifying the drone's direction, and deep reinforcement learning with a dual deep Q network, which handles obstacle avoidance. To integrate the two, the algorithm uses monocular camera images and estimated depth images to determine whether an obstacle is present, then executes either the obstacle avoidance module or the trail following module accordingly. Simulation and real-world experiments demonstrated that the algorithm can follow trails safely while avoiding obstacles.
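
The switching between the two modules can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration rather than a detail from the document: the obstacle test (a fraction of near pixels in the central region of the depth image), the `threshold_m` and `min_fraction` values, the function names, and the string actions returned by the stand-in policies. In the described algorithm, the trail policy would be the CNN classifier and the avoidance policy would be the learned Q network.

```python
from typing import Callable, Sequence

# Hypothetical representation: rows of per-pixel depth estimates in metres.
DepthImage = Sequence[Sequence[float]]


def obstacle_present(depth: DepthImage,
                     threshold_m: float = 2.0,
                     min_fraction: float = 0.1) -> bool:
    """Assumed obstacle test: True if at least `min_fraction` of the pixels
    in the central region of the depth image are closer than `threshold_m`."""
    rows, cols = len(depth), len(depth[0])
    # Central window: drop a quarter of the image on each side.
    r0, r1 = rows // 4, rows - rows // 4
    c0, c1 = cols // 4, cols - cols // 4
    window = [depth[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    near = sum(1 for d in window if d < threshold_m)
    return near / len(window) >= min_fraction


def select_action(image: object,
                  depth: DepthImage,
                  trail_policy: Callable[[object], str],
                  avoid_policy: Callable[[DepthImage], str]) -> str:
    """Run the avoidance module if an obstacle is detected,
    otherwise run the trail following module."""
    if obstacle_present(depth):
        return avoid_policy(depth)
    return trail_policy(image)


# Stand-in policies (hypothetical); real ones would be trained networks.
def dummy_trail_policy(image: object) -> str:
    return "forward"


def dummy_avoid_policy(depth: DepthImage) -> str:
    return "turn_left"


clear_depth = [[10.0] * 4 for _ in range(4)]
blocked_depth = [row[:] for row in clear_depth]
blocked_depth[1][1] = blocked_depth[1][2] = 0.5  # near pixels in the centre

print(select_action(None, clear_depth, dummy_trail_policy, dummy_avoid_policy))
print(select_action(None, blocked_depth, dummy_trail_policy, dummy_avoid_policy))
```

Passing the two policies in as callables keeps the switching logic independent of how each module is trained, so either network could be swapped out without touching the obstacle check.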