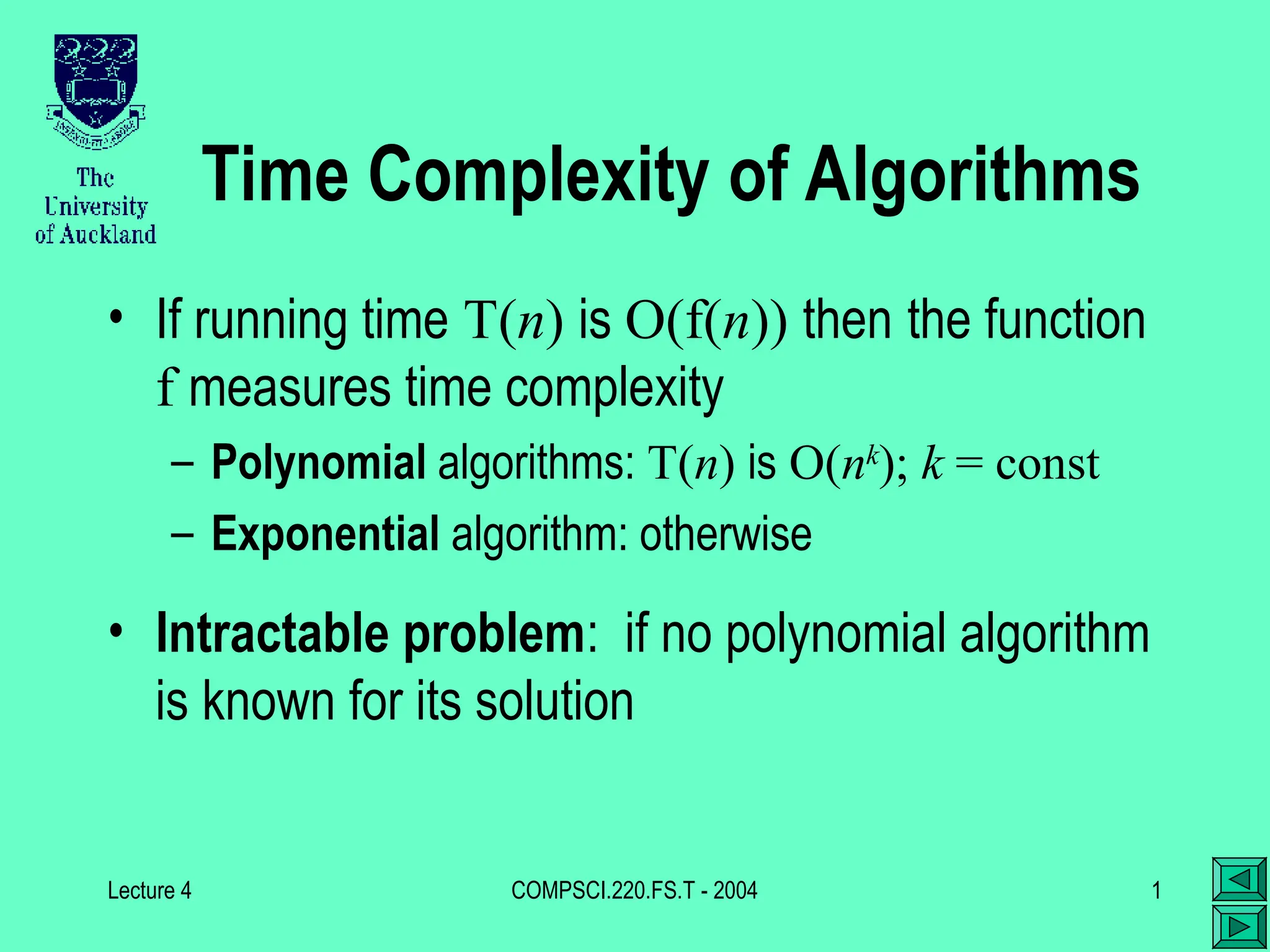

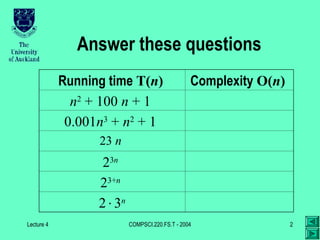

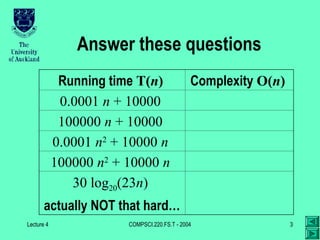

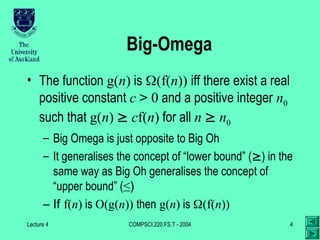

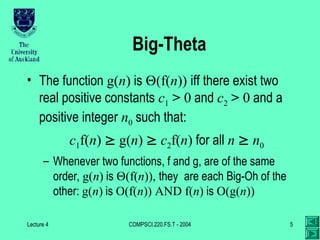

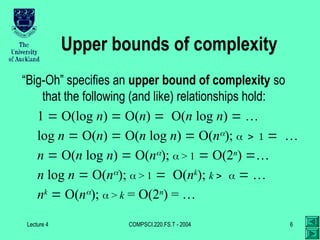

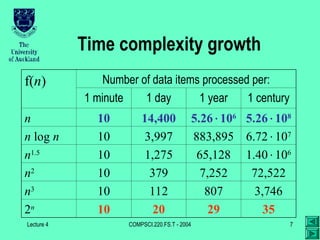

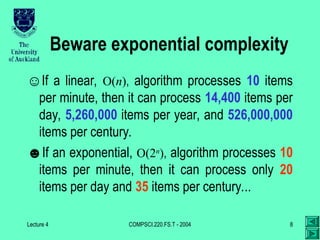

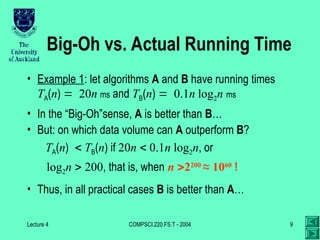

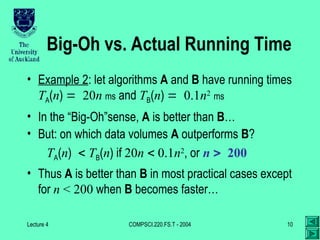

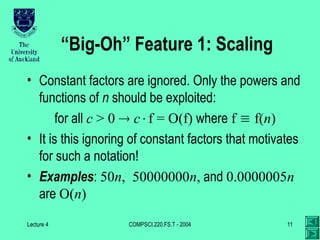

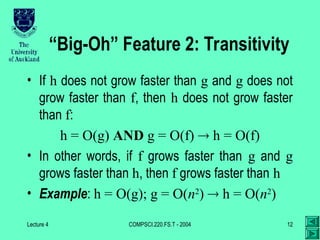

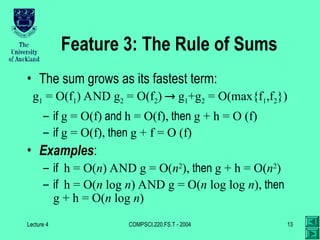

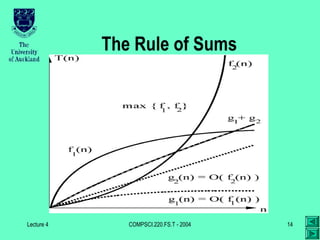

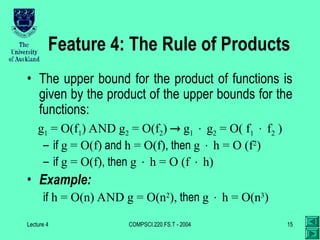

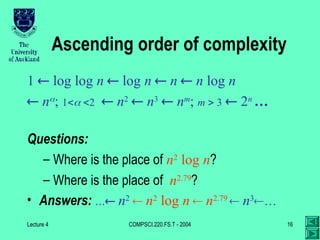

The document discusses the time complexity of algorithms, distinguishing polynomial from exponential growth, and introduces the asymptotic notations Big-O, Big-Omega, and Big-Theta. It covers upper bounds on complexity and the standard properties of Big-O: scaling by constant factors, transitivity, and the rules for sums and products. A key takeaway is that asymptotic growth rate and practical performance can diverge: for realistic input sizes, an algorithm in a higher complexity class may outperform one in a lower class.
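To make the last point concrete, here is a minimal sketch comparing two made-up cost functions. The step counts `100 * n` and `n * n` are assumptions chosen only for illustration and do not come from the document; they stand in for a linear algorithm with a large constant factor and a quadratic algorithm with a small one.

```python
from typing import Optional


def cost_linear(n: int) -> int:
    """Hypothetical step count for an O(n) algorithm with a large constant factor."""
    return 100 * n


def cost_quadratic(n: int) -> int:
    """Hypothetical step count for an O(n^2) algorithm with a constant factor of 1."""
    return n * n


def crossover(limit: int = 10_000) -> Optional[int]:
    """Return the smallest n >= 1 at which the linear algorithm is strictly cheaper.

    Returns None if no such n exists up to `limit`.
    """
    for n in range(1, limit + 1):
        if cost_linear(n) < cost_quadratic(n):
            return n
    return None


if __name__ == "__main__":
    # Below the crossover the quadratic algorithm needs fewer steps,
    # even though it belongs to the higher complexity class.
    for n in (10, 50, 100, 200, 1000):
        print(n, cost_linear(n), cost_quadratic(n))
    print("linear becomes cheaper from n =", crossover())
```

Under these assumed costs the quadratic algorithm is cheaper for every n below 100, the two tie at n = 100, and the linear algorithm wins only from n = 101 onward. Asymptotic notation describes the behaviour past such a crossover point, not before it.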