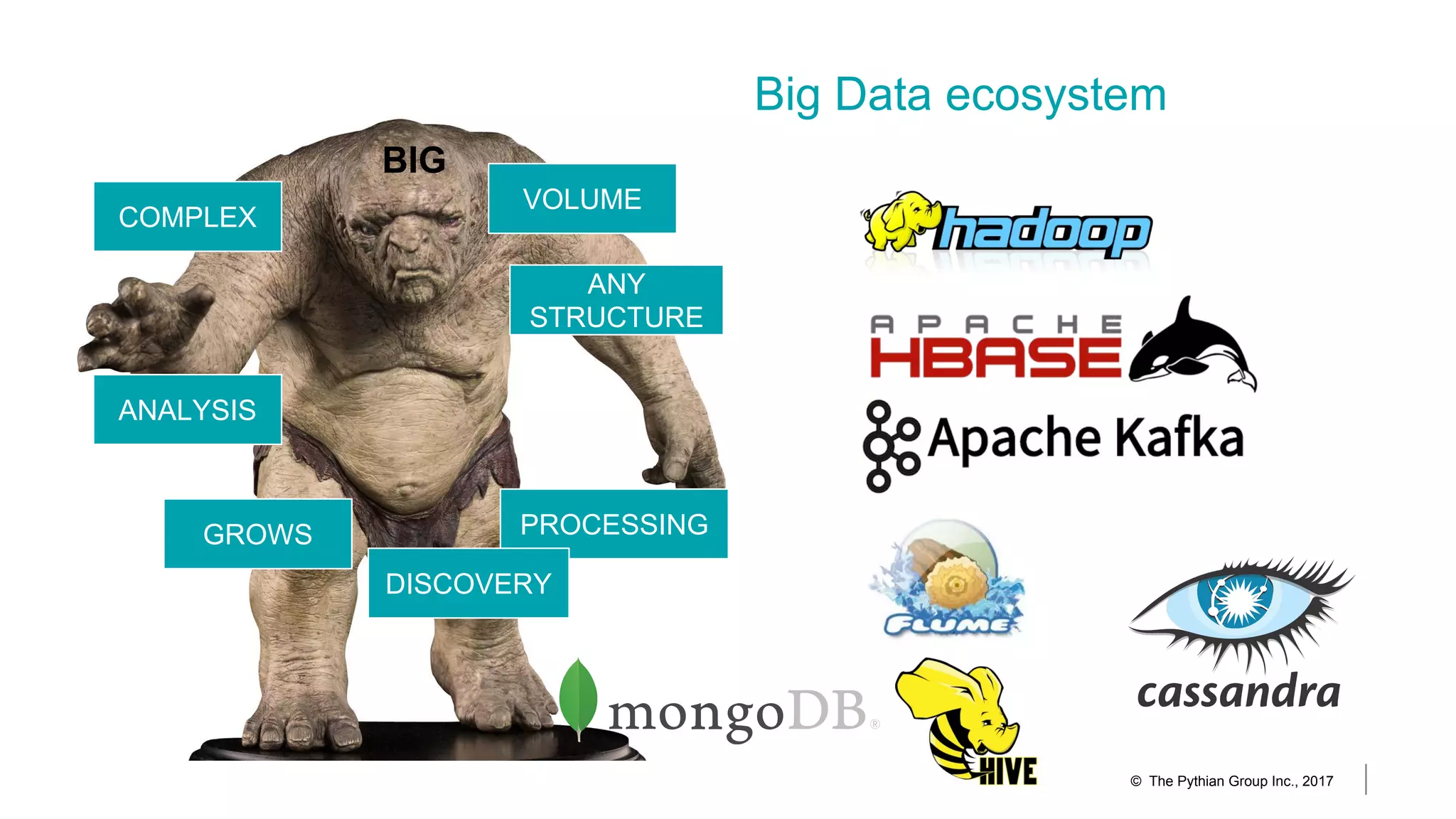

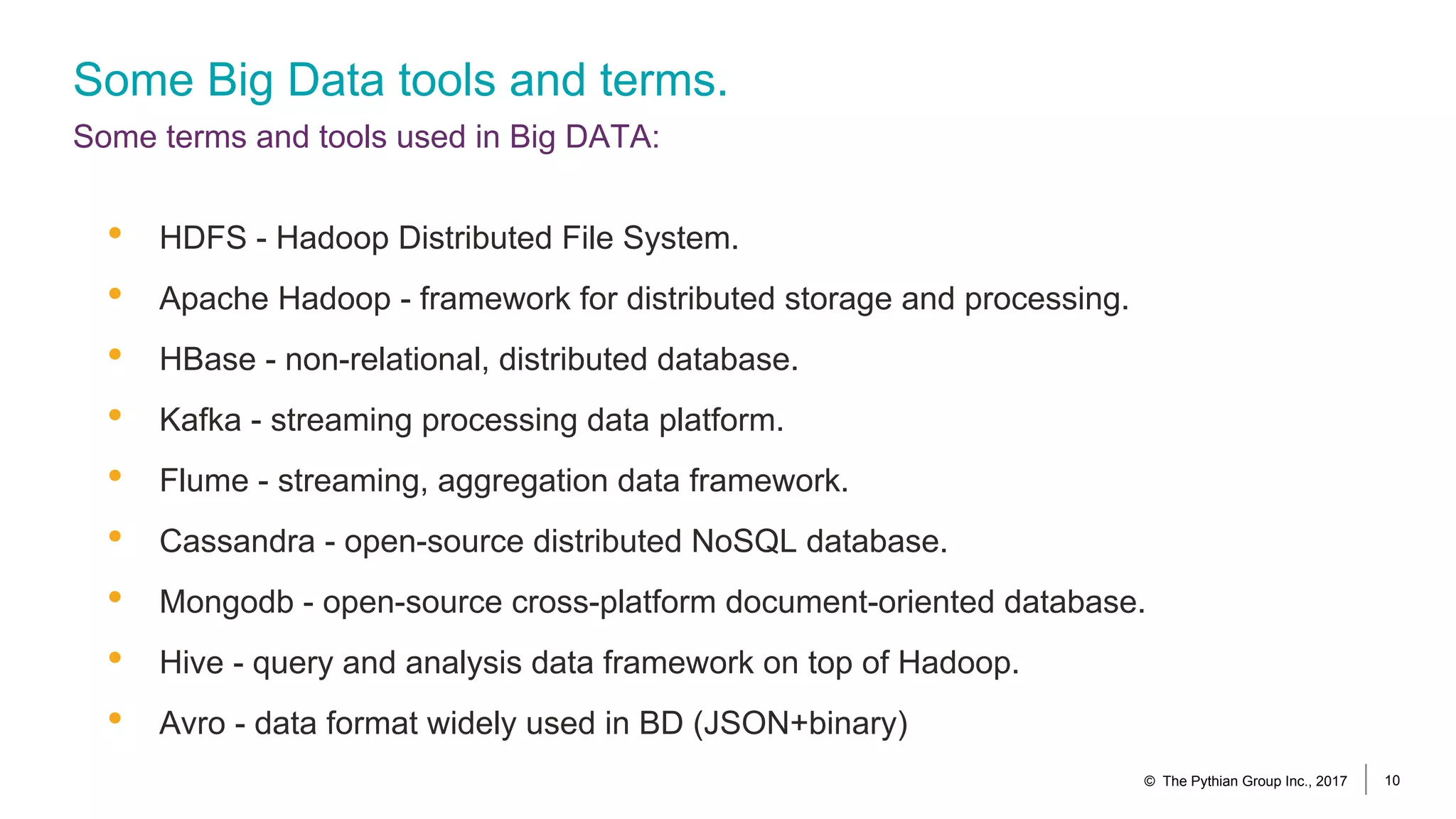

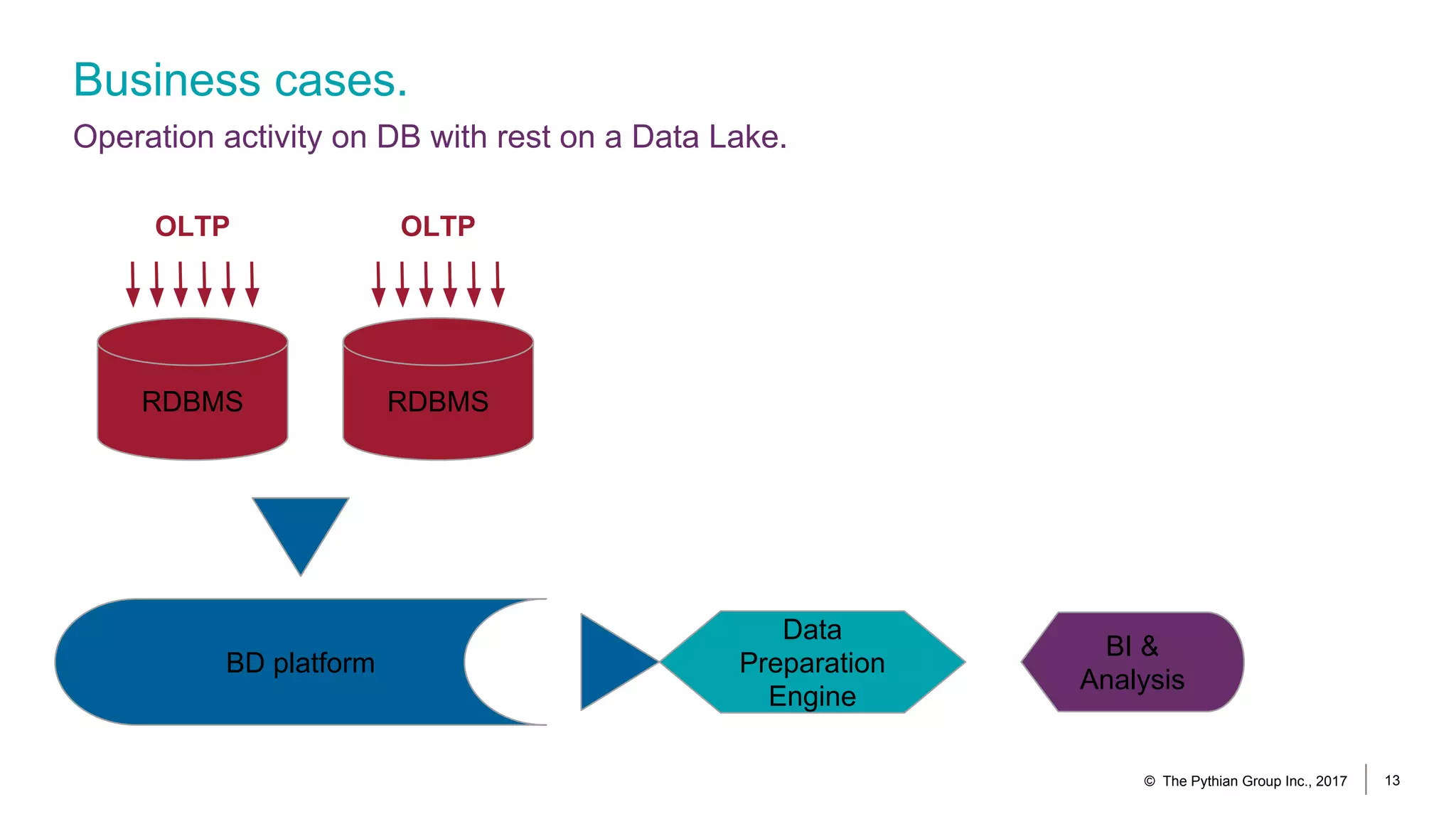

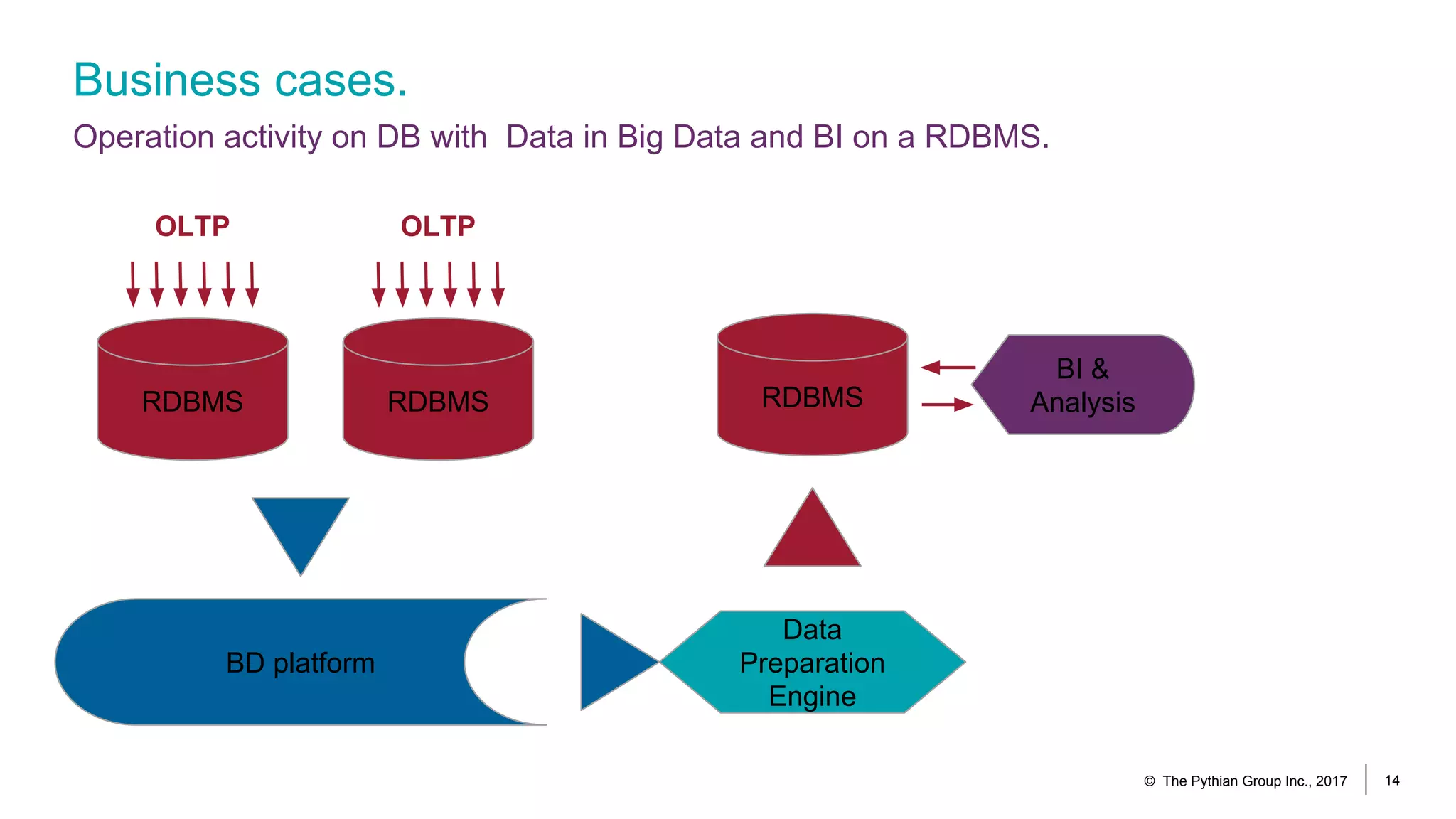

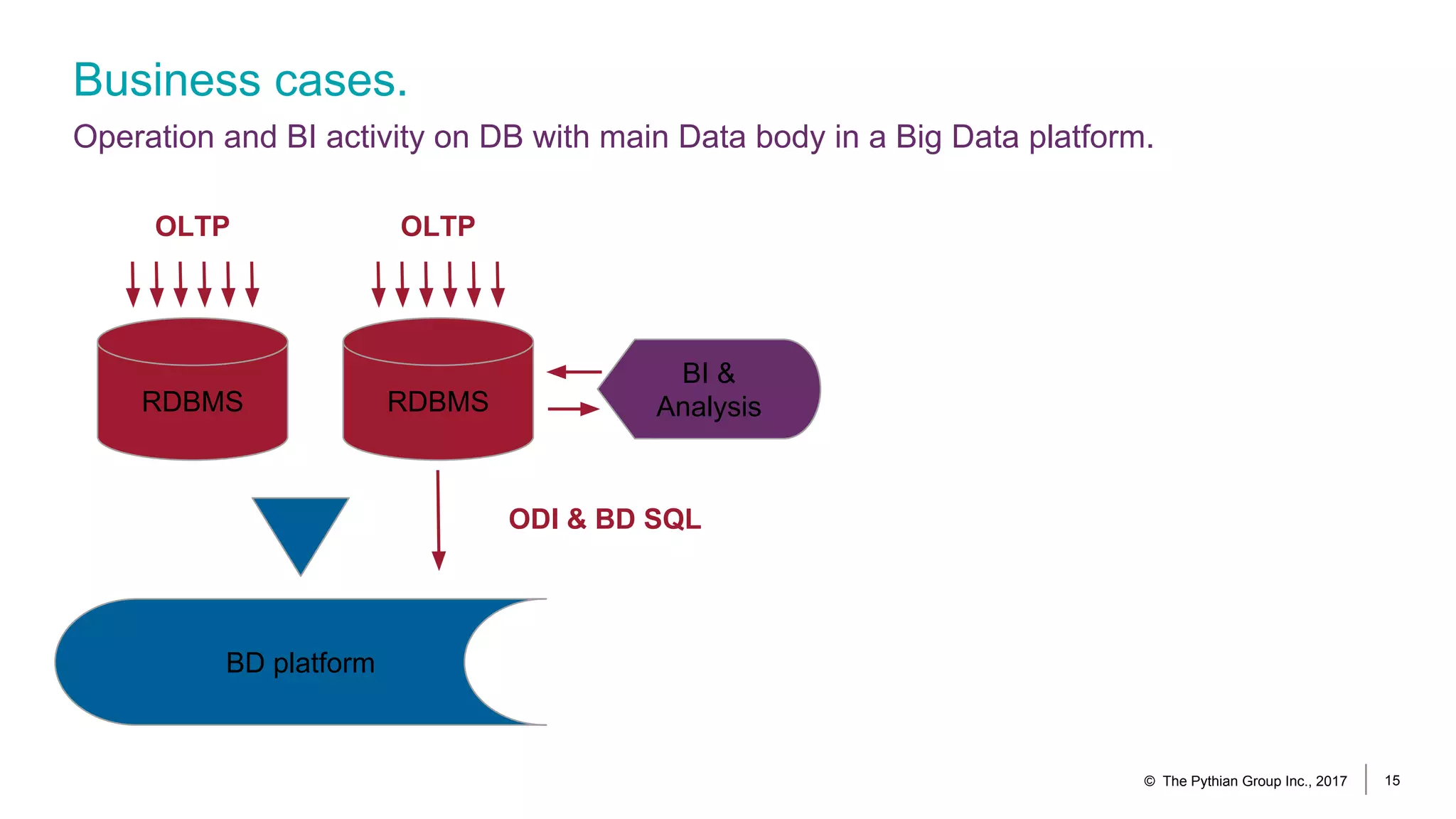

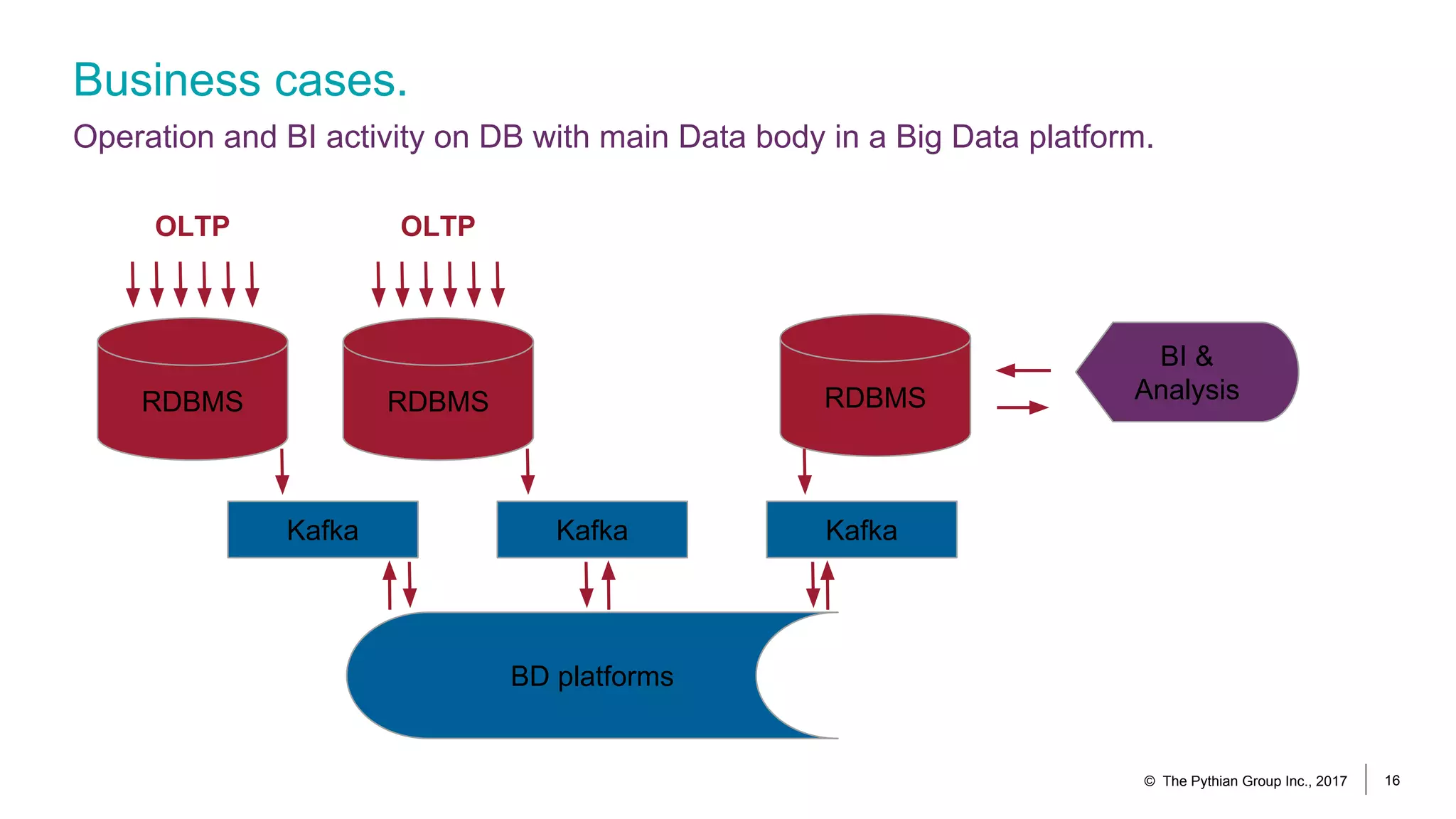

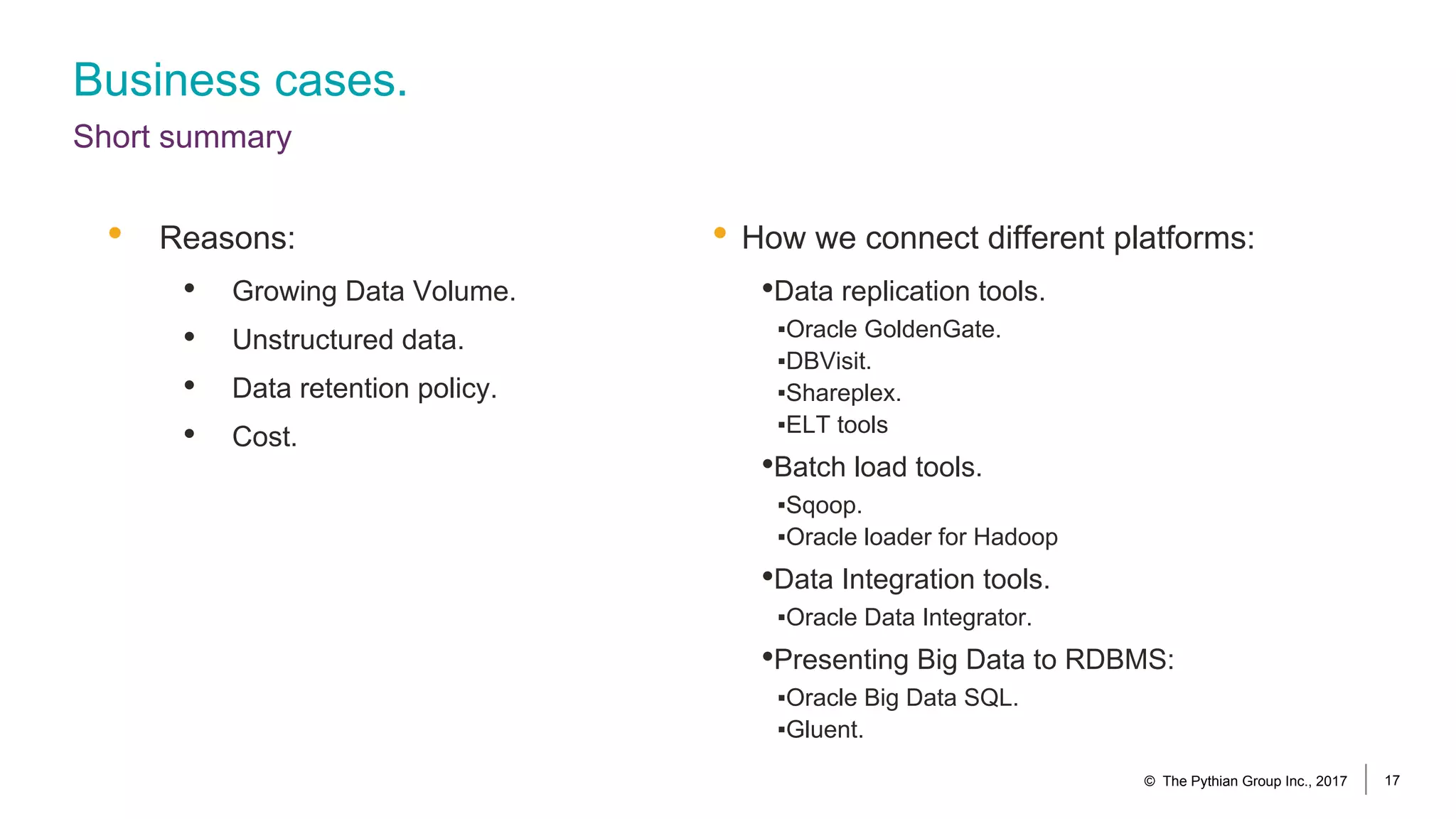

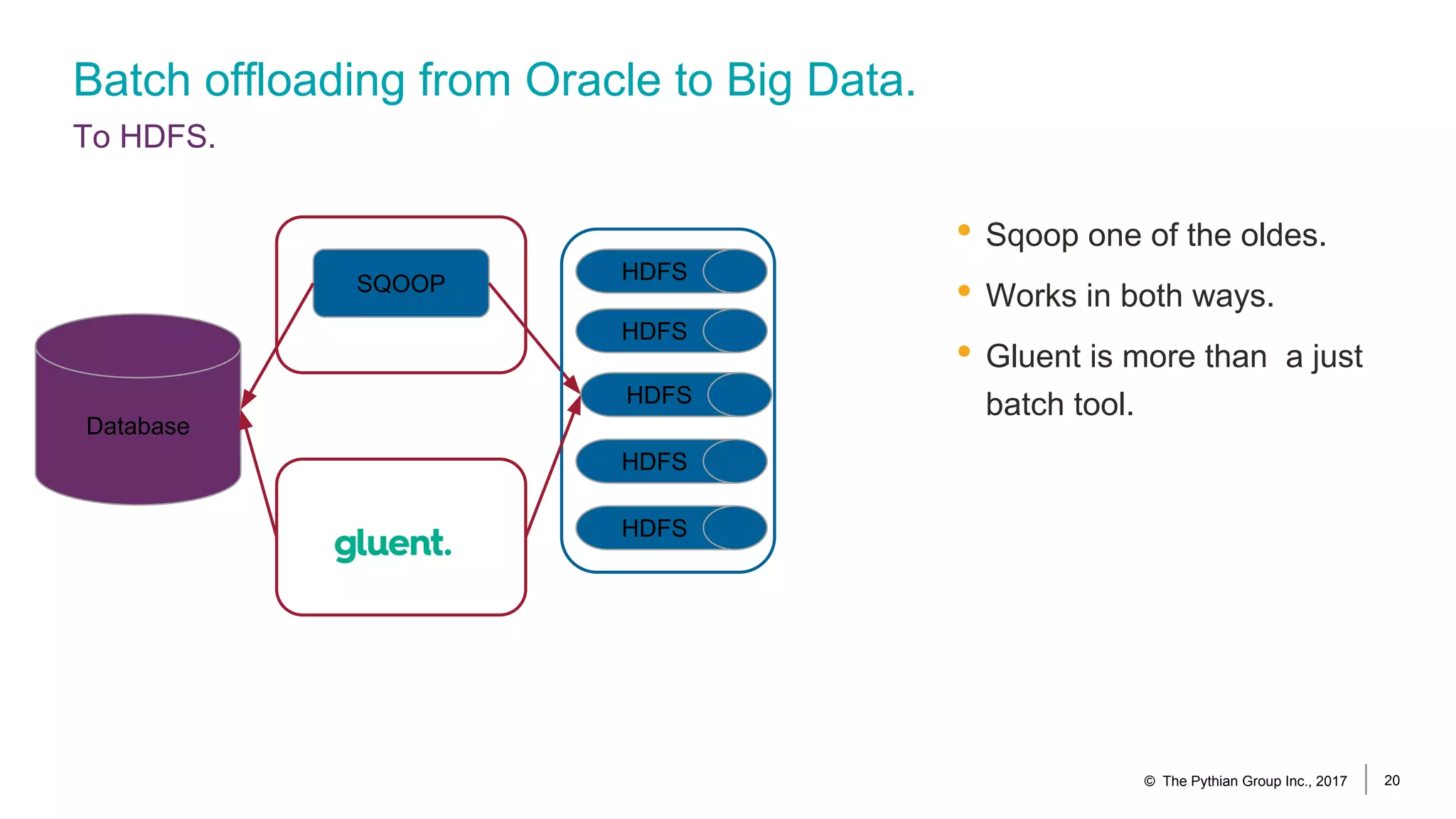

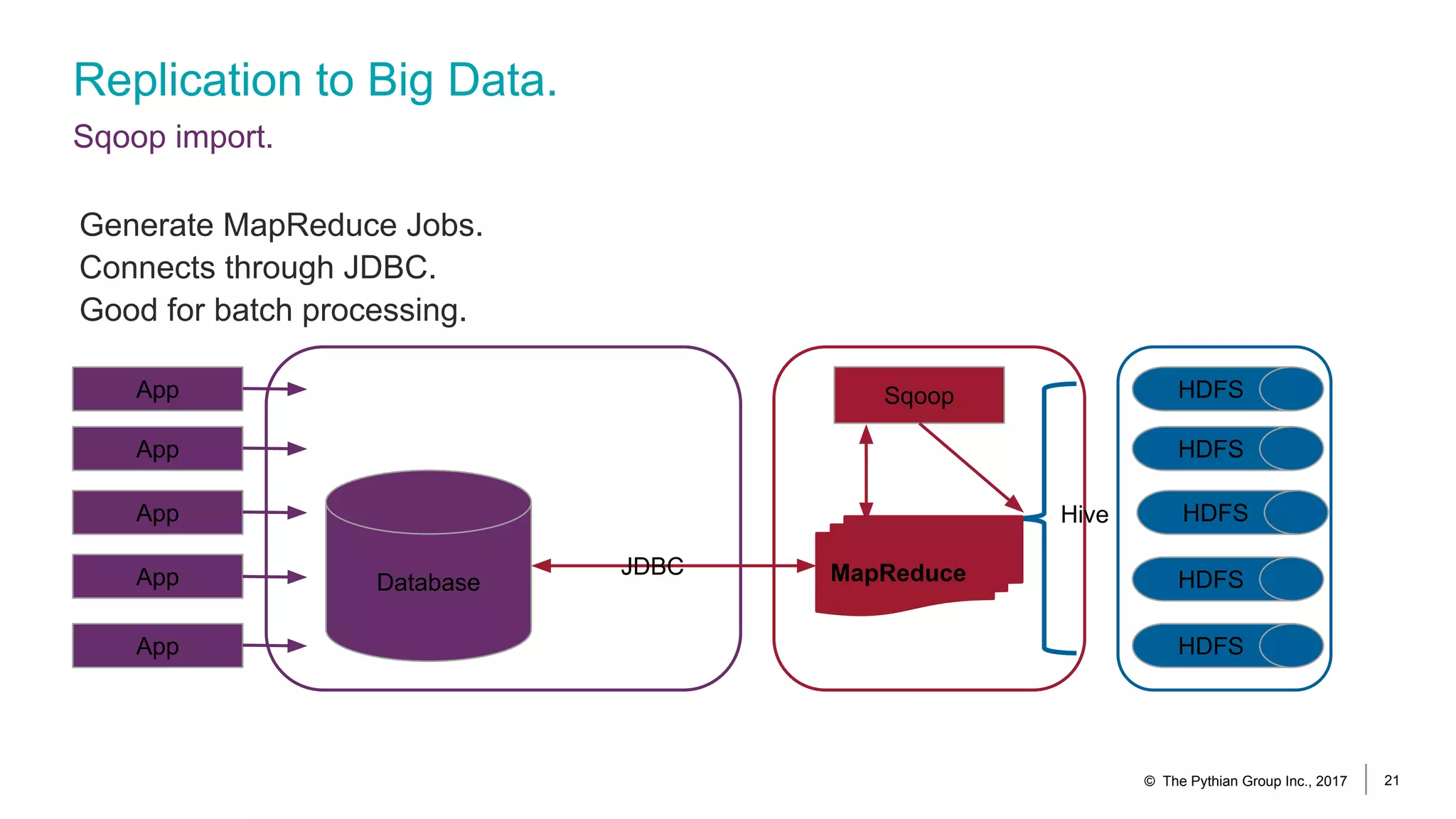

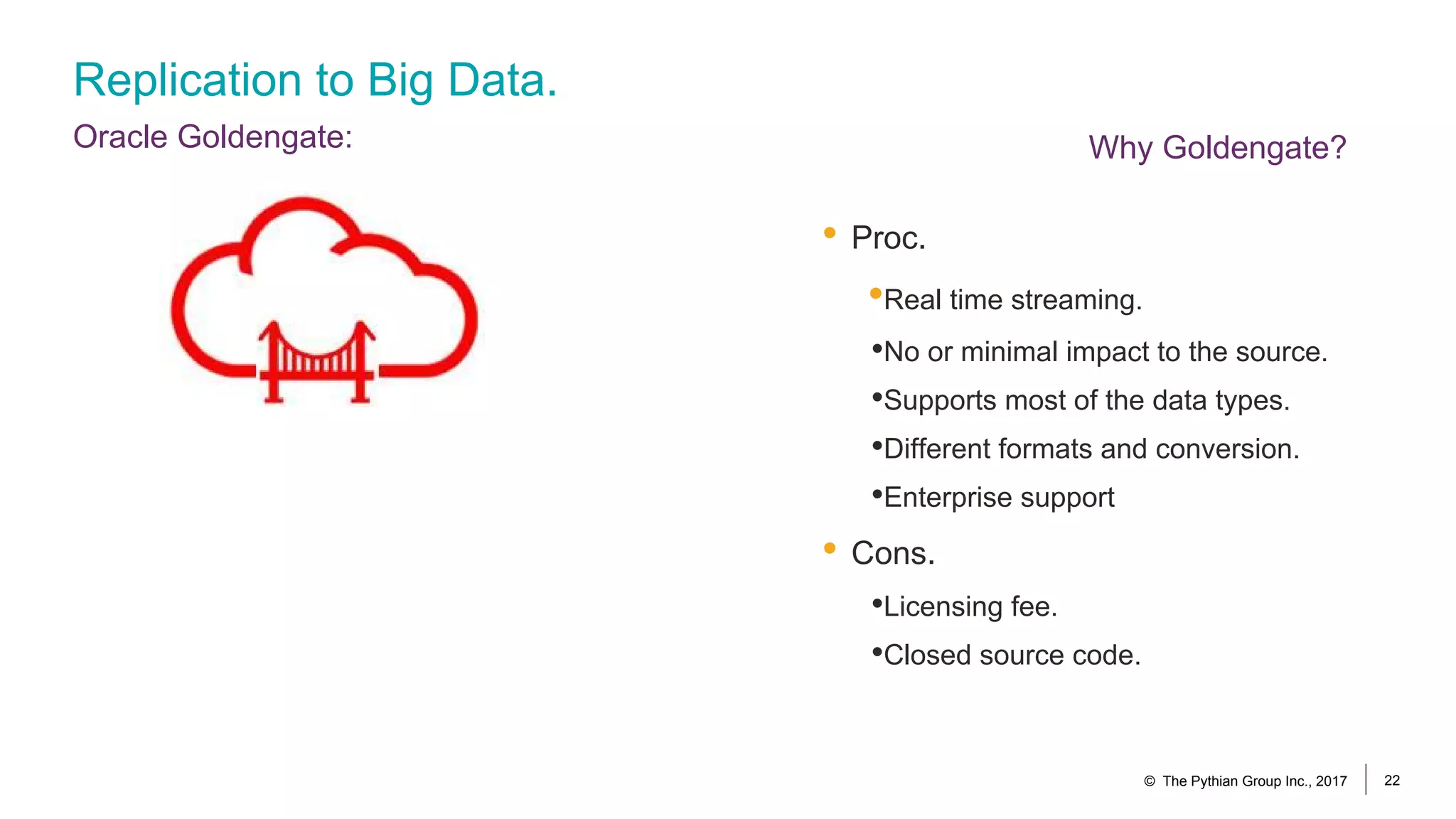

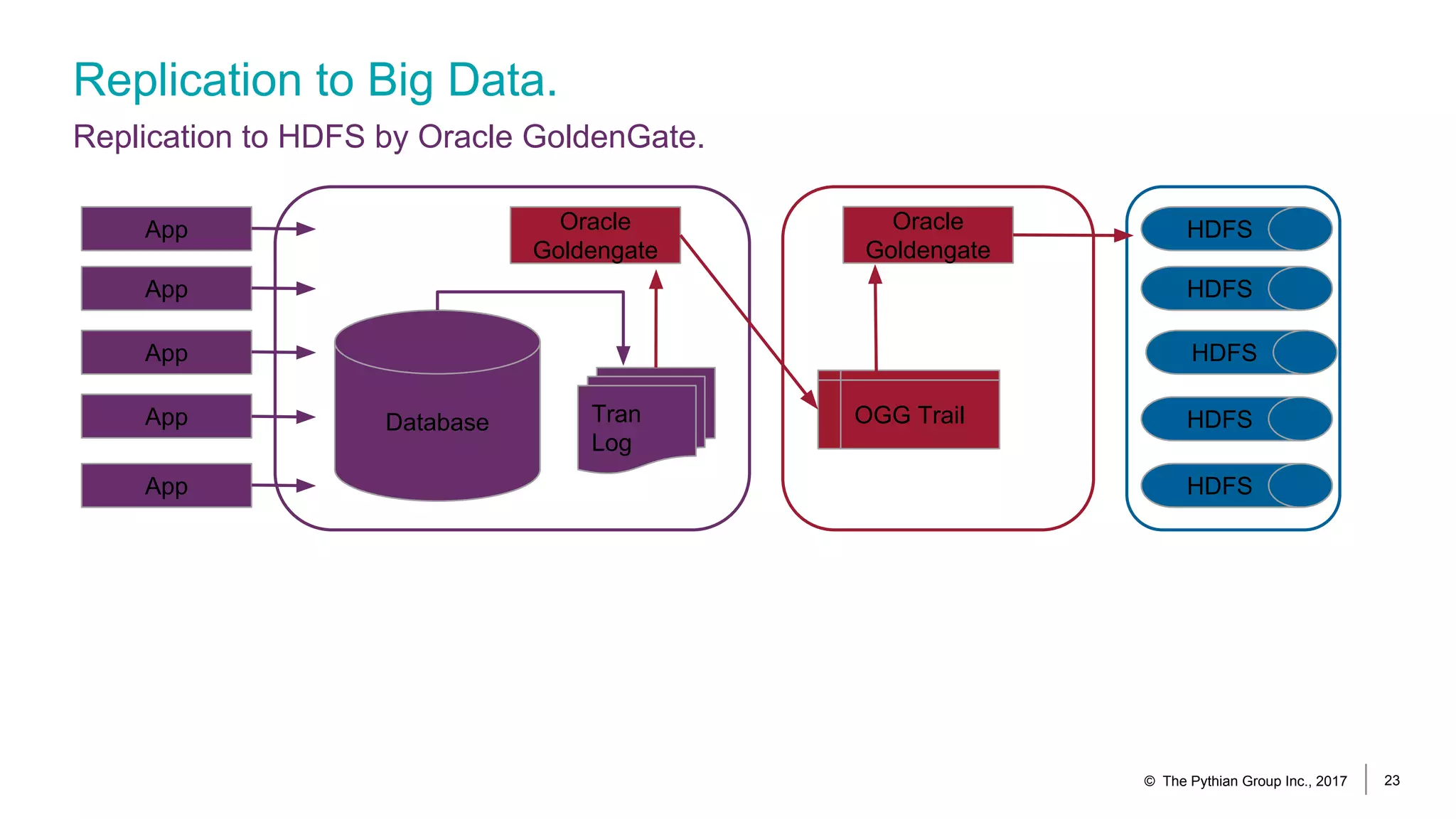

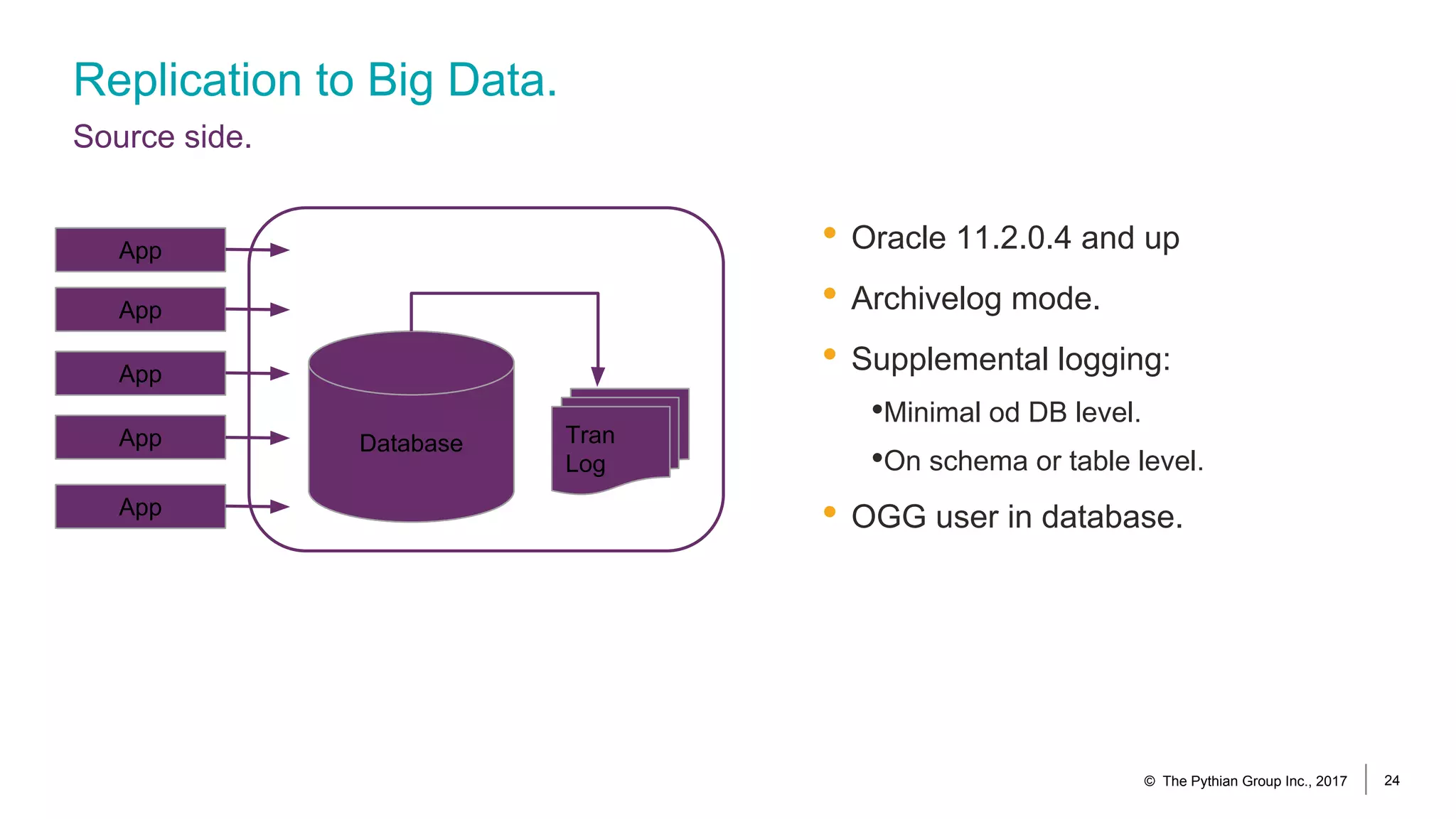

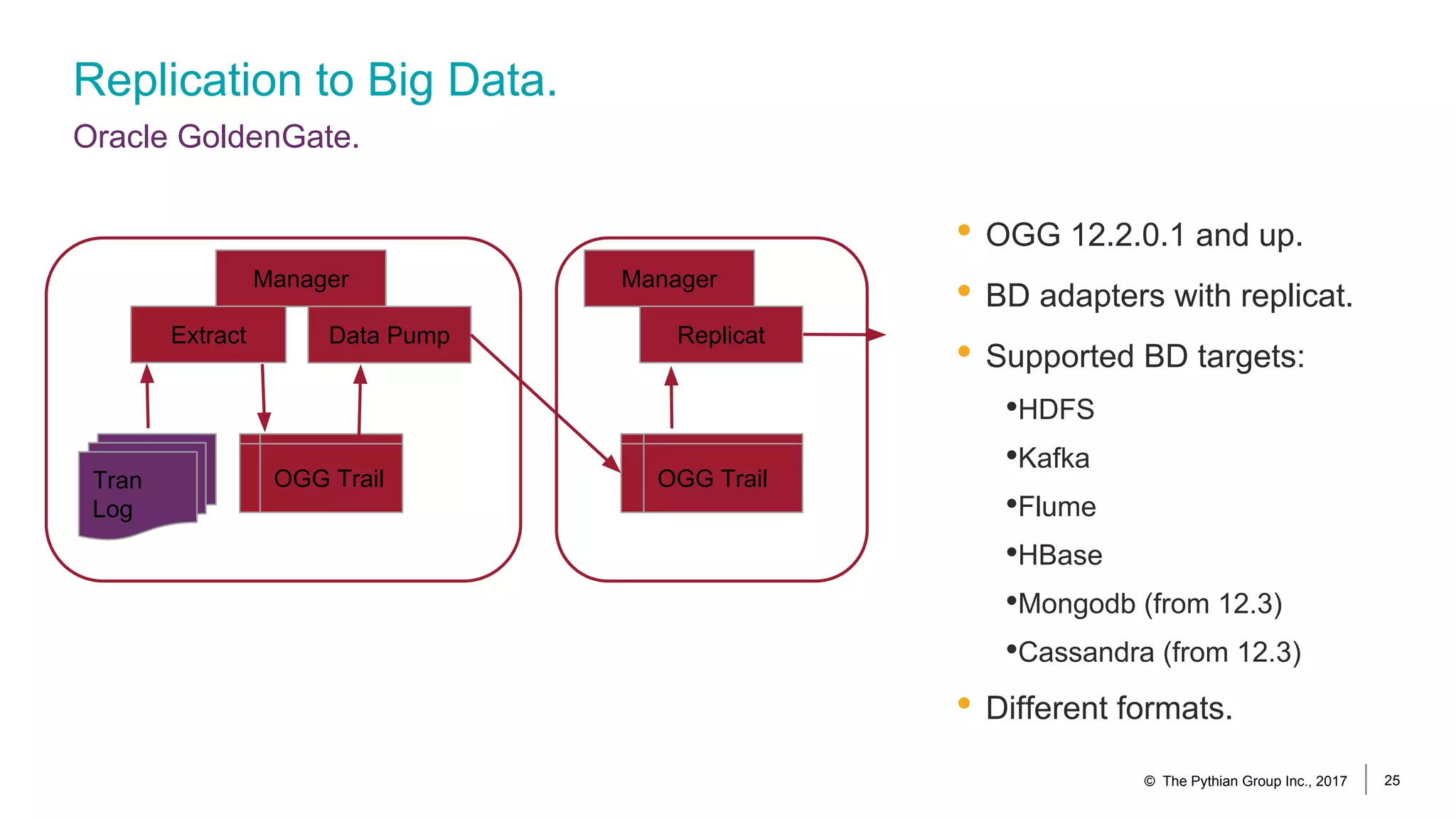

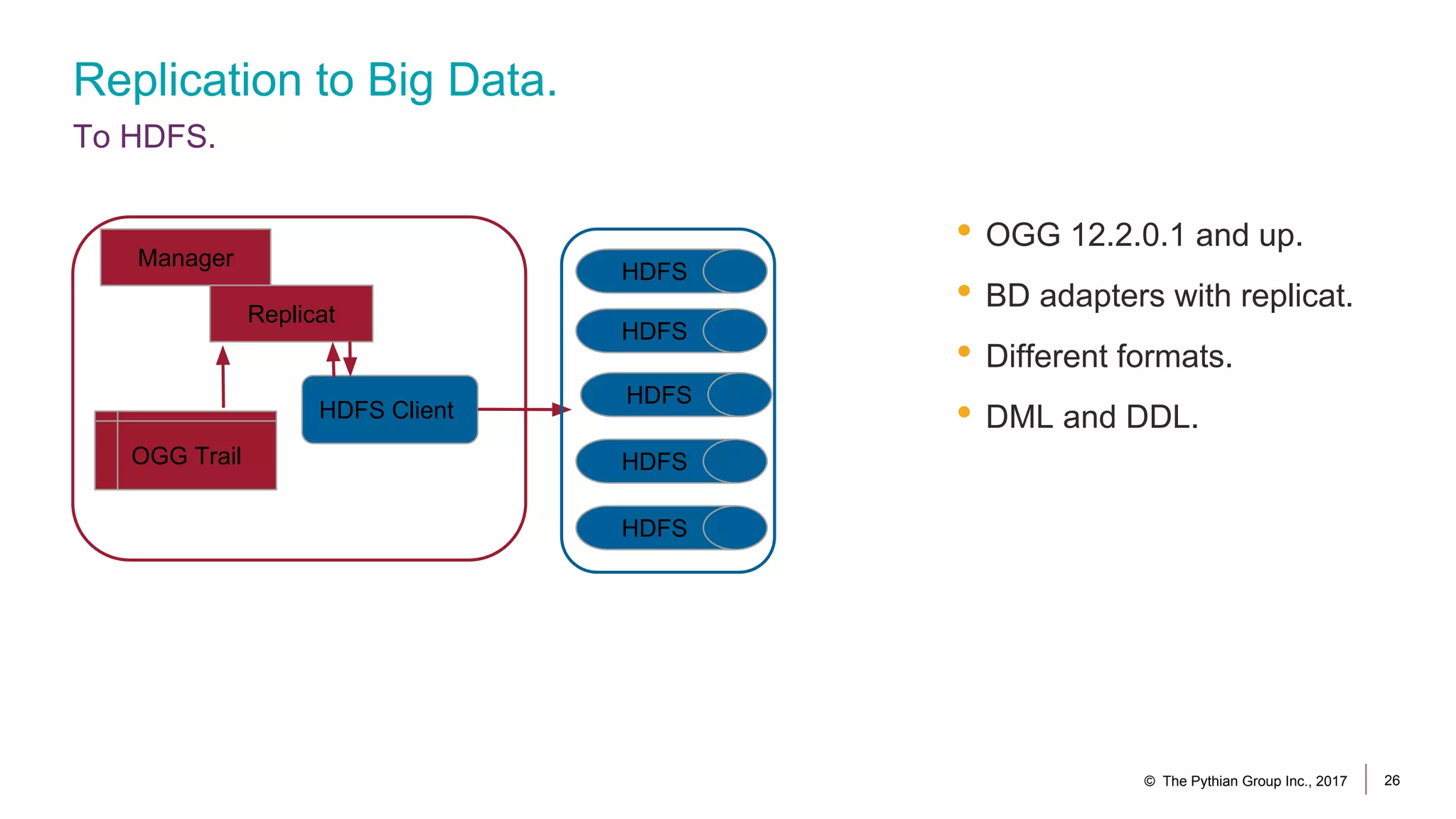

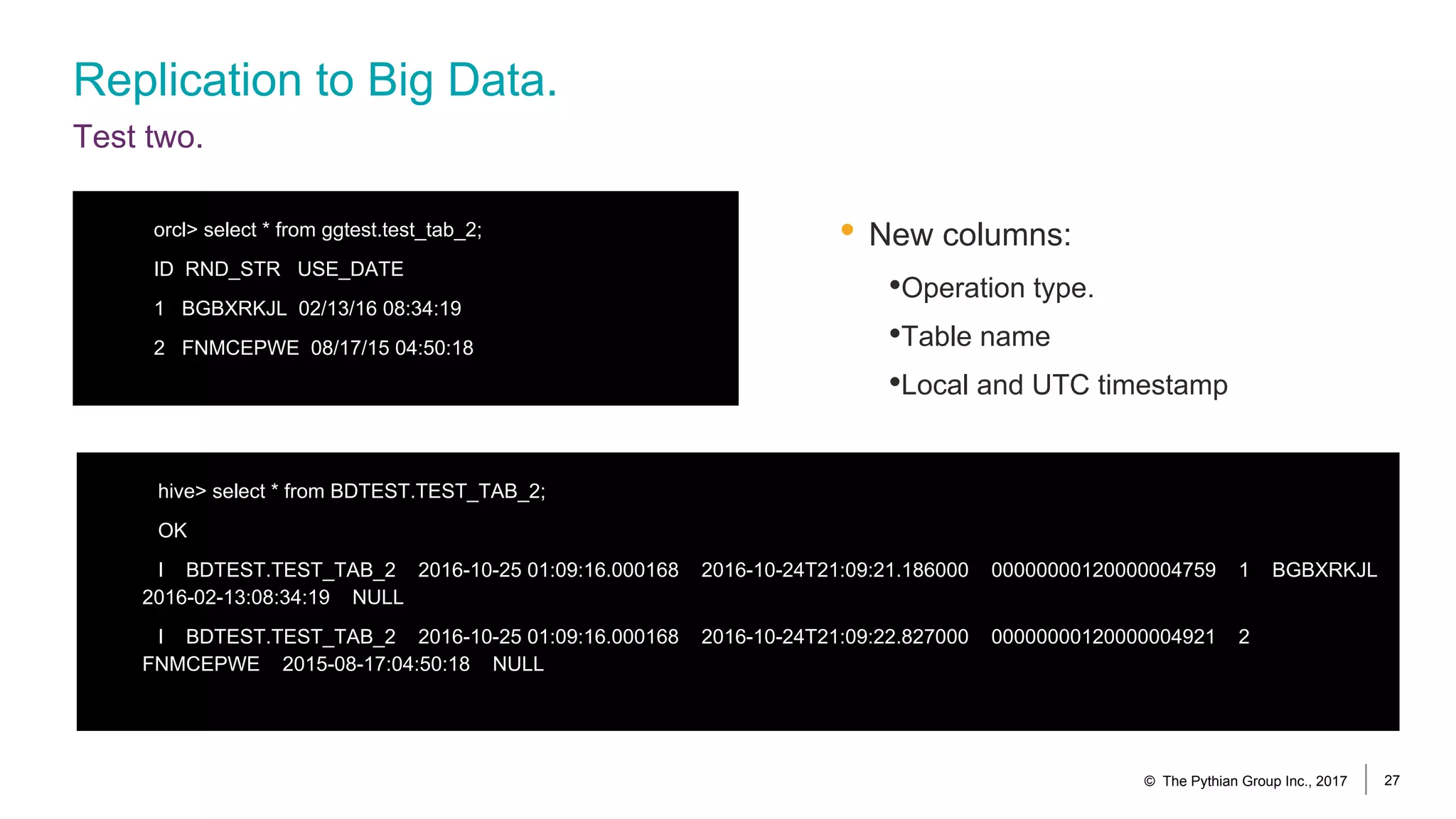

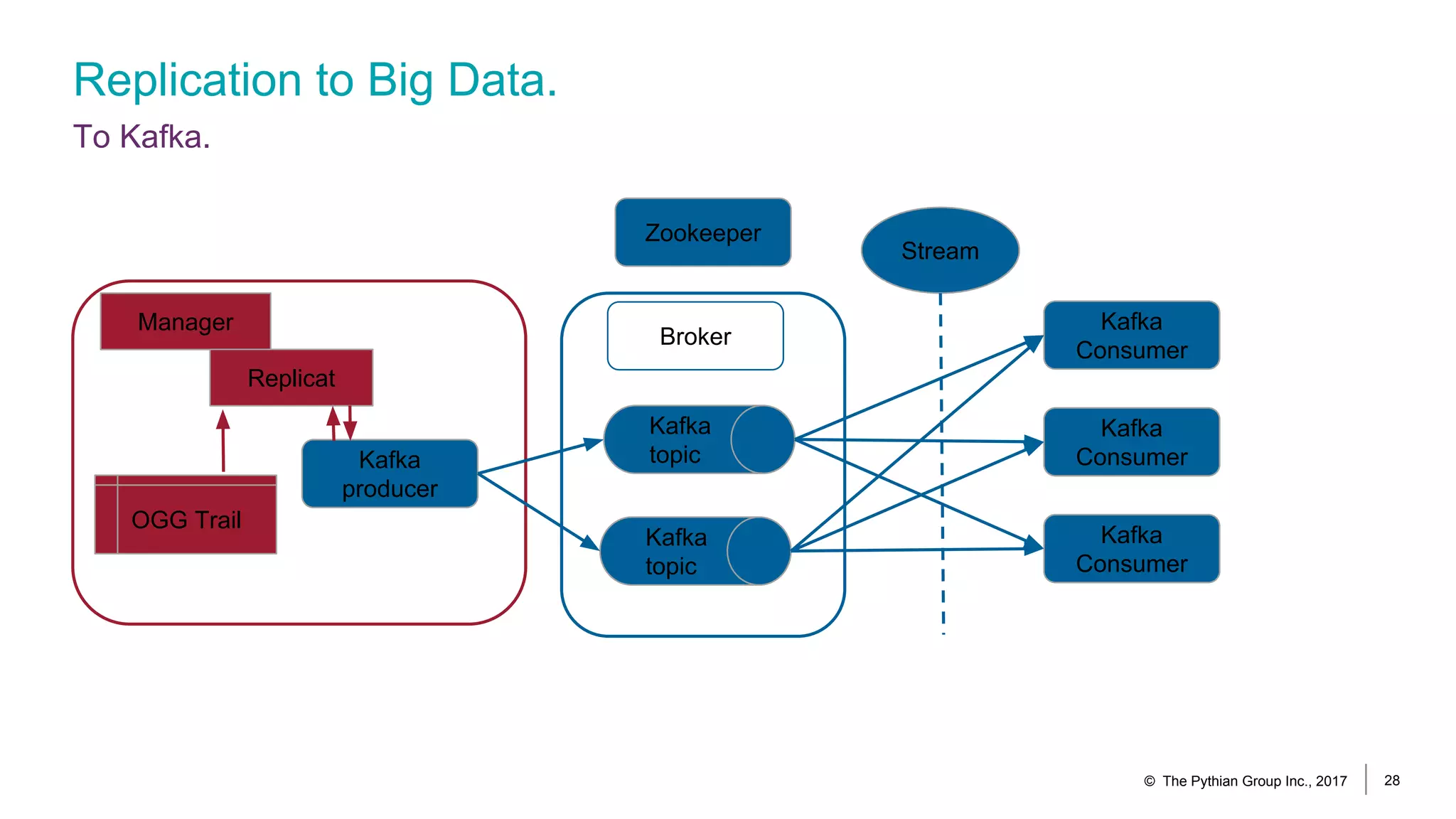

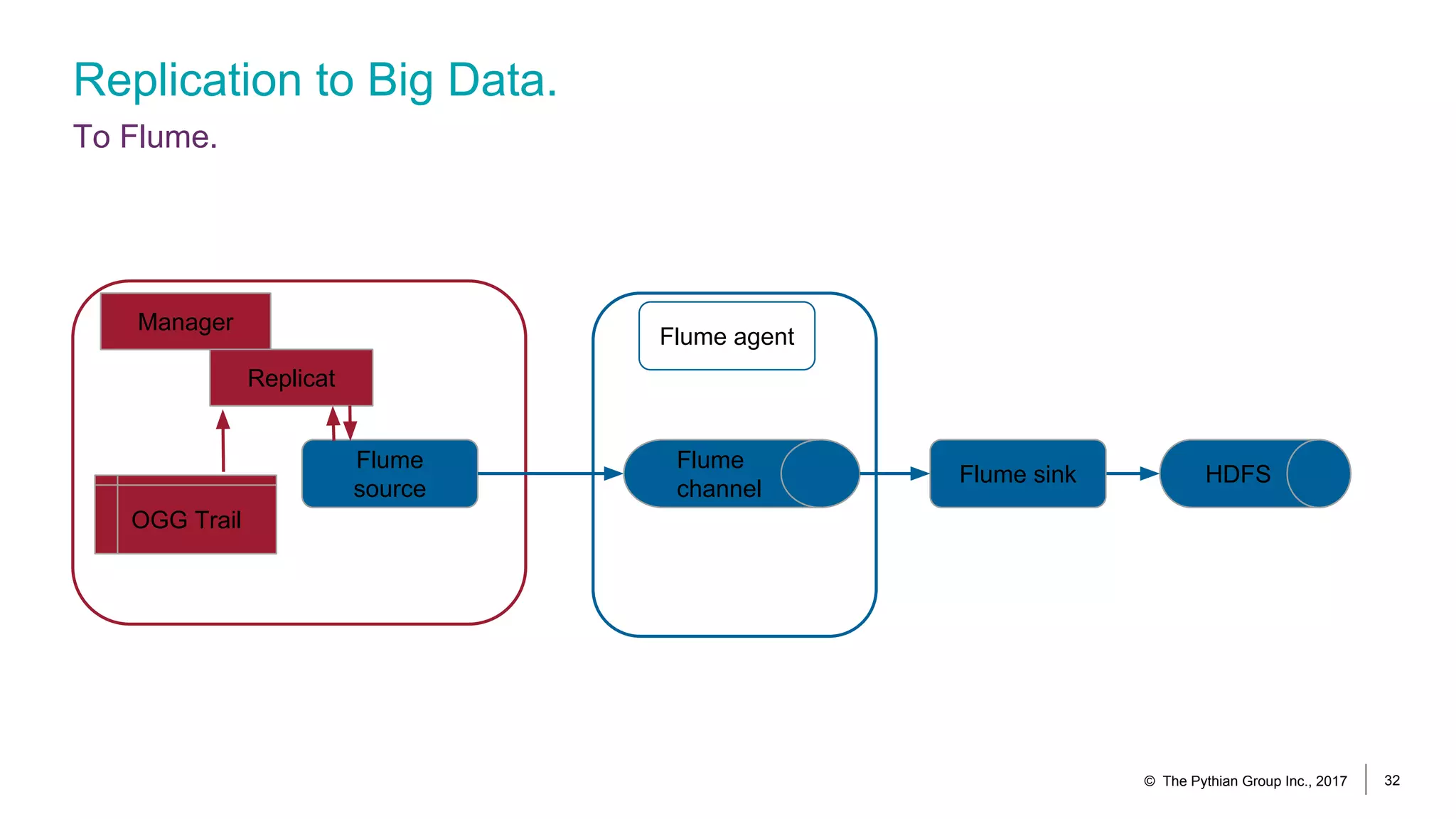

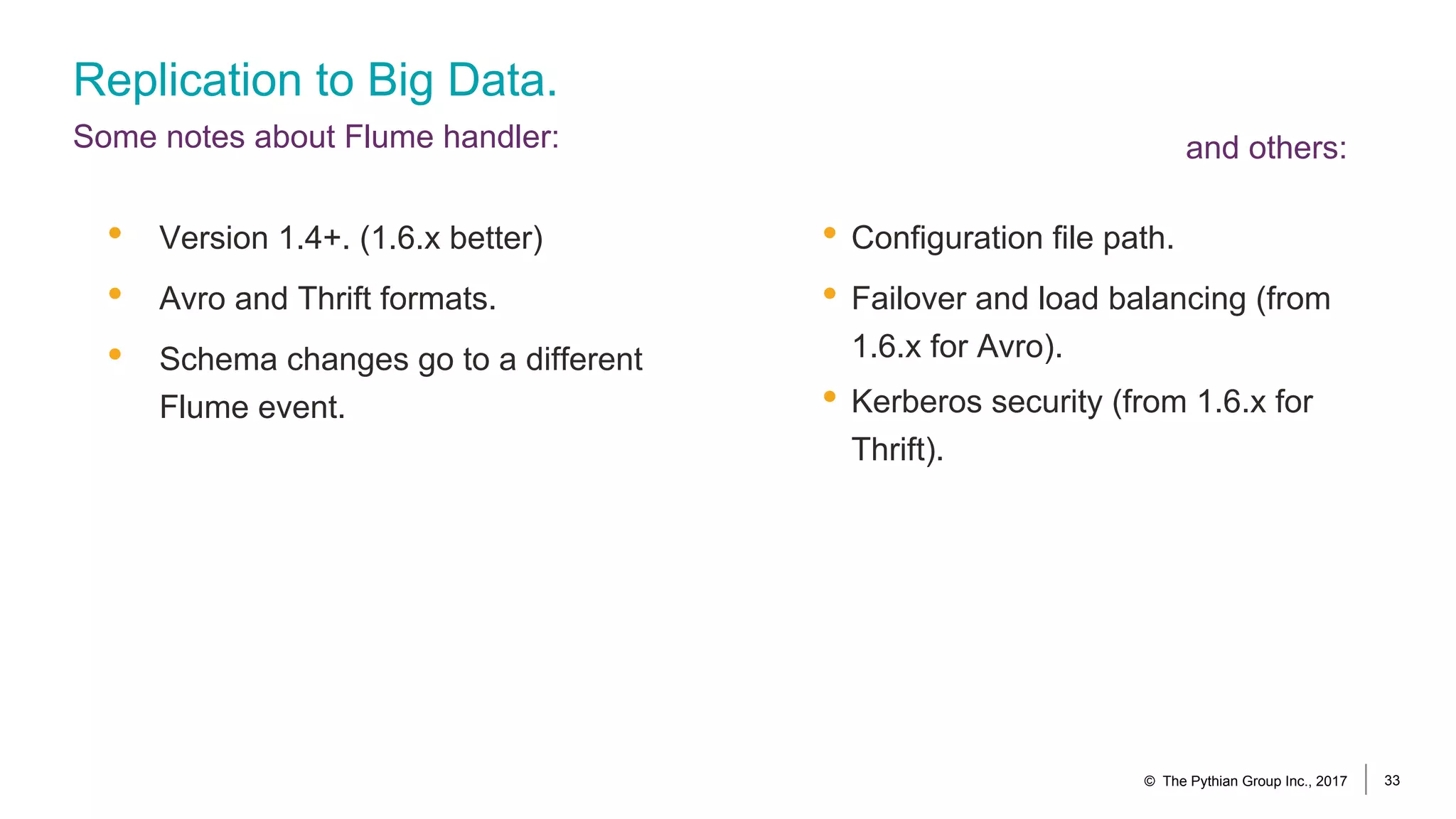

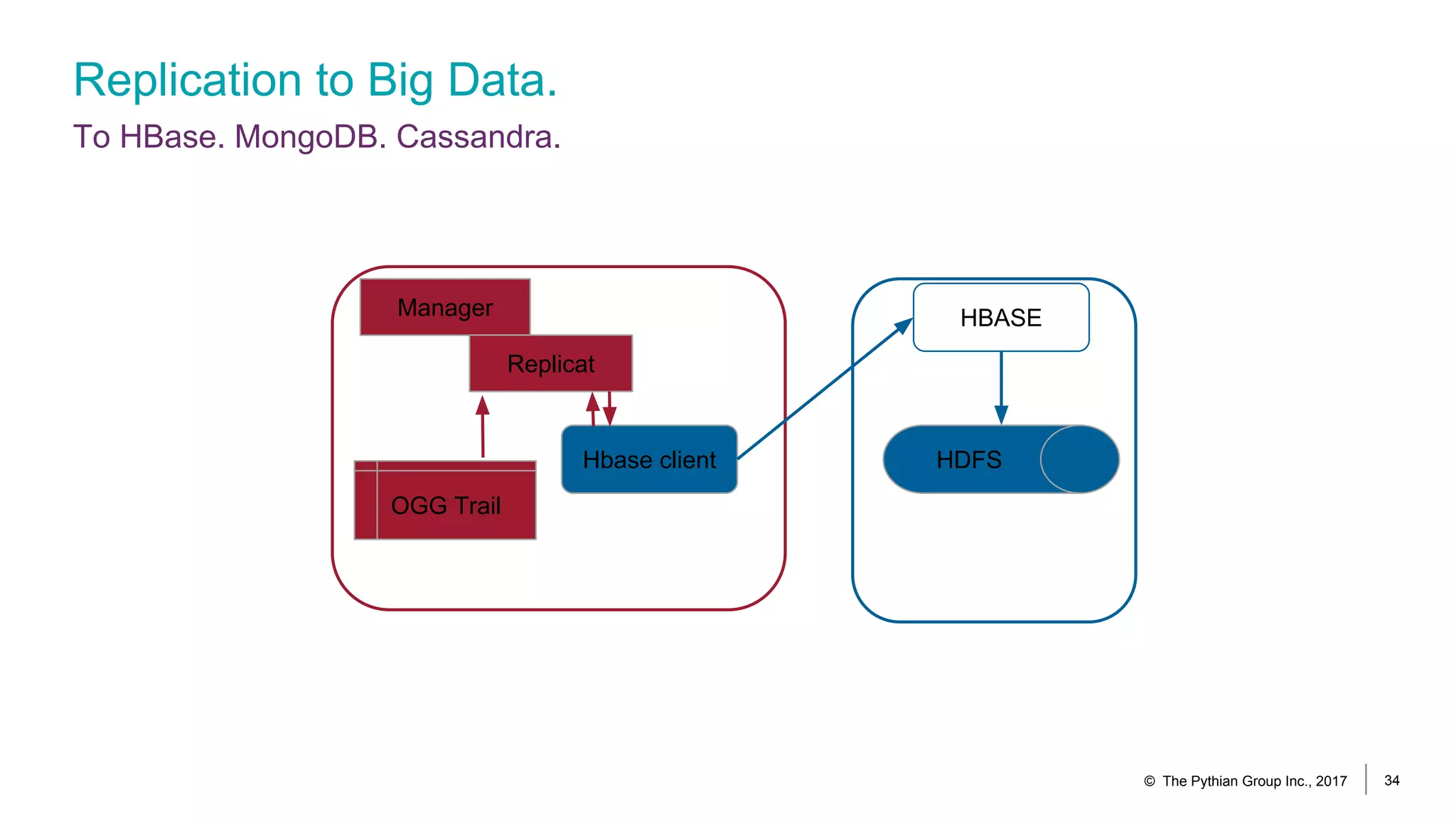

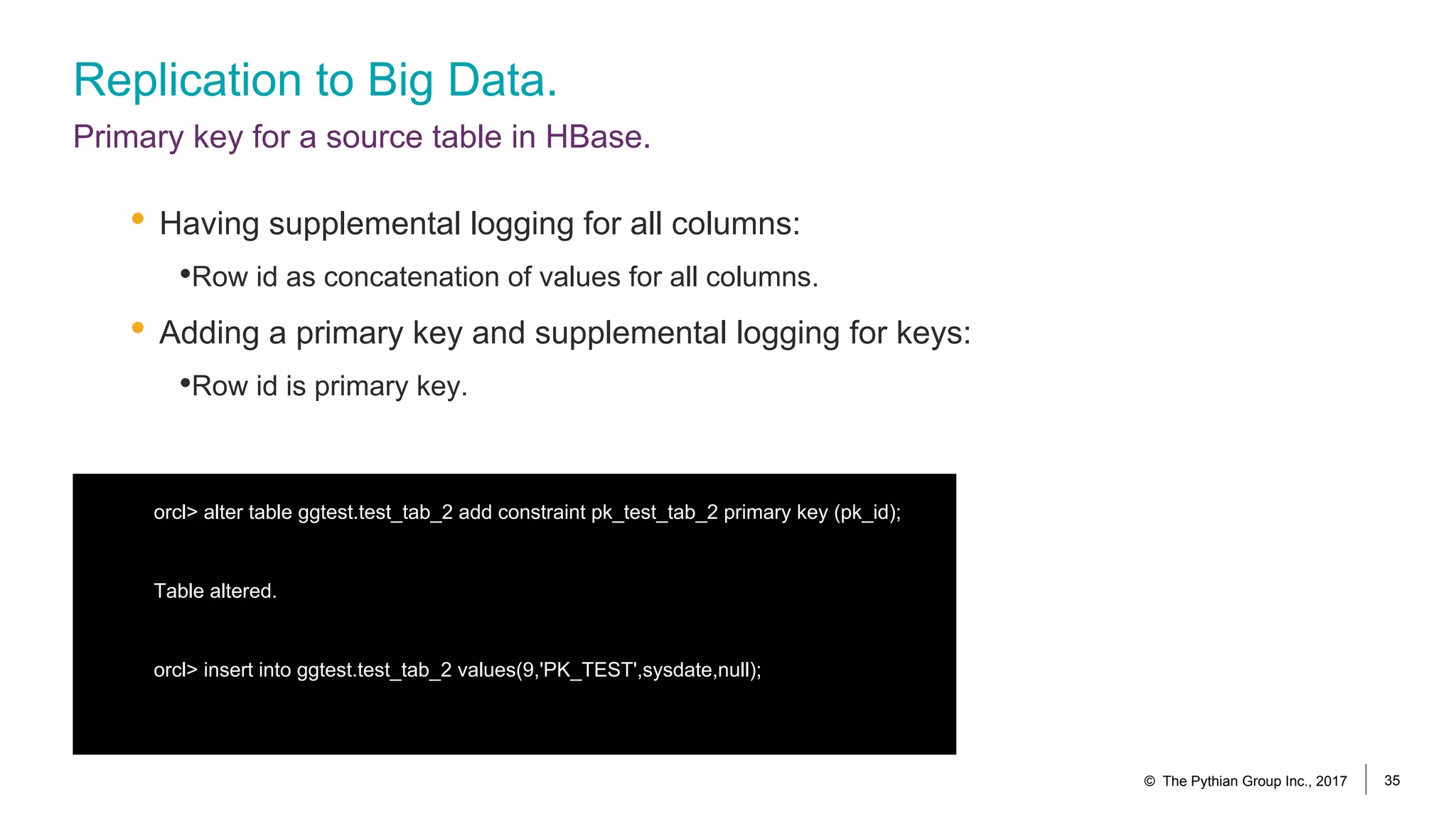

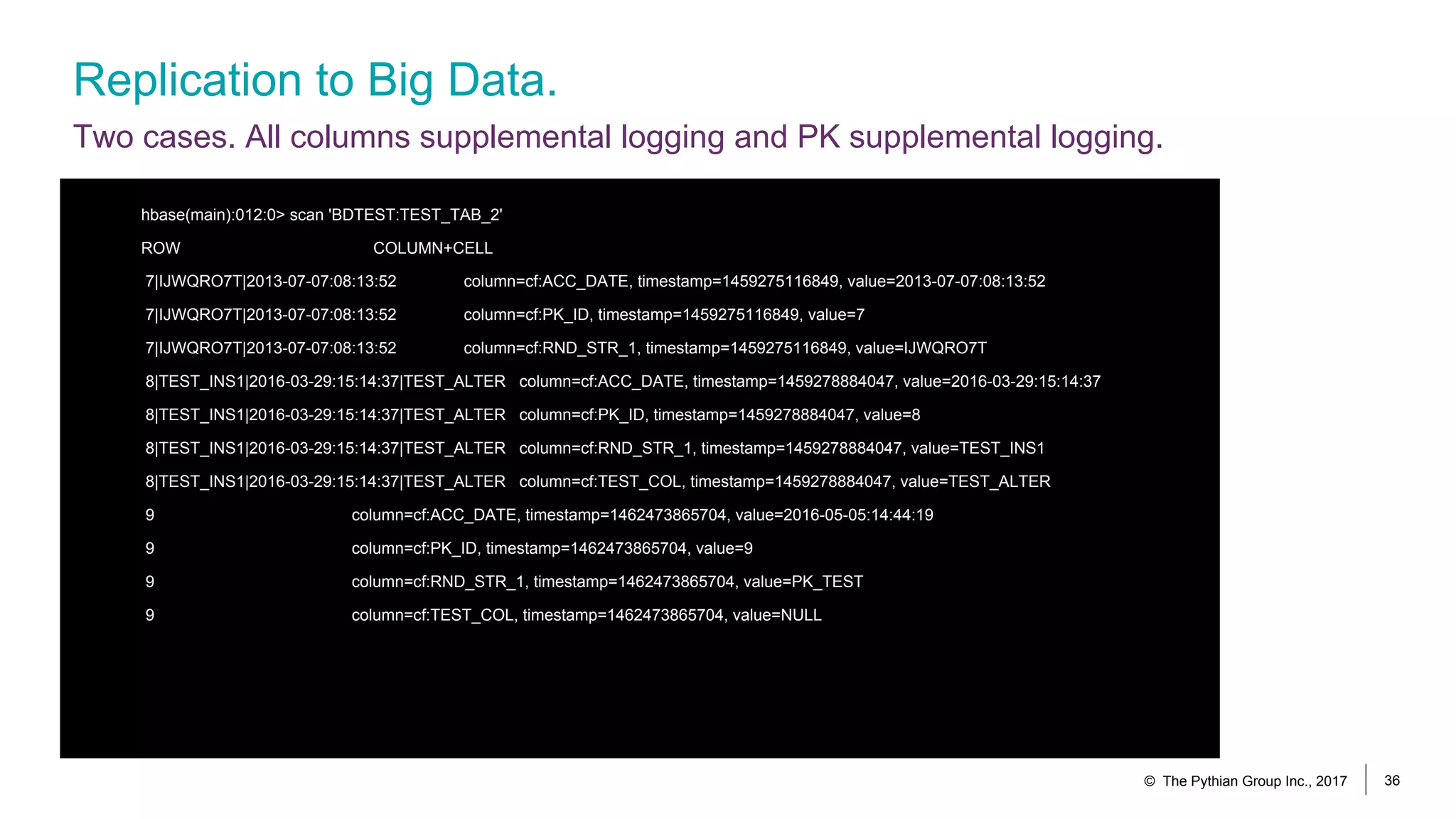

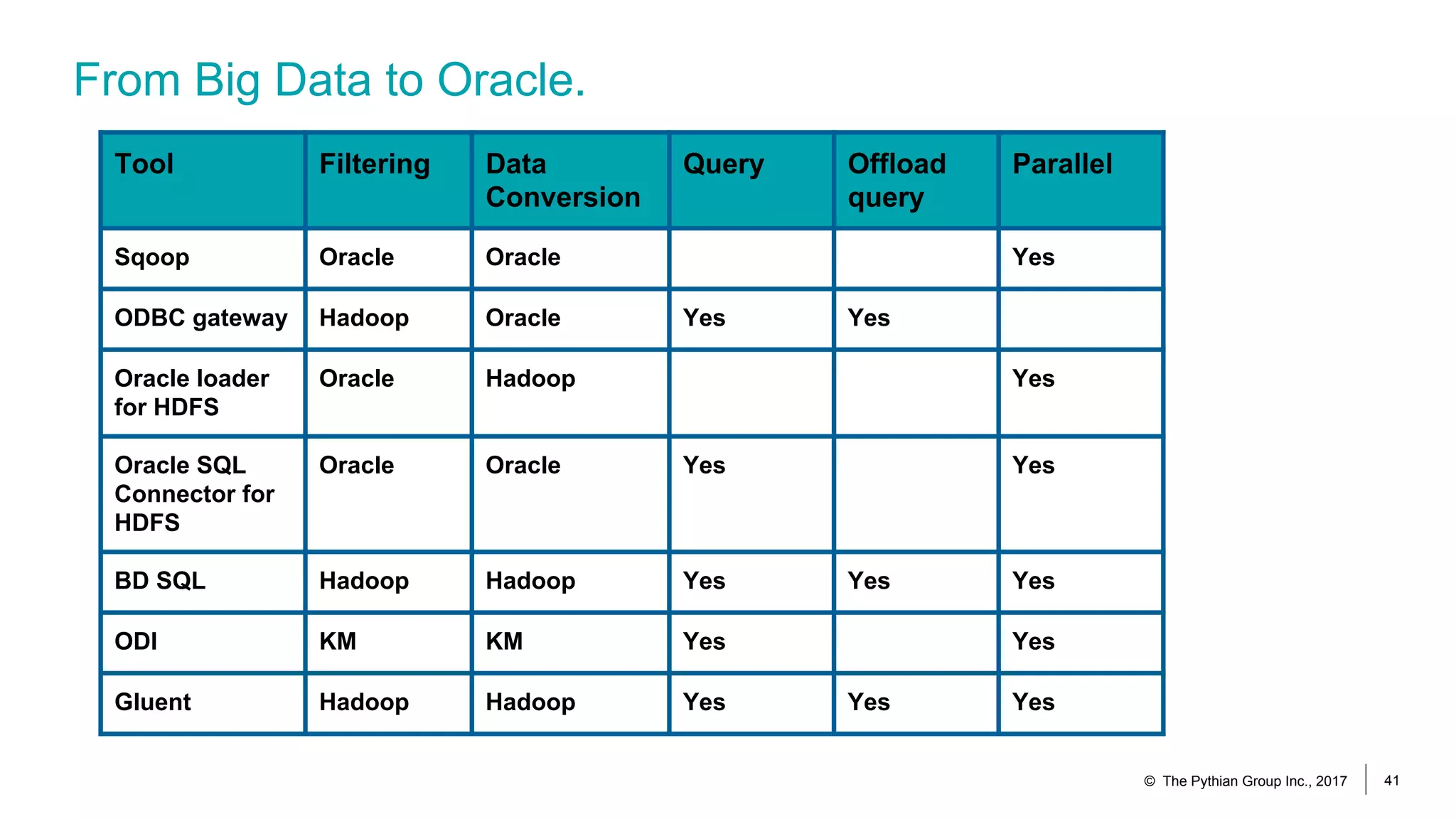

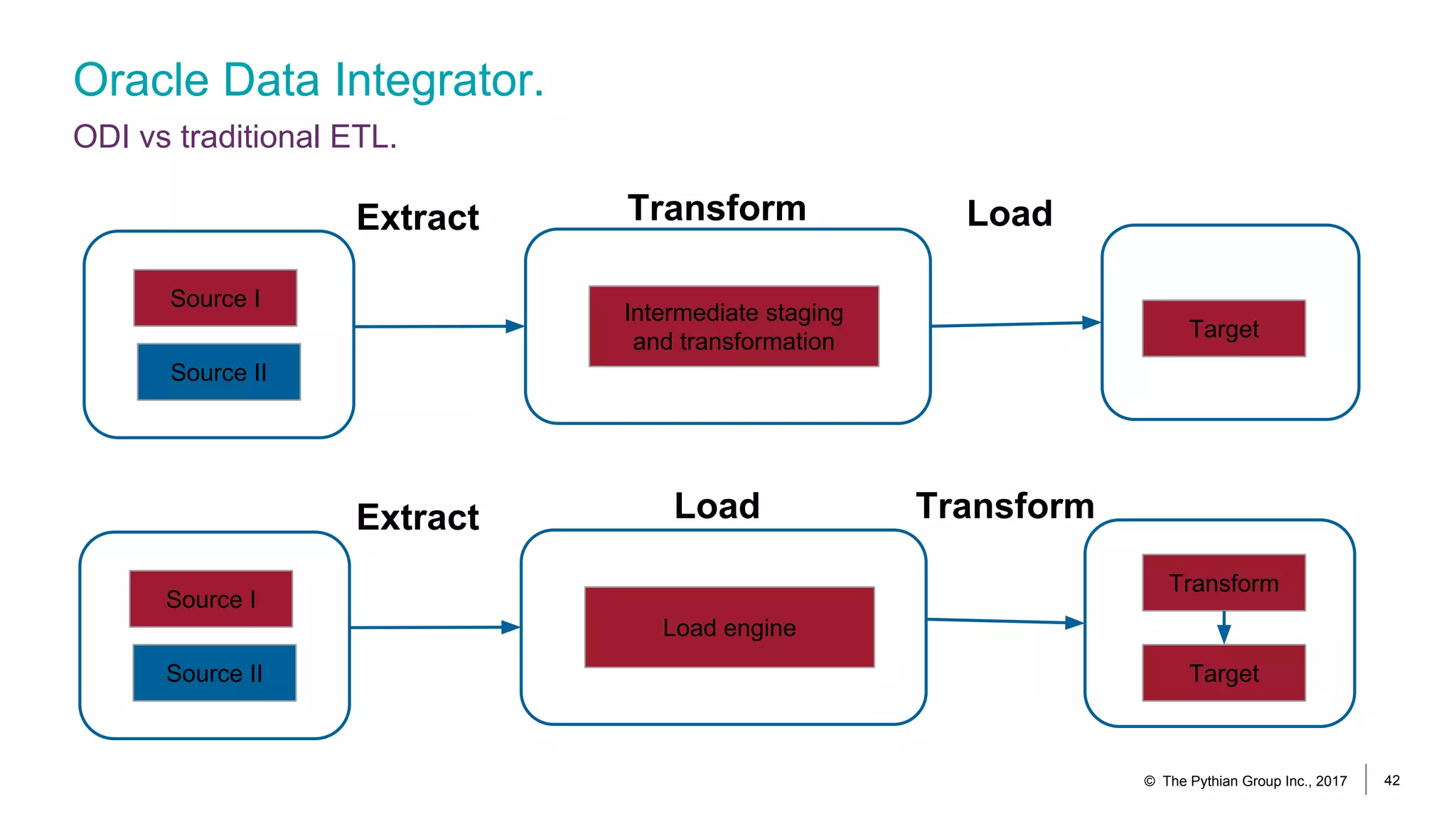

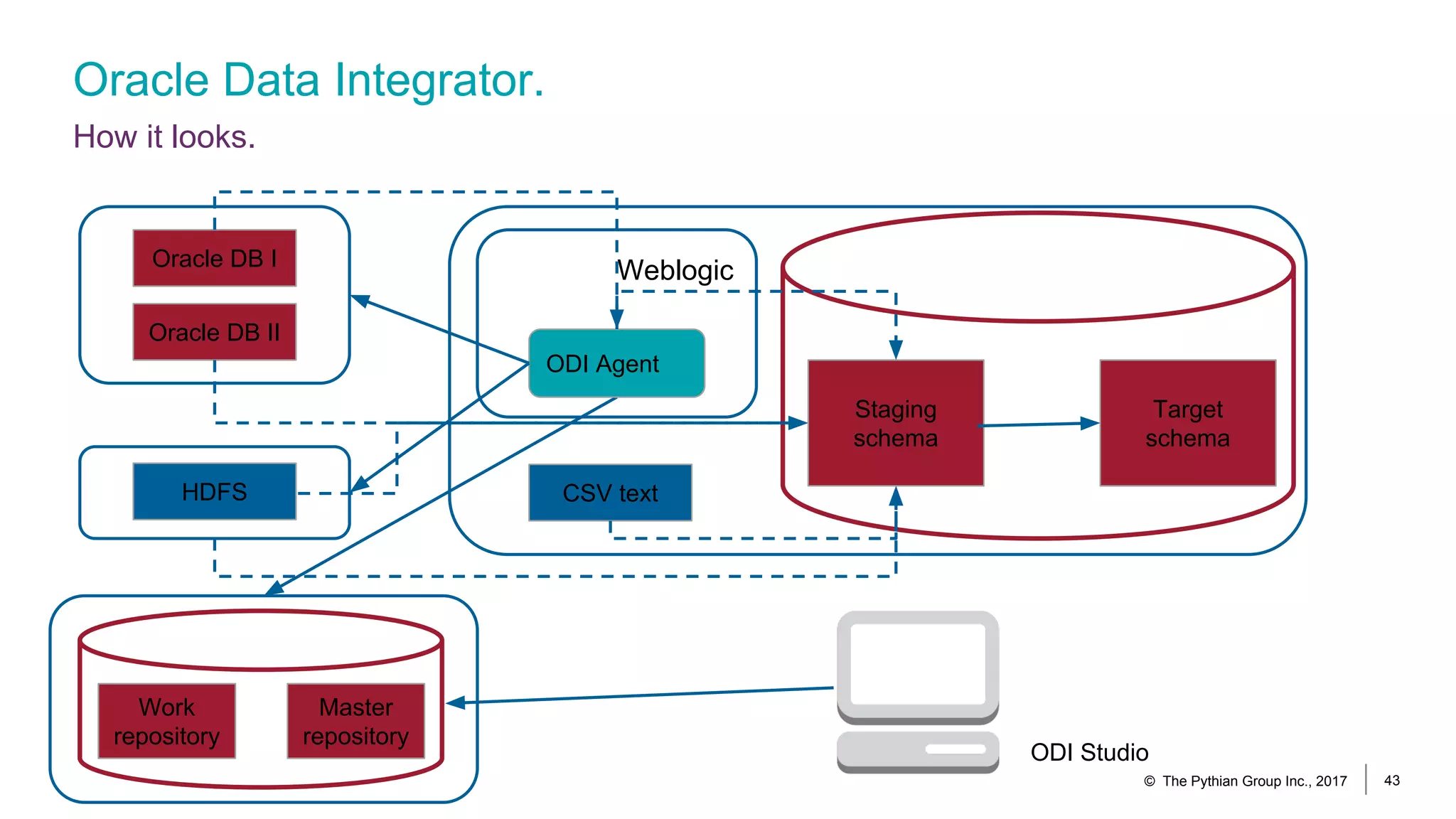

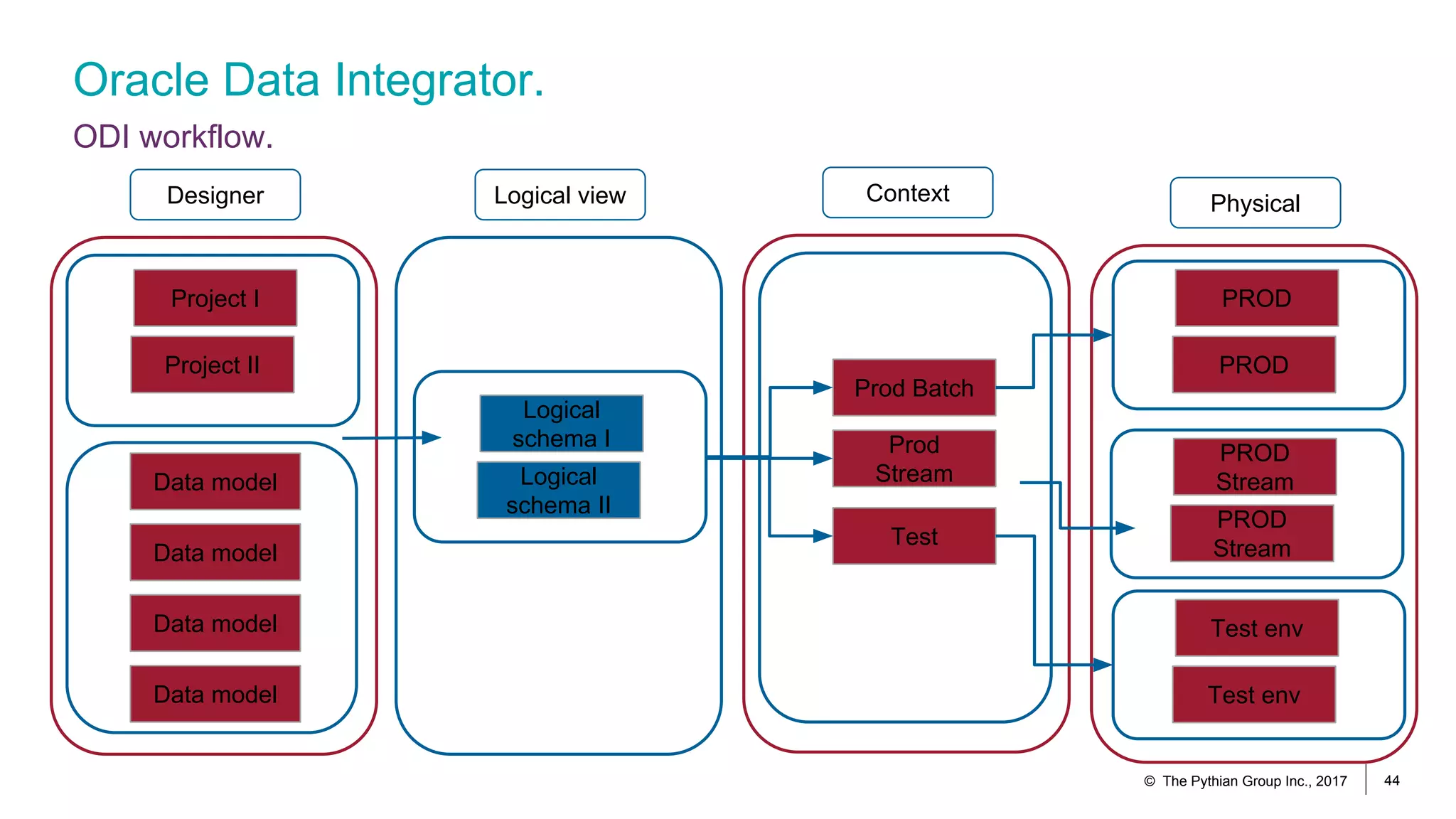

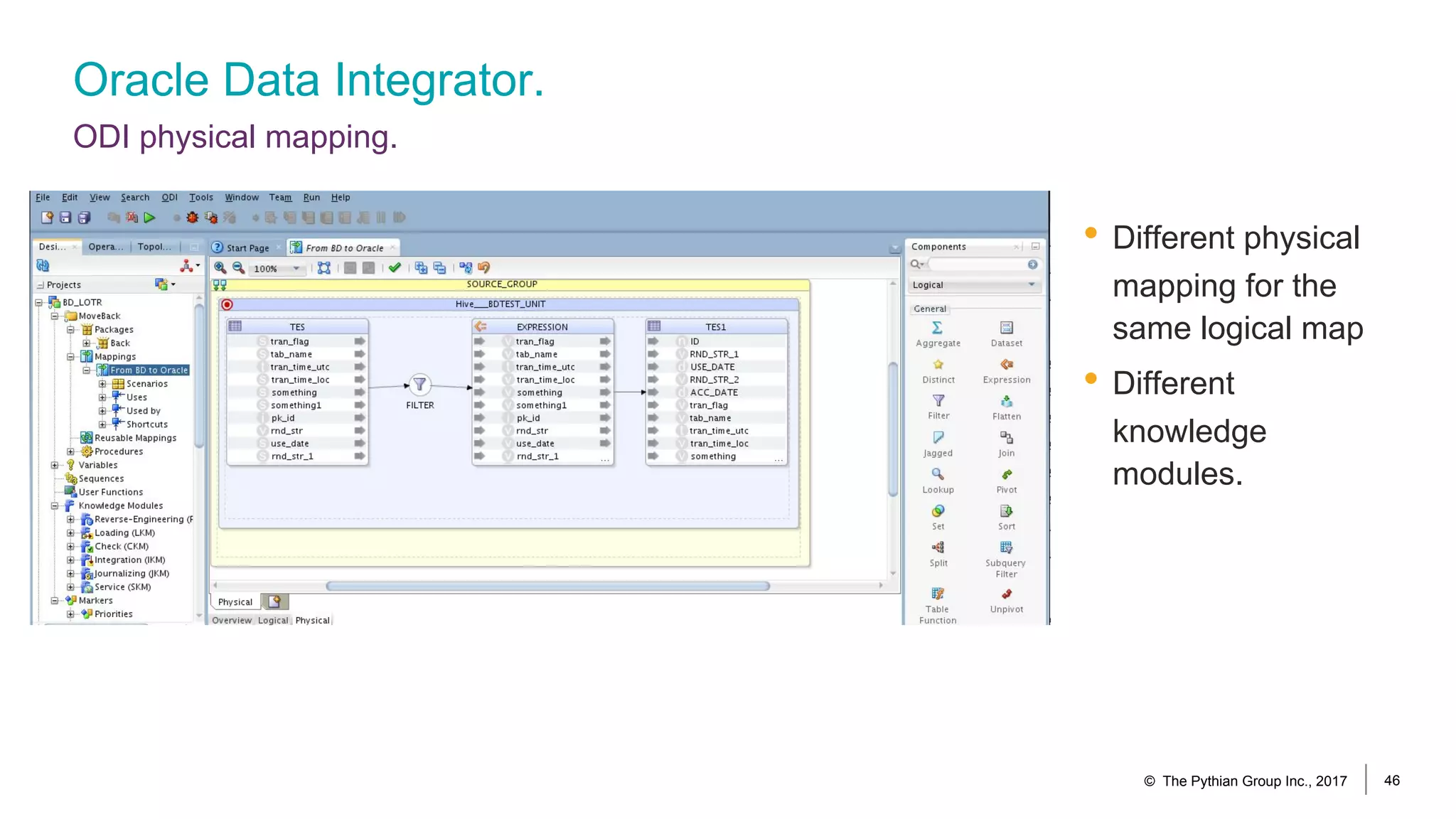

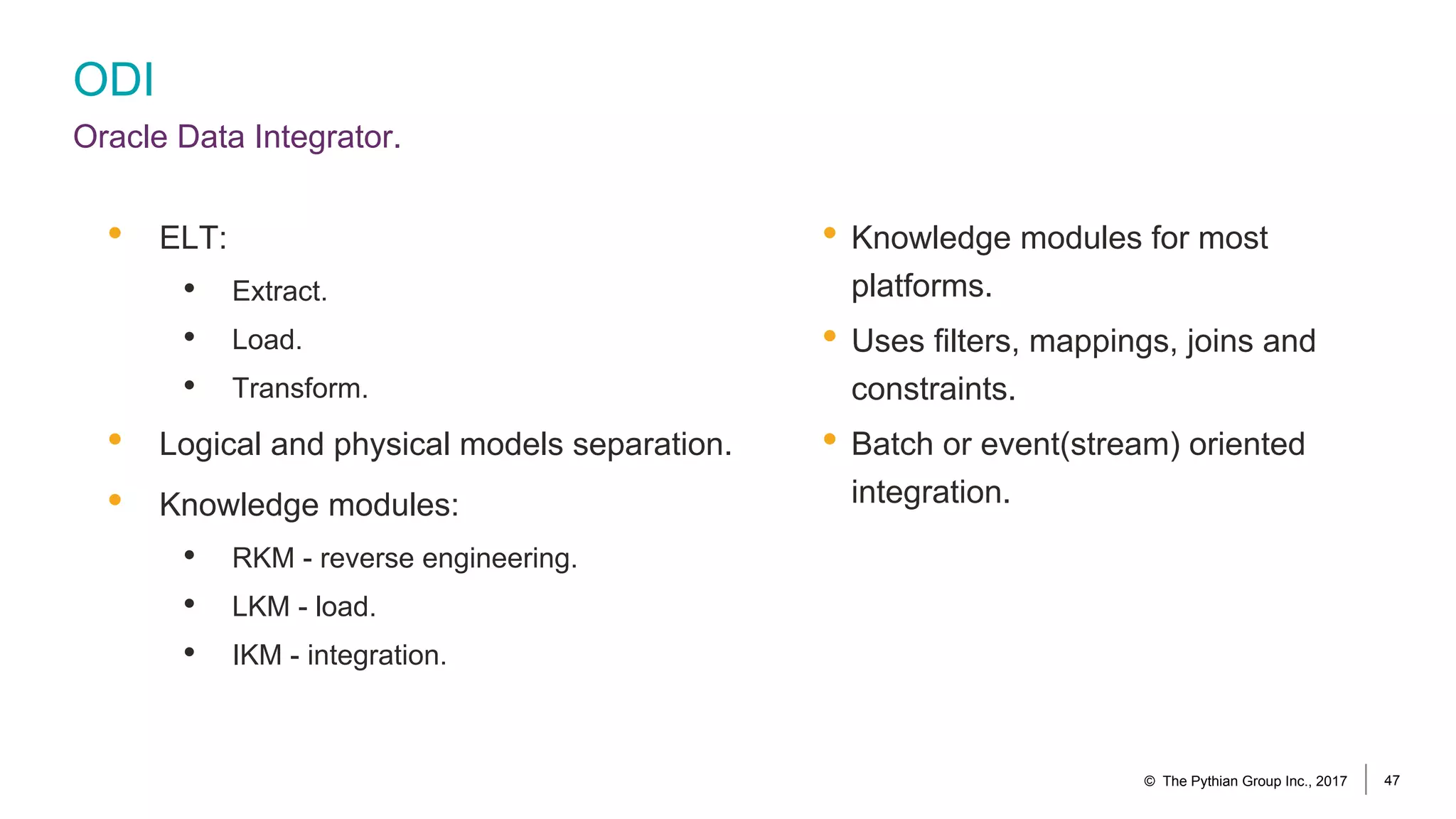

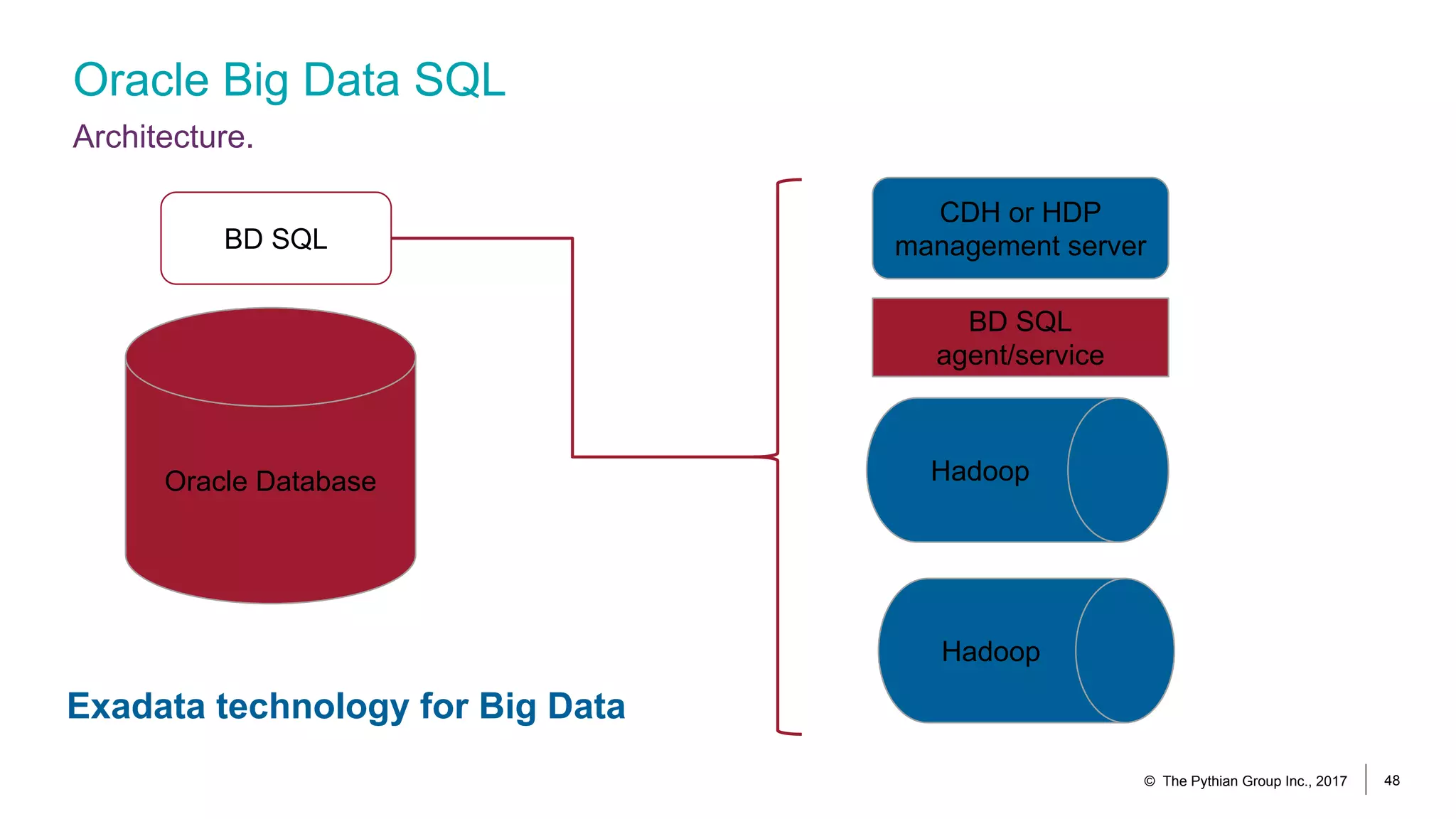

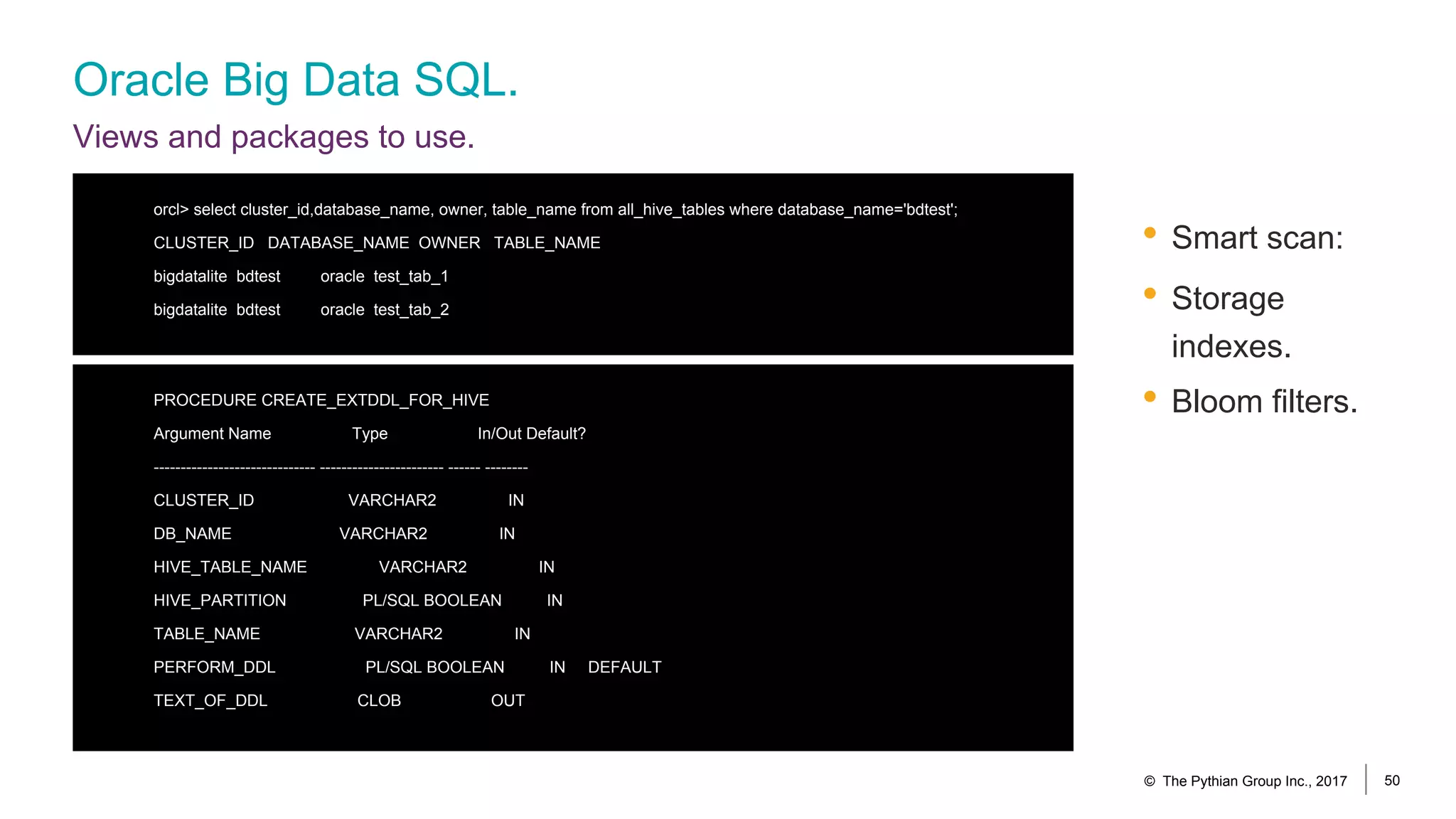

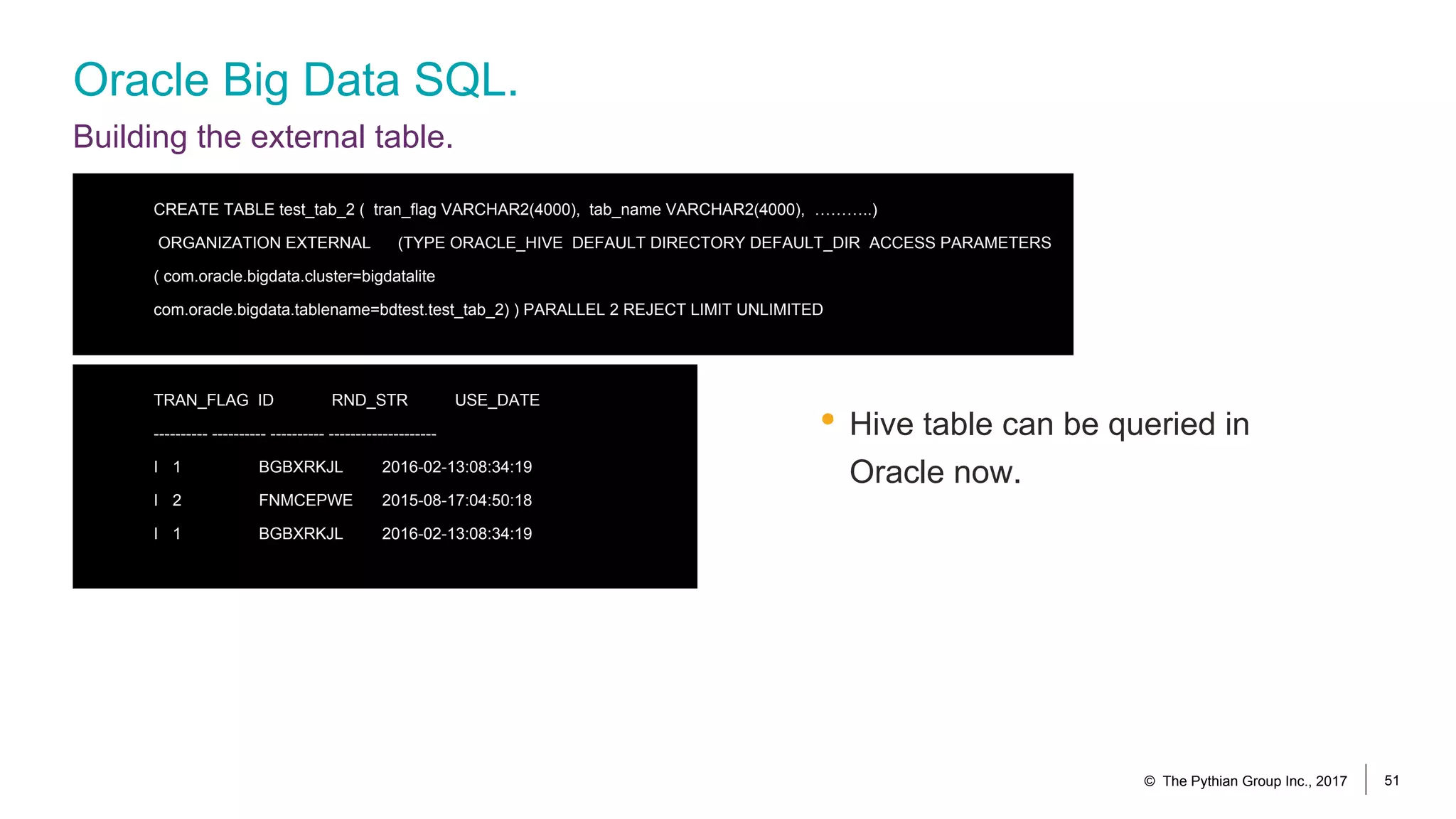

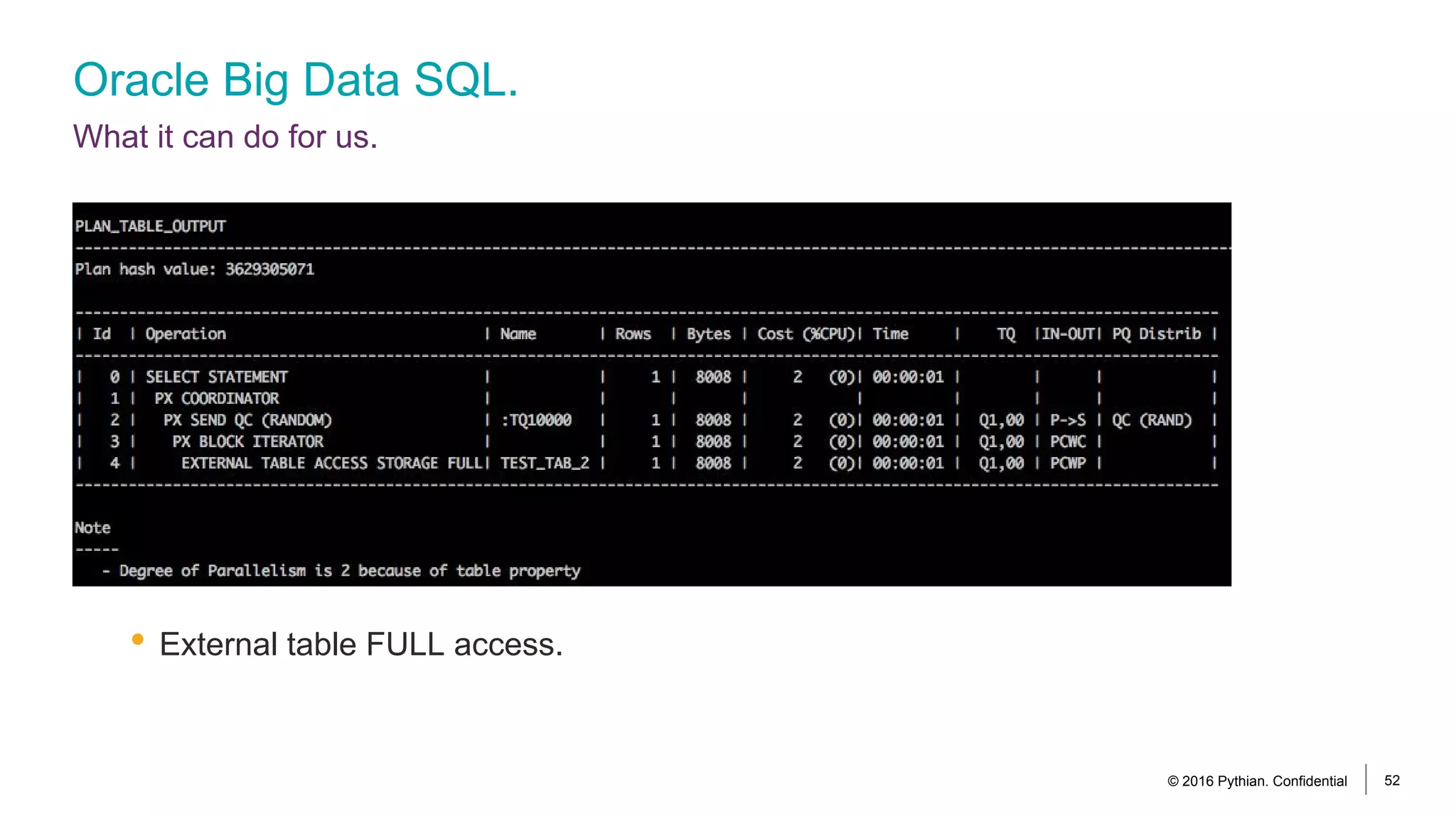

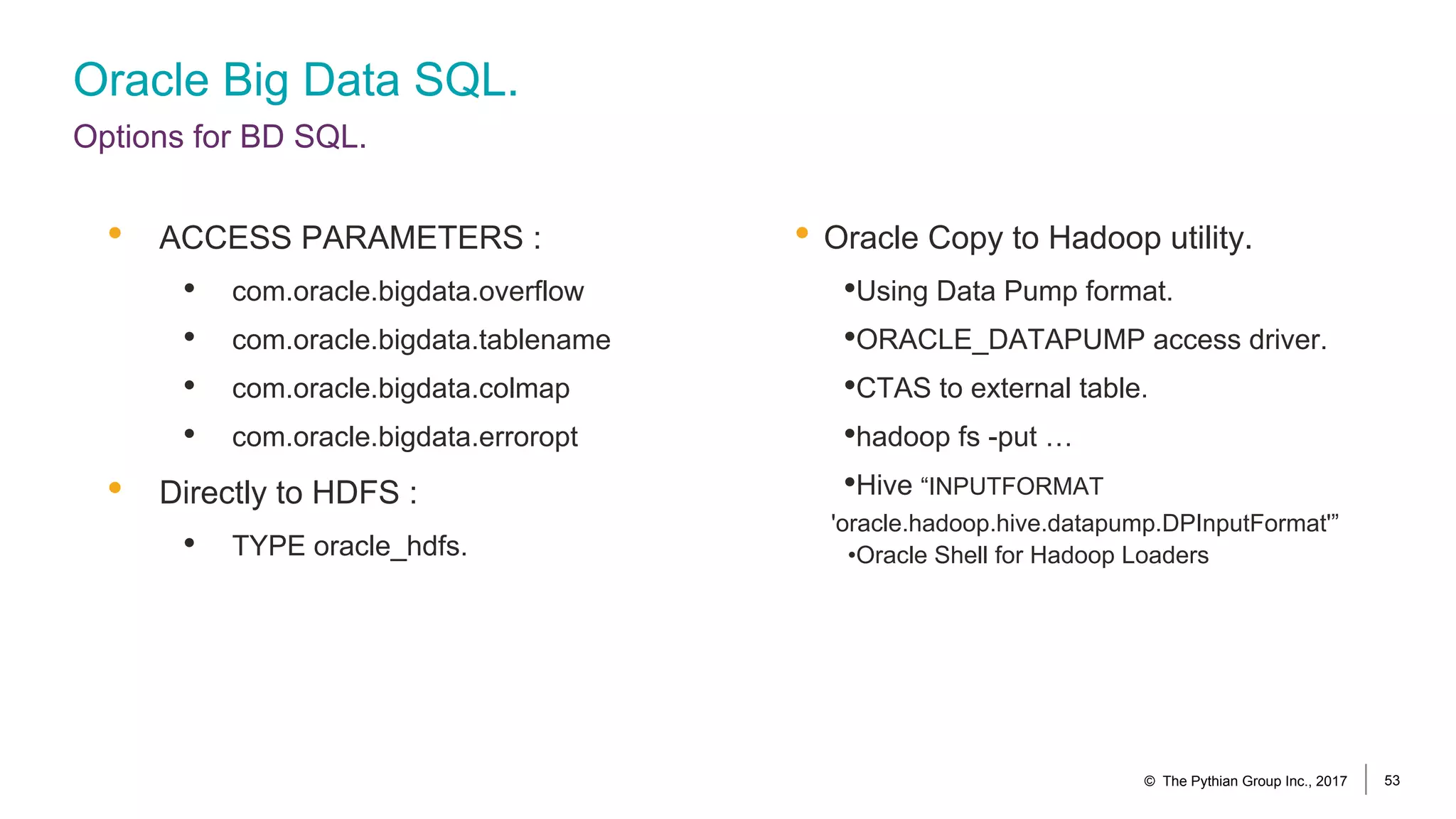

Gleb Otochkin presented ways to connect Oracle databases to Big Data platforms. Oracle GoldenGate can replicate changes from Oracle in real time to HDFS, Kafka, Flume, and HBase, while Sqoop covers batch loading. In the other direction, Oracle can access data held in Big Data sources through tools such as Oracle Data Integrator, Oracle SQL Connector for HDFS, and Oracle Loader for Hadoop.
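
As a rough illustration of the batch path, the sketch below assembles a `sqoop import` command line for copying one Oracle table into HDFS. It is not taken from the talk: the helper name `build_sqoop_import`, the host, service name, table, and paths are all hypothetical placeholders, and only commonly documented Sqoop import options are used.

```python
import shlex


def build_sqoop_import(jdbc_url, username, password_file, table,
                       target_dir, num_mappers=4, split_by=None):
    """Return an argv list for a Sqoop batch import of one table into HDFS.

    Hypothetical helper for illustration; every value passed in is an assumption.
    """
    argv = [
        "sqoop", "import",
        "--connect", jdbc_url,             # JDBC URL of the source Oracle database
        "--username", username,
        "--password-file", password_file,  # file holding the password, not the password itself
        "--table", table,
        "--target-dir", target_dir,        # HDFS directory that receives the output files
        "--num-mappers", str(num_mappers), # number of parallel map tasks
    ]
    if split_by:
        # Column Sqoop uses to divide the table among the mappers
        argv += ["--split-by", split_by]
    return argv


if __name__ == "__main__":
    # Placeholder connection details, assumed for this example only
    argv = build_sqoop_import(
        jdbc_url="jdbc:oracle:thin:@//dbhost.example.com:1521/ORCLPDB1",
        username="etl_user",
        password_file="/user/etl/.oracle_password",
        table="HR.EMPLOYEES",
        target_dir="/data/raw/hr/employees",
        split_by="EMPLOYEE_ID",
    )
    # Print a shell-quoted command that could be reviewed before running it
    print(shlex.join(argv))
```

Building the command as a list rather than a single string keeps each option and its value separate, so it can be passed to `subprocess.run` without shell quoting problems, assuming Sqoop is installed on the host.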