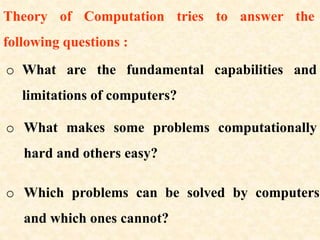

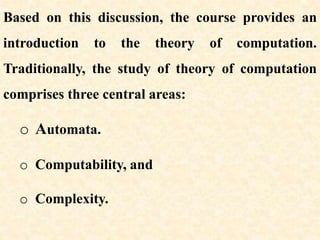

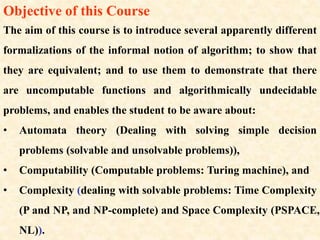

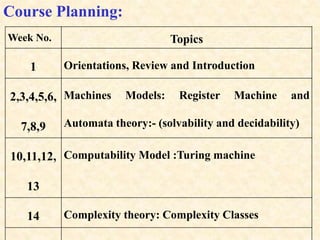

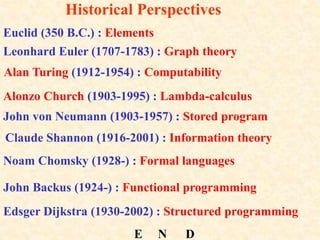

The document provides an overview of the theory of computation. It defines computation as any type of information processing that can be represented as a precise algorithm. The theory of computation seeks to define algorithms formally and to determine the capabilities and limitations of computation. It comprises three main areas: automata theory, computability theory, and complexity theory. Automata theory deals with solving simple decision problems using abstract machines. Computability theory determines which problems can be solved by computers at all. Complexity theory analyzes which problems can be solved efficiently. The document also outlines a course on the theory of computation that covers these topics by examining machines and complexity classes. The course objectives are to introduce different models of computation and to demonstrate that some problems are uncomputable.
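As a loose illustration of the kind of simple decision problem automata theory studies, here is a minimal Python sketch of a deterministic finite automaton (DFA). It answers a yes/no question about its input by reading one symbol at a time and tracking only its current state. The chosen problem ("does a binary string contain an even number of 1s?") and every name in the code (`TRANSITIONS`, `START`, `ACCEPTING`, `accepts`) are hypothetical choices made for illustration. None of them comes from the lecture.

```python
# Hypothetical DFA deciding one simple yes/no question:
# "does this binary string contain an even number of 1s?"
TRANSITIONS = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}
START = "even"          # no 1s read yet, so the count is even
ACCEPTING = {"even"}    # answer "yes" when the input ends in this state


def accepts(word: str) -> bool:
    """Run the DFA on `word`; return True if it ends in an accepting state."""
    state = START
    for symbol in word:
        if (state, symbol) not in TRANSITIONS:
            raise ValueError(f"symbol {symbol!r} is not in the alphabet {{0, 1}}")
        state = TRANSITIONS[(state, symbol)]
    return state in ACCEPTING


if __name__ == "__main__":
    for w in ["", "1", "11", "1010", "111"]:
        print(repr(w), accepts(w))
```

For example, `accepts("1010")` returns `True` (two 1s) and `accepts("111")` returns `False` (three 1s). The machine uses a fixed, finite amount of memory (two states) no matter how long the input is. That finite-memory limit is the defining feature of finite automata.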

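![Logical Implication](https://image.slidesharecdn.com/toclec1-170124230936/85/theory-of-computation-lecture-01-22-320.jpg)

Logical implication:

- $\Rightarrow$ : implies
- $\Leftrightarrow$ : if and only if (iff)
- $\equiv$ : equivalent

Example (for integers $x$ and $y$): $x > y \Rightarrow x \ge y + 1$

$(A \Leftrightarrow B) \equiv [(A \Rightarrow B) \wedge (B \Rightarrow A)]$

In other words, $A \Leftrightarrow B$ holds exactly when both $A \Rightarrow B$ and $B \Rightarrow A$ hold.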
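The equivalence $(A \Leftrightarrow B) \equiv [(A \Rightarrow B) \wedge (B \Rightarrow A)]$ can be checked mechanically by enumerating all four truth assignments of $A$ and $B$. Below is a minimal Python sketch of that truth-table check. The helper names `implies`, `iff`, and `equivalence_holds` are hypothetical.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material implication: A => B is false only when A is true and B is false."""
    return (not a) or b


def iff(a: bool, b: bool) -> bool:
    """Biconditional: A <=> B is true exactly when A and B have the same truth value."""
    return a == b


def equivalence_holds() -> bool:
    """Check (A <=> B) == ((A => B) and (B => A)) for all four assignments."""
    return all(
        iff(a, b) == (implies(a, b) and implies(b, a))
        for a, b in product([False, True], repeat=2)
    )


if __name__ == "__main__":
    # Print the truth table row by row.
    print(f"{'A':<6}{'B':<6}{'A=>B':<7}{'B=>A':<7}A<=>B")
    for a, b in product([False, True], repeat=2):
        print(f"{a!s:<6}{b!s:<6}{implies(a, b)!s:<7}{implies(b, a)!s:<7}{iff(a, b)}")
    print("Equivalence holds:", equivalence_holds())
```

`equivalence_holds()` returns `True`. With only two variables, exhaustive enumeration is a complete proof.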

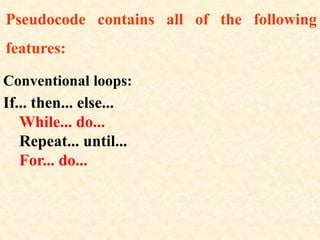

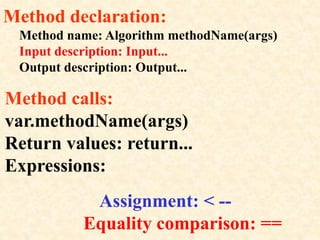

![Counting operations in pseudocode](https://image.slidesharecdn.com/toclec1-170124230936/85/theory-of-computation-lecture-01-55-320.jpg)

In a pseudocode description of an algorithm, each line can be viewed as a certain number of operations. These can be counted and added up to find a total count for the algorithm, and the way that total grows with the input size gives the order of the algorithm. Some examples:

- `varNumber <-- X[1]` (2 operations: 1 array-value retrieval, 1 assignment).
- `For a <-- 1 to n do ...` (n operations; any operations inside this loop are also multiplied by n, because they are carried out n times).
- `return varNumber` (1 operation).
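Here is a minimal Python sketch of that counting convention. It is applied to a hypothetical find-the-maximum algorithm built around the three example lines. Several details are assumptions made for illustration and are not part of the lecture:

- the loop body's cost (one retrieval plus one comparison per iteration, plus one retrieval and one assignment whenever a new maximum is found);
- the use of a strict `>` comparison;
- the function name `find_max_counting_ops`.

```python
from __future__ import annotations


def find_max_counting_ops(X: list[int]) -> tuple[int, int]:
    """Find the maximum of X while tallying operations, slide-style.

    Hypothetical cost model: array retrieval, assignment, comparison and
    return each cost 1; the loop header costs 1 per iteration (n in total).
    Returns (maximum, operation count).
    """
    if not X:
        raise ValueError("X must be non-empty")
    ops = 0

    var_number = X[0]          # varNumber <-- X[1] (Python lists are 0-indexed)
    ops += 2                   # 1 array retrieval + 1 assignment

    for a in range(len(X)):    # For a <-- 1 to n do
        ops += 1               # loop header: 1 per iteration, n in total
        ops += 2               # retrieve X[a] + compare with var_number
        if X[a] > var_number:
            var_number = X[a]
            ops += 2           # retrieve X[a] + assignment

    ops += 1                   # return varNumber
    return var_number, ops
```

For `[3, 1, 4, 1, 5]` it returns `(5, 22)`. Under this model the count ranges from 3n + 3, when X[1] is already the maximum, to 5n + 1, when the input is strictly increasing. Either way the total grows linearly in n, which is what the order of the algorithm captures.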