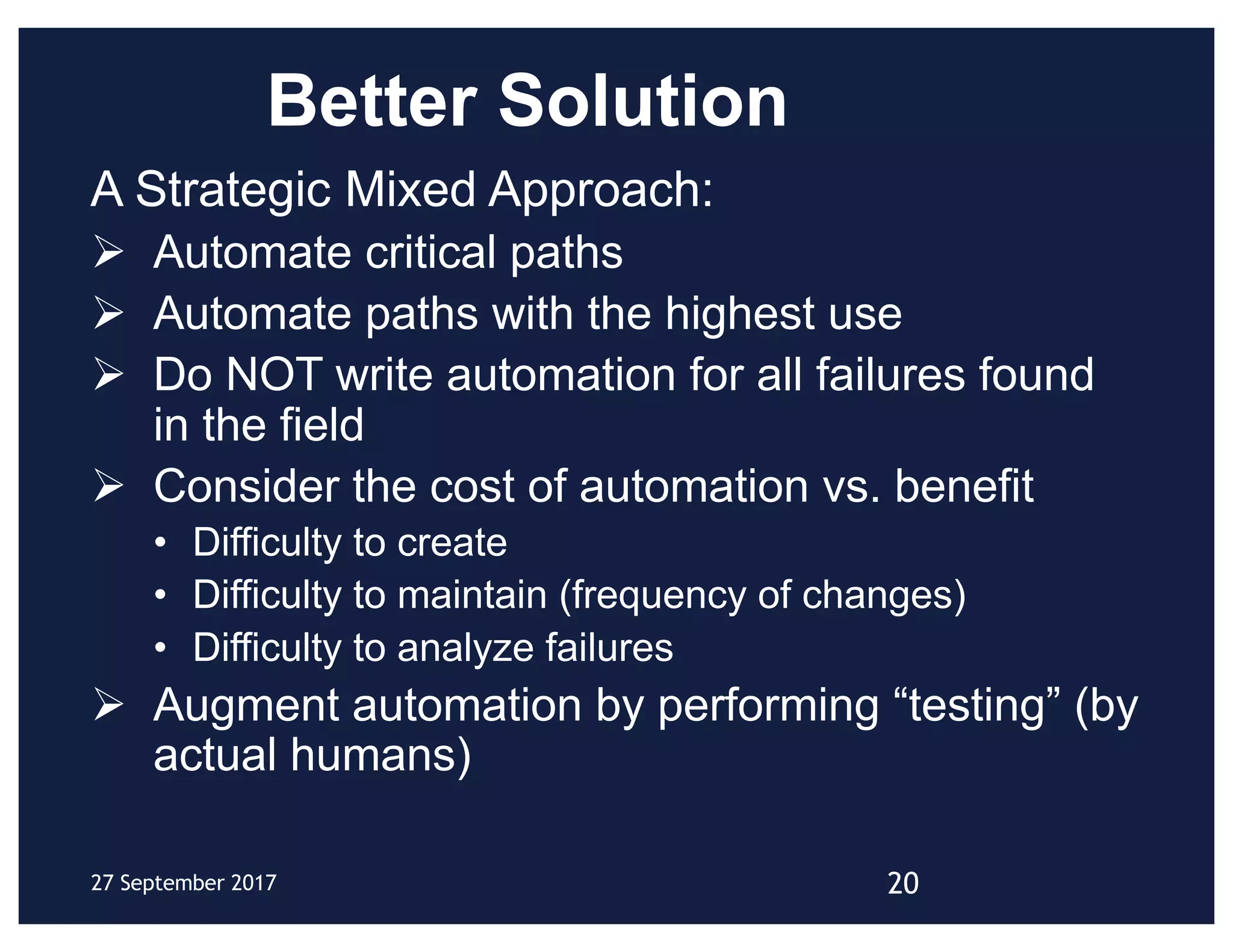

Paul Holland, a senior director at Medidata Solutions, discusses the limitations and challenges of automation in software testing, emphasizing that excessive automation can lead to inefficiencies. He presents findings showing that human testers often find more bugs than automated scripts do, which suggests a need for a balanced approach that combines automation with human testing. Key recommendations include focusing automation on critical paths and weighing the costs and benefits of each automation effort.