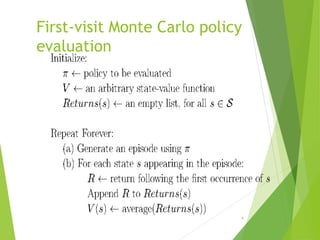

The document discusses Monte Carlo methods as techniques for learning optimal policies and value functions directly from episodic tasks through experience, without needing complete models. It highlights the application of these methods in scenarios like blackjack for policy improvement and estimation of action values, emphasizing advantages such as direct learning from the environment and reduced model reliance. However, it also cites the importance of maintaining exploration during the learning process.