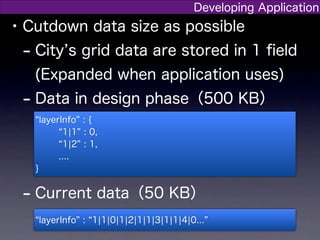

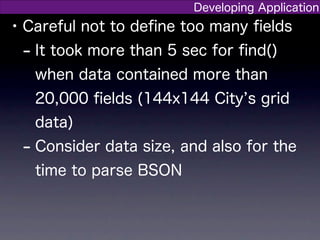

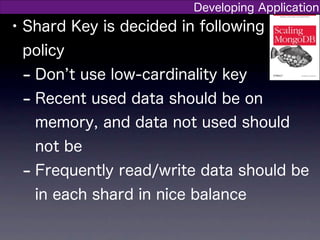

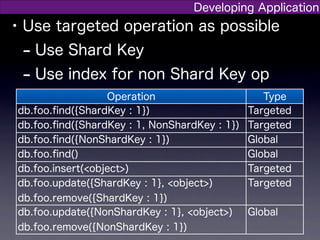

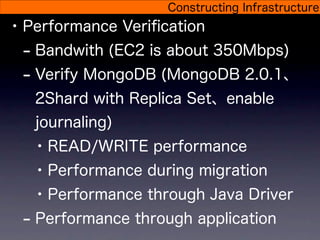

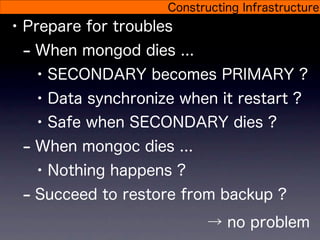

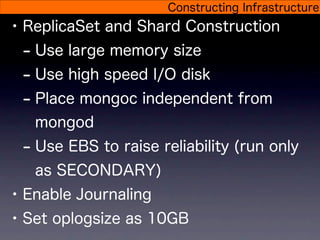

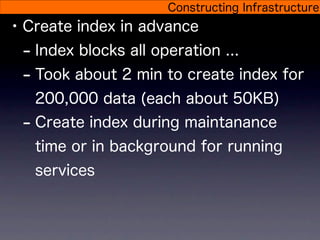

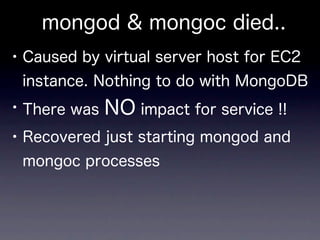

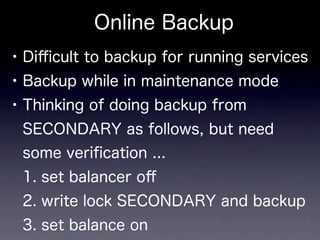

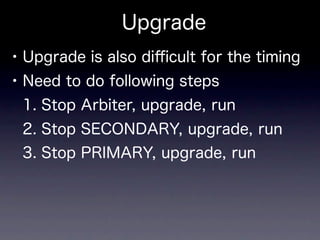

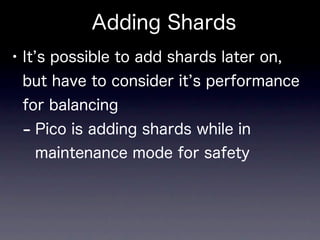

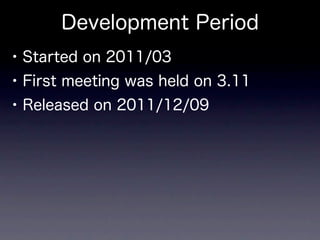

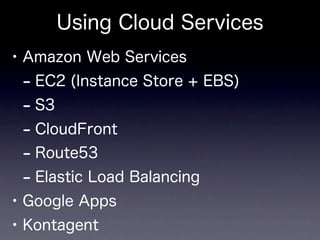

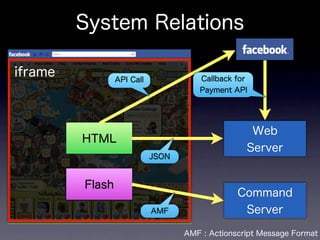

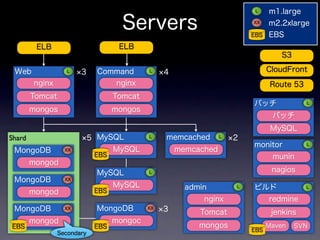

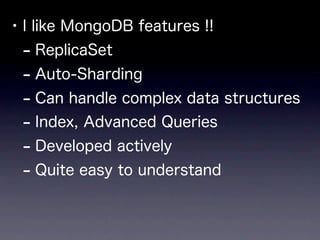

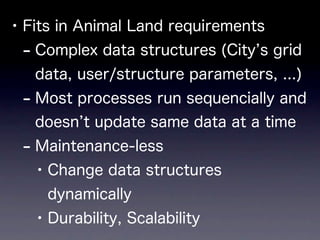

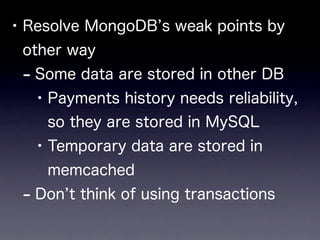

The document describes how MongoDB is used in 'Animal Land', a social game developed by CyberAgent, Inc. It outlines the system architecture; the benefits of MongoDB, such as scalability and flexible data structures; and development considerations including performance, infrastructure, and troubleshooting. Open challenges include online backups and shard management during upgrades.

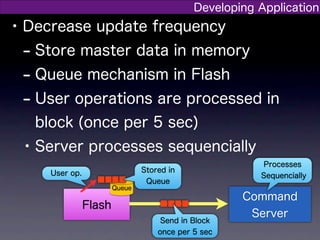

![Developing Application

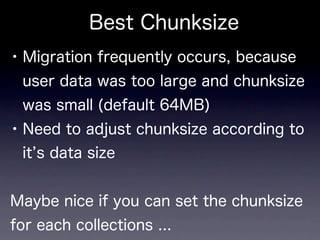

• Define data without using transaction

- User data are defined in 1 document

and updated only once in block

User Data

{ facebookId : xxx,

Image

status : { lv : 10, coin : 9999, ... },

layerInfo : 1¦1¦0¦1¦2¦1¦1¦3¦1¦1¦4¦0... ,

structure : {

1¦1 : { id : xxxx, depth : 3, width : 3, aspect : 1, ... }, ...

},

inventory : [ { id : xxx, size : xxx, ... }, ... ],

neighbor : [ { id : xxx, newFlg : false, ... }, ... ],

animal : [ { id : xxx, color : 0, pos : 20¦20 , ... } ],

...

}](https://image.slidesharecdn.com/mongotokyo2012en-120119042747-phpapp02/85/The-Case-for-using-MongoDB-in-Social-Game-Animal-Land-20-320.jpg)
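The "one document per user, updated once" rule works because MongoDB applies a write to a single document atomically, so no multi-document transaction is needed. Below is a minimal sketch in Python. It reads "updated only once in block" as "one write per request", which is an assumption. The helper `UserDocumentBuffer` and its methods are hypothetical names, not Animal Land's code. The sketch buffers changes made during a request and combines them into one update document using the standard `$set`, `$inc`, and `$push`/`$each` operators.

```python
from copy import deepcopy


class UserDocumentBuffer:
    """Hypothetical helper: buffers every change made to one user document
    during a request, then emits a single MongoDB-style update document.

    Sending all changes in one update keeps the write atomic, because MongoDB
    applies an update to a single document atomically.
    Limitation: overlapping paths such as "status" and "status.lv" are not
    detected here (MongoDB would reject them as conflicting).
    """

    def __init__(self, doc):
        self.doc = deepcopy(doc)  # working copy; the caller's dict is untouched
        self._sets = {}
        self._incs = {}
        self._pushes = {}

    def _resolve(self, path):
        # Walk a dot-notation path ("status.coin") down to its parent dict.
        parts = path.split(".")
        node = self.doc
        for part in parts[:-1]:
            node = node.setdefault(part, {})
        return node, parts[-1]

    def set(self, path, value):
        parent, key = self._resolve(path)
        parent[key] = value
        # A $set supersedes any pending $inc/$push on the same path.
        self._incs.pop(path, None)
        self._pushes.pop(path, None)
        self._sets[path] = value

    def inc(self, path, amount):
        parent, key = self._resolve(path)
        parent[key] = parent.get(key, 0) + amount
        if path in self._sets:
            self._sets[path] = parent[key]  # fold into the pending $set
        else:
            self._incs[path] = self._incs.get(path, 0) + amount

    def push(self, path, item):
        parent, key = self._resolve(path)
        parent.setdefault(key, []).append(item)
        if path in self._sets:
            self._sets[path] = parent[key]  # fold into the pending $set
        else:
            self._pushes.setdefault(path, []).append(item)

    def update_spec(self):
        """Return one update document combining all buffered changes."""
        spec = {}
        if self._sets:
            spec["$set"] = deepcopy(self._sets)
        if self._incs:
            spec["$inc"] = dict(self._incs)
        if self._pushes:
            spec["$push"] = {
                path: {"$each": deepcopy(items)}
                for path, items in self._pushes.items()
            }
        return spec


if __name__ == "__main__":
    user = {"facebookId": "xxx", "status": {"lv": 10, "coin": 9999}, "inventory": []}
    buf = UserDocumentBuffer(user)
    buf.inc("status.coin", -100)   # e.g. the player buys an item
    buf.set("status.lv", 11)       # ...and levels up in the same request
    buf.push("inventory", {"id": 1})
    print(buf.update_spec())       # one update for the whole request
```

With PyMongo, the resulting spec could be passed as the `update` argument of `Collection.update_one(filter, update)`, for example with a filter on `facebookId`. Folding repeated changes to the same field into one operator keeps the update free of conflicting operators on a single path.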