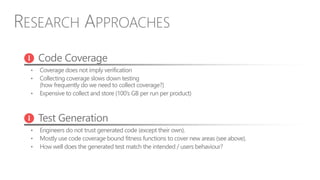

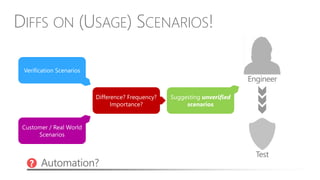

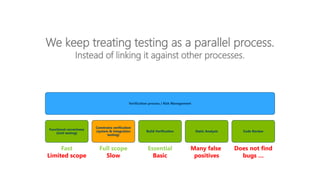

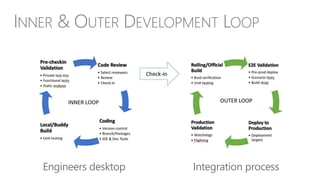

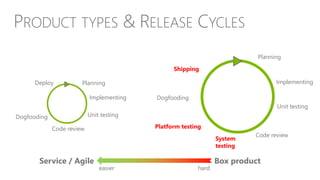

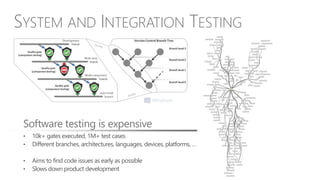

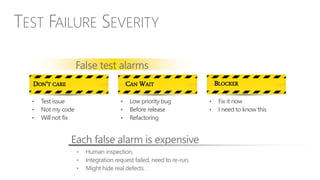

This presentation examines how software testing has evolved in modern development processes and why the growing speed and complexity of software releases call for strategic risk management. It highlights the cost of false test alarms and the inefficiency of traditional testing methods, and it advocates cost-effective testing strategies that preserve quality without sacrificing development speed. The author argues for testing approaches that integrate customer feedback and adapt to real-world usage scenarios.

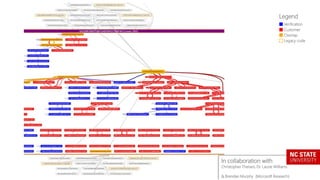

![Cost model](https://image.slidesharecdn.com/kimherzigwebinarserieslatest-151210103526/85/Testing-As-A-Bottleneck-How-Testing-Slows-Down-Modern-Development-Processes-And-How-To-Compensate-21-320.jpg)

Cost model [1]: suspend a test if $\text{Cost}_{\text{Execution}} > \text{Cost}_{\text{Skip}}$; otherwise execute it.

The cost of executing a test is the machine cost of the run plus the cost of a potential false alarm:

$$\text{Cost}_{\text{Execution}} = \text{Cost}_{\text{Machine/Time}} \cdot \text{Time}_{\text{Execution}} + P_{FP} \cdot \text{Cost}_{\text{Developer/Time}} \cdot \text{Time}_{\text{Triage}}$$

The cost of skipping a test is the potential cost of letting a bug escape to the next higher branch level:

$$\text{Cost}_{\text{Skip}} = P_{TP} \cdot \text{Cost}_{\text{Developer/Time}} \cdot \text{Time}_{\text{Freeze branch}} \cdot \#\text{Developers}_{\text{Branch}}$$

[1] K. Herzig, M. Greiler, J. Czerwonka, and B. Murphy, “The Art of Testing Less without Sacrificing Quality,” in Proceedings of the 2015 International Conference on Software Engineering, 2015.
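The decision rule of the cost model above can be sketched in Python. This is a minimal illustration, not the authors' implementation: the names `CostInputs`, `cost_execution`, `cost_skip`, and `should_suspend`, the choice of hours as the time unit, and every numeric value are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostInputs:
    """Inputs of the cost model (hypothetical field names, hourly units assumed)."""
    machine_cost_per_hour: float    # Cost_Machine/Time
    execution_hours: float          # Time_Execution
    p_false_positive: float         # P_FP: probability the test raises a false alarm
    p_true_positive: float          # P_TP: probability the test finds a real defect
    developer_cost_per_hour: float  # Cost_Developer/Time
    triage_hours: float             # Time_Triage
    freeze_hours: float             # Time_Freeze branch
    developers_on_branch: int       # #Developers_Branch


def cost_execution(c: CostInputs) -> float:
    """Machine cost of the run plus the expected cost of triaging a false alarm."""
    machine = c.machine_cost_per_hour * c.execution_hours
    false_alarm = c.p_false_positive * c.developer_cost_per_hour * c.triage_hours
    return machine + false_alarm


def cost_skip(c: CostInputs) -> float:
    """Expected cost of a bug escaping to the next higher branch level."""
    return (c.p_true_positive * c.developer_cost_per_hour
            * c.freeze_hours * c.developers_on_branch)


def should_suspend(c: CostInputs) -> bool:
    """Suspend the test when executing it costs more than skipping it."""
    return cost_execution(c) > cost_skip(c)


# Illustrative values only: a test that rarely finds real defects.
noisy_test = CostInputs(
    machine_cost_per_hour=0.5, execution_hours=2.0,
    p_false_positive=0.1, p_true_positive=0.001,
    developer_cost_per_hour=80.0, triage_hours=1.0,
    freeze_hours=4.0, developers_on_branch=20,
)
print(should_suspend(noisy_test))  # True: execution (about 9) exceeds skip (about 6.4)
```

With these made-up numbers, raising `p_true_positive` to 0.01 pushes the skip cost to about 64, and the same test would be executed rather than suspended.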

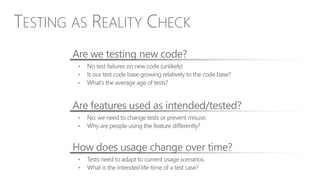

![Windows results](https://image.slidesharecdn.com/kimherzigwebinarserieslatest-151210103526/85/Testing-As-A-Bottleneck-How-Testing-Slows-Down-Modern-Development-Processes-And-How-To-Compensate-22-320.jpg)

Windows results [1]: simulated on the Windows 8.1 development period (BVT only).