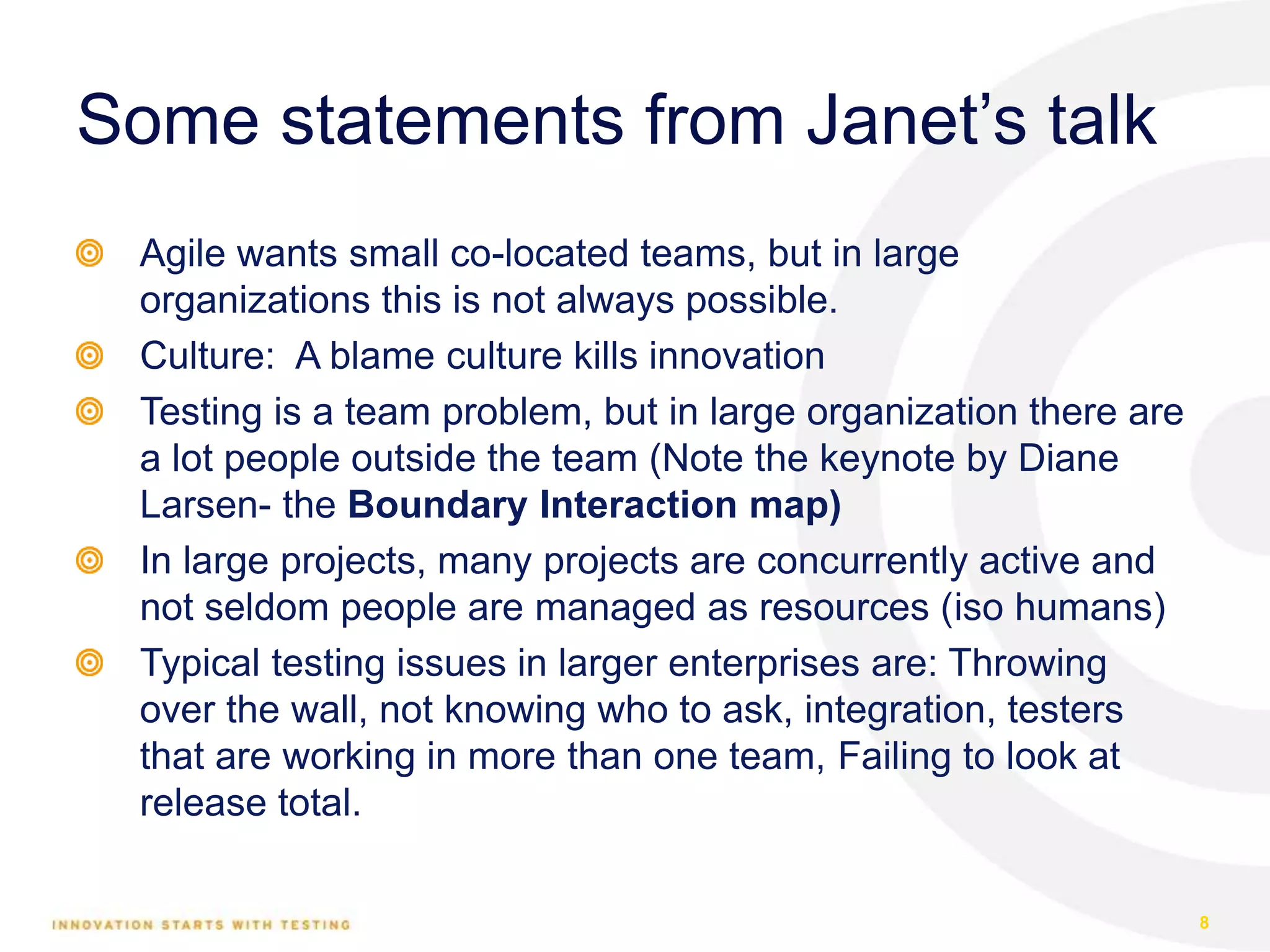

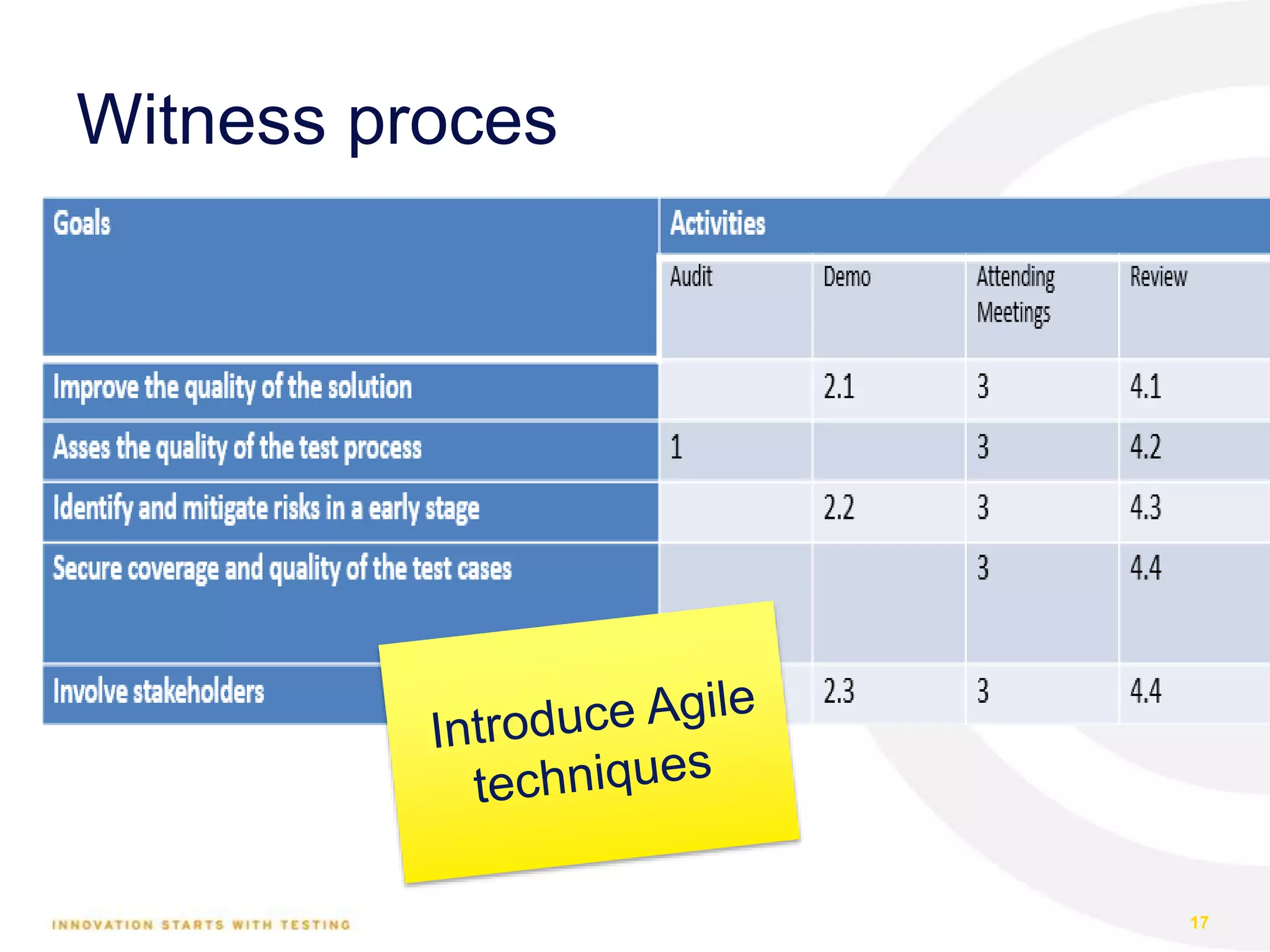

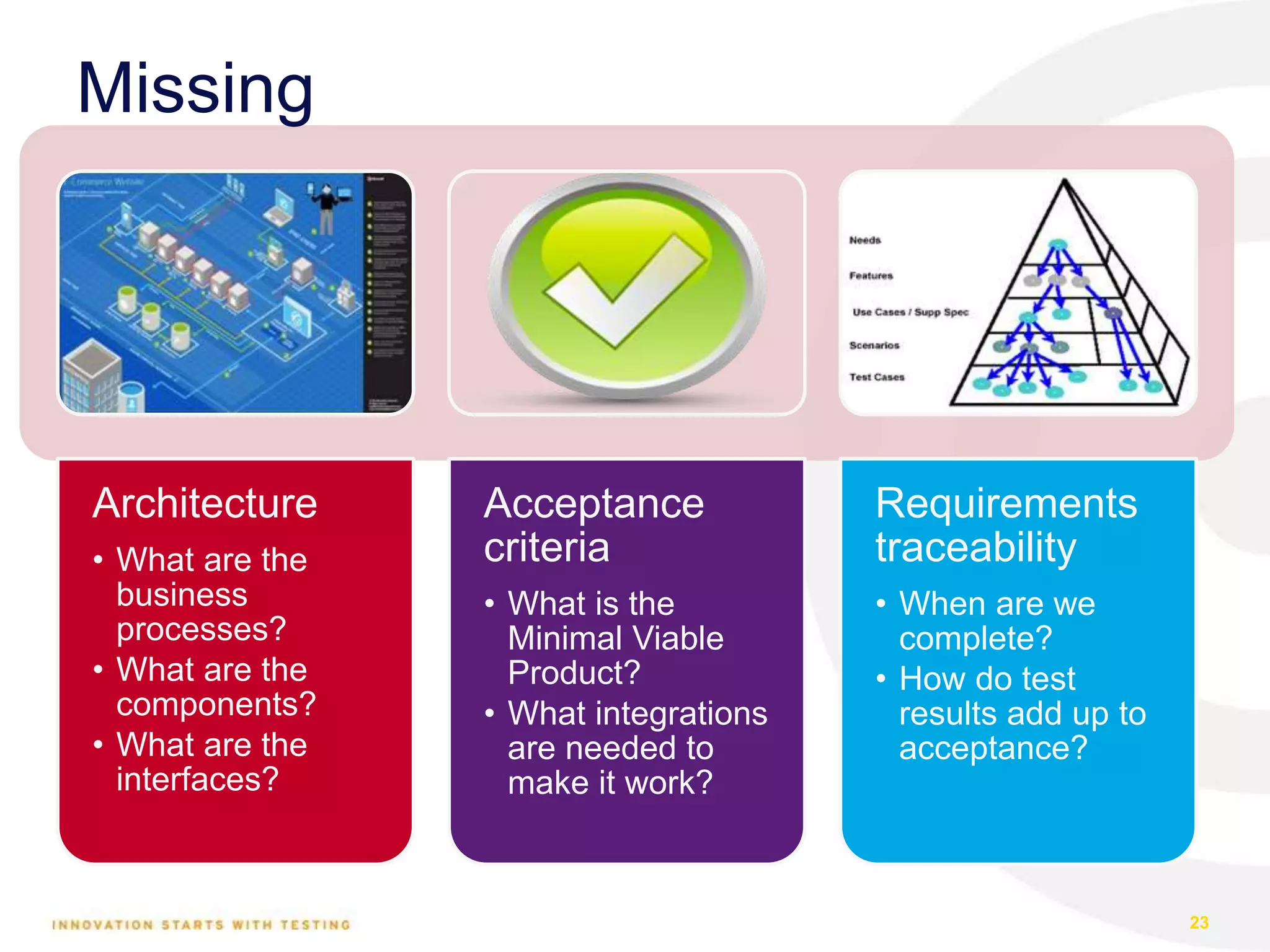

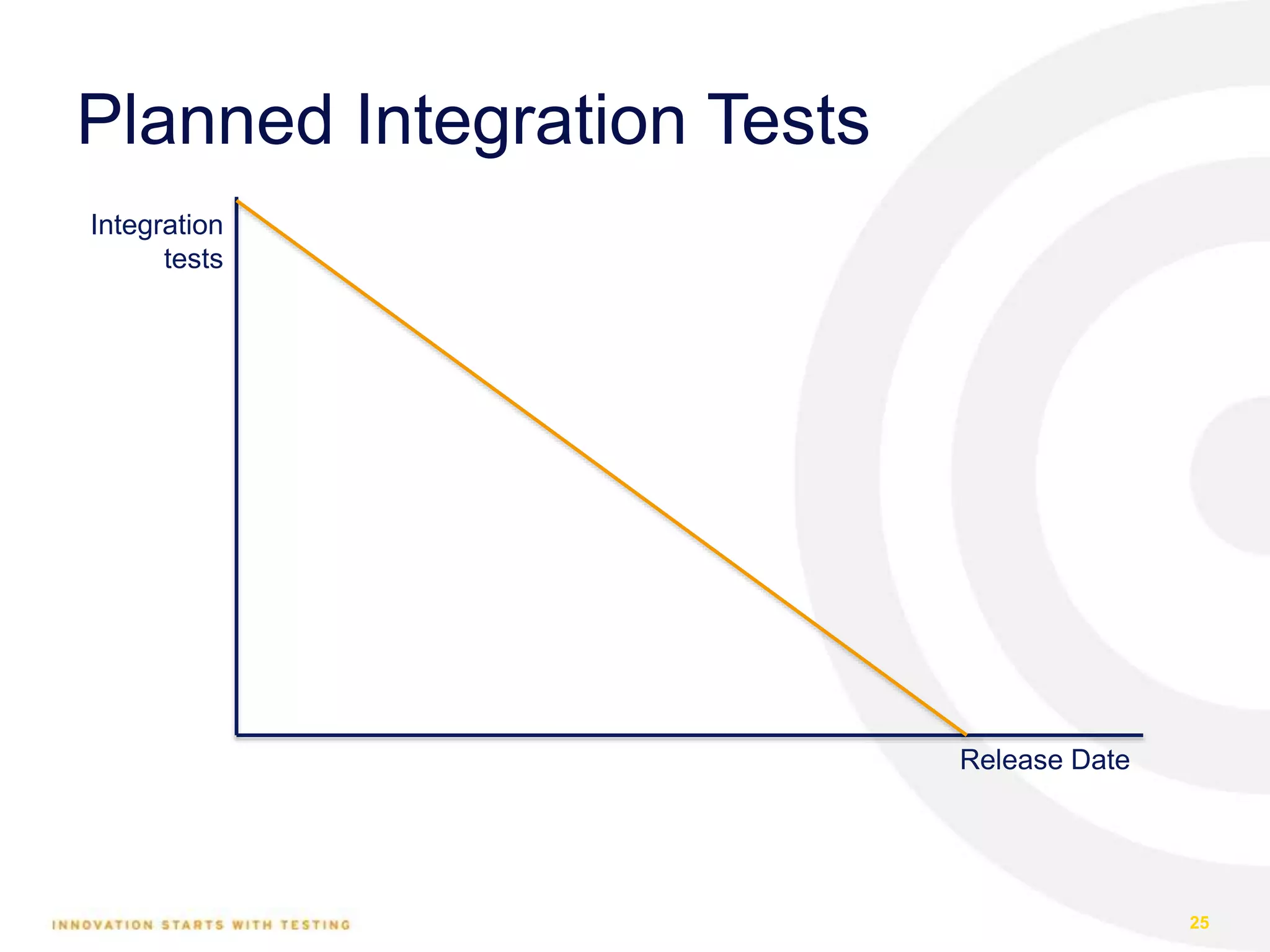

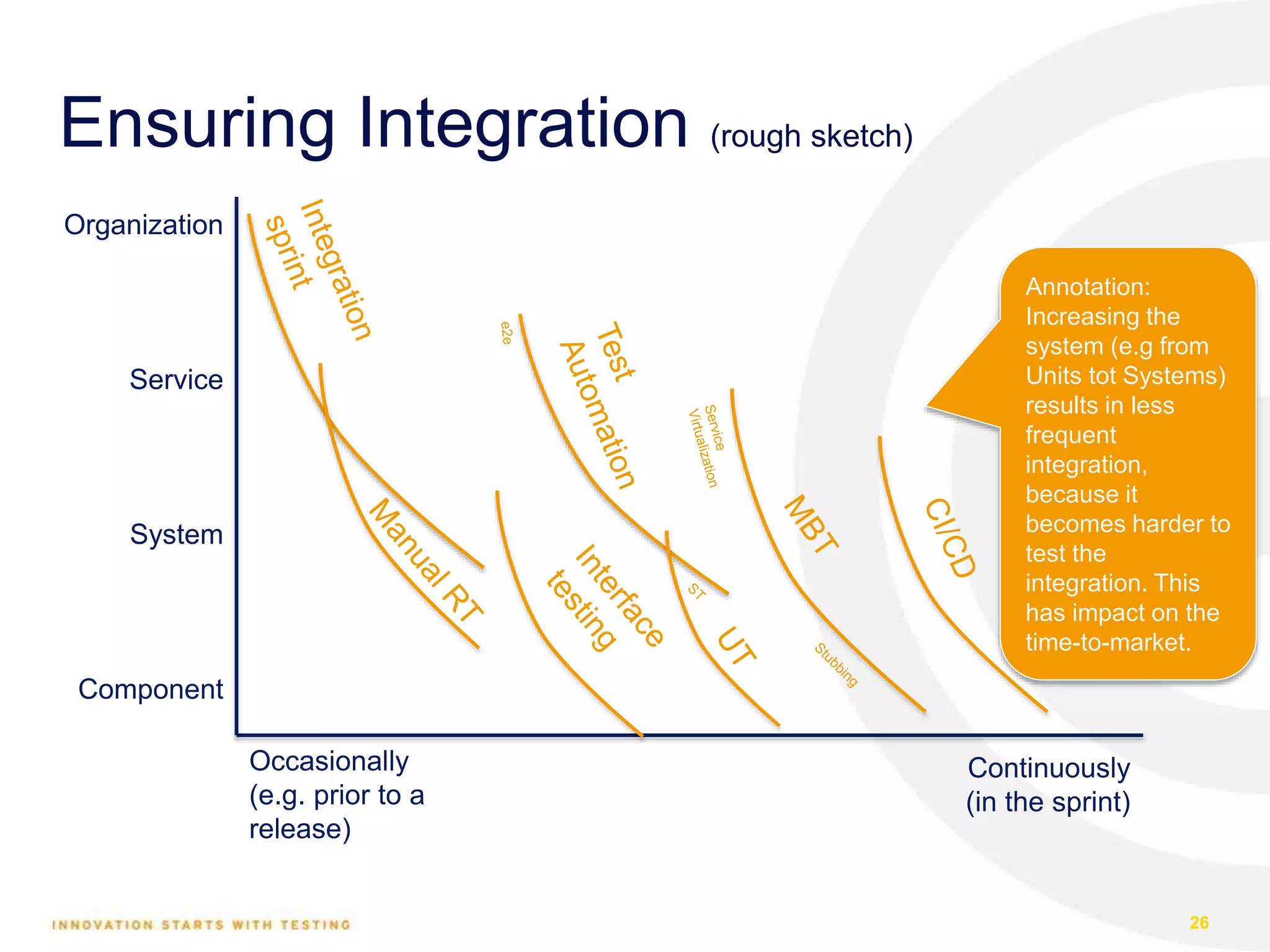

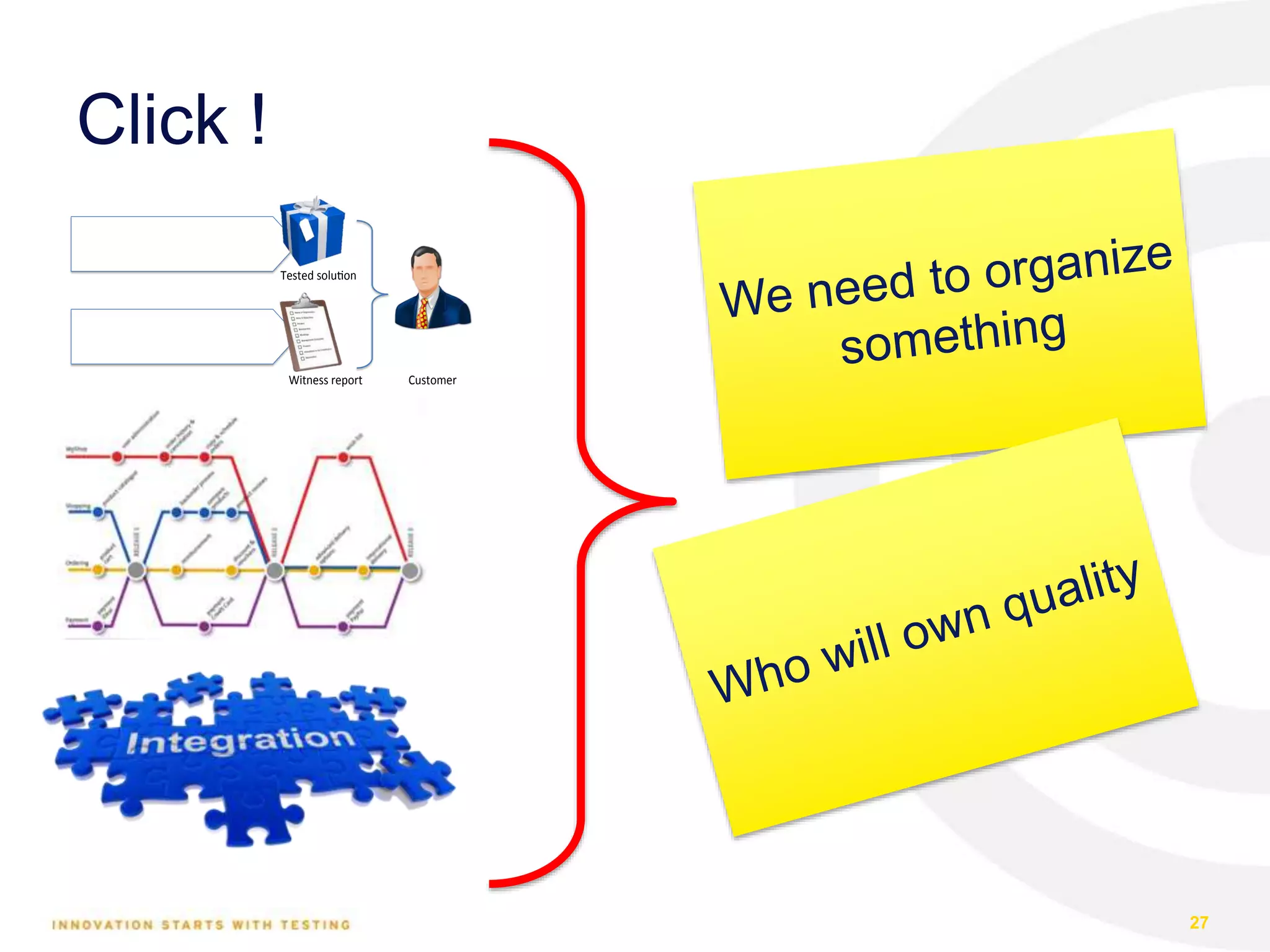

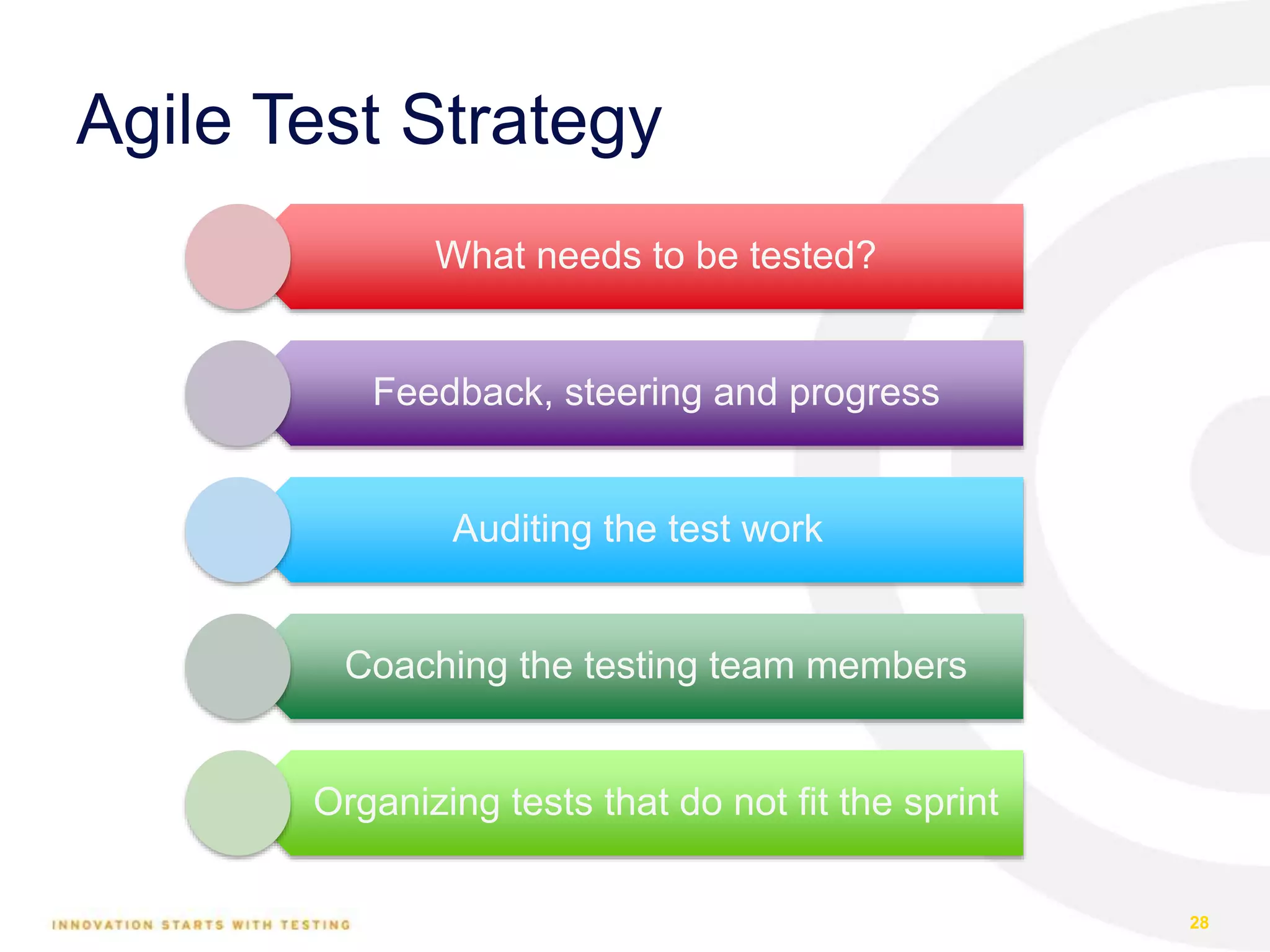

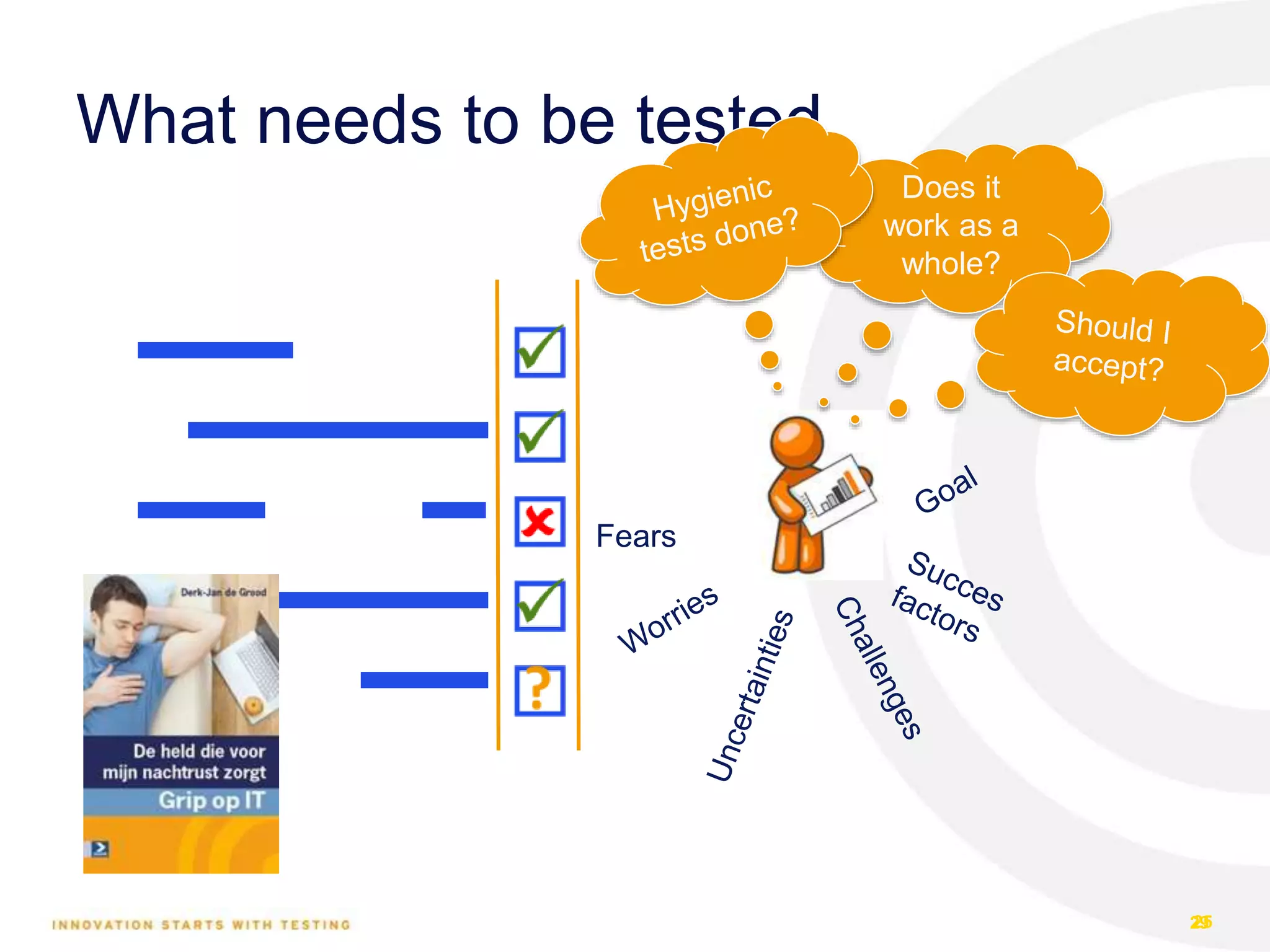

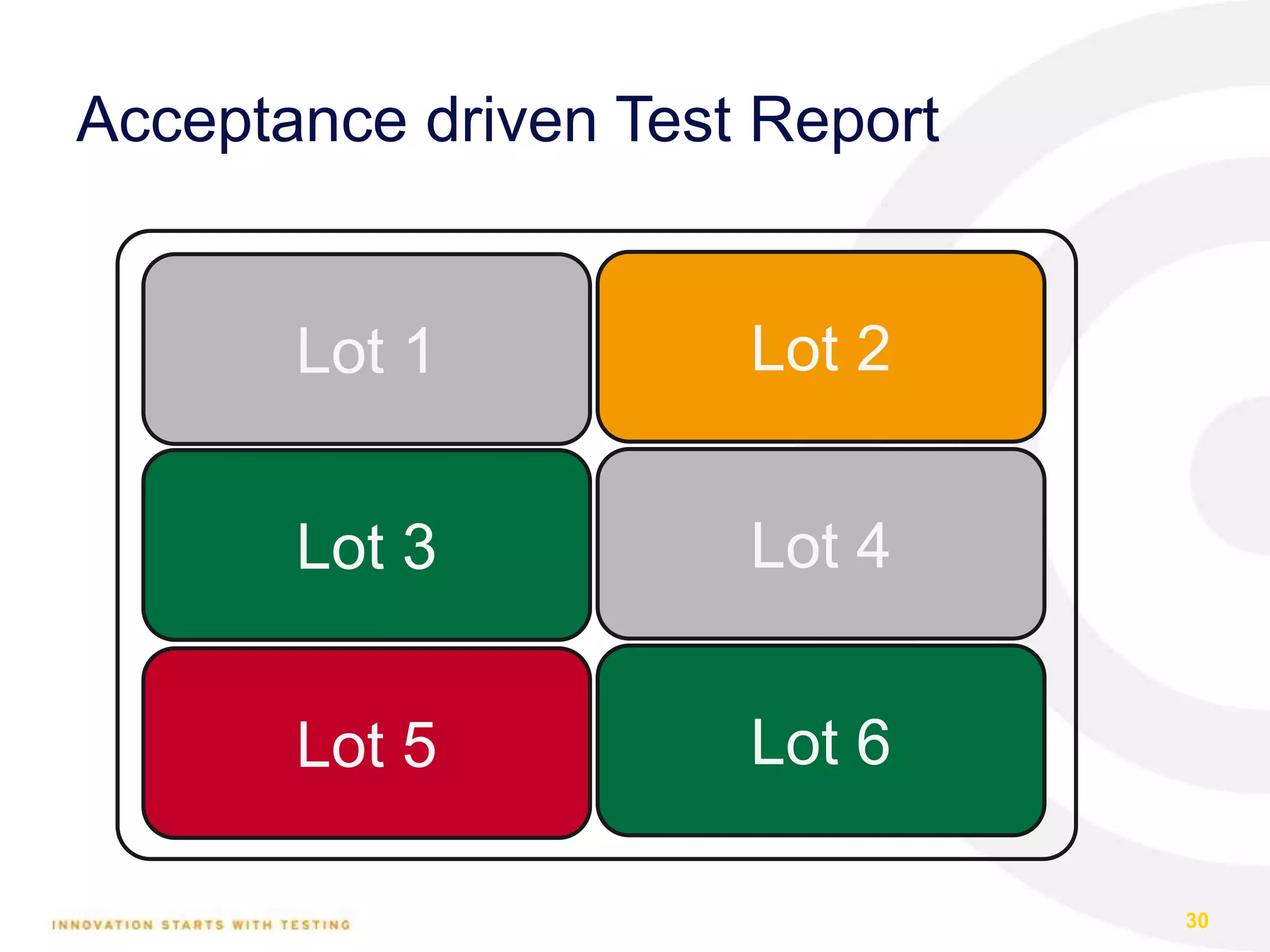

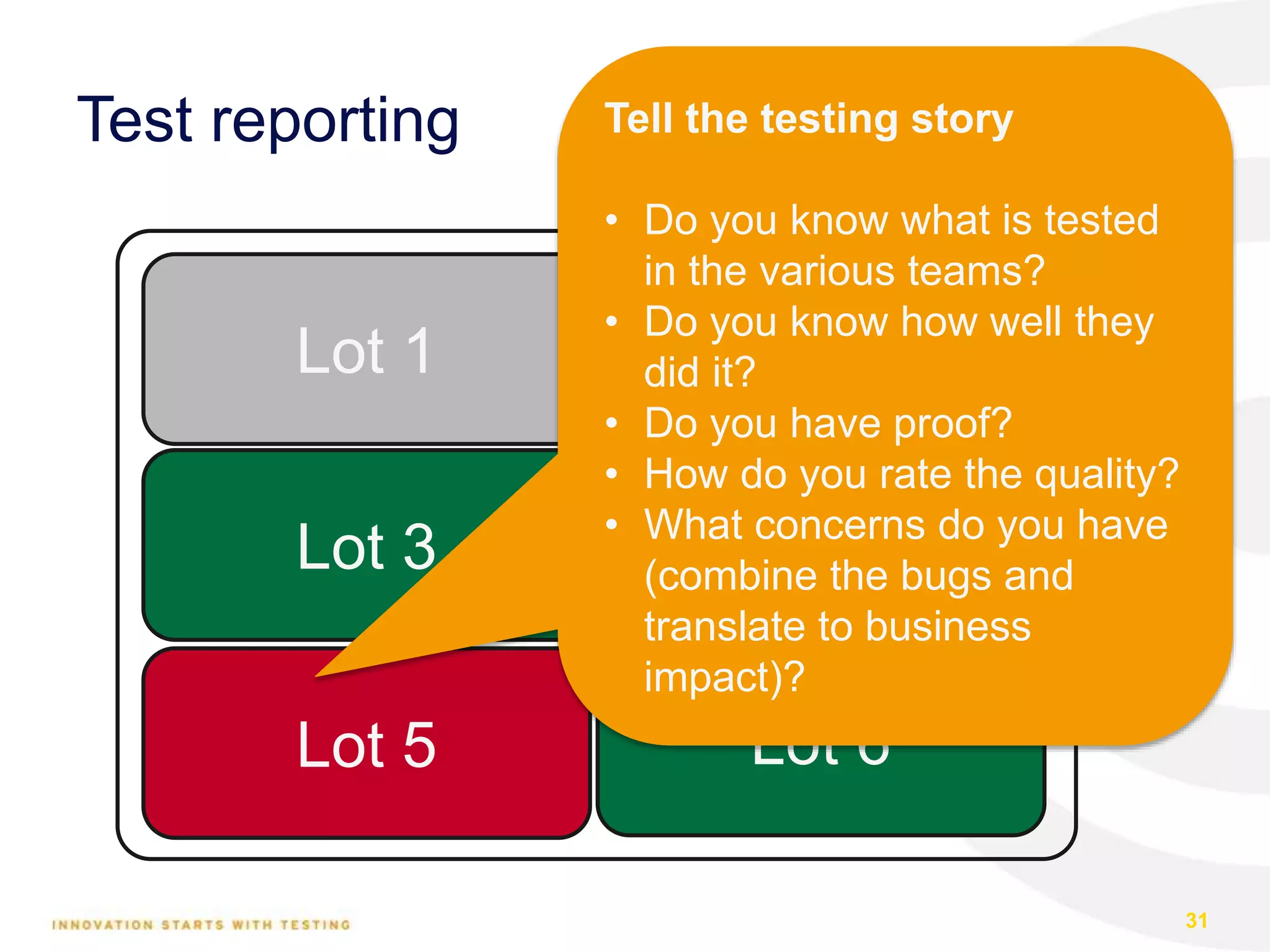

The webinar discusses agile testing strategies for large enterprises and highlights challenges such as blame culture, team collaboration, and integration complexity. It emphasizes that effective testing is needed to maintain software quality while managing multiple concurrent projects. Recommendations include establishing clear acceptance criteria, making the whole team responsible for testing, and adopting continuous integration and deployment practices.

![Business[Agility] (slide 4)](https://image.slidesharecdn.com/eurostarwebinar-agileenterpriseteststrategiesv02-170215123602/75/Creating-Agile-Test-Strategies-for-Larger-Enterprises-4-2048.jpg)

![Testing and business agility: Effective testing ensures that the organization can extend and change software products whilst retaining confidence in the quality and correct operation of what is delivered [ING orange book on testing and Quality] (slide 6)](https://image.slidesharecdn.com/eurostarwebinar-agileenterpriseteststrategiesv02-170215123602/75/Creating-Agile-Test-Strategies-for-Larger-Enterprises-6-2048.jpg)

![[http://www.slideshare.net/janetgregoryca] (slide 7)](https://image.slidesharecdn.com/eurostarwebinar-agileenterpriseteststrategiesv02-170215123602/75/Creating-Agile-Test-Strategies-for-Larger-Enterprises-7-2048.jpg)