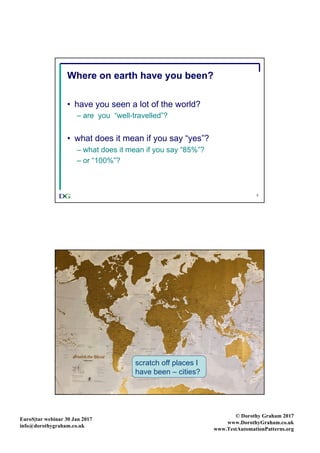

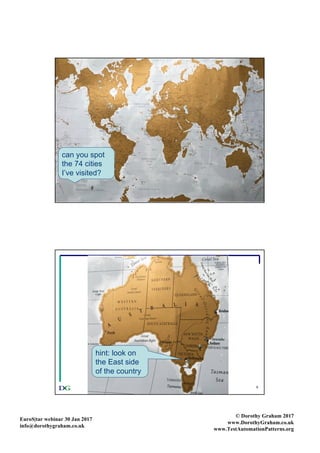

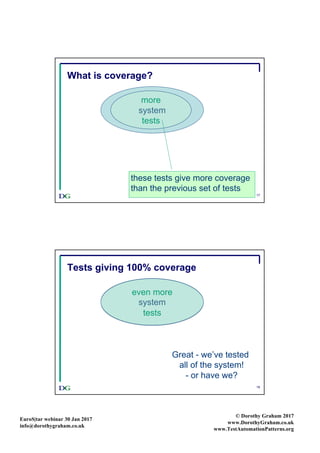

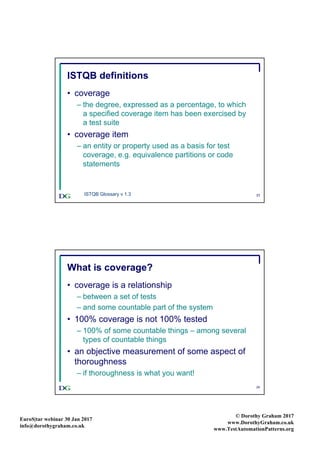

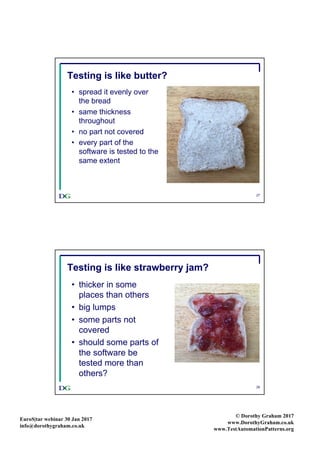

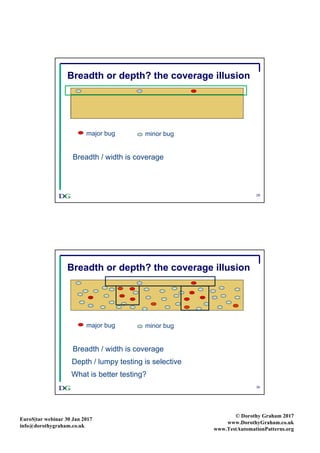

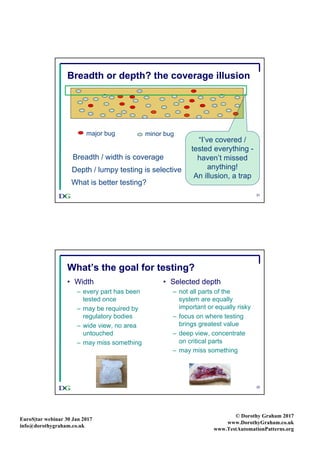

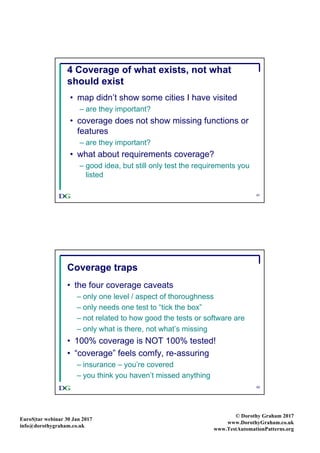

This document summarizes a presentation on test coverage given by Dorothy Graham. It uses an analogy with travelling to different destinations to explain what test coverage means and where its caveats lie. Coverage is the relationship between a set of tests and the parts of a system they exercise, but achieving 100% coverage does not mean everything has been tested. Four caveats are discussed: coverage measures only one aspect (dimension) of testing; a single test can be enough to achieve coverage; coverage says nothing about the quality of the tests; and coverage applies only to the system as it exists, not to pieces that are missing. The key recommendation is to ask "coverage of what?" whenever the term is used, rather than assuming that more coverage is always better.
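To make "coverage of what?" concrete, here is a minimal Python sketch, assuming a hypothetical `discount` function with one `if` and no `else`. The item labels (`S1` to `S4`, `D1`) and the hand-recorded hit sets are illustrative assumptions; a real coverage tool would instrument the code and record them automatically. It shows two of the caveats at once: a single test reaches 100% statement coverage, yet only 50% coverage when the countable items are branch outcomes.

```python
# Minimal sketch: the same single test gives different coverage figures
# depending on which countable items we choose to count.
# The function, item labels, and hit sets are hypothetical; the hit sets are
# recorded by hand here, where a real coverage tool would instrument the code.


def discount(price: float, member: bool) -> float:
    rate = 0.0                 # statement S1
    if member:                 # statement S2, decision D1
        rate = 0.1             # statement S3
    return price * (1 - rate)  # statement S4


def coverage(exercised: set[str], items: set[str]) -> float:
    """Percentage of the chosen countable items exercised by at least one test."""
    if not items:
        raise ValueError("coverage of what? the item set is empty")
    return 100.0 * len(exercised & items) / len(items)


# Two different "dimensions" of countable items for the same code.
STATEMENTS = {"S1", "S2", "S3", "S4"}
BRANCH_OUTCOMES = {"D1=True", "D1=False"}

# A single test, discount(100.0, True): every statement runs,
# but decision D1 only ever takes its True outcome.
discount(100.0, True)
hit_statements = {"S1", "S2", "S3", "S4"}
hit_outcomes = {"D1=True"}

print(f"statement coverage: {coverage(hit_statements, STATEMENTS):.0f}%")  # 100%
print(f"branch coverage:    {coverage(hit_outcomes, BRANCH_OUTCOMES):.0f}%")  # 50%
```

Same test, same code: the coverage figure depends entirely on which countable items were chosen, which is why the number means little until someone says what it is coverage of.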

![EuroS|tar webinar 30 Jan 2017

info@dorothygraham.co.uk

© Dorothy Graham 2017

www.DorothyGraham.co.uk

www.TestAutomationPatterns.org

33

Contents

Analogy with travelling

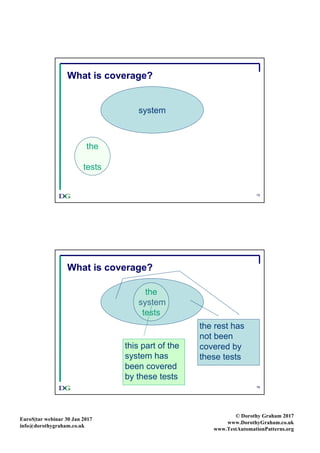

What is coverage?

Should testing be thorough?

What coverage is not (often mistaken for)

The four caveats of coverage

The question you should ask

Twitter: @DorothyGraham

34

Coverage is NOT

the system

the

tests

the

tests

this is test completion!

don’t call it “coverage”!

“we’ve run all of the tests”

[that we have thought of]](https://image.slidesharecdn.com/ukstarwebinarcov170130p-170130171725/85/Are-Your-Tests-Well-Travelled-Thoughts-About-Test-Coverage-17-320.jpg)
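The difference between test completion and coverage can be sketched the same way. In this minimal Python example the test names, the features, and the mapping from tests to features are all hypothetical. Completion compares the tests run with the tests planned, so running every test we thought of gives 100%; coverage compares the features exercised with the features the system has, and the untested `checkout` and `refund` features show up only in that second number.

```python
# Minimal sketch: test completion vs. coverage.
# All test names, features, and the test-to-feature mapping are hypothetical.

# Each planned test, mapped to the system features it exercises.
PLANNED_TESTS: dict[str, set[str]] = {
    "login_ok": {"login"},
    "login_bad_password": {"login"},
    "search_basic": {"search"},
}

# The countable parts of the system we chose to measure against (features).
SYSTEM_FEATURES = {"login", "search", "checkout", "refund"}


def completion_pct(run: set[str], planned: dict[str, set[str]]) -> float:
    """Tests run as a percentage of tests planned (the tests measured against the tests)."""
    return 100.0 * len(run & set(planned)) / len(planned)


def coverage_pct(run: set[str], planned: dict[str, set[str]], features: set[str]) -> float:
    """Features exercised as a percentage of all features (the tests measured against the system)."""
    exercised: set[str] = set()
    for name in run & set(planned):
        exercised |= planned[name]
    return 100.0 * len(exercised & features) / len(features)


run = set(PLANNED_TESTS)  # we ran every test we thought of
print(f"test completion:  {completion_pct(run, PLANNED_TESTS):.0f}%")  # 100%
print(f"feature coverage: {coverage_pct(run, PLANNED_TESTS, SYSTEM_FEATURES):.0f}%")  # 50%
```

No amount of re-running the planned tests moves the coverage figure; only tests aimed at the missing features can.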

![EuroSTAR webinar slide: Next time you hear / Summary](https://image.slidesharecdn.com/ukstarwebinarcov170130p-170130171725/85/Are-Your-Tests-Well-Travelled-Thoughts-About-Test-Coverage-23-320.jpg)

Next time you hear:

- “we need coverage”, ask: “of what?”
  - exactly what countable things need to be covered by tests?
  - why is it important to test them [all]?
  - how “deeply” should we cover things?
- would testing be more effective if lumpy, not smooth?
- what can we safely not test (this time)?
  - we always miss something
- better to miss it on purpose than fool yourself into thinking you haven’t missed anything

Summary

- coverage is a relationship
  - between tests and what-is-tested
- coverage is not:
  - test completion (my tests tested what they tested)
  - 100% tested (it is 100% only in one dimension)
- beware the coverage traps
- when you hear “coverage”
  - ask “of what?”