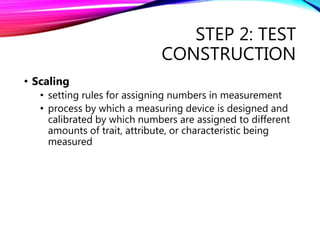

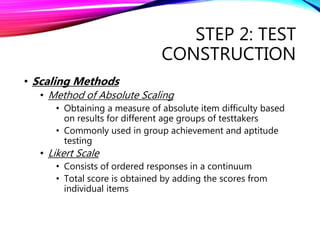

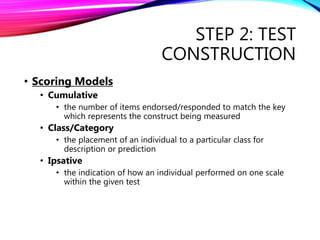

The document outlines five main steps in test development: 1) test conceptualization, which includes defining what will be measured and running pilot studies; 2) test construction, which covers scaling methods, item writing, and scoring approaches; 3) test tryout; 4) item analysis, which evaluates item difficulty, reliability, validity, and discrimination; and 5) test revision, carried out as needed to maintain quality over time. Key aspects include defining the construct being measured, using various scaling and scoring models, analyzing item performance, and revalidating tests periodically.
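The item analysis step can be made concrete with a small sketch. The code below is only an illustration, not the document's own procedure: it assumes dichotomously scored items (1 = correct, 0 = incorrect), computes item difficulty as the proportion of test takers answering correctly, and computes discrimination with the upper-lower group index, using a 27% cutoff as an assumed convention. The function names and the `scores` data are hypothetical.

```python
from statistics import mean


def item_difficulty(responses, item):
    """Proportion of test takers who answered the item correctly."""
    return mean(row[item] for row in responses)


def item_discrimination(responses, item, fraction=0.27):
    """Upper-lower index: p(correct) in the top group minus the bottom group.

    `fraction` is the assumed share of test takers placed in each
    extreme group, ranked by total test score.
    """
    ranked = sorted(responses, key=sum, reverse=True)
    n = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:n], ranked[-n:]
    return mean(r[item] for r in upper) - mean(r[item] for r in lower)


# Hypothetical tryout data: 6 test takers (rows) by 4 items (columns).
scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
]

for i in range(4):
    p = item_difficulty(scores, i)
    d = item_discrimination(scores, i)
    print(f"item {i}: difficulty={p:.2f}, discrimination={d:.2f}")
```

Under these assumptions, an item with a discrimination index near zero (or negative) does not separate high scorers from low scorers and would be a candidate for rewriting or removal during test revision.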