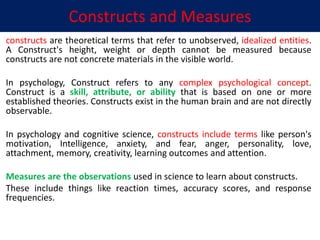

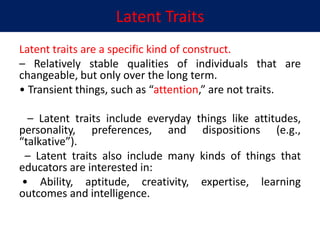

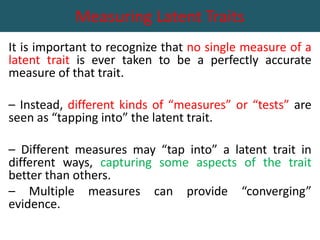

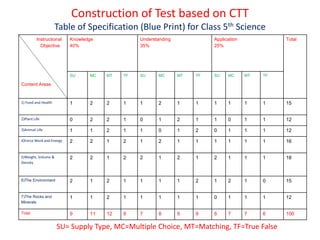

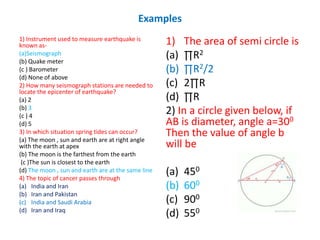

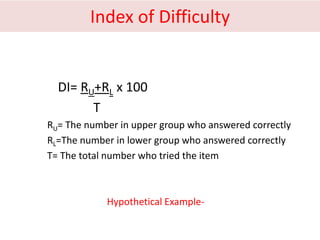

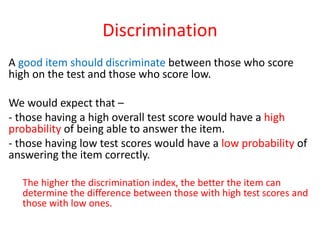

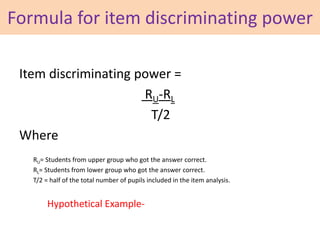

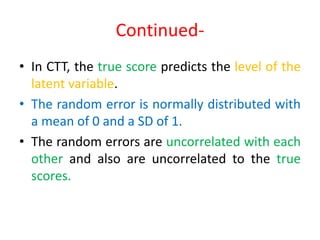

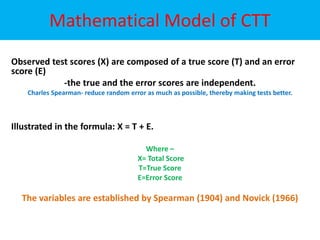

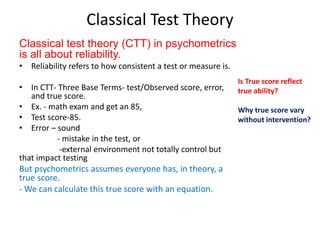

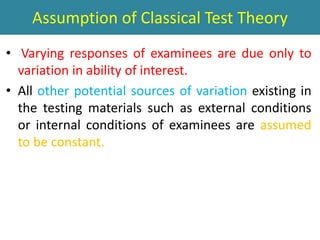

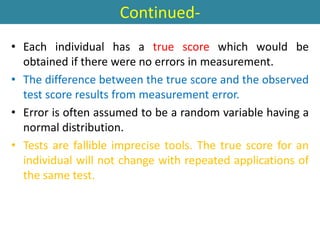

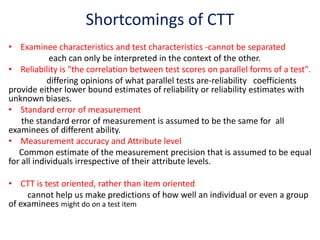

The document discusses the fundamentals of Classical Test Theory (CTT) including constructs and latent traits, emphasizing the importance of measuring these traits through various psychometric tests. It outlines the process of test construction, evaluation, and the challenges related to measurement errors and reliability. Additionally, it compares CTT with Item Response Theory (IRT), highlighting the limitations and the need for more complex analytic approaches in educational assessment.