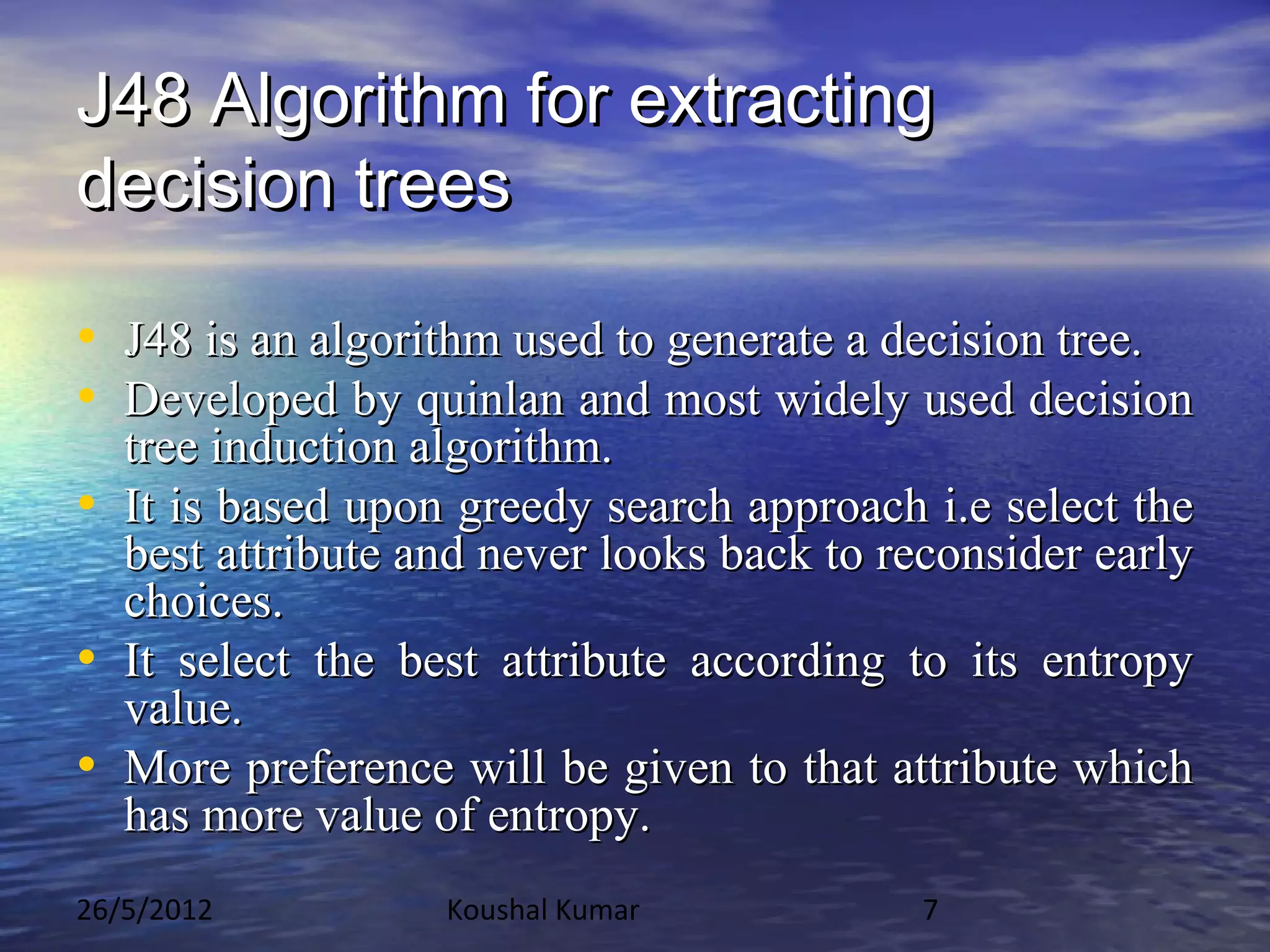

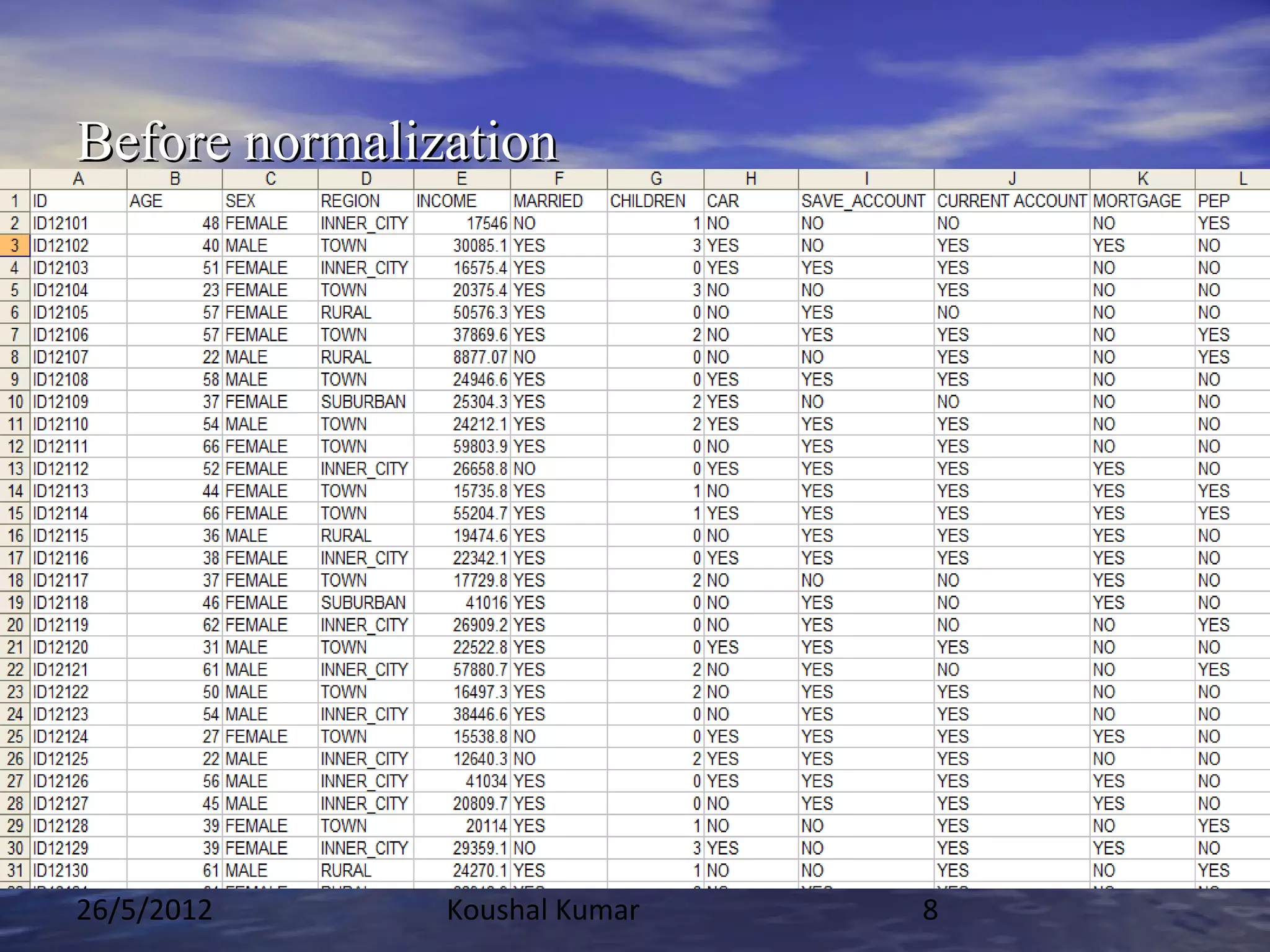

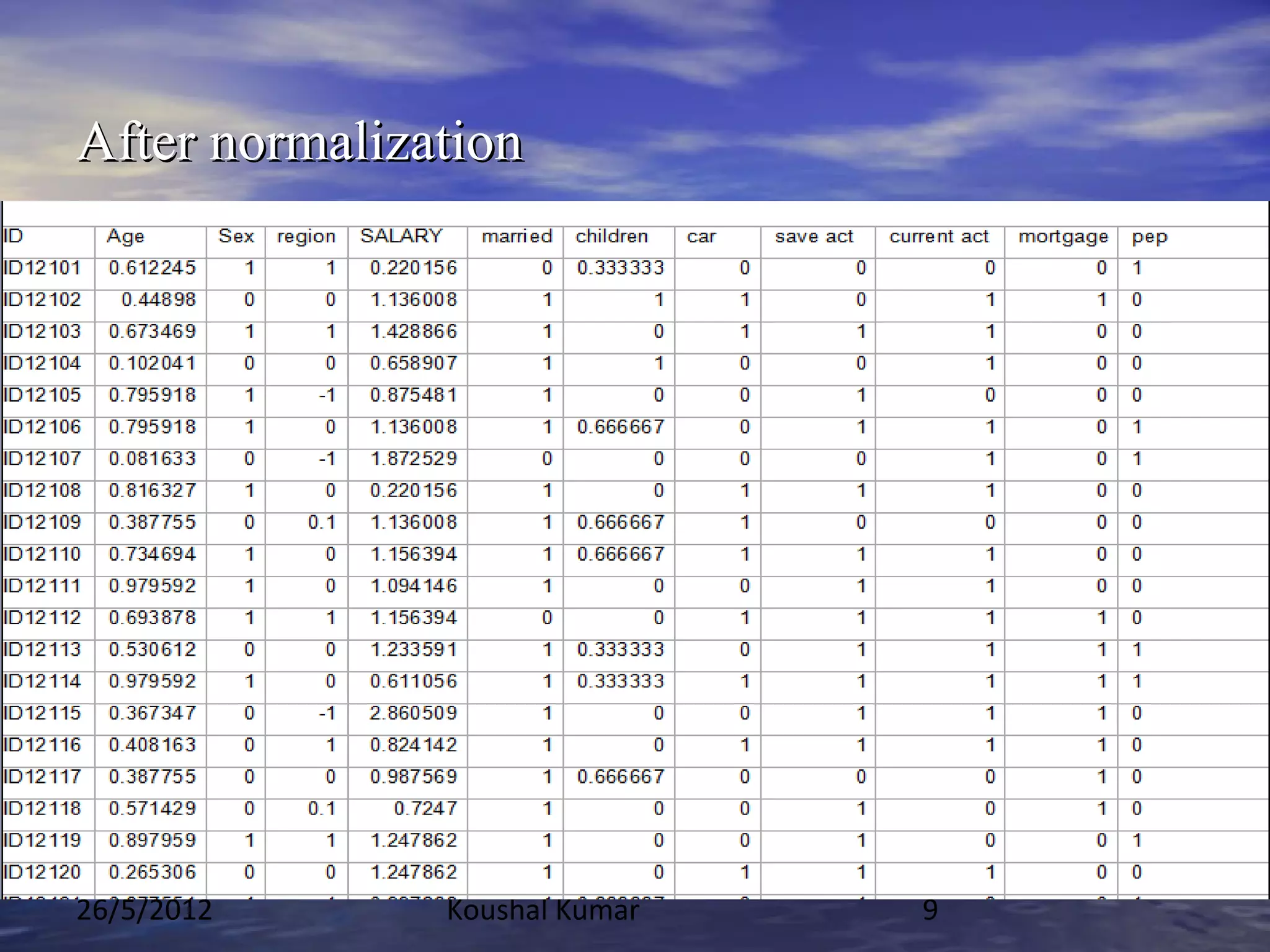

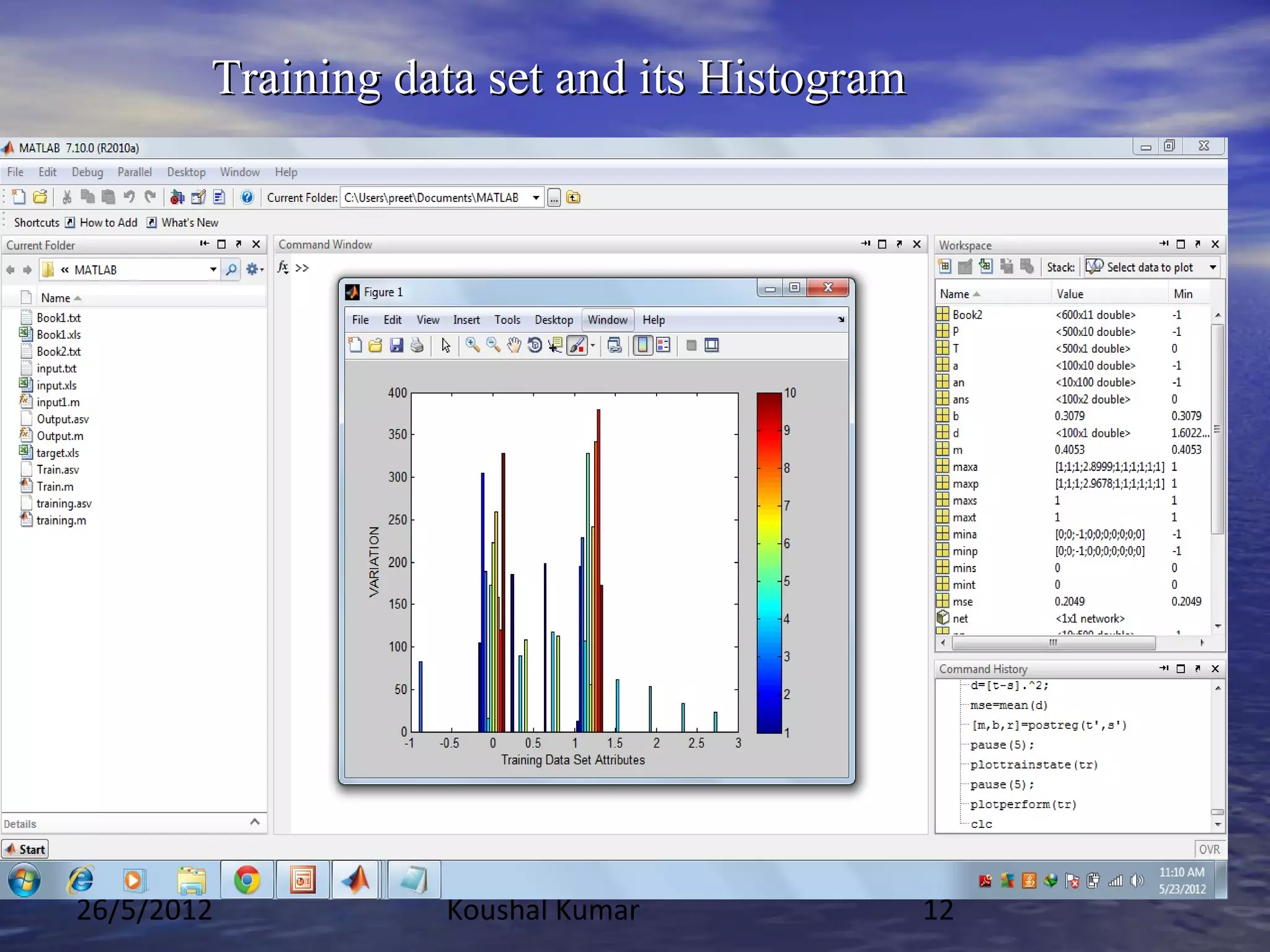

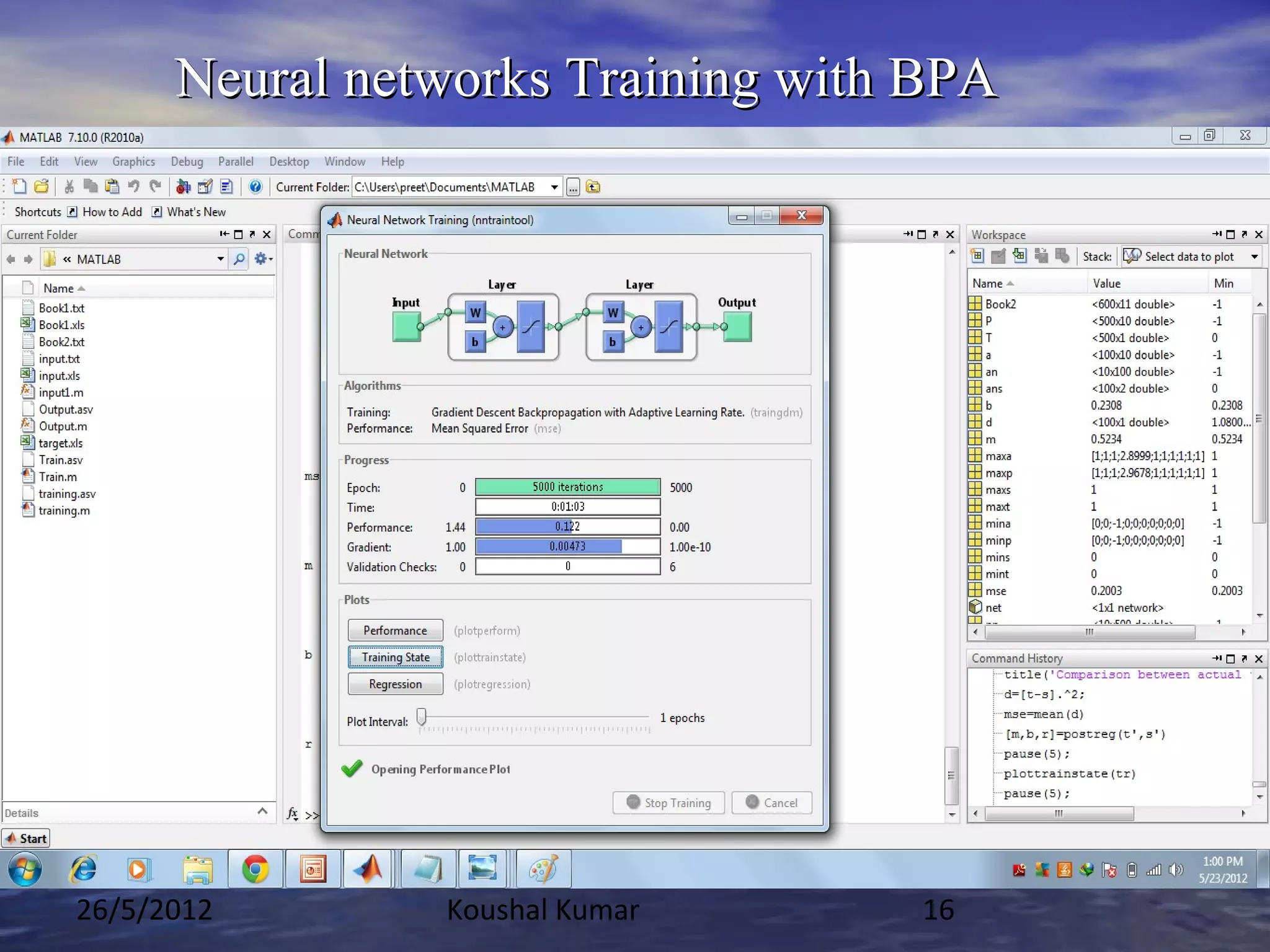

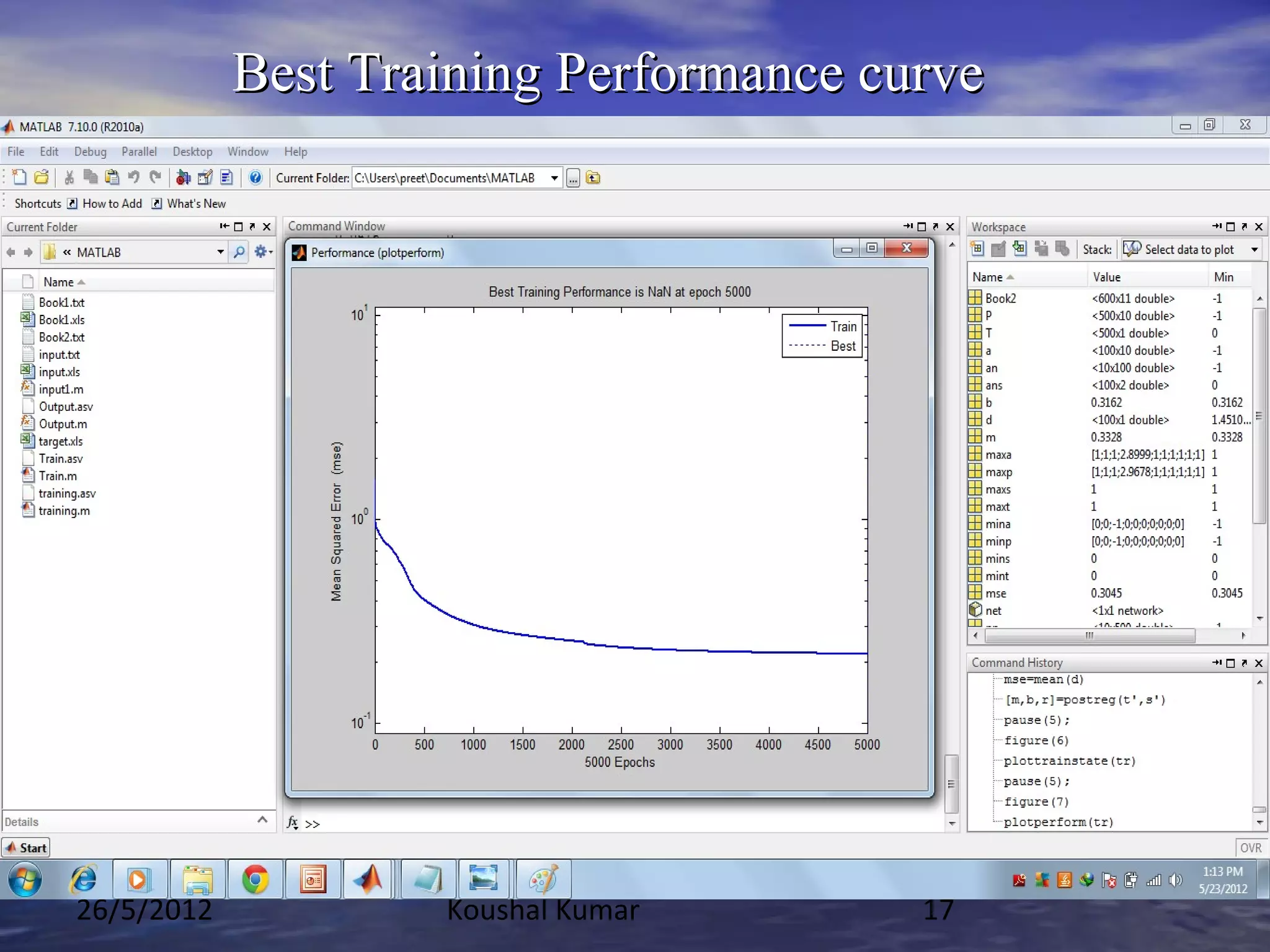

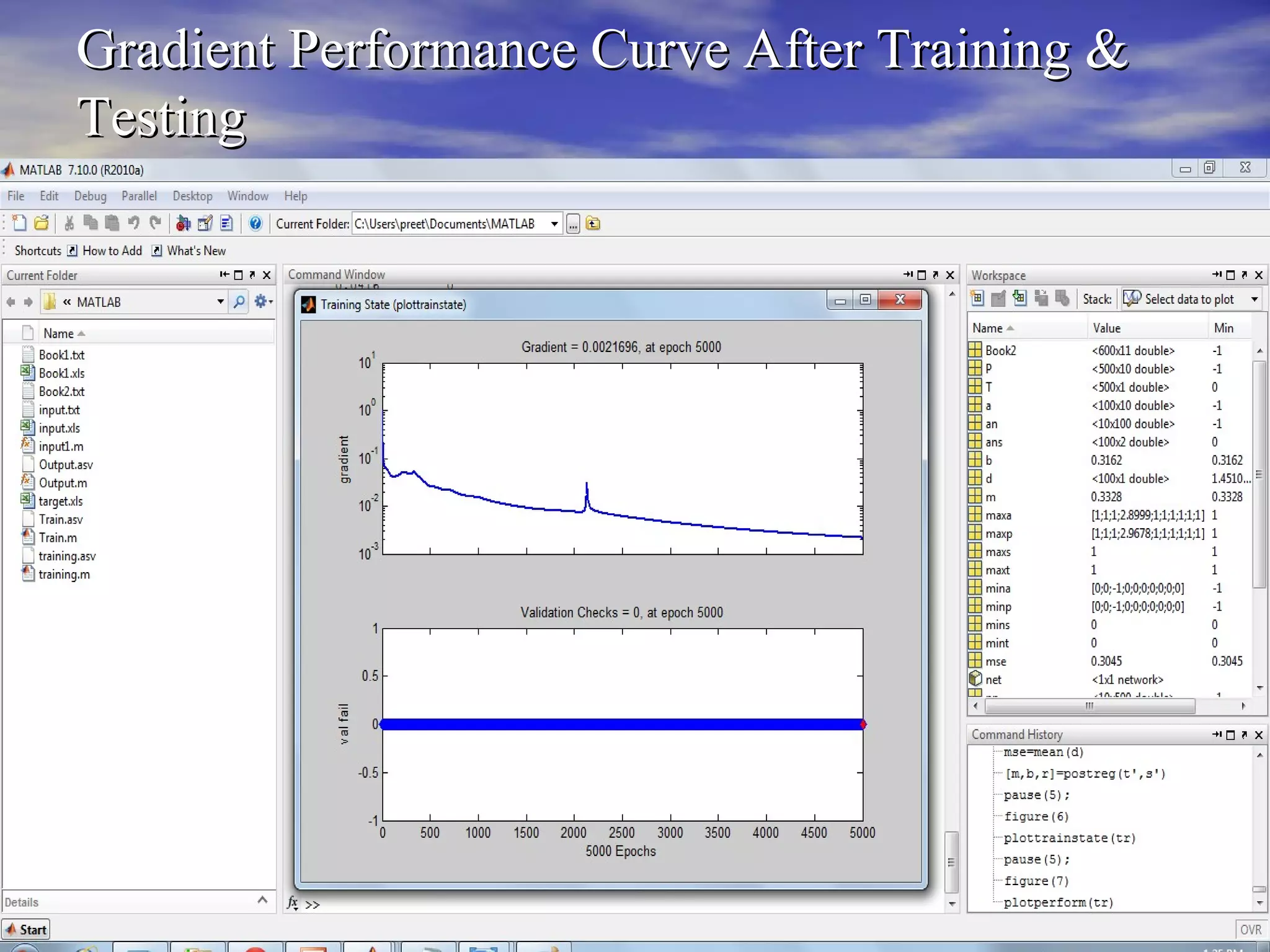

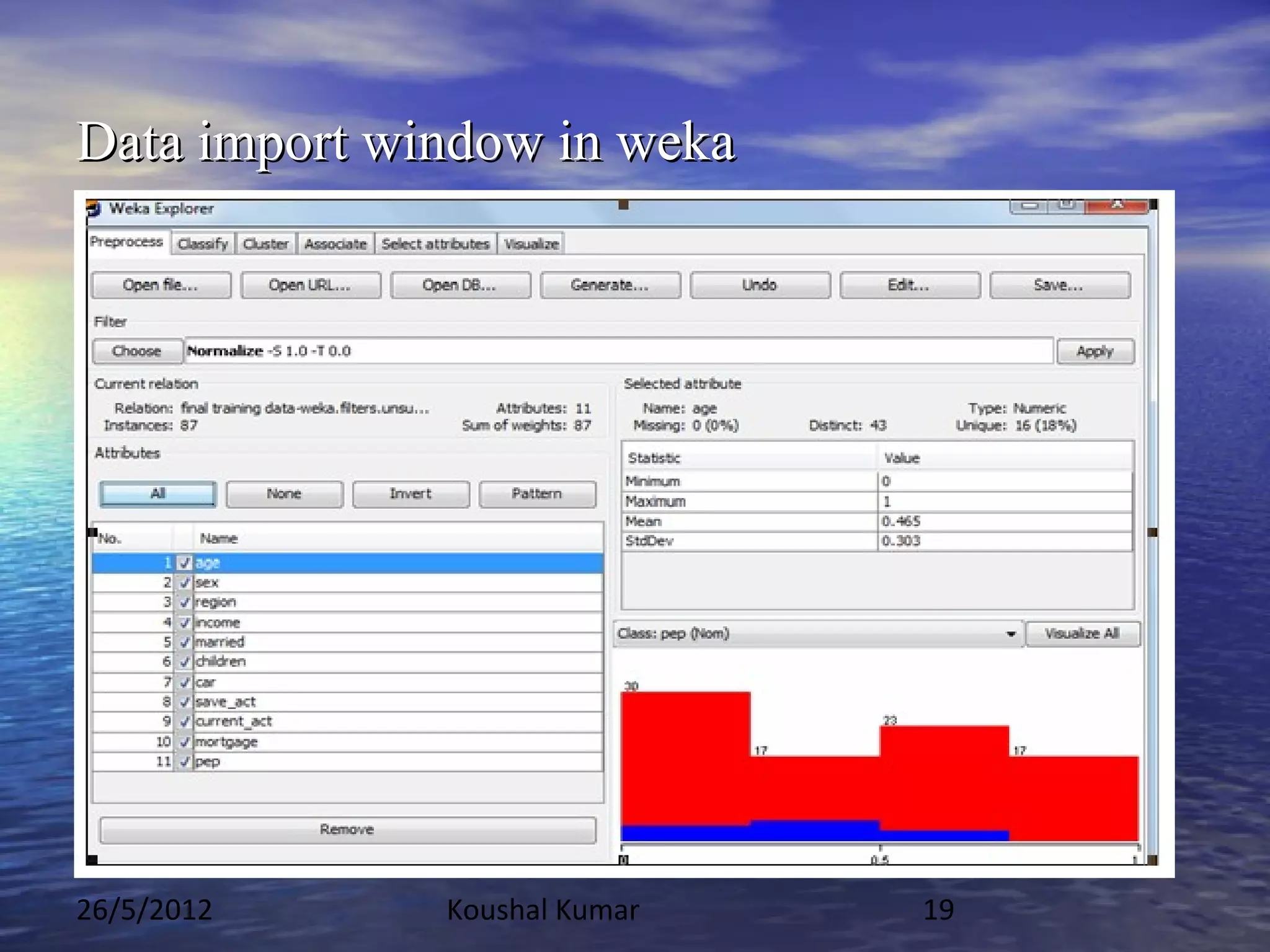

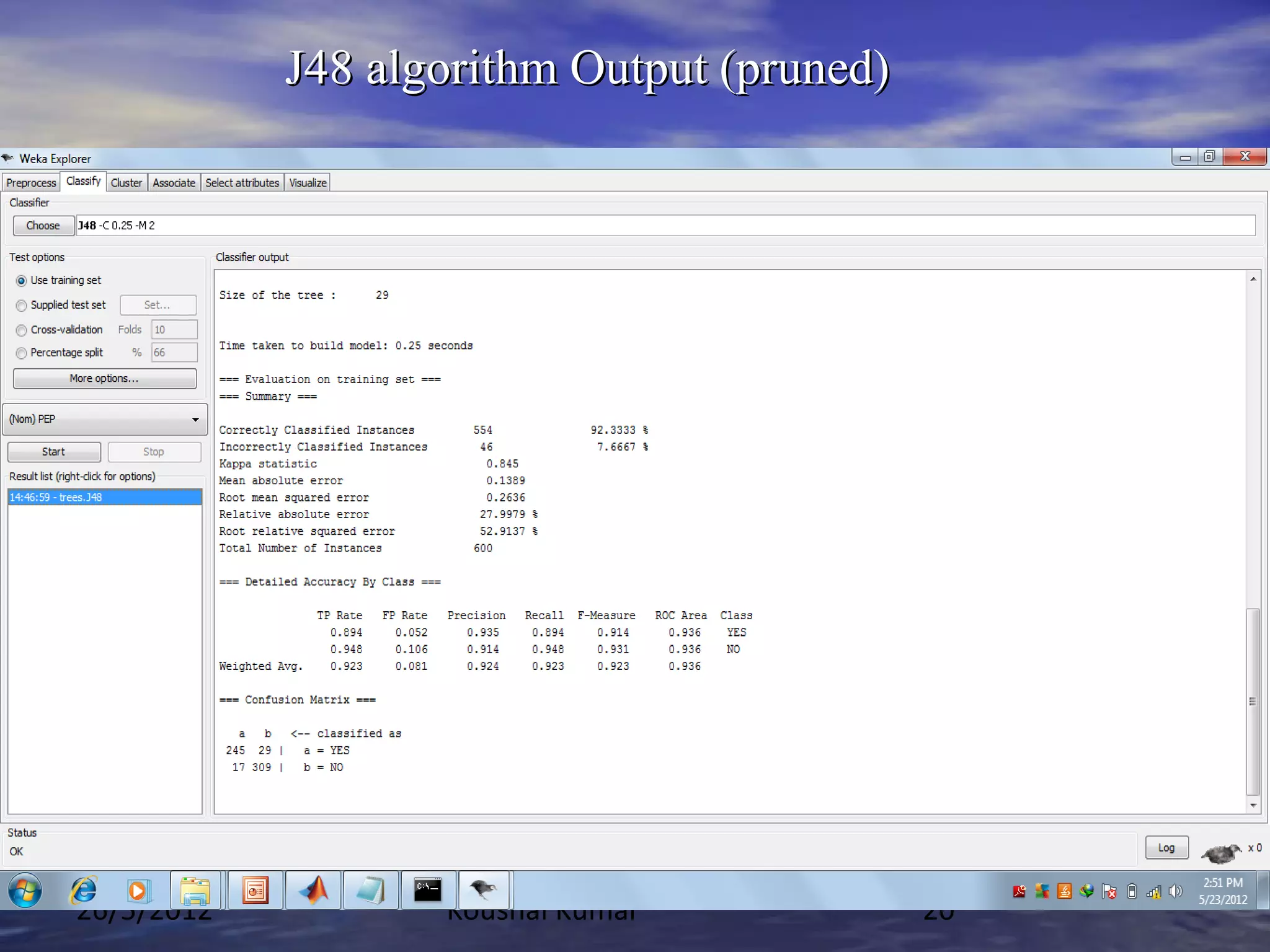

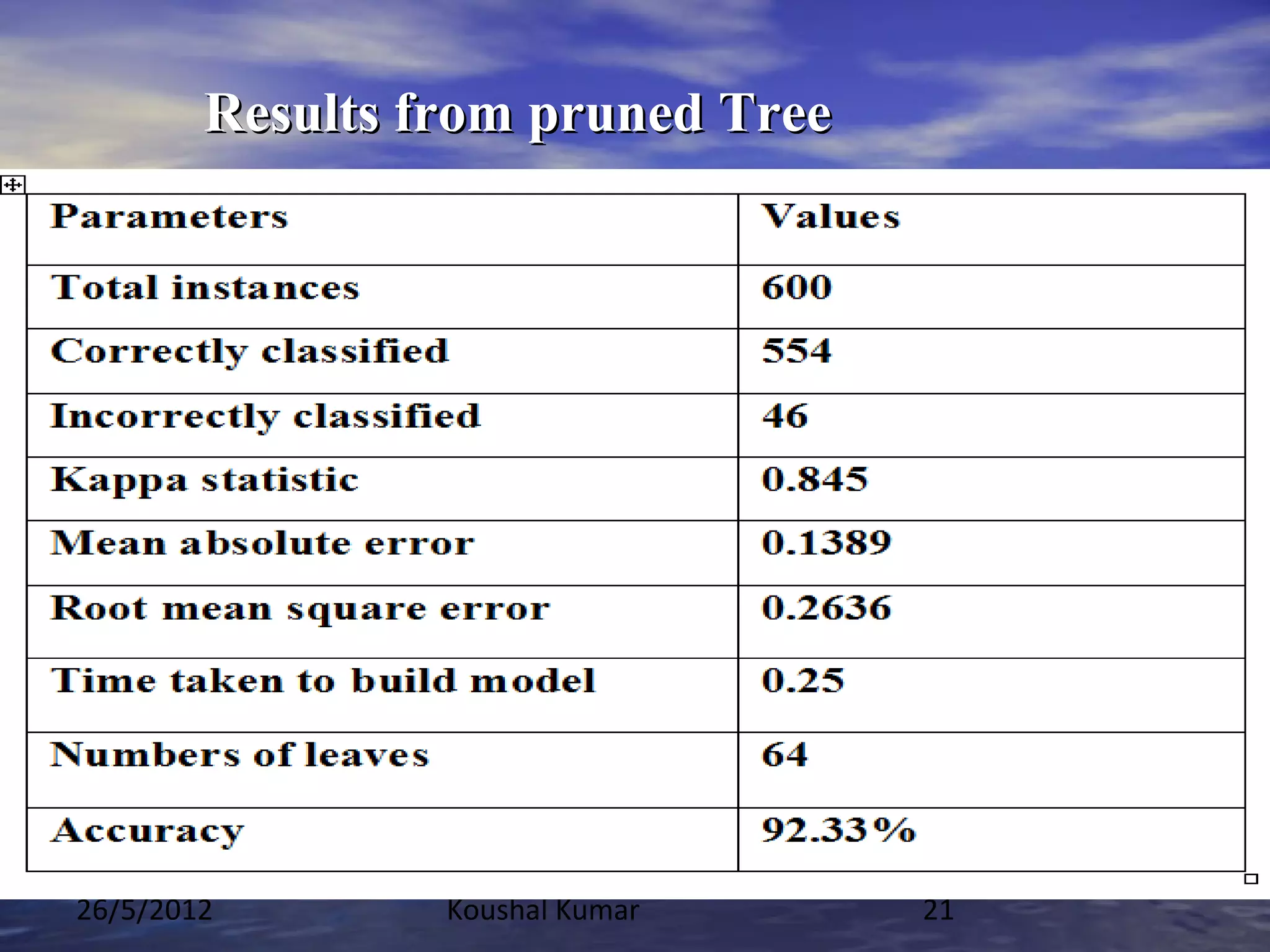

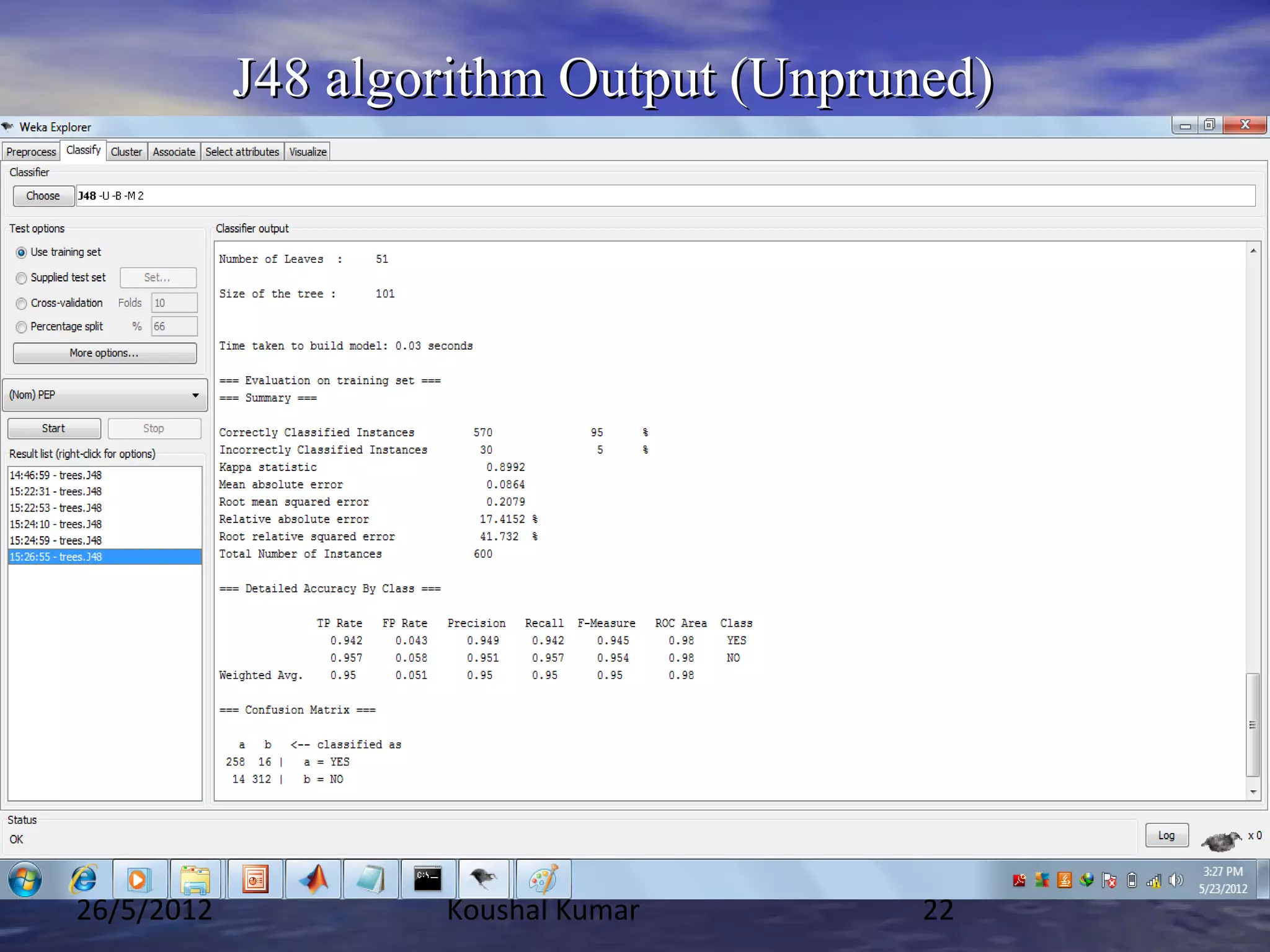

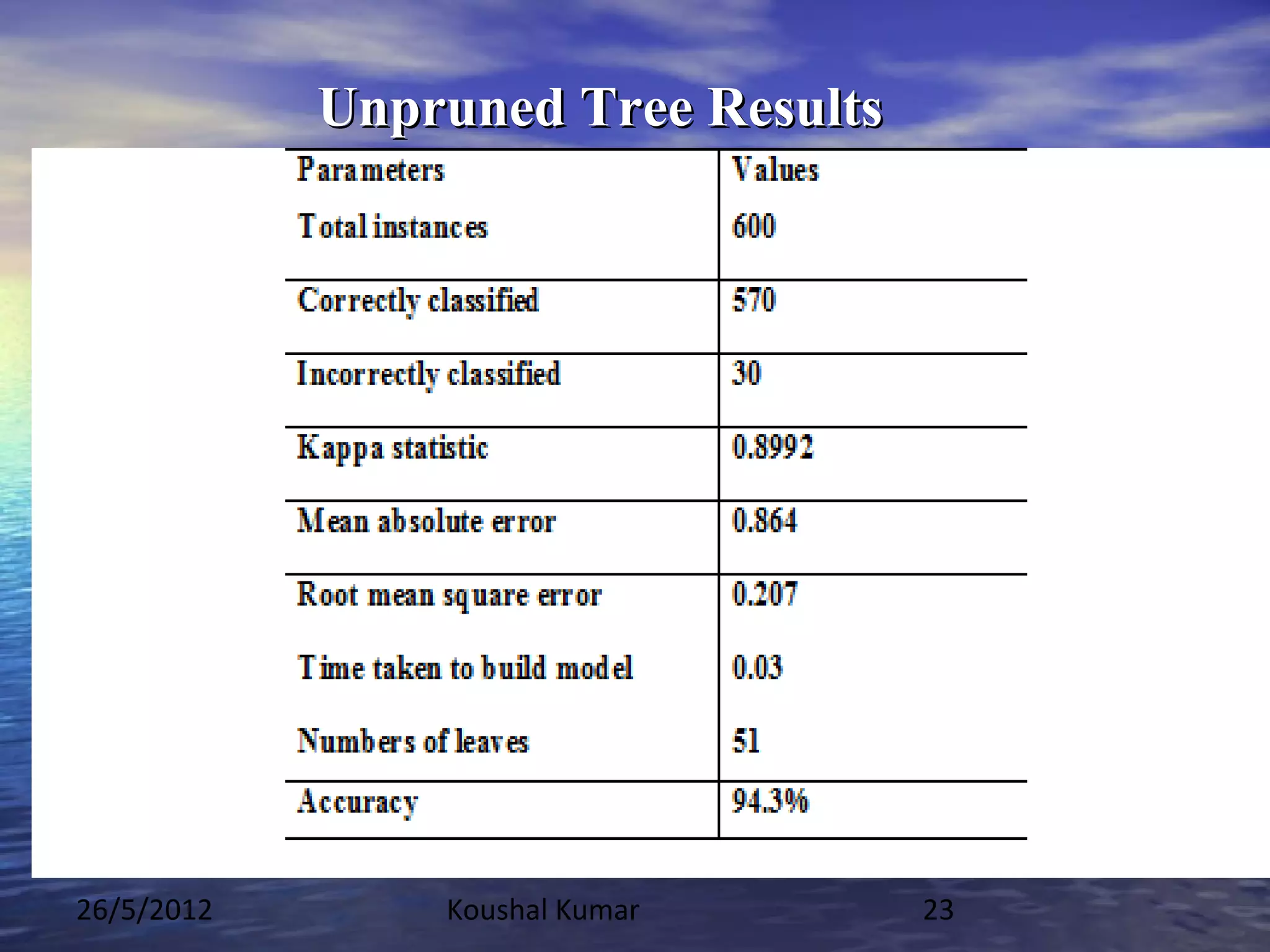

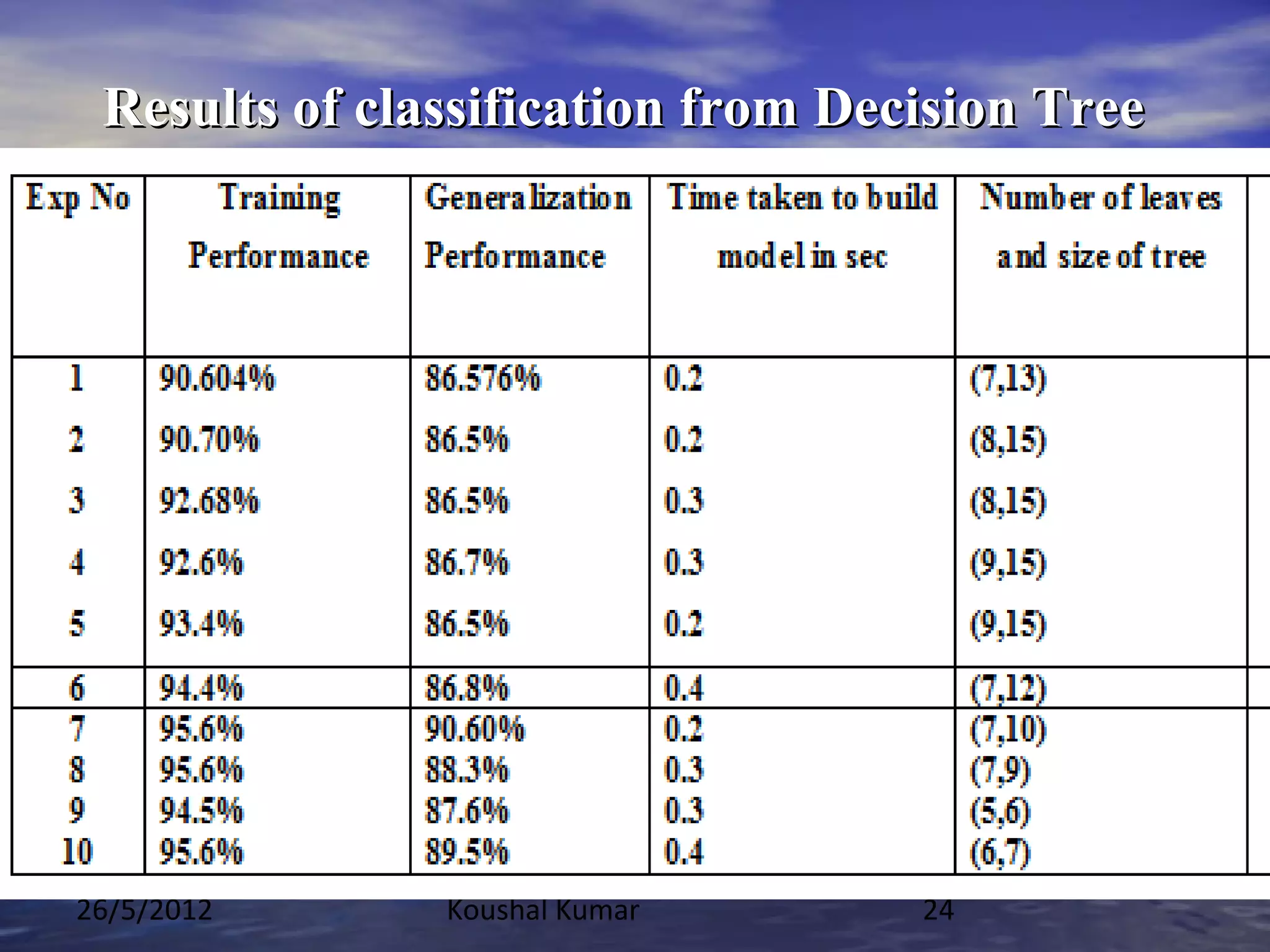

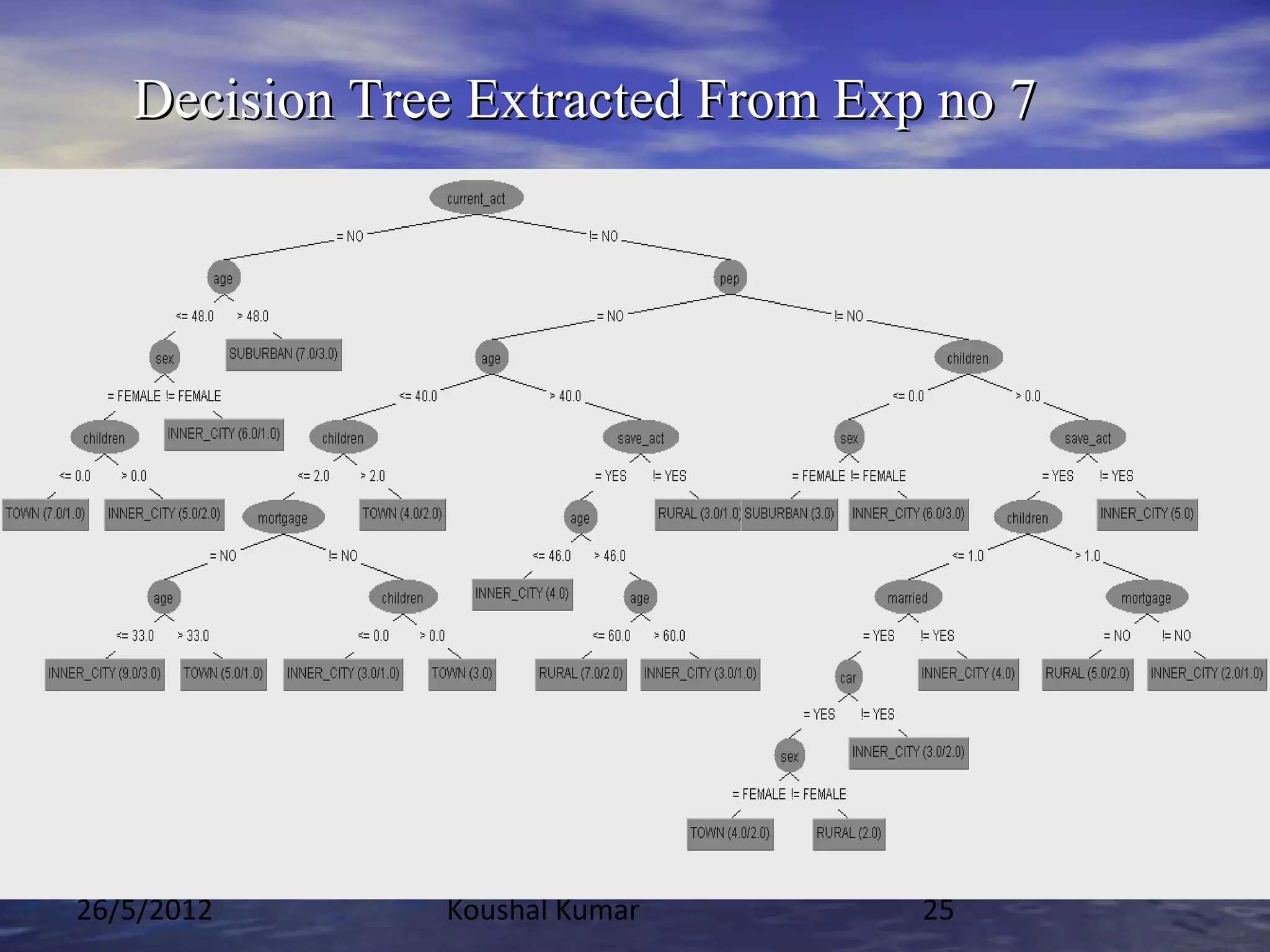

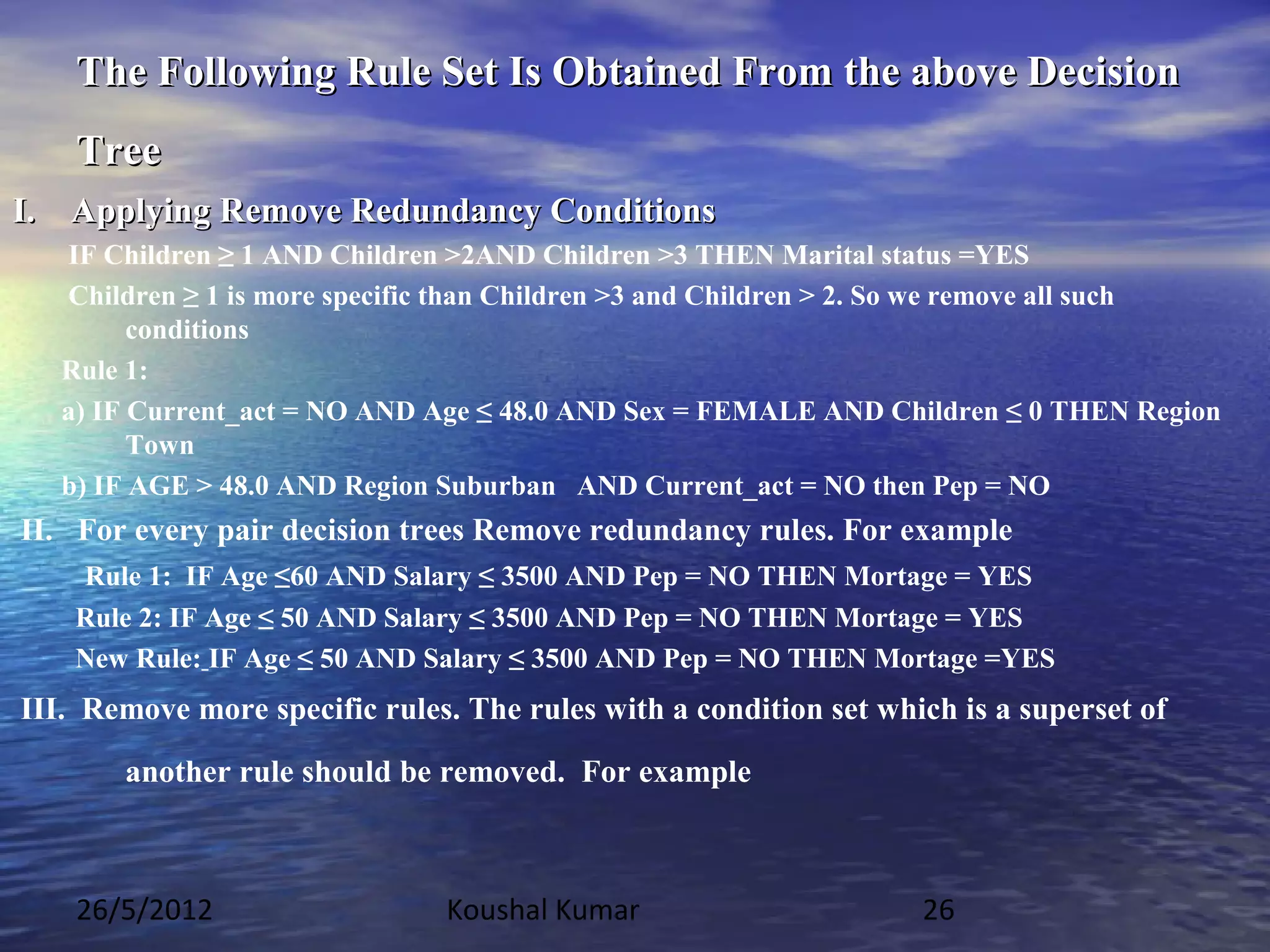

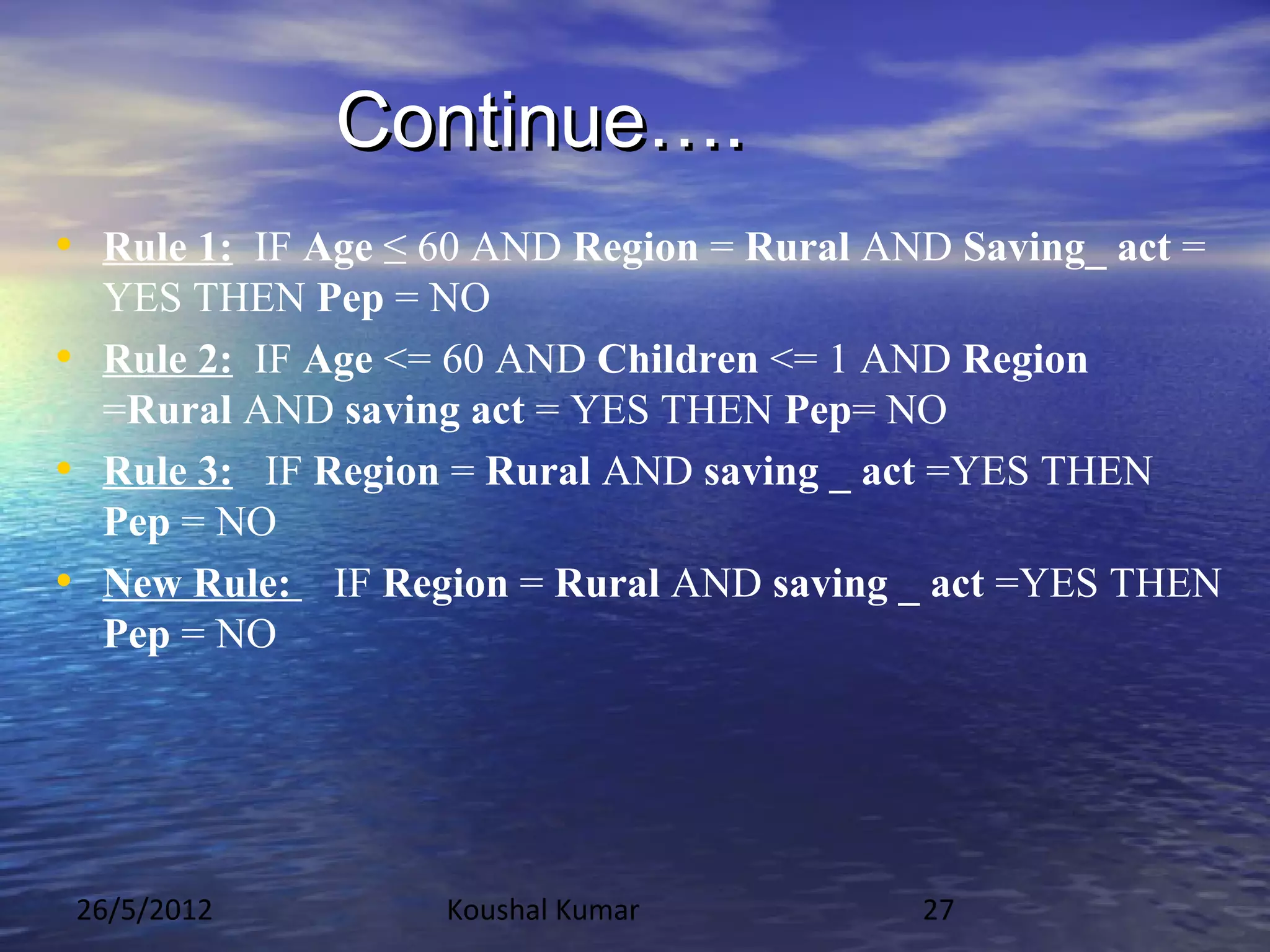

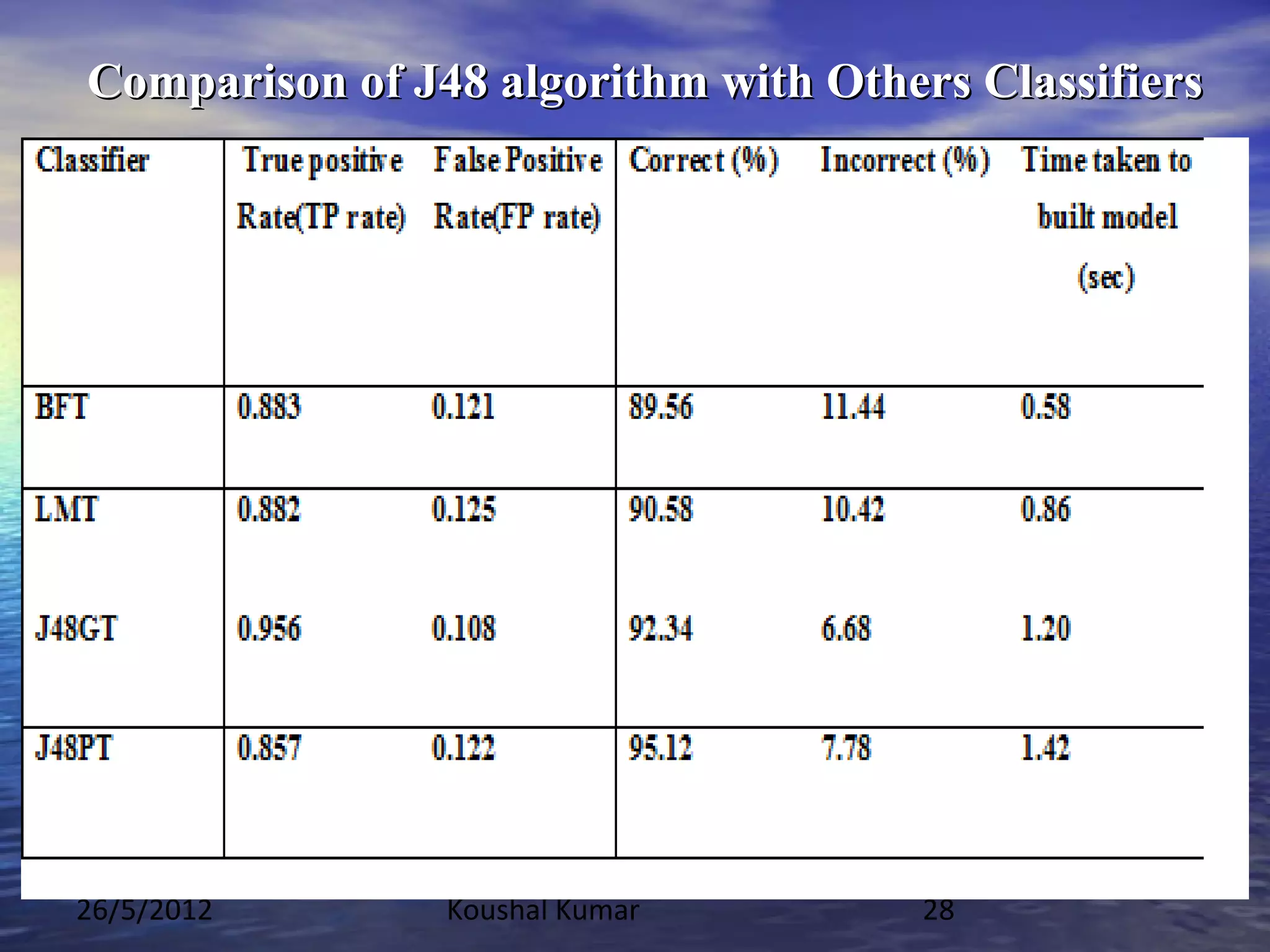

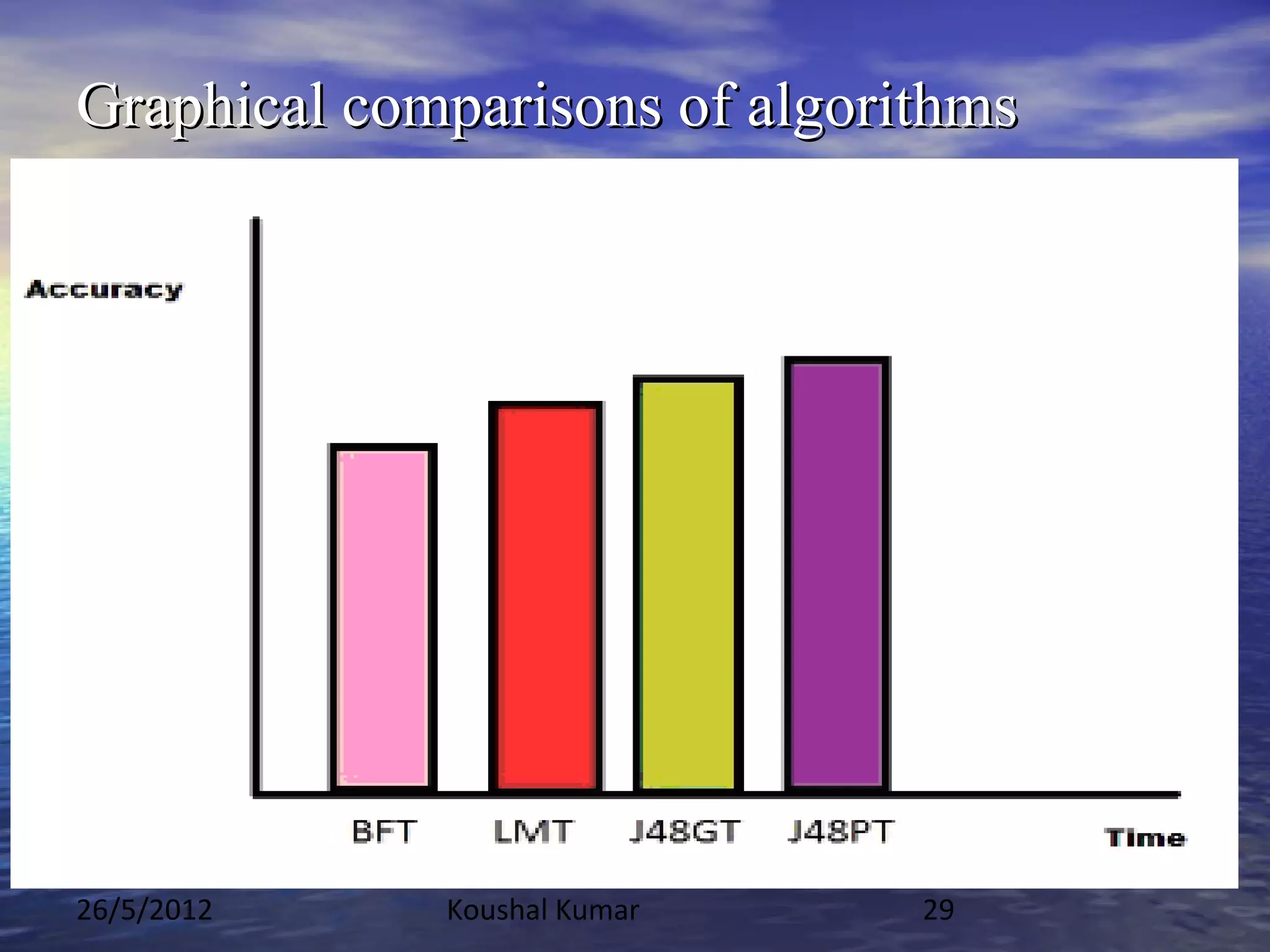

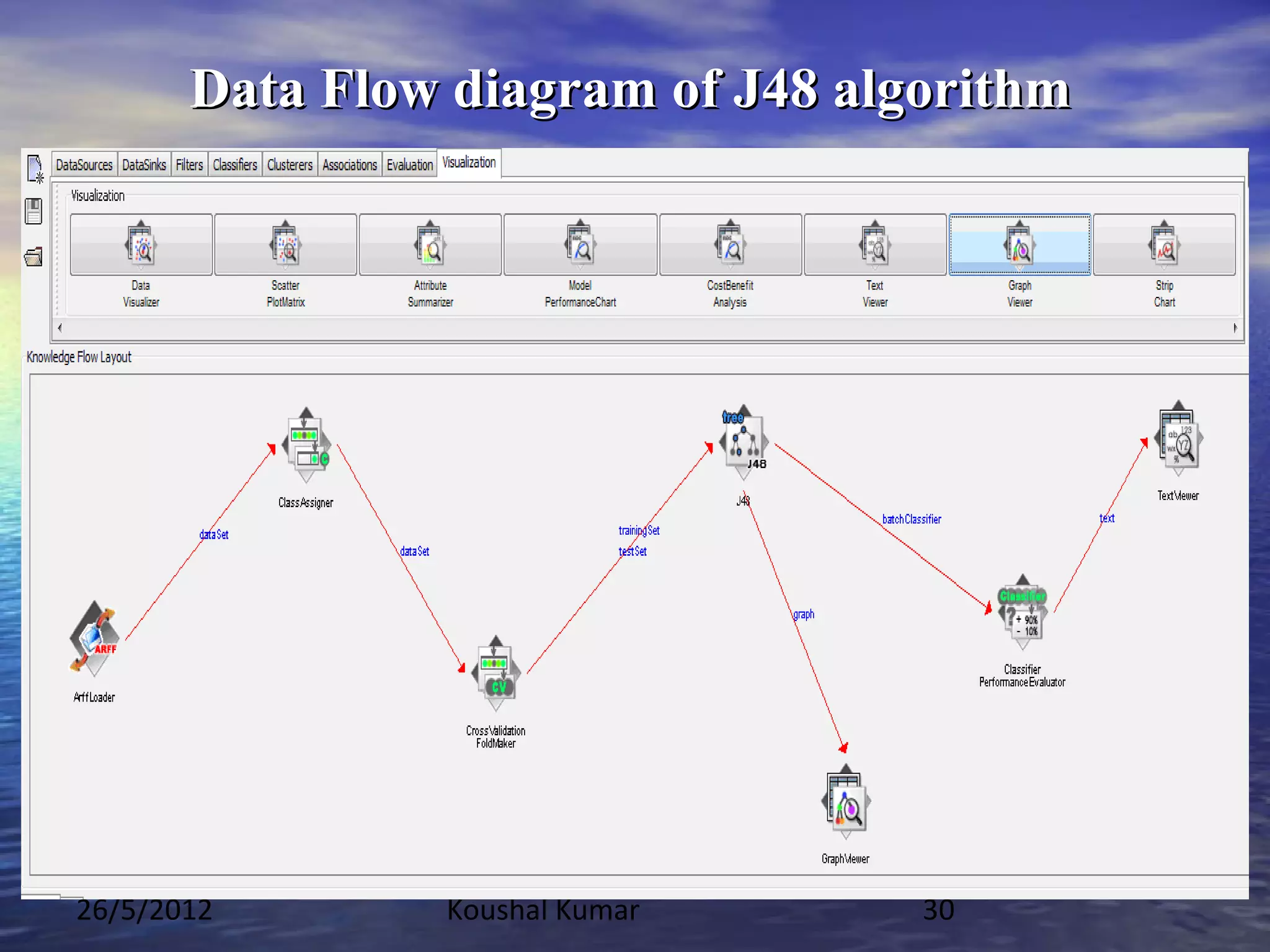

The document discusses extracting rules from trained neural networks to make their decisions interpretable. Neural networks are usually treated as "black boxes": they can classify accurately but cannot explain how they reached a decision. Several rule extraction techniques are presented, including IF-THEN rules and decision trees built with J48 (Weka's implementation of the C4.5 algorithm). A case study applies J48 to extract rules from a neural network trained on a dataset, and the results are compared with those of other classifiers.
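
The idea of extracting rules through a decision tree can be illustrated with a minimal sketch. It is not J48 and not the case study from the document: `black_box` is a hypothetical stand-in for a trained network with hand-set weights, the input grid is invented for illustration, and the tree uses plain information gain without pruning, whereas C4.5 uses gain ratio and prunes. The sketch labels sample inputs with the network, fits a small tree to those labels, and reads each root-to-leaf path as an IF-THEN rule.

```python
import math
from collections import Counter

def black_box(x):
    """Hypothetical stand-in for a trained network (hand-set weights)."""
    h1 = math.tanh(4.0 * x[0] - 2.0)
    h2 = math.tanh(4.0 * x[1] - 2.0)
    out = 1.0 / (1.0 + math.exp(-(3.0 * h1 + 3.0 * h2 - 1.0)))
    return 1 if out >= 0.5 else 0

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def best_split(X, y):
    """Return (gain, feature, threshold) with the highest information gain."""
    base, best = entropy(y), None
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # candidate threshold between distinct values
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(y)
            gain = base - weighted
            if best is None or gain > best[0]:
                best = (gain, f, t)
    return best

def build_tree(X, y, depth=0, max_depth=3):
    """Leaves are class labels; internal nodes are (feature, threshold, left, right)."""
    majority = Counter(y).most_common(1)[0][0]
    if len(set(y)) == 1 or depth == max_depth:
        return majority
    split = best_split(X, y)
    if split is None or split[0] <= 1e-12:
        return majority
    _, f, t = split
    left = [(r, lab) for r, lab in zip(X, y) if r[f] <= t]
    right = [(r, lab) for r, lab in zip(X, y) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [lab for _, lab in left], depth + 1, max_depth),
            build_tree([r for r, _ in right], [lab for _, lab in right], depth + 1, max_depth))

def extract_rules(tree, names, conds=()):
    """Turn every root-to-leaf path into one IF-THEN rule."""
    if not isinstance(tree, tuple):
        body = " AND ".join(conds) or "TRUE"
        return [f"IF {body} THEN class = {tree}"]
    f, t, left, right = tree
    return (extract_rules(left, names, conds + (f"{names[f]} <= {t:.2f}",))
            + extract_rules(right, names, conds + (f"{names[f]} > {t:.2f}",)))

def predict(tree, x):
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if x[f] <= t else right
    return tree

# Query the network on a grid of inputs and fit the tree to its answers,
# not to ground-truth labels: the tree approximates the network itself.
grid = [(i / 10, j / 10) for i in range(11) for j in range(11)]
labels = [black_box(x) for x in grid]
tree = build_tree(grid, labels)
rules = extract_rules(tree, ["x1", "x2"])

# Fidelity: fraction of inputs where the rules agree with the network.
fidelity = sum(predict(tree, x) == y for x, y in zip(grid, labels)) / len(grid)
for rule in rules:
    print(rule)
print(f"fidelity = {fidelity:.2f}")
```

Fidelity, rather than accuracy on true labels, is the natural measure here because the extracted rules are meant to explain the network's behaviour. A deeper tree usually raises fidelity at the cost of more and longer rules.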